TestMu AI Cloud GitHub App Integration

1. Overview

What is the TestMu AI Cloud GitHub App?

The TestMu AI Cloud GitHub App leverages KaneAI, our advanced AI testing agent, to transform how your team approaches quality assurance. Instead of manually writing test cases for every code change, the app intelligently analyzes your pull requests, understands the business logic and technical implementation, and automatically generates relevant end-to-end test scenarios.

How It Works

After a pull request is opened, add a comment to it to trigger the AI workflow. The app then:

- Analyzes the code changes, PR content and repository context (including your README)

- Generates intelligent test cases using KaneAI's AI engine

- Authors executable test scripts with proper assertions and validations

- Executes tests on TestMu AI's cloud infrastructure via HyperExecute

- Reports results with AI-powered Root Cause Analysis (RCA) for failures

All of this happens automatically, with real-time progress updates posted directly to your pull request.

Key Benefits

- Accelerated Test Coverage: Automatically generate comprehensive test cases for every PR, significantly reducing the time from code commit to production-ready testing

- Intelligent Test Design: AI-powered analysis creates contextually relevant test scenarios that understand both code changes and business logic

- Seamless Developer Experience: Native GitHub integration means developers never leave their workflow—all updates, results, and insights appear directly in PR comments

- Enterprise Scalability: Leverages HyperExecute for parallel test execution across multiple browsers, devices, and platforms

- Continuous Quality Intelligence: AI-driven Root Cause Analysis automatically diagnoses test failures, reducing debugging time and accelerating resolution

- Unified Test Management: All generated tests sync with TestMu AI Test Manager, providing centralized visibility and control across your QA operations

2. Prerequisites

Before implementing the TestMu AI Cloud GitHub App in your development workflow, ensure your organization has the following:

Account Requirements

- TestMu AI Enterprise Account: An active TestMu AI account with appropriate licensing. Sign up now if you don't have an account yet.

- KaneAI Access: This integration requires KaneAI to be enabled on your TestMu AI account. New signups receive a 14-day free trial of KaneAI with full feature access.

Repository Requirements

- GitHub Repository Access: Administrative access to the GitHub repositories where you want to install the app

3. Installation

Follow these steps to install and authorize the TestMu AI Cloud GitHub App for your organization.

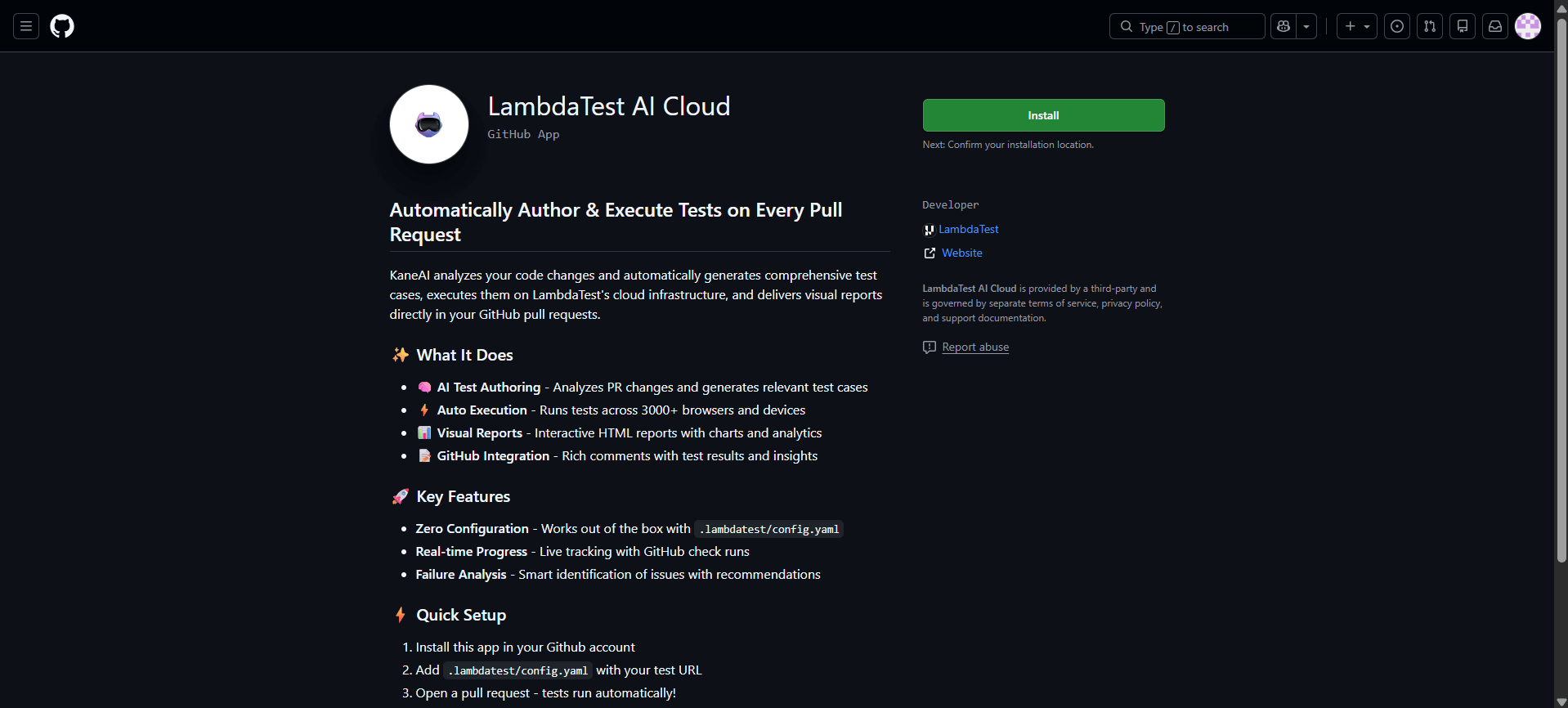

Step 1: Access GitHub Marketplace

Navigate to the TestMu AI Cloud GitHub App on GitHub Marketplace and click Install to begin the installation process.

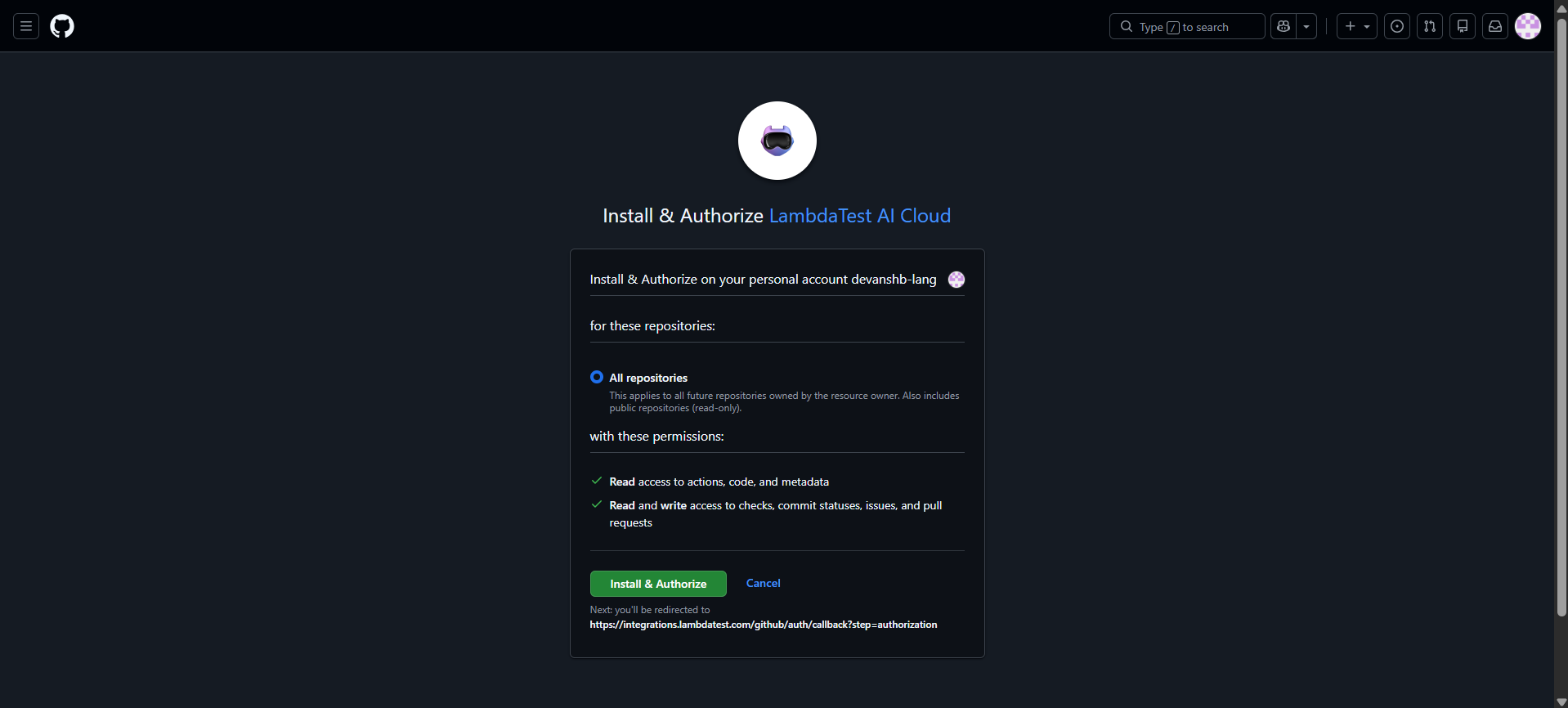

Step 2: Configure Repository Access

During installation, you'll need to specify which repositories should have access to the app:

- Organization-wide Installation: Select All repositories to enable the app across your entire GitHub organization

- Selective Installation: Choose Only select repositories and specify individual repositories for more granular control

After making your selection and clicking the Install and Authorize button, you will be redirected to TestMu AI, where you will be asked to log in.

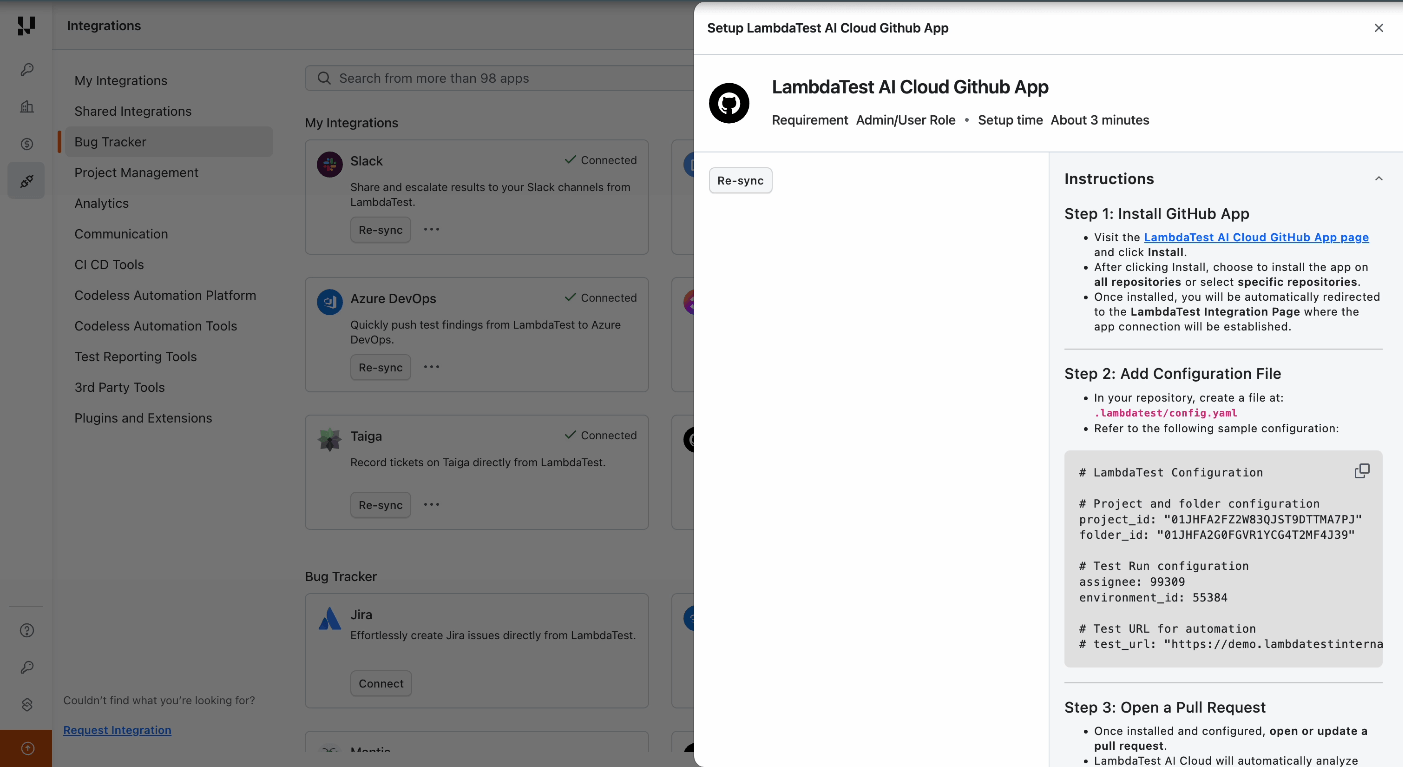

Once done, you will be redirected to the Integrations page.

In the sidebar, you can see that the TestMu AI Cloud GitHub App has been successfully integrated. You can also view and copy configuration values, such as the project ID and folder ID, directly from the sidebar and paste them into your repository's configuration file.

For pilot programs or initial rollouts, we recommend installing on a select subset of repositories first. Once your team is comfortable with the workflow, you can expand access organization-wide.

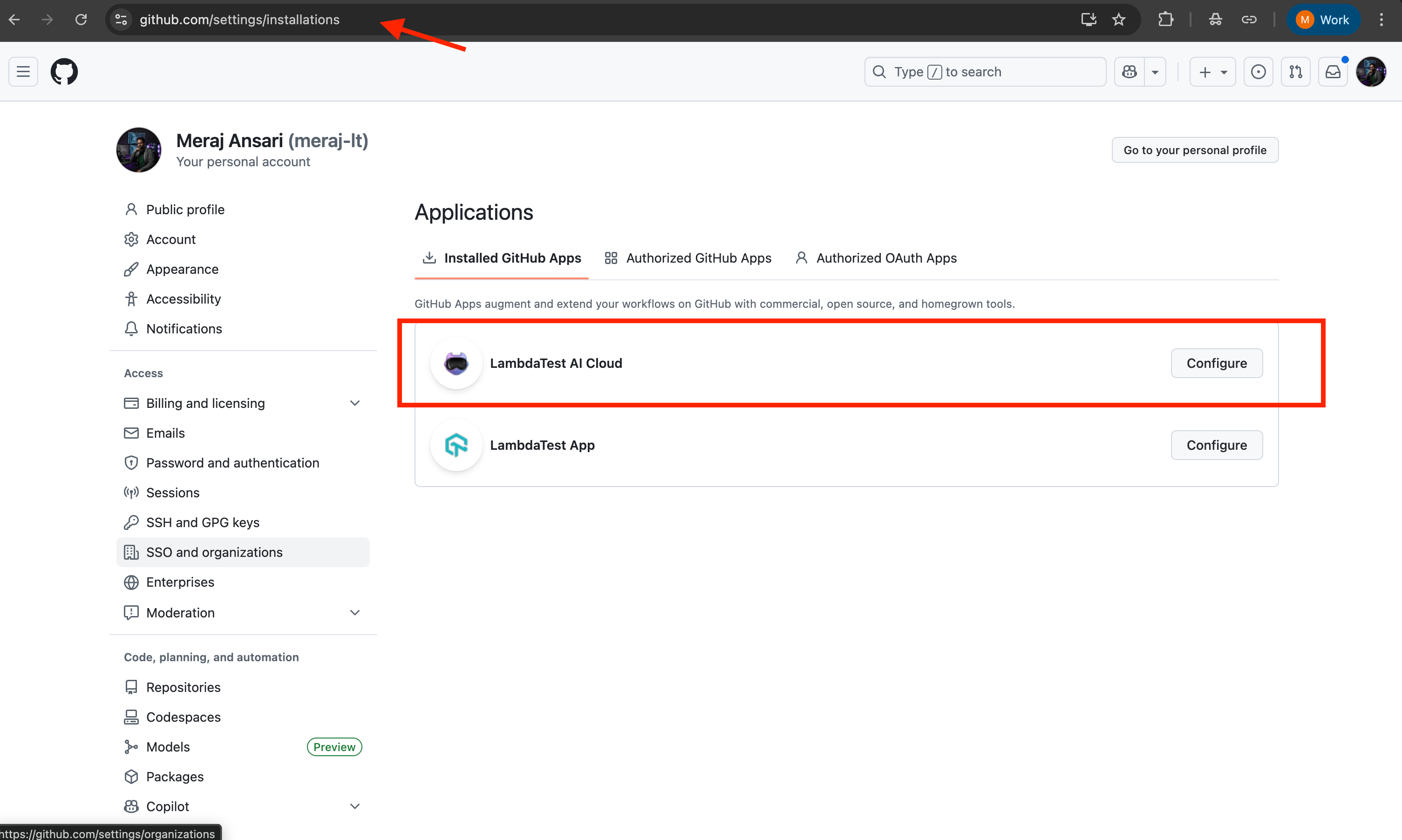

Step 3: Verify Successful Installation

Confirm the installation was successful by:

- Navigating to your GitHub organization's Settings page

- Clicking Applications under Integrations in the left-side menu

- Selecting Installed GitHub Apps from the left sidebar

- Verifying that TestMu AI Cloud appears in the list of installed applications

You can also click on the app to review and modify repository access permissions at any time.

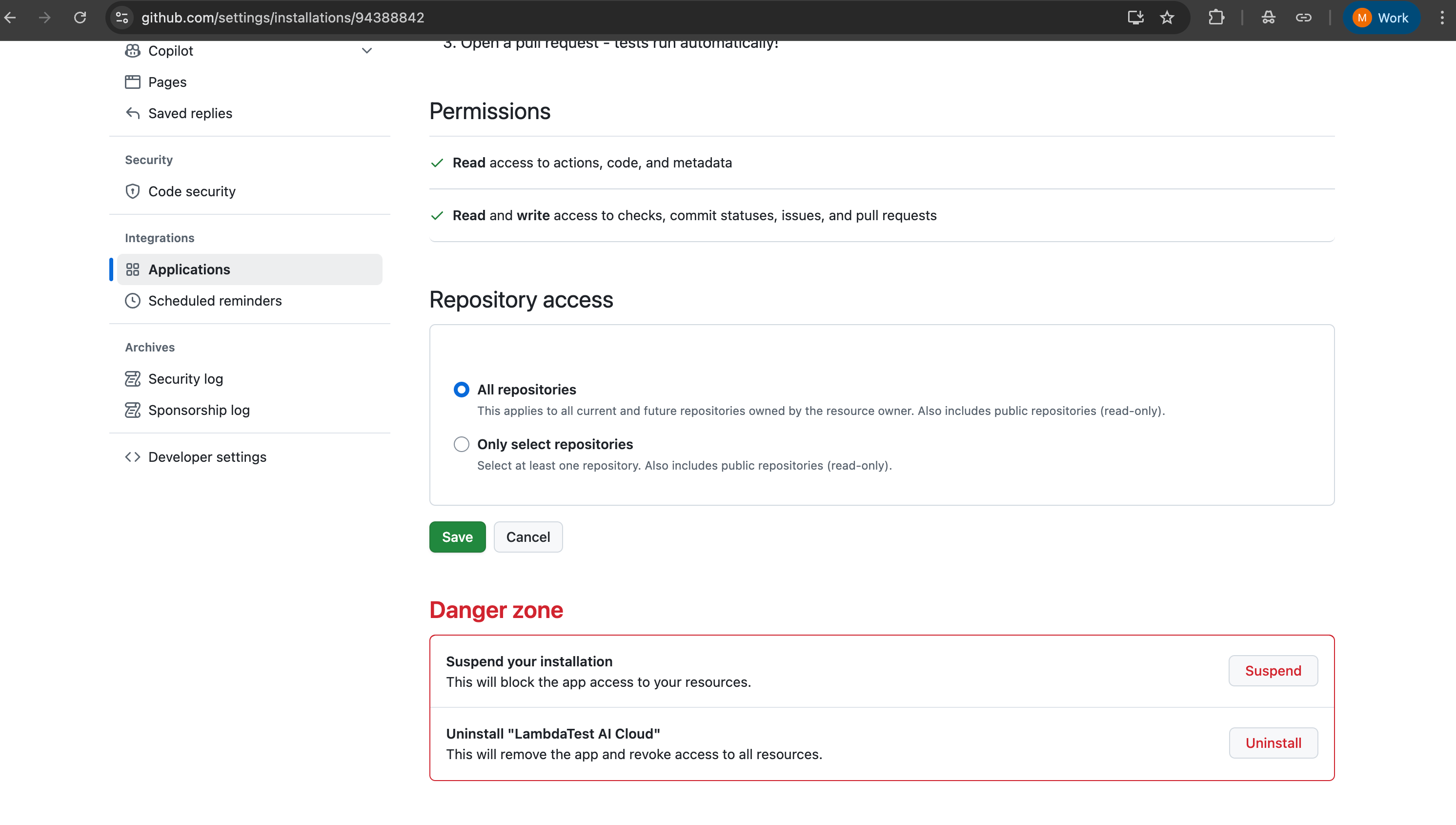
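If you prefer to confirm the installation from a script, GitHub's REST API provides a "List app installations for an organization" endpoint (`GET /orgs/{org}/installations`). The sketch below builds that request with Python's standard library and checks the JSON response for a given app slug. The organization name, token, and app slug are placeholders: the TestMu AI Cloud app's actual slug is not given on this page (it appears in the app's `github.com/apps/...` URL), and GitHub generally requires an organization owner's token for this endpoint, so check GitHub's REST documentation for the exact permissions.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_installations_request(org: str, token: str) -> urllib.request.Request:
    """Build a GET request for GitHub's 'List app installations for an organization' endpoint."""
    return urllib.request.Request(
        # per_page=100 is the maximum page size; larger orgs would need to paginate.
        f"{GITHUB_API}/orgs/{org}/installations?per_page=100",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
        method="GET",
    )


def is_app_installed(response_body: str, app_slug: str) -> bool:
    """Return True if any installation in the JSON response has the given app slug."""
    data = json.loads(response_body)
    return any(
        inst.get("app_slug") == app_slug for inst in data.get("installations", [])
    )
```

To run the check, pass the request to `urllib.request.urlopen()` and hand the decoded response body to `is_app_installed()` together with the app's slug.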

3.1 Uninstalling the GitHub App

If you need to remove the TestMu AI Cloud GitHub App from your organization:

- Navigate to the TestMu AI Cloud GitHub App on GitHub Marketplace

- Click on the Configure button to access the app settings

- Scroll down to the bottom of the page to find the Danger Zone section

- Click on the Uninstall button to remove the app from your organization

- Confirm the uninstallation when prompted

Uninstalling the GitHub App will stop all AI-powered test generation workflows on your pull requests. This action cannot be undone, and you'll need to reinstall the app to restore functionality.

4. Repository Configuration

After installing the GitHub App, each repository requires a configuration file to connect your GitHub workflow with your TestMu AI Test Manager environment. This configuration defines where tests should be stored, who should be assigned, and which environment to test against.

Configuration File Setup

Create a .lambdatest/config.yaml file in the root directory of your repository with the following structure:

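```yaml
# LambdaTest AI Cloud Configuration
project_id: "your_project_id"                   # Test Manager project ID
folder_id: "your_folder_id"                     # folder for generated test cases
assignee: your_user_id                          # TestMu AI user assigned to test runs
environment_id: your_environment_id             # target testing environment ID
test_url: "https://your-deployed-app-url.com/"  # base URL of the app under test
```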

Configuration Parameters

| Parameter | Description | How to Obtain |
|---|---|---|
| project_id | The unique identifier of your TestMu AI Test Manager project | Open your project in Test Manager and copy the ID from the URL |
| folder_id | The folder in which generated test cases are organized | Create or select a folder in Test Manager and copy its ID from the URL |
| assignee | The TestMu AI user ID to which test runs are assigned | Retrieve it through the TestMu AI APIs |
| environment_id | The target testing environment (browser, OS, and device configuration) | Create an environment in Test Manager and reference its ID |
| test_url | The base URL of your application under test | Your staging or testing environment URL, where tests will be executed |

Note: All configuration IDs can also be retrieved programmatically through the APIs described in the TestMu AI Test Manager API Documentation.
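Before opening a PR, a quick local check can catch missing IDs or a malformed `test_url`. The following is a minimal sketch, assuming the config stays as flat `key: value` pairs like the example above; it is not a general YAML parser (use a library such as PyYAML for anything more complex). Treating all five parameters from the table as required is also an assumption, since this page does not say which ones are optional.

```python
from urllib.parse import urlparse

# Assumption: every parameter from the table above is required.
REQUIRED_KEYS = ("project_id", "folder_id", "assignee", "environment_id", "test_url")


def _parse_value(raw: str) -> str:
    """Return a scalar without surrounding quotes or a trailing ' #' comment."""
    value = raw.strip()
    if value.startswith("#"):
        return ""  # the whole remainder is a comment
    if value[:1] in ('"', "'"):
        end = value.find(value[0], 1)  # escaped quotes are not handled
        if end == -1:
            raise ValueError(f"unterminated quoted value: {raw!r}")
        return value[1:end]
    # Unquoted value: YAML treats ' #' as the start of a comment.
    return value.split(" #", 1)[0].strip()


def parse_flat_yaml(text: str) -> dict:
    """Parse flat 'key: value' lines only; this is not a general YAML parser."""
    config = {}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and full-line comments
        key, sep, value = stripped.partition(":")  # split at the first colon only
        if not sep:
            raise ValueError(f"not a 'key: value' line: {line!r}")
        config[key.strip()] = _parse_value(value)
    return config


def validate_config(config: dict) -> list:
    """Return a list of problems; an empty list means every check passed."""
    problems = [f"missing or empty: {key}" for key in REQUIRED_KEYS if not config.get(key)]
    url = config.get("test_url")
    if url:
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append("test_url must be an absolute http(s) URL")
    return problems
```

For example, `validate_config(parse_flat_yaml(Path(".lambdatest/config.yaml").read_text()))` (with `from pathlib import Path`) returns an empty list when the file passes.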

The README.md file in your repository is analyzed by KaneAI to understand your application's purpose, architecture, and business logic. A comprehensive README significantly improves the quality and relevance of generated test cases. Include:

- Application overview and key features

- User workflows and critical paths

- Architecture and technology stack

- Business rules and validation logic

Repository Structure

Your final repository structure should look like this:

```
your-repo/
├── .lambdatest/
│   └── config.yaml   # LambdaTest configuration
├── agent.md          # Optional file for custom instructions to enhance responses
├── src/              # Your application source code
├── README.md         # Detailed project documentation (used by AI)
└── ...               # Other project files
```
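A CI step or pre-commit hook could verify this layout before anyone triggers the workflow. The sketch below uses a hypothetical helper, `check_layout`, that is not part of any TestMu AI tooling. It treats `.lambdatest/config.yaml` as required, `README.md` as recommended (KaneAI uses it for context), and `agent.md` as optional; it assumes `agent.md` sits at the repository root. The workflow section also spells the file `AGENT.md`, and on case-sensitive file systems the two names differ, so adjust `OPTIONAL` to the name your team actually uses.

```python
from pathlib import Path

REQUIRED = (".lambdatest/config.yaml",)
RECOMMENDED = ("README.md",)  # analyzed by KaneAI for application context
OPTIONAL = ("agent.md",)      # custom instructions; root location and casing are assumed


def check_layout(repo_root) -> dict:
    """Report which expected files are missing under repo_root, grouped by importance."""
    root = Path(repo_root)

    def missing(paths):
        # Path accepts forward-slash relative paths on every platform.
        return [p for p in paths if not (root / p).is_file()]

    return {
        "required": missing(REQUIRED),
        "recommended": missing(RECOMMENDED),
        "optional": missing(OPTIONAL),
    }
```

A CI job could fail the build when `check_layout(".")["required"]` is non-empty and merely warn about the other two groups.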

Configuration Best Practices

- Environment Segregation: Use separate `project_id` and `folder_id` values for different branches (e.g., staging vs. production) to maintain test organization

- Team Assignment: Configure `assignee` to route test runs to the appropriate QA team member, or use a shared team account for visibility

- Dynamic URLs: For teams with ephemeral preview environments, consider parameterizing `test_url` or updating it per deployment

- Version Control: Commit `.lambdatest/config.yaml` to your repository so all team members use a consistent configuration
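For the Dynamic URLs practice, one option is a CI step that rewrites `test_url` from the preview URL your deployment produces. In this sketch, the `PREVIEW_URL` environment variable is a hypothetical name, and the script assumes the flat config format shown earlier. Because the app presumably reads the file from GitHub rather than from your CI workspace, the updated file would need to be committed to the PR branch; whether the app reads `.lambdatest/config.yaml` from the PR's head branch or from the default branch is not stated here, so confirm that before relying on per-PR edits.

```python
import os
import re

# Matches the whole test_url line; [ \t]* keeps the match from spilling onto the next line.
TEST_URL_LINE = re.compile(r"^(test_url:[ \t]*).*$", re.MULTILINE)


def set_test_url(config_text: str, new_url: str) -> str:
    """Return config_text with the test_url value replaced by the quoted new_url.

    The whole line is replaced, so any inline comment on it is dropped.
    """
    # A function replacement avoids backslash-escape surprises in new_url.
    updated, count = TEST_URL_LINE.subn(lambda m: f'{m.group(1)}"{new_url}"', config_text)
    if count != 1:
        raise ValueError(f"expected exactly one test_url line, found {count}")
    return updated


def update_config_file(path: str = ".lambdatest/config.yaml", env_var: str = "PREVIEW_URL") -> None:
    """Rewrite test_url in the config file from a (hypothetical) CI environment variable."""
    new_url = os.environ[env_var]
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_test_url(text, new_url))
```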

5. Triggering Test Generation

With the GitHub App installed and your repository configured, you're ready to start generating AI-powered tests for your pull requests.

Initiating the Workflow

The test generation workflow is triggered through a simple comment on any pull request in your repository. Once your development work is ready for testing:

- Create a Pull Request: Push your feature branch and open a PR as you normally would

- Add a Trigger Comment: Post either of the following commands in the PR comments section:

```
@LambdaTest Validate this PR
```

or

```
@KaneAI Validate this PR
```

Either command initiates the complete AI-powered testing workflow, from analysis to execution and reporting.
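The trigger is an ordinary PR comment, so it can also be posted from automation through GitHub's REST API; pull requests share the issue comment endpoint, `POST /repos/{owner}/{repo}/issues/{issue_number}/comments`. The sketch below only builds the request, and the owner, repository, PR number, and token are placeholders. It assumes the app responds to API-posted comments the same way it responds to comments typed in the GitHub UI, which this page does not confirm.

```python
import json
import urllib.request

# One of the trigger phrases documented above.
TRIGGER_COMMENT = "@KaneAI Validate this PR"


def build_trigger_request(
    owner: str, repo: str, pr_number: int, token: str, body: str = TRIGGER_COMMENT
) -> urllib.request.Request:
    """Build a POST request that adds a conversation comment to a pull request.

    GitHub treats pull requests as issues for this endpoint.
    """
    payload = json.dumps({"body": body}).encode("utf-8")
    return urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/issues/{pr_number}/comments",
        data=payload,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen()` sends the comment, which is attributed to the token's owner.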

What Happens Next

After you post the trigger comment, KaneAI immediately begins working:

- Code Analysis: The AI examines all code changes in the pull request, including file modifications, additions, and deletions

- Context Gathering: The PR title, description, and comments, along with your repository's README.md and AGENT.md, are analyzed to understand the application context

- Test Strategy: Based on the changes and context, the AI determines which areas require testing and what scenarios to cover

- Test Generation: Intelligent test cases are created with appropriate assertions, validations, and edge case handling

This entire process typically completes within minutes, depending on the complexity of your code changes.

6. Automated Workflow and Real-Time Tracking

The TestMu AI Cloud GitHub App provides complete transparency throughout the testing lifecycle. From the moment you trigger the workflow, you'll receive real-time updates directly in your pull request—keeping your entire team informed without requiring context switching or dashboard checking.

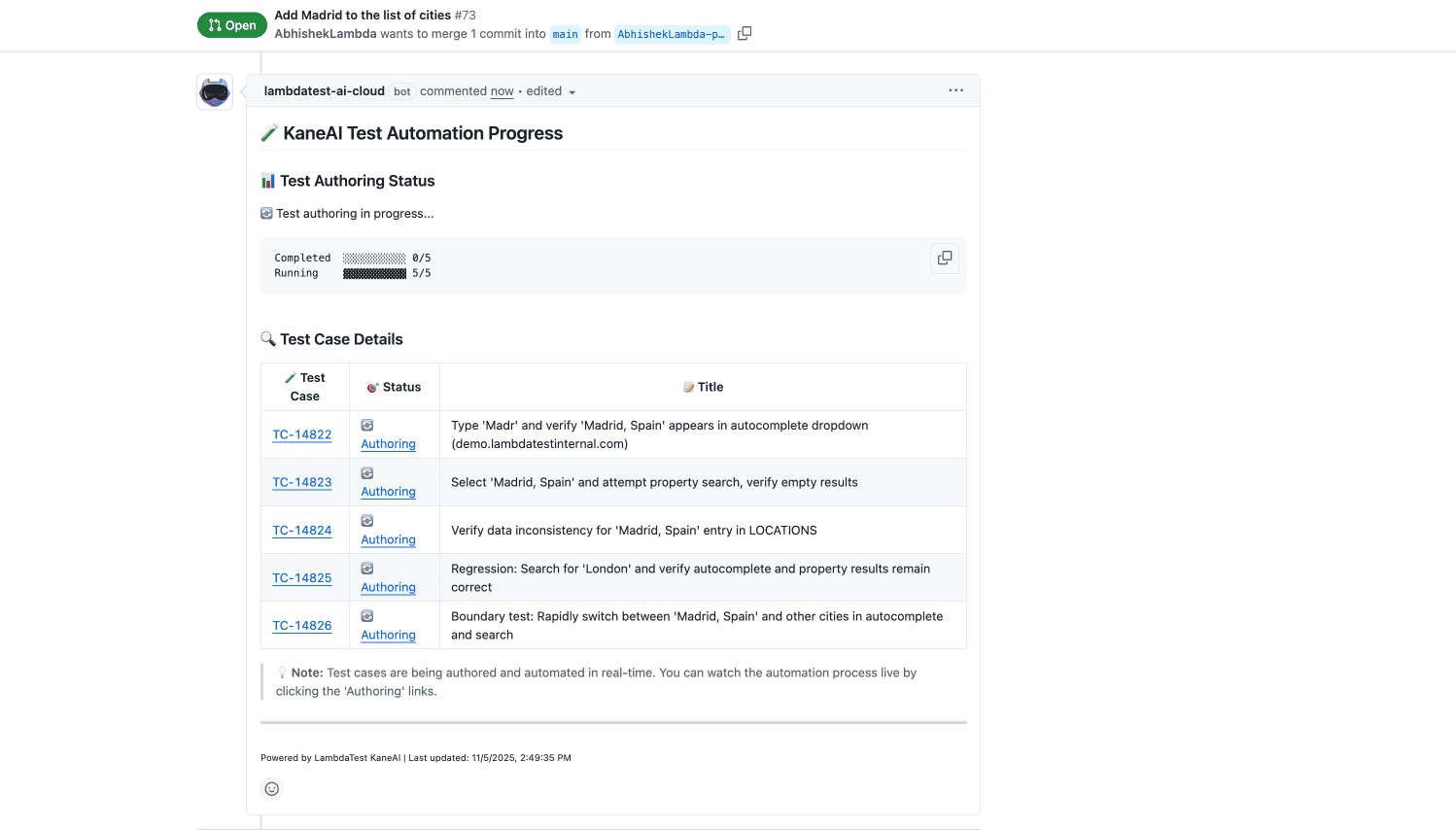

Unified Progress Tracking

As soon as the workflow begins, KaneAI posts a comprehensive progress tracker comment to your PR. This dynamic comment serves as your single source of truth for the entire testing operation.

The Progress Tracker includes:

- Current Workflow Status: Real-time updates on which phase is currently executing

- Test Case Pipeline: Progress through analysis, generation, authoring, and code generation phases

- Intelligent Test Case Suggestion: AI suggests semantically similar test cases that already exist in the project

- Test Run Management: Execution status, including configuration, triggering, monitoring, and completion

- Reporting Status: Final report generation and PR approval recommendation

This tracker automatically updates as each stage completes, providing instant visibility into the workflow without requiring any manual refreshes.

Detailed Step Comments

In addition to the main progress tracker, KaneAI creates dedicated, detailed comments for each major milestone. These comments provide actionable information and direct access to relevant resources.

1. AI-Generated Test Case Inventory

Once KaneAI completes test generation, a detailed comment lists every test case that was created, including:

- Test Case Names: Descriptive titles reflecting the functionality being tested

- Coverage Areas: Which features, components, or user flows each test validates

- Authoring Status: Real-time updates as each test case is converted into executable code

- Direct Links: Quick access to view, edit, or customize tests in TestMu AI Test Manager

This comment updates dynamically as test authoring progresses, so you can monitor the transition from conceptual test cases to executable automation.

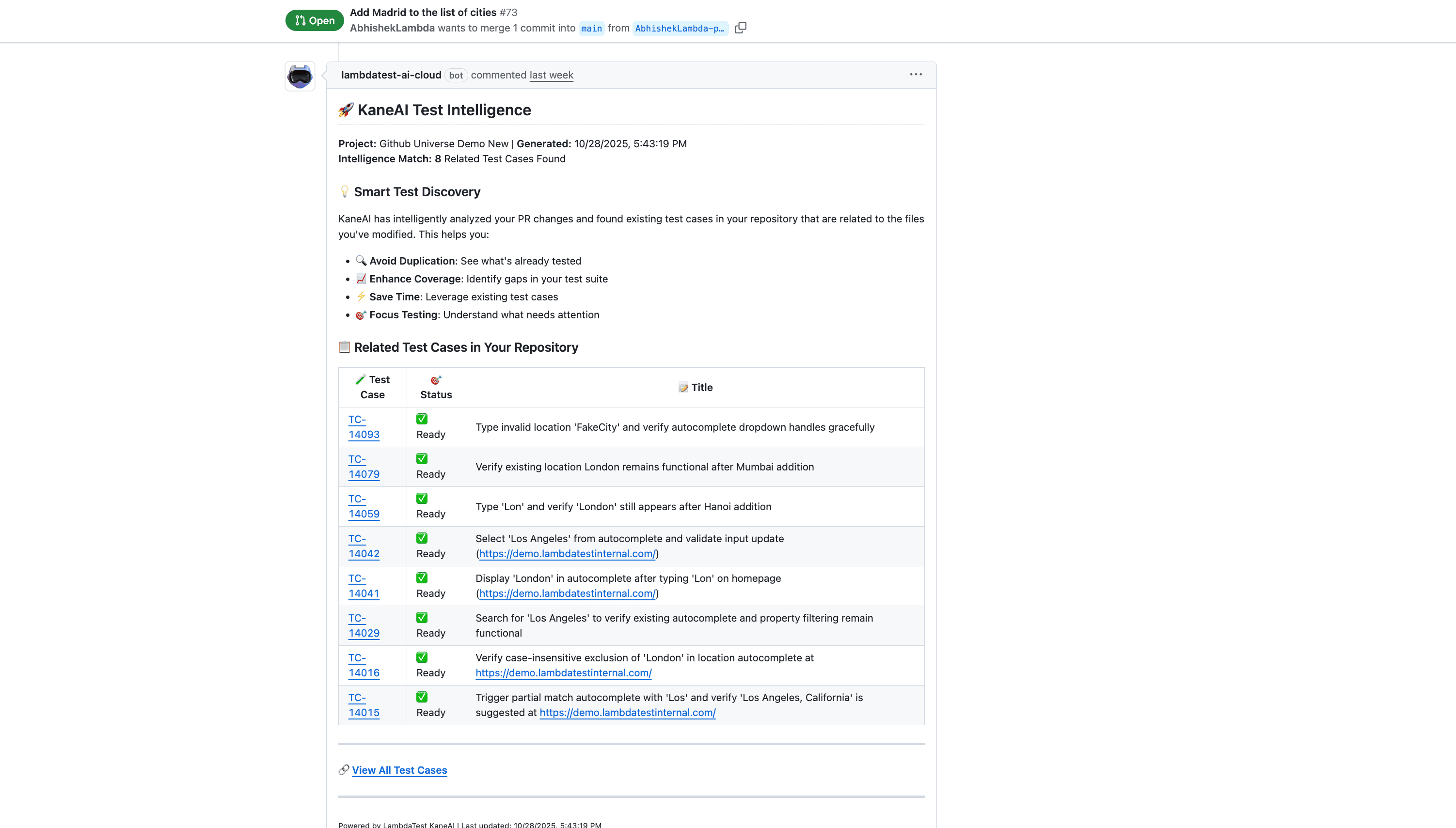

2. Duplicate Detection and Test Optimization

To prevent test redundancy and optimize your test suite, KaneAI performs semantic analysis against your existing test inventory:

- Similar Test Identification: Finds existing tests that cover similar functionality or user scenarios

- Test Suite Optimization: Helps maintain a lean, efficient test suite by preventing duplicate coverage

This intelligent analysis ensures your test repository remains organized and maintainable as it grows over time.

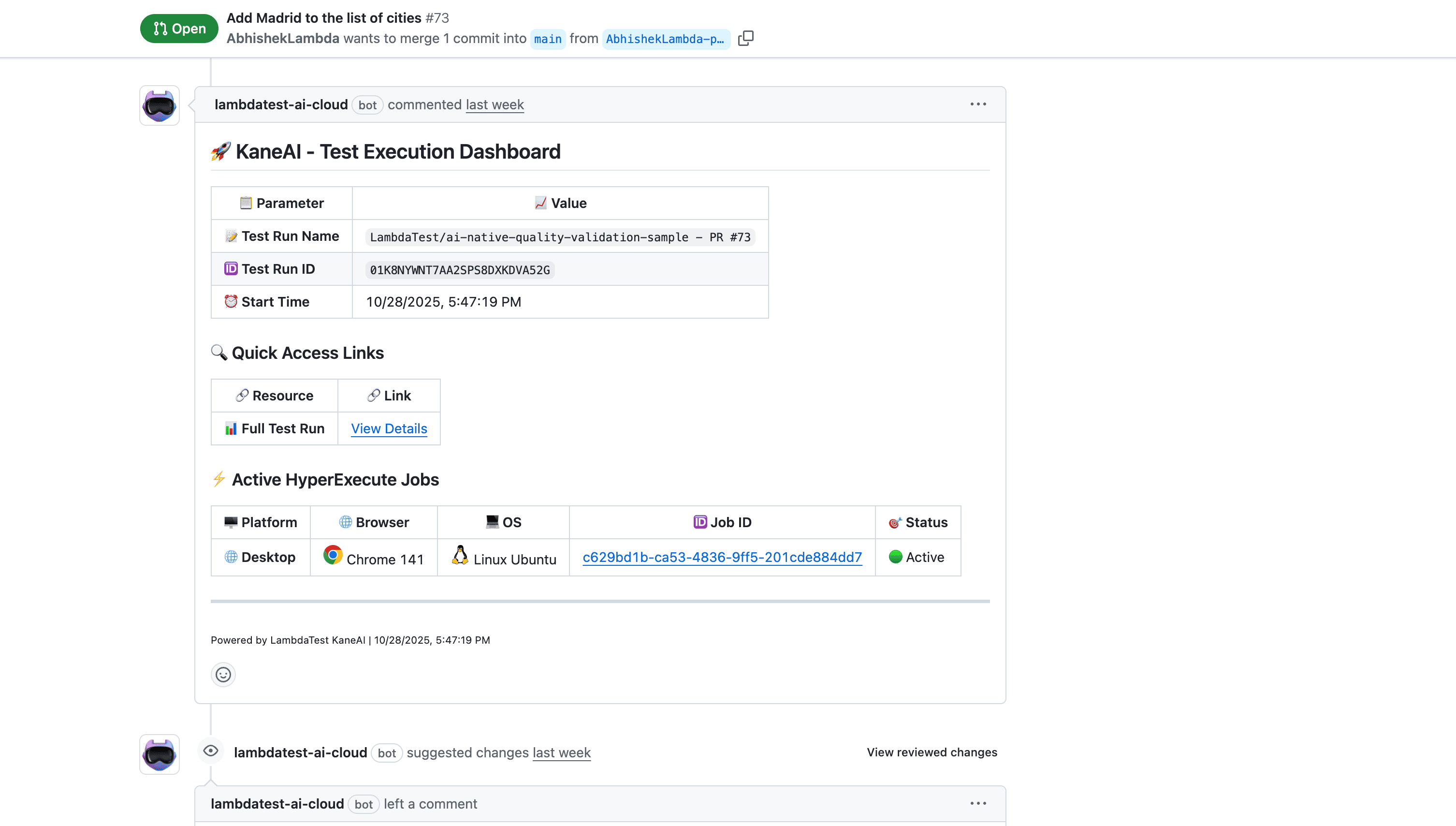
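KaneAI's duplicate detection is semantic, and its method is not documented here. To illustrate only the general idea of scoring new tests against an existing inventory, the sketch below swaps in Jaccard similarity over word sets, a much cruder lexical measure: it scores the word overlap between a new test case title and a list of existing titles and returns those above a threshold. It will miss paraphrases that a semantic model would catch; the function names and the 0.5 threshold are illustrative choices.

```python
import re


def word_set(text: str) -> set:
    """Lower-cased alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Size of the intersection over size of the union of the two word sets."""
    wa, wb = word_set(a), word_set(b)
    if not wa and not wb:
        return 1.0  # two empty titles are treated as identical
    return len(wa & wb) / len(wa | wb)


def find_similar(new_title: str, existing_titles: list, threshold: float = 0.5) -> list:
    """Return (title, score) pairs at or above threshold, highest score first."""
    scored = [(title, jaccard(new_title, title)) for title in existing_titles]
    return sorted(
        (pair for pair in scored if pair[1] >= threshold),
        key=lambda pair: pair[1],
        reverse=True,
    )
```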

3. Test Execution Dashboard

When test execution begins on HyperExecute, a dedicated comment provides:

- Test Run Configuration: Details about the execution environment, browser matrix, and parallel execution settings

- Real-Time Execution Status: Live updates as tests run, including pass/fail counts and completion percentage

- HyperExecute Dashboard Link: Direct access to detailed logs, screenshots, video recordings, and network traces

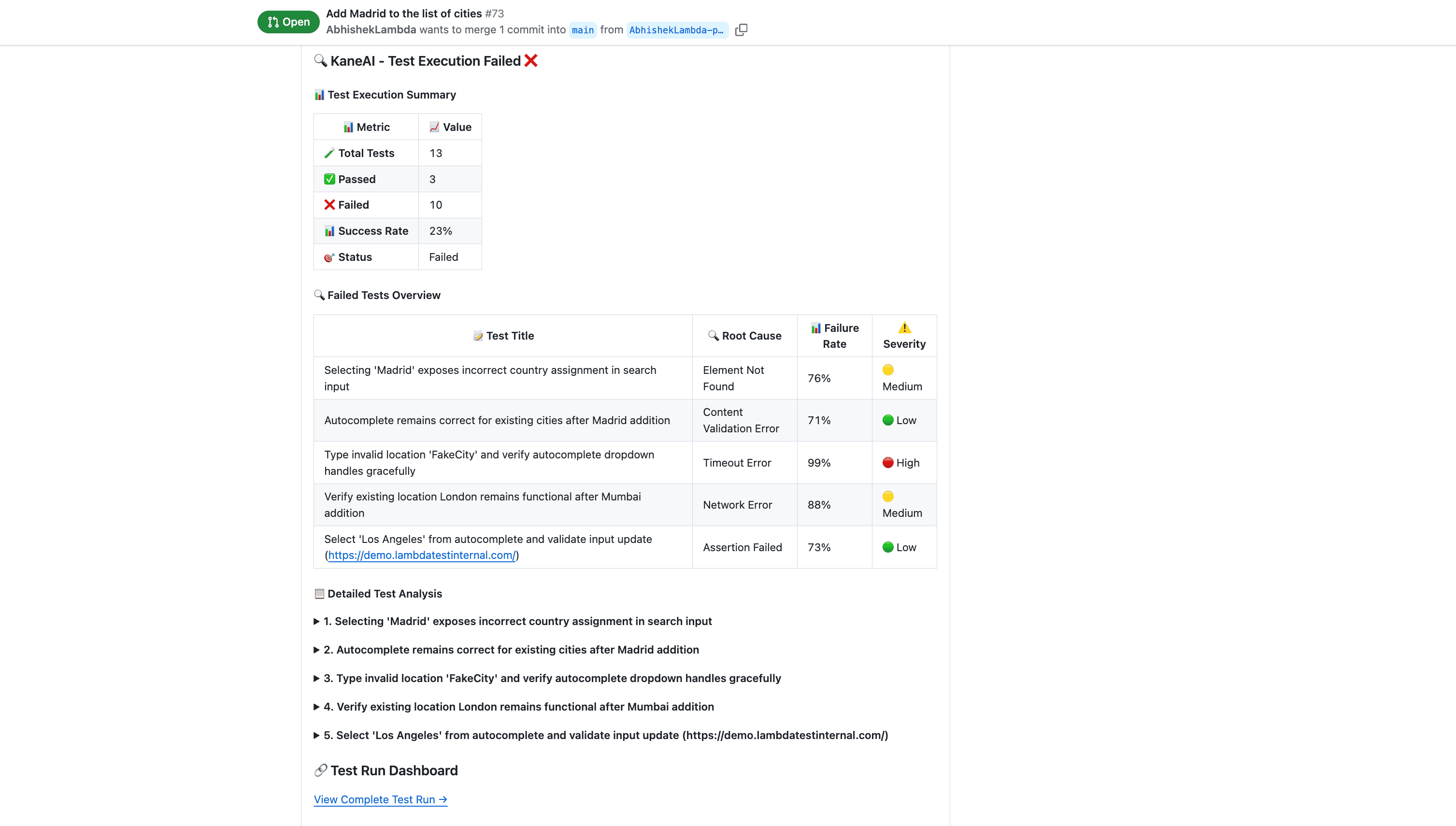
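The pass/fail counts and completion percentage in the execution comment come down to simple arithmetic over per-test statuses. The sketch below assumes hypothetical status labels (`passed`, `failed`, `skipped`, with anything else treated as still in progress); HyperExecute's actual status names may differ. Completion counts every finished test, while the pass rate considers only tests that passed or failed.

```python
from collections import Counter

# Hypothetical status labels; anything else counts as still in progress.
FINISHED = ("passed", "failed", "skipped")


def summarize(statuses: list) -> dict:
    """Summarize per-test statuses into counts and percentages."""
    counts = Counter(statuses)  # missing labels count as 0
    total = len(statuses)
    finished = sum(counts[s] for s in FINISHED)
    executed = counts["passed"] + counts["failed"]  # skipped tests are excluded
    return {
        "passed": counts["passed"],
        "failed": counts["failed"],
        "completion_pct": round(100 * finished / total, 1) if total else 0.0,
        "pass_rate_pct": round(100 * counts["passed"] / executed, 1) if executed else 0.0,
    }
```

For instance, two passed tests, one failed test, and one still running give 75.0% completion and a 66.7% pass rate.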

4. Comprehensive Test Report with AI-Powered Insights

Upon completion, KaneAI generates a final report that goes far beyond simple pass/fail metrics:

- Executive Summary: High-level test coverage and success rate for quick stakeholder review

- Detailed Test Results: Pass/fail status for each individual test case with failure details

- AI Root Cause Analysis (RCA): For any failures, KaneAI automatically analyzes logs, screenshots, and stack traces to identify:

  - The specific component or code path that failed

  - Potential root causes (e.g., locator changes, timing issues, data validation errors)

  - Recommended remediation steps

- PR Approval Recommendation: Based on test results and failure severity, KaneAI suggests whether the PR should be approved, requires changes, or needs further investigation

This intelligent reporting dramatically reduces the time from test completion to actionable insights, accelerating your deployment cycles.

Benefits: Unified Visibility

The GitHub-native workflow provides critical advantages for enterprise teams:

- Developer-Centric Experience: All testing information lives where developers already work—no need to check external dashboards or switch contexts

- Stakeholder Transparency: Product managers, architects, and other stakeholders can follow testing progress by simply monitoring the PR

- Audit Trail and Compliance: Every test run, result, and AI recommendation is permanently documented in your PR history

- Deep-Dive Capability: When detailed analysis is needed, one-click access to TestMu AI Test Manager and HyperExecute provides comprehensive diagnostics

- Asynchronous Collaboration: Team members in different time zones can review testing status and results without requiring synchronous communication