AI Test Case Generator

What is the AI Test Case Generator?

The AI Test Case Generator is a feature of LambdaTest Test Manager that converts requirements in many formats (text, PDFs, audio, video, images, Jira tickets, and more) into structured, context-aware software test cases. It speeds up test case creation, improves coverage and quality, and streamlines test design for both manual and automated testing workflows.

How It Works: Step-by-Step

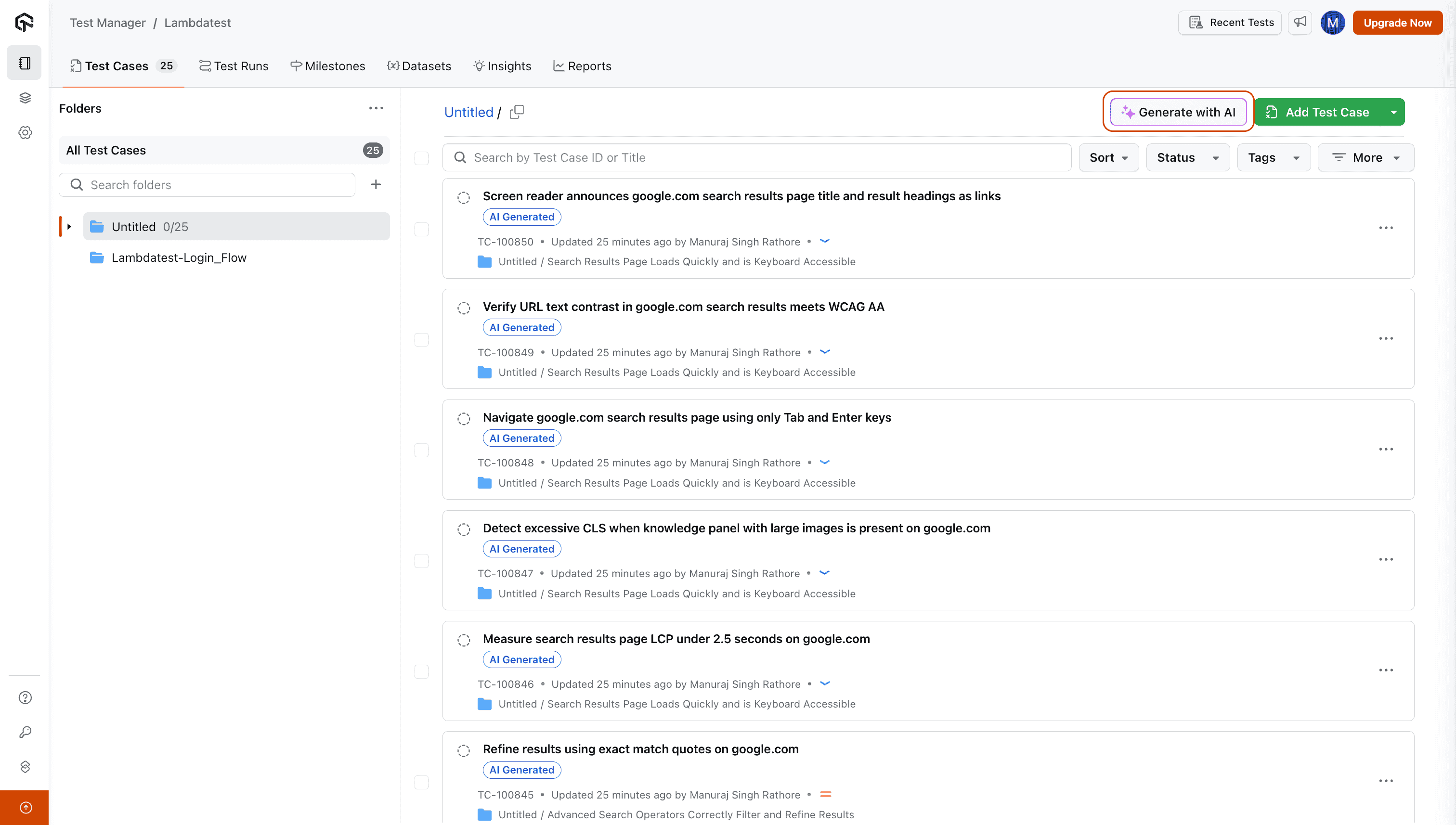

Step 1: Open the AI Test Case Generator

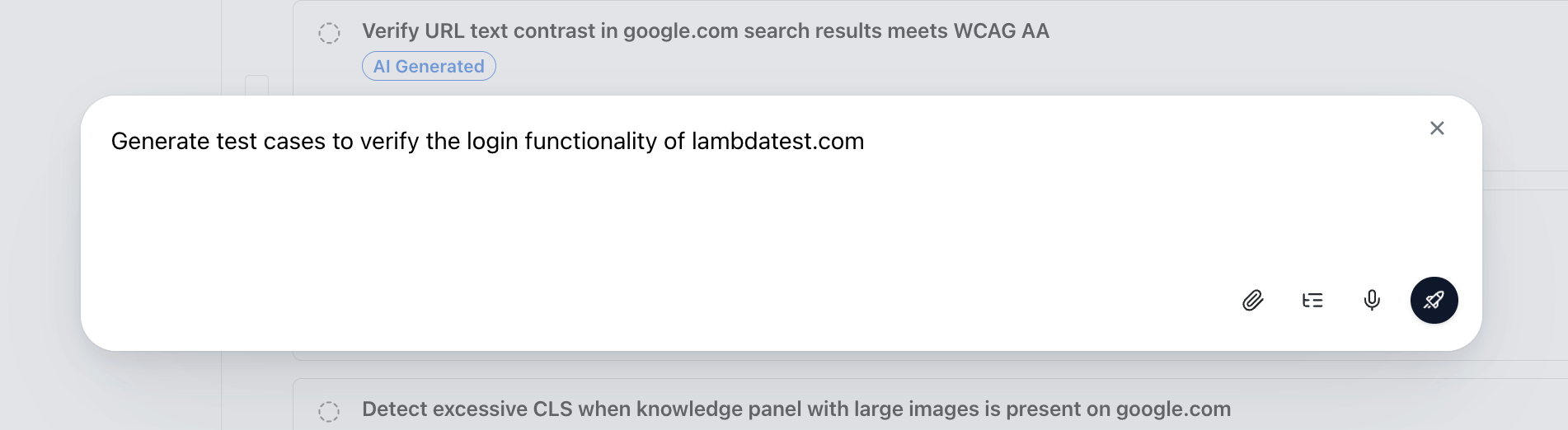

Go to the Test Case Listing page and click Generate With AI. An input box appears where you can enter your requirements.

Step 2: Enter Your Requirements

Start by entering your product or feature requirements in the input box.

We support multiple input formats, including:

- Textual requirements

- Jira/Azure DevOps links (e.g., epics, stories, tasks)

- PDFs

- Images

- Audio (recordings or uploads)

- Videos

- Spreadsheets (CSV or XLSX)

- Documents

- JSON or XML

Add Input Requirements:

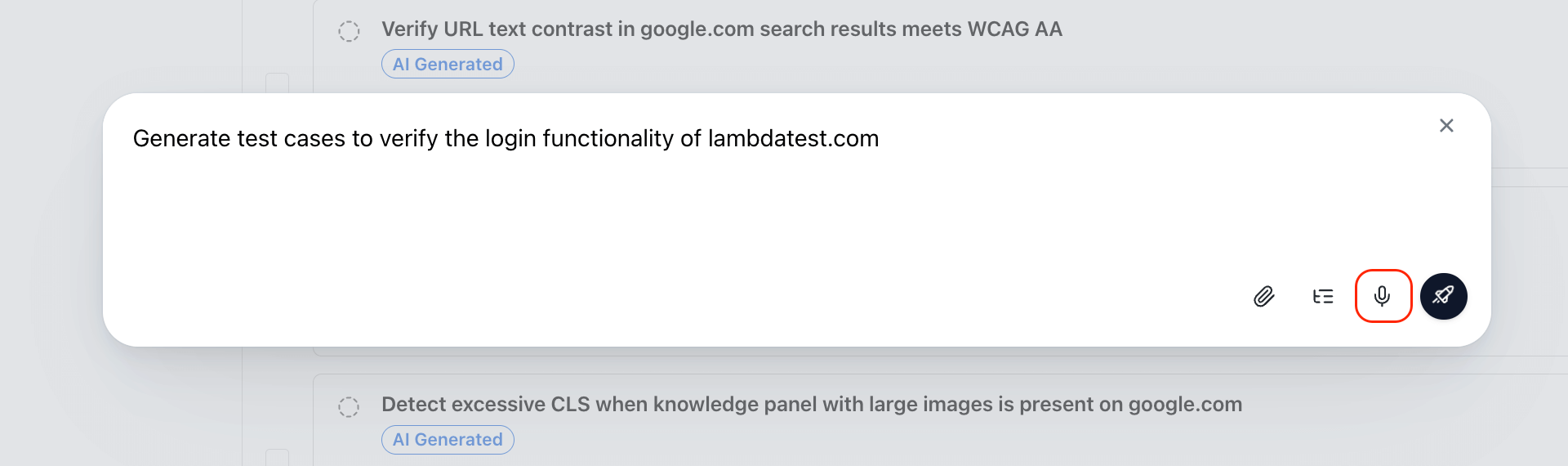

Text Input:

- Type your requirement directly into the input box.

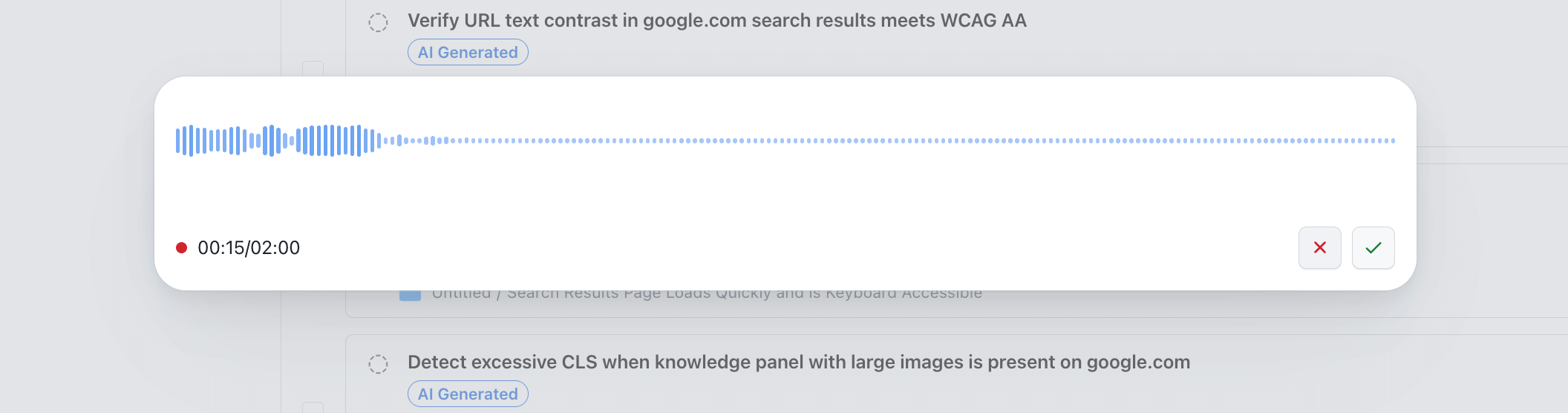

Record Audio:

- Click the mic icon to begin recording, as shown in the image below.

- Click the tick icon to confirm the recording, or the cross icon to discard it.

Audio recording is not supported in the Firefox, Arc, and Opera browsers.

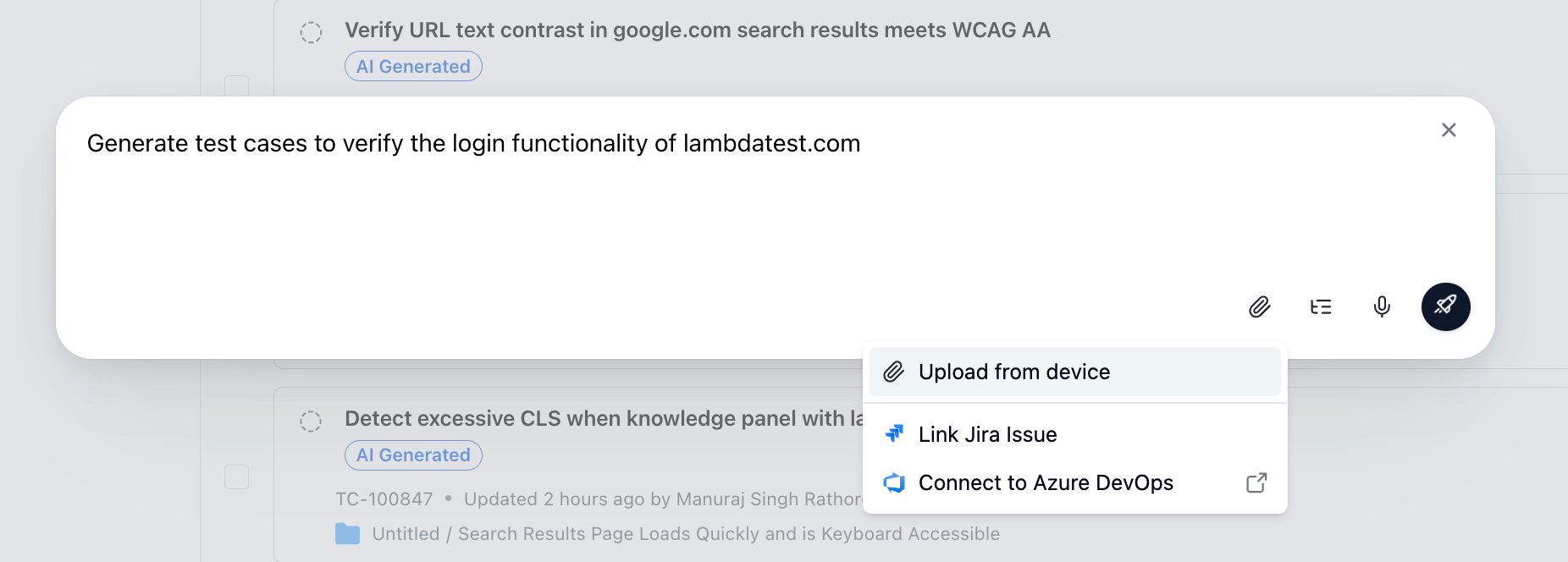

Upload Files:

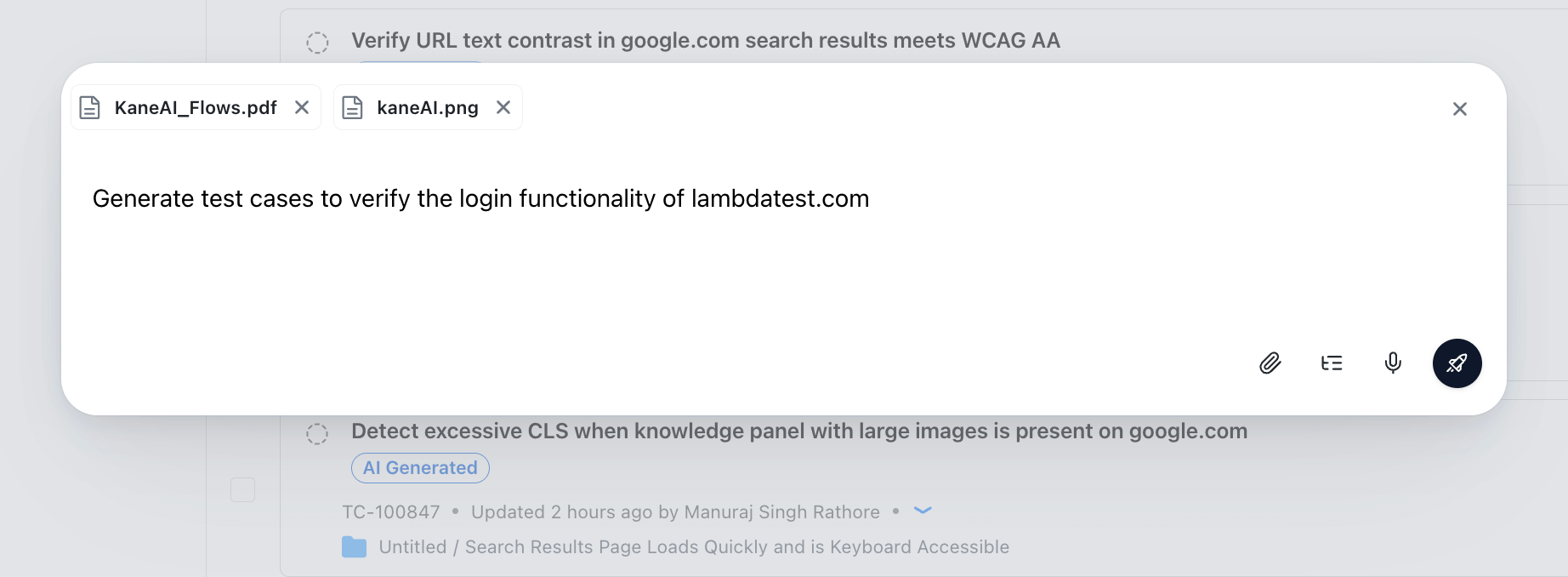

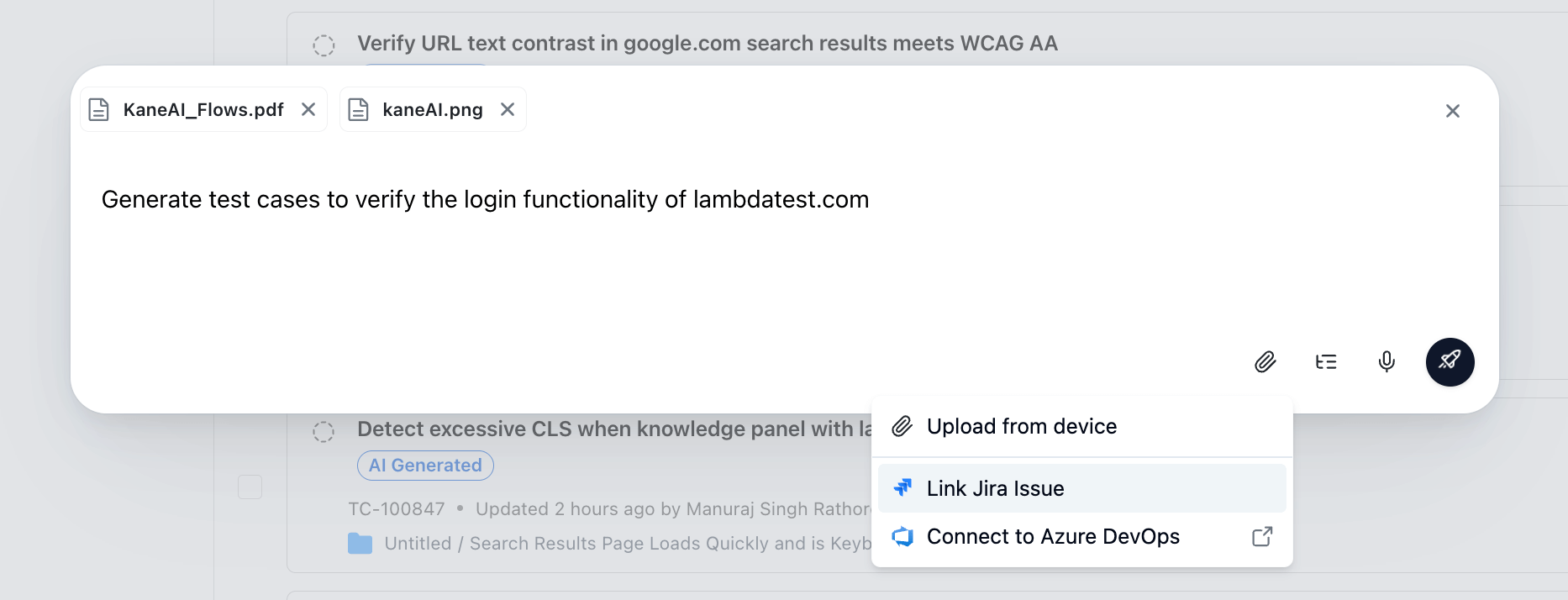

- Click the pin icon 📎, then select Upload from device.

- You can select a maximum of 10 files from your device.

- Uploaded files appear in the input box.
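If you prepare requirement files in bulk, a quick local check before uploading can catch a batch that exceeds the 10-file limit or contains an unexpected file type. The sketch below is a hypothetical helper, not part of LambdaTest: only PDF, CSV, XLSX, JSON, and XML are named in this guide, so the image, audio, video, and document extensions in the mapping are illustrative assumptions.

```python
from pathlib import Path

# Limit stated in this guide: at most 10 files per "Upload from device".
MAX_FILES = 10

# HYPOTHETICAL mapping. PDF, CSV, XLSX, JSON and XML come from this guide;
# the image, audio, video and document extensions are illustrative guesses.
CATEGORY_BY_EXTENSION = {
    ".pdf": "PDF",
    ".csv": "Spreadsheet", ".xlsx": "Spreadsheet",
    ".json": "JSON/XML", ".xml": "JSON/XML",
    ".png": "Image", ".jpg": "Image", ".jpeg": "Image",
    ".mp3": "Audio", ".wav": "Audio",
    ".mp4": "Video",
    ".docx": "Document", ".txt": "Document",
}


def precheck_upload(paths: list[str]) -> dict[str, str]:
    """Map each file name to its assumed input category.

    Raises ValueError if the batch exceeds the file limit or a file has an
    extension outside the assumed mapping.
    """
    if len(paths) > MAX_FILES:
        raise ValueError(f"{len(paths)} files selected; the limit is {MAX_FILES}")
    categories = {}
    for p in paths:
        ext = Path(p).suffix.lower()  # case-insensitive extension match
        if ext not in CATEGORY_BY_EXTENSION:
            raise ValueError(f"{Path(p).name}: extension {ext or '(none)'} not in the assumed list")
        categories[Path(p).name] = CATEGORY_BY_EXTENSION[ext]
    return categories
```

For example, `precheck_upload(["spec.pdf", "data/flows.XLSX"])` returns `{"spec.pdf": "PDF", "flows.XLSX": "Spreadsheet"}`. Check the product itself for the authoritative list of accepted file types.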

Add Issue Links:

-

Integrate your LambdaTest account with Jira/Azure DevOps. Follow the steps described in the following link to integrate Jira/Azure DevOps: LambdaTest Jira Integration / LambdaTest Azure DevOps Integration

-

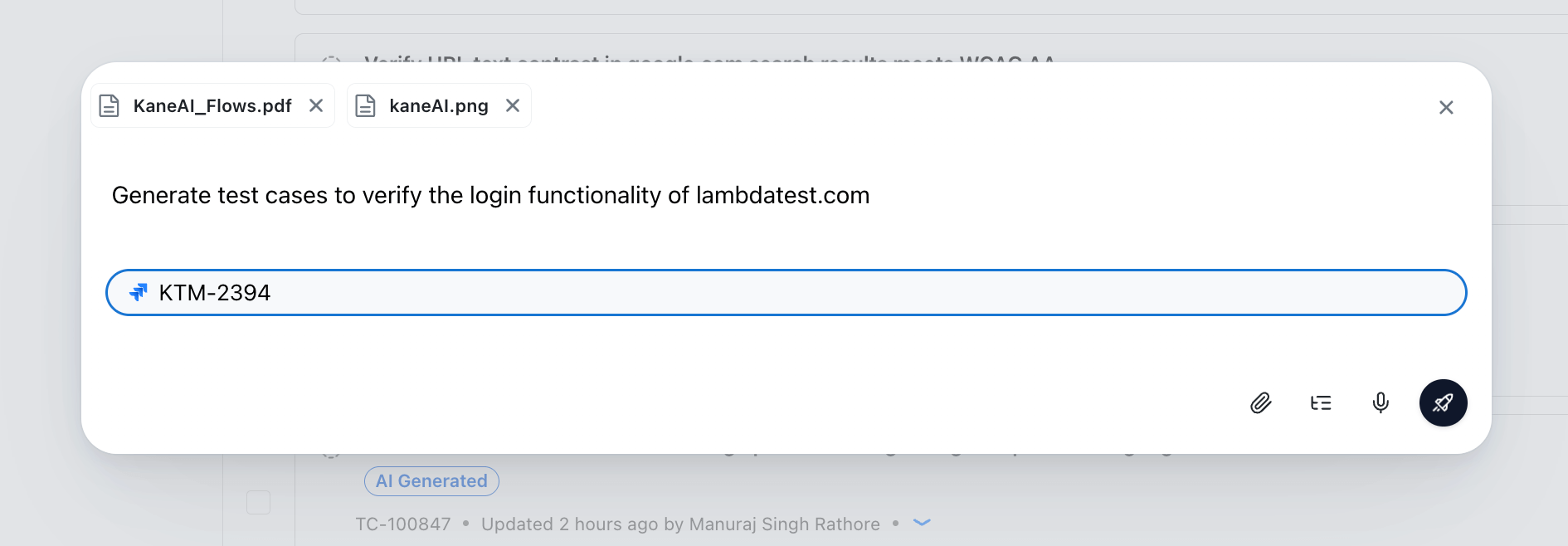

After integration is completed, select

Link Jira Issues/Link Azure DevOps Issues.

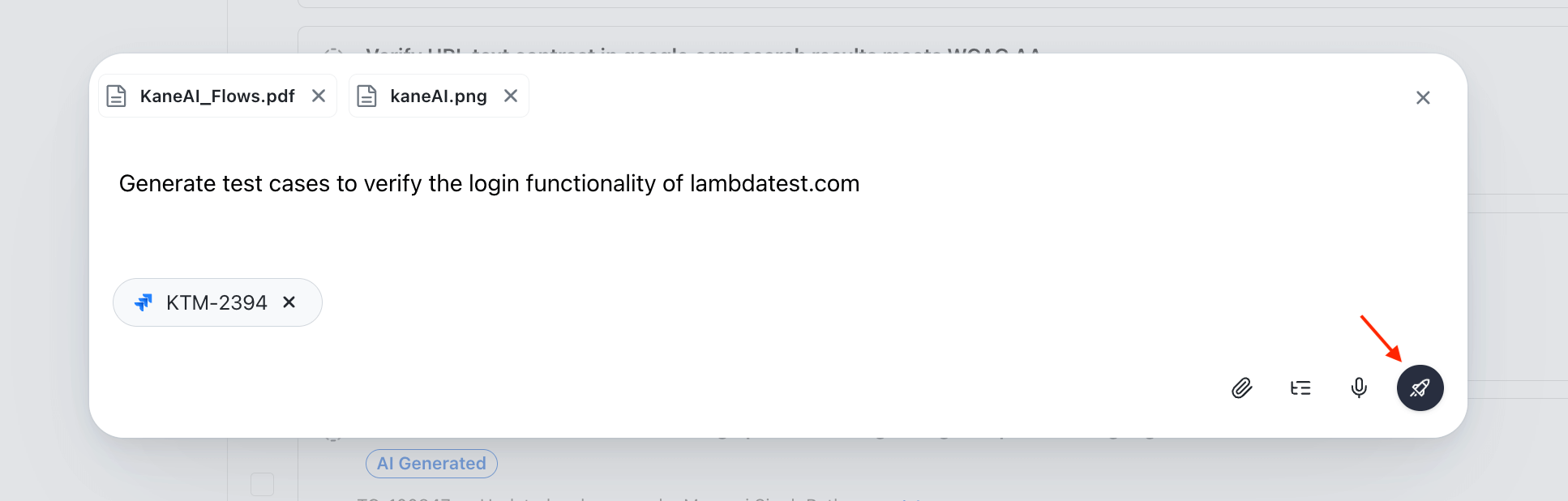

- Enter your Jira/Azure DevOps issue ID or link and press Enter.

Only issues from the connected Jira/Azure DevOps project can be linked here.

- The linked issue will then appear inside the input box.
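The input box accepts either an issue ID or a full link. If you collect issue references from elsewhere (a spreadsheet or chat thread) before pasting them in, a small normalizer can reduce them to bare IDs and flag Jira keys from the wrong project. This is a hypothetical local helper, not a LambdaTest API. It assumes the default Jira key format (`PROJ-123`, as in `.../browse/PROJ-123` links) and the Azure DevOps work item link form `.../_workitems/edit/<id>`; the `"jira"` and `"azure-devops"` labels and the `jira_project` parameter are made up for illustration.

```python
import re
from typing import Optional

# Default Jira key format: uppercase project key, hyphen, issue number.
JIRA_KEY = re.compile(r"\b([A-Z][A-Z0-9_]+-\d+)\b")
# Azure DevOps work item links end in /_workitems/edit/<numeric id>.
ADO_WORK_ITEM_LINK = re.compile(r"/_workitems/edit/(\d+)")


def normalize_issue_ref(text: str, jira_project: Optional[str] = None) -> tuple[str, str]:
    """Return (tracker, id) for a pasted issue ID or link (hypothetical helper)."""
    text = text.strip()
    link = ADO_WORK_ITEM_LINK.search(text)
    if link:
        return ("azure-devops", link.group(1))
    if text.isdigit():
        # A bare number is treated as an Azure DevOps work item ID.
        return ("azure-devops", text)
    key = JIRA_KEY.search(text)
    if key:
        issue_key = key.group(1)
        # Mirrors the rule above: only issues from the connected project can be linked.
        if jira_project and not issue_key.startswith(jira_project + "-"):
            raise ValueError(f"{issue_key} is not in the connected project {jira_project}")
        return ("jira", issue_key)
    raise ValueError(f"unrecognized issue reference: {text!r}")
```

For example, `normalize_issue_ref("https://acme.atlassian.net/browse/SHOP-42")` yields `("jira", "SHOP-42")`, which you can then paste into the input box.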

Step 3: Generate Test Cases

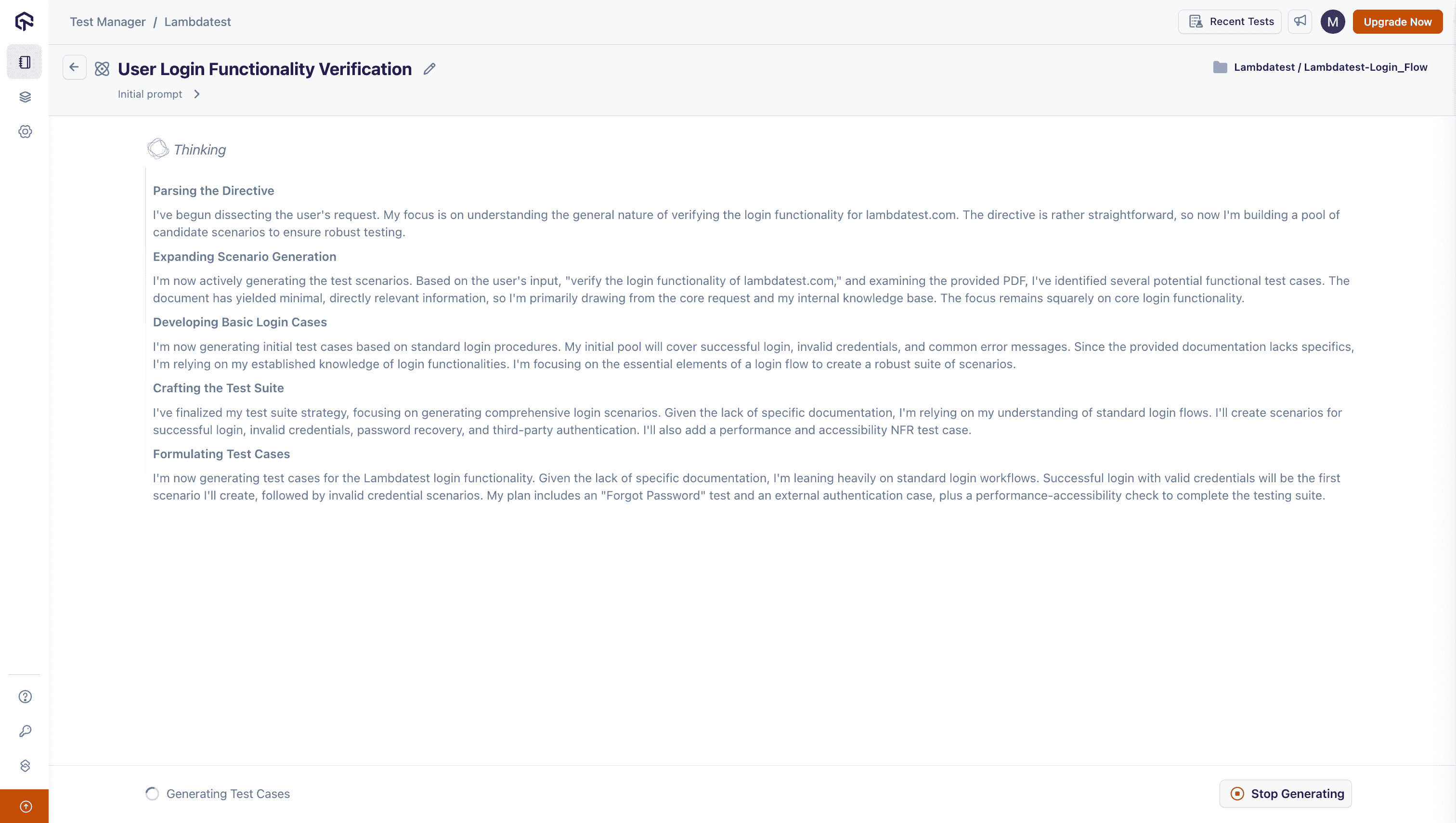

- Once all your input requirements are added, press Enter to start generating test cases.

- The AI analyzes the input and redirects you to a new screen where it suggests test cases based on that input.

To stop test case generation while the Agent is thinking, click the Stop button.

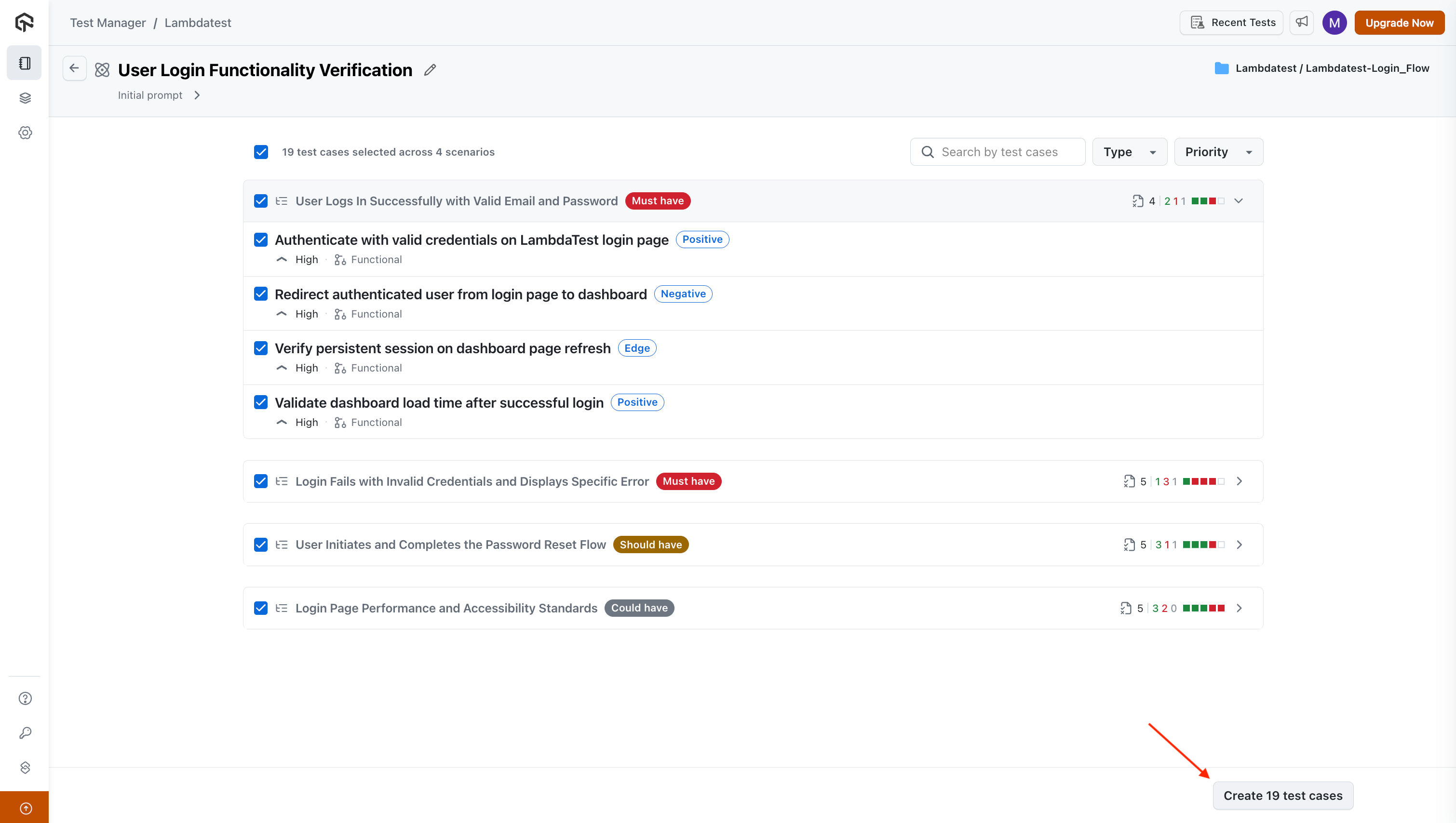

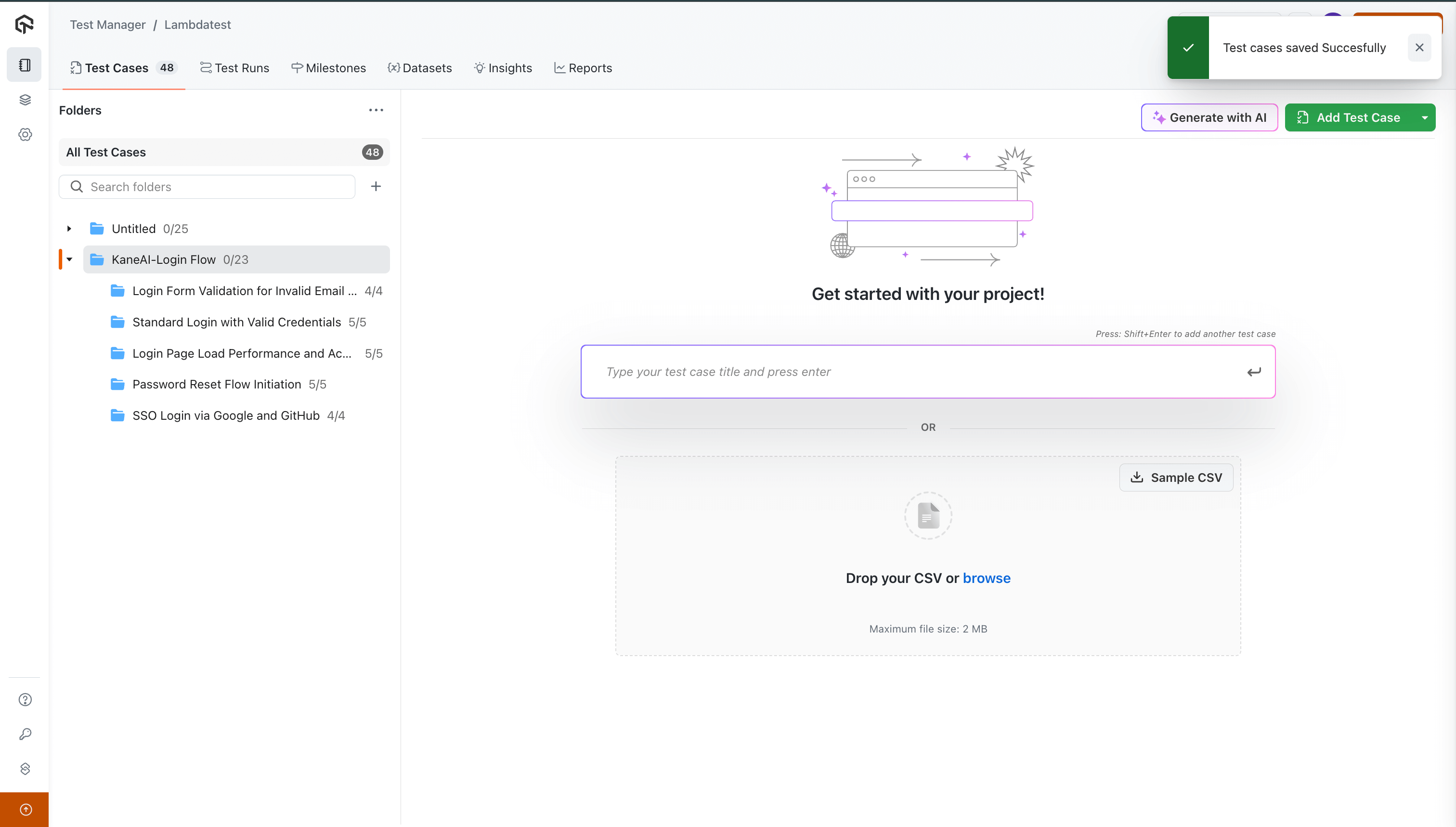

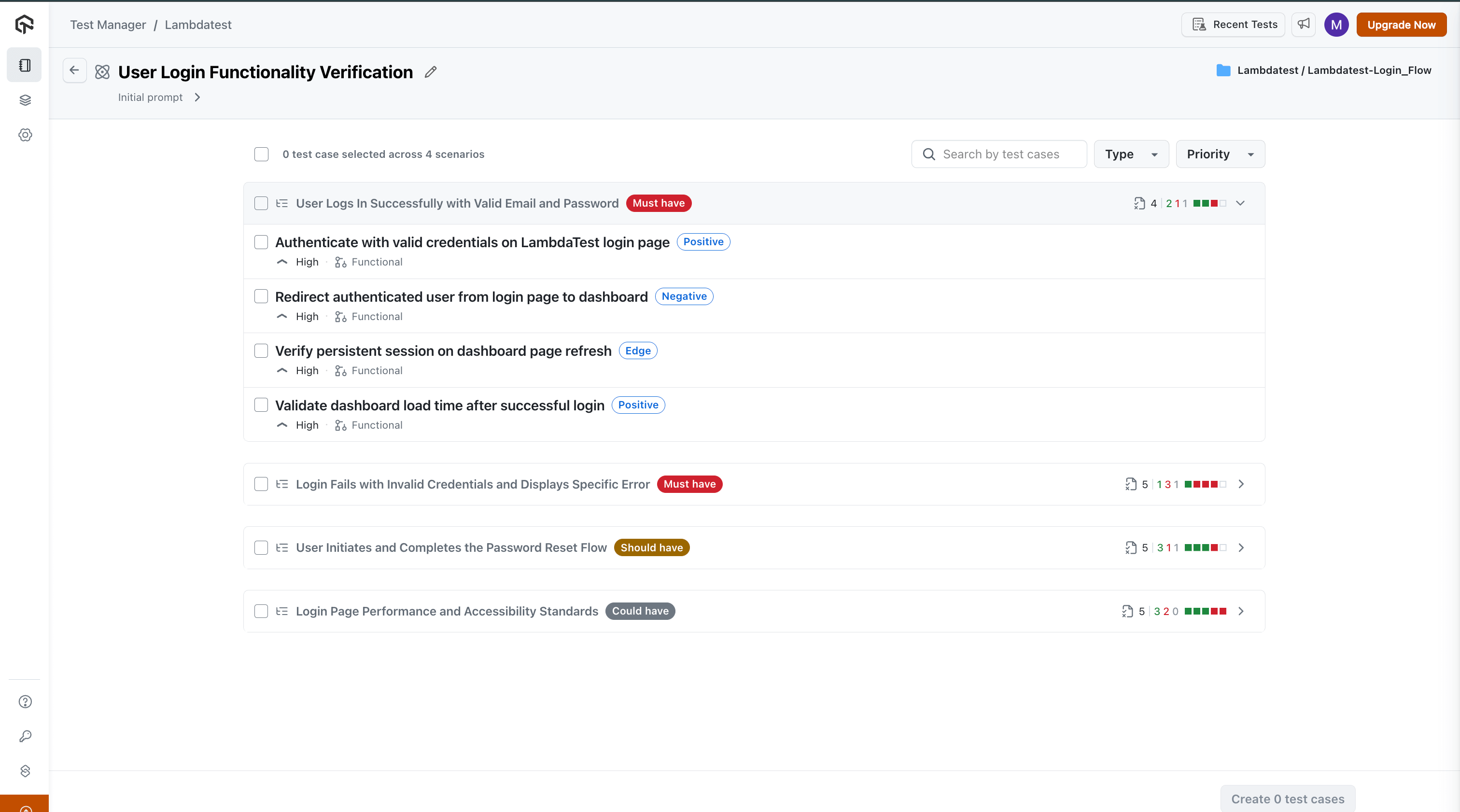

Step 4: Review Test Cases Across Scenarios

- Test cases are grouped into high-level, logical test scenarios. Each scenario represents a theme or functional area, making navigation and categorization easier.

- Scenarios are labeled with tags such as Must have, Should have, and Could have, indicating their relative importance as determined by the Agent.

- Individual test cases are further categorized with tags:

  - Positive: Valid test cases expected to pass.

  - Negative: Invalid or failure cases designed to test robustness.

  - Edge: Corner cases that may be overlooked in testing flows.
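The two tag families work together during review: scenario importance tells you where to look first, and the case-type tags tell you whether a scenario covers more than the happy path. The sketch below is a hypothetical model for reasoning about that, not how Test Manager stores scenarios; the class, enum, and function names are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Importance(Enum):
    # Scenario labels from this guide, ordered most to least important.
    MUST_HAVE = 1
    SHOULD_HAVE = 2
    COULD_HAVE = 3


class CaseType(Enum):
    POSITIVE = "Positive"  # valid input, expected to pass
    NEGATIVE = "Negative"  # invalid input or failure path
    EDGE = "Edge"          # corner case


@dataclass
class Scenario:
    name: str
    importance: Importance
    cases: list[tuple[str, CaseType]] = field(default_factory=list)


def review_order(scenarios: list[Scenario]) -> list[str]:
    """Must-have scenarios first, then should-have, then could-have."""
    return [s.name for s in sorted(scenarios, key=lambda s: s.importance.value)]


def type_counts(scenario: Scenario) -> dict[str, int]:
    """Number of test cases in the scenario per case-type tag."""
    counts = {t.value: 0 for t in CaseType}
    for _, case_type in scenario.cases:
        counts[case_type.value] += 1
    return counts


def coverage_gaps(scenario: Scenario) -> list[str]:
    """Case-type tags with no test case in this scenario."""
    return [tag for tag, n in type_counts(scenario).items() if n == 0]
```

A scenario such as a must-have checkout flow with only Positive and Negative cases would report `["Edge"]` as a gap, a hint to add corner cases or refine your input before saving.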

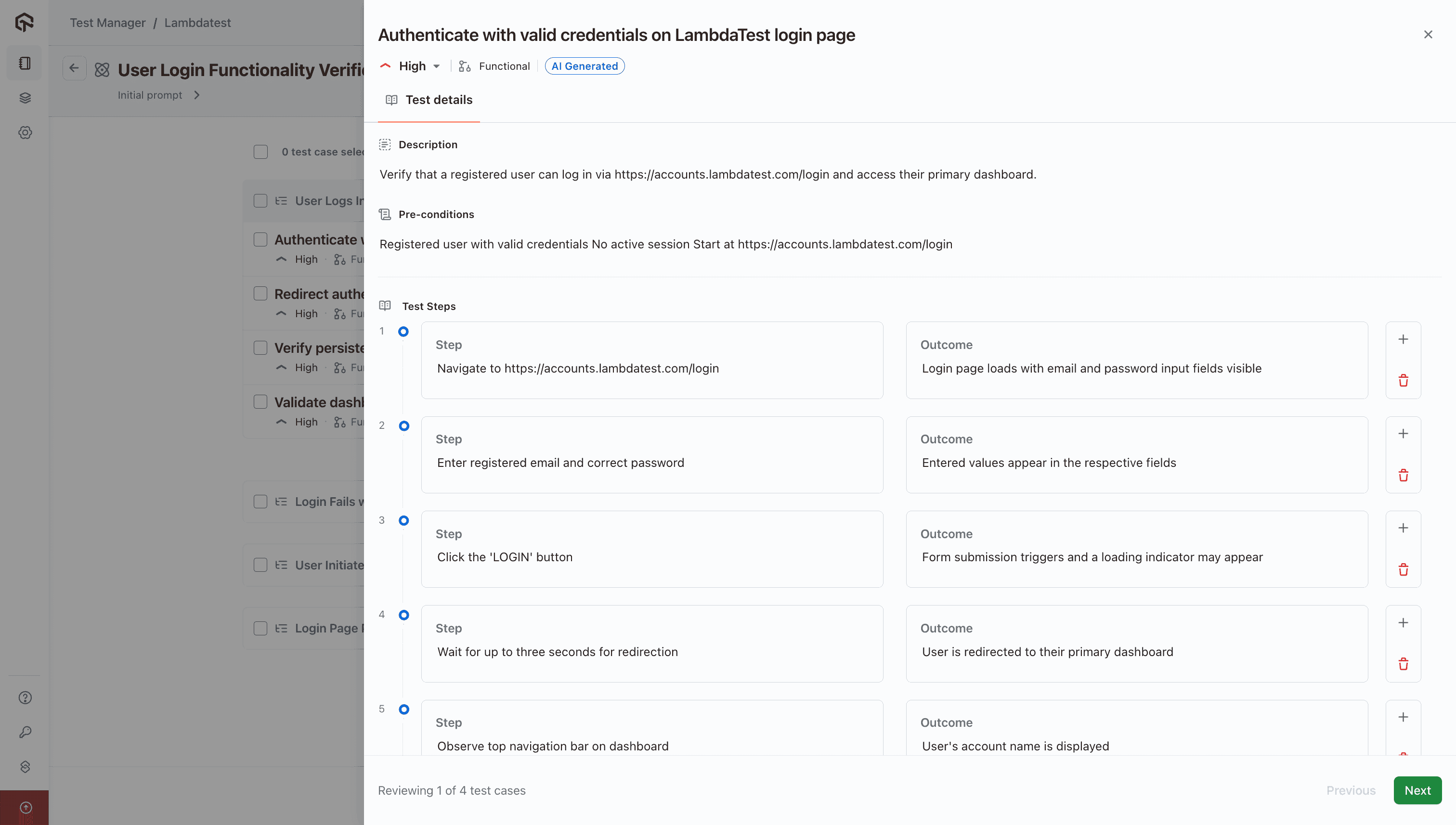

Step 5: View and Edit Test Case Details

Click on any test case to explore its full details, including:

- Test Case Title

- Description

- Pre-conditions

- Priority

- Test Steps and Expected Outcomes
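When you edit a generated test case, it helps to think of it as a record with the fields listed above, where each step pairs an action with its expected outcome. The sketch below is a hypothetical model with a completeness check you might apply to your own edits; the class names, the `missing_fields` method, and the example priority values are assumptions, not Test Manager's schema.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    action: str
    expected: str  # expected outcome for this step


@dataclass
class GeneratedTestCase:
    title: str
    description: str
    preconditions: list[str]
    priority: str  # HYPOTHETICAL values, e.g. "High", "Medium", "Low"
    steps: list[Step] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, useful to check before saving an edited case."""
        missing = [name for name in ("title", "description", "priority")
                   if not getattr(self, name).strip()]
        if not self.preconditions:
            missing.append("preconditions")
        if not self.steps:
            missing.append("steps")
        # Every step should state what the tester expects to see.
        missing += [f"steps[{i}].expected"
                    for i, step in enumerate(self.steps) if not step.expected.strip()]
        return missing
```

For a login case whose second step ("Click Log in") has no expected outcome, `missing_fields()` returns `["steps[1].expected"]`.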

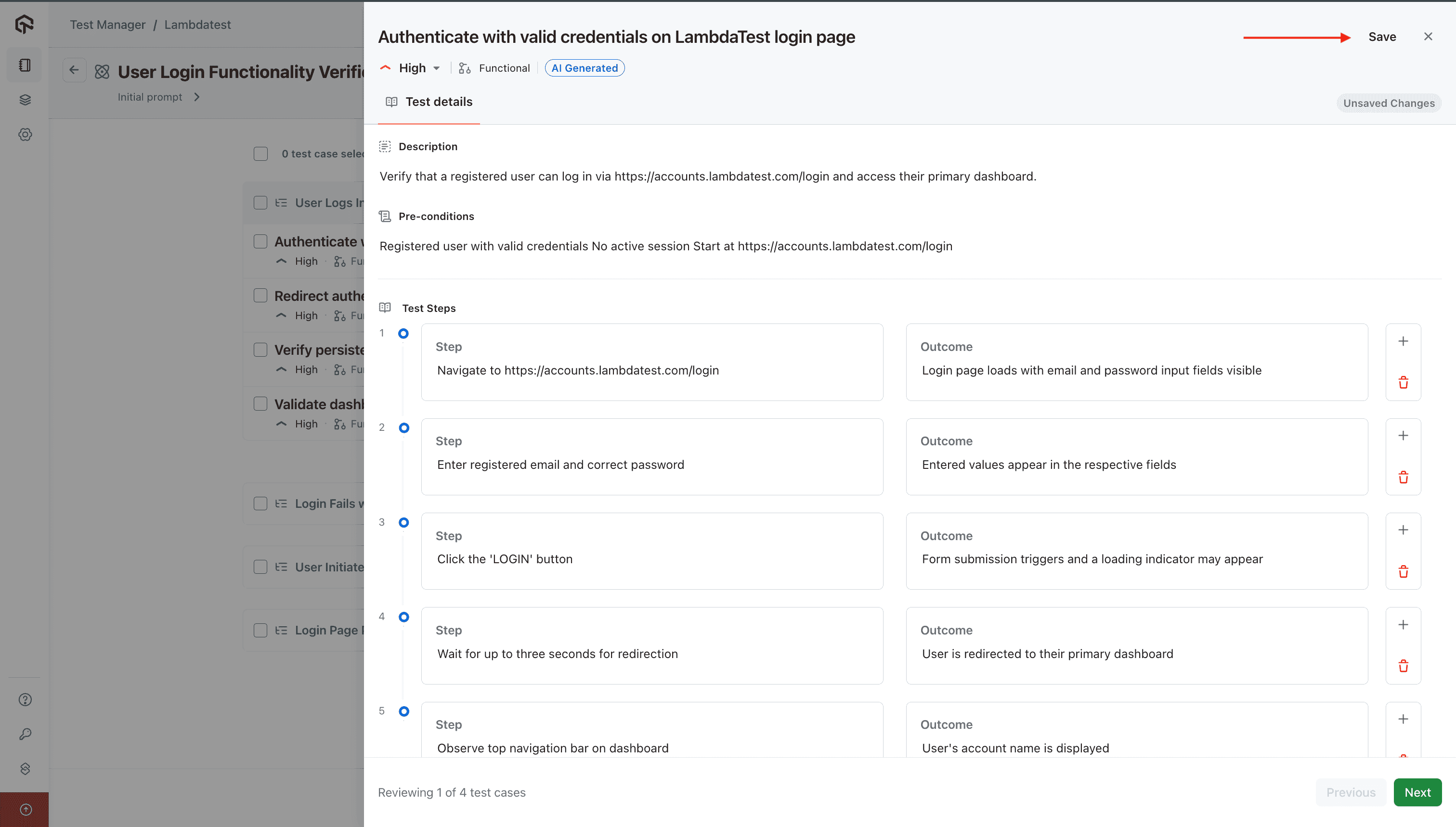

You can edit any part of a test case to add context or align it with your test strategy.

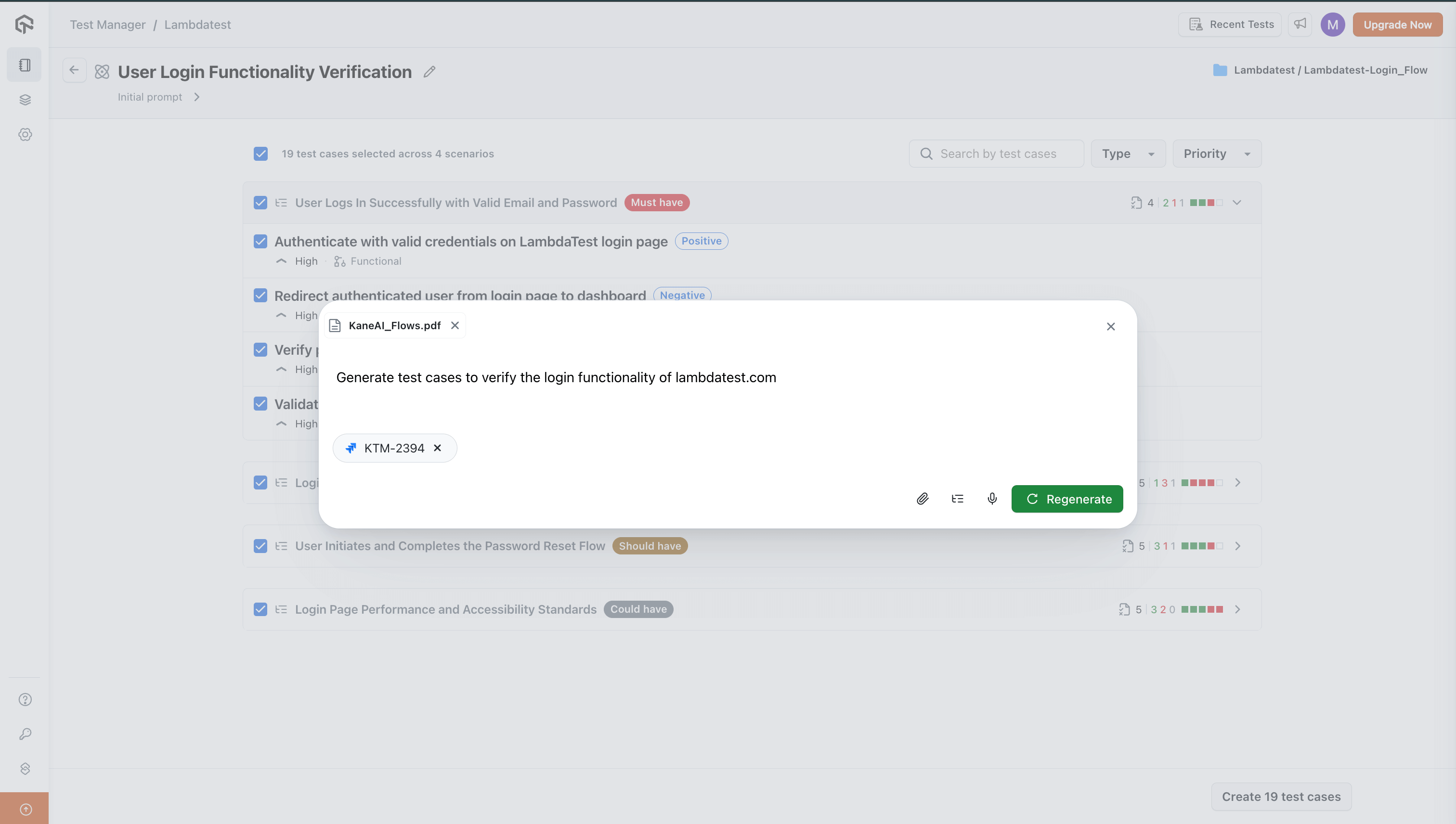

Step 6: Edit and Regenerate

Want to try a different approach or add missing information?

You can edit your original input and regenerate a new set of test cases. This allows you to experiment and fine-tune the output before saving.

When you regenerate, the previously generated test cases and scenarios are not retained.

Watch the thinking tokens during the Agent’s reasoning phase; they show how your input is being interpreted. Use this insight to refine your input and improve future test case generation.

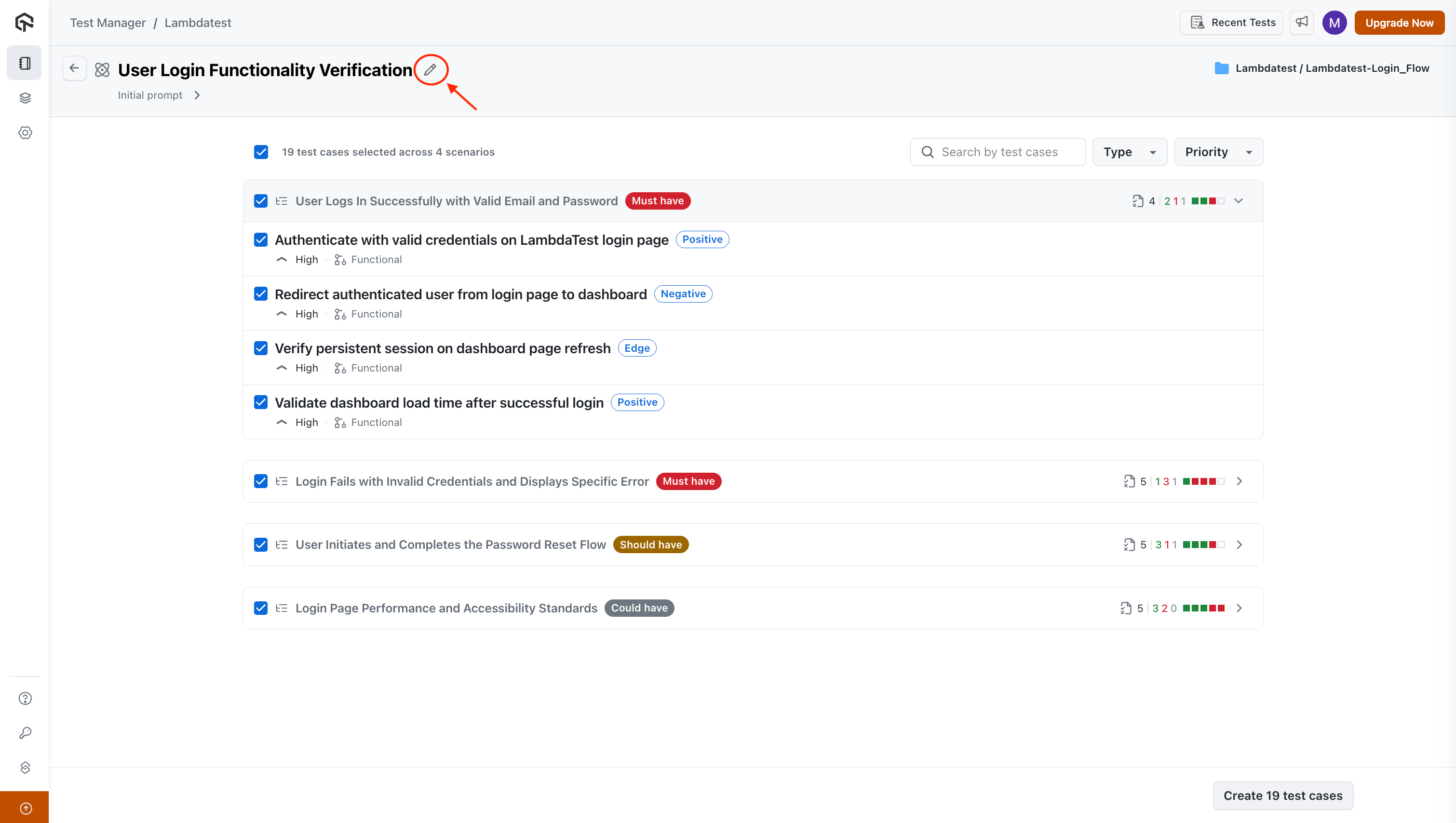

To edit, click on the pencil icon on the Summary listing page as shown below:

Click on regenerate after updating the input.

Step 7: Select and Save Test Cases

Once you are satisfied, select the test cases you want to keep and save them directly to your Test Case Repository in LambdaTest Test Manager. Saved test cases can then be assigned to test runs, shared with your team, or used in manual or automated test planning.