Power Your Software Testing with AI and Cloud

Supercharge QA with AI for Faster & Smarter Software Testing

- Automation Testing

- Home

- /

- Learning Hub

- /

- AI Observability

What is AI Observability In Software Engineering( 2025)

Learn what AI observability is, why it matters in 2025. Explore tools, benefits, and practices for building reliable and trustworthy GenAI & Agentic systems.

Last Modified on: September 26, 2025

- Share:

In software engineering, observability is about collecting information from a system, like logs, metrics, and traces, so teams can clearly see how the software is running and spot issues.

But when it comes to AI, traditional observability falls short. Models are non-deterministic, producing different outputs for the same input.

AI observability is the ability to monitor, understand, and explain the behavior of AI systems across their lifecycle, covering inputs, model internals, outputs, performance, cost, and risks, so teams can detect anomalies, trace root causes, and ensure trustworthy outcomes.

Overview

AI observability is the practice of monitoring, tracing, and analyzing AI systems to understand their behavior, detect failures, and ensure reliable, transparent, and trustworthy outcomes.

Key Components of AI Observability:

- Input Monitoring: Validate schema, fields, drift, and anomalies in live data.

- Output Correctness: Measure semantic quality, hallucinations, bias, and misleading outputs.

- Performance Metrics: Track response time, resource consumption, and token usage.

- Model Versioning: Record lineage, compare versions, and prevent regressions.

Key Benefits of AI Observability:

- Improved Reliability: Detect silent failures before they impact end-users.

- Increased Trust: Ensure AI decisions are explainable and auditable.

- Better Compliance: Capture lineage and evidence for regulatory requirements.

- Operational Efficiency: Reduce debugging time and control infrastructure costs.

AI Observability for Agentic Workflows & GenAI Systems:

Agentic workflows add complexity with multi-agent handoffs, tool calls, and non-deterministic paths. Observability tracks prompts, decisions, and outputs end-to-end, ensuring agents remain reliable, auditable, and free from drift, hallucinations, or context loss.

What is AI Observability

AI Observability is the ability to monitor, analyze, and explain the inner workings of AI systems across their lifecycle. Unlike traditional observability, which focuses mainly on logs, metrics, and traces, modern AI observability adds AI-specific signals such as model drift, bias, hallucinations, prompt behavior, and cost metrics.

In simple terms, it gives teams the visibility they need to answer not only “is the system running?” but also “is the AI making the right decisions for the right reasons?”

This visibility helps teams ensure that AI models are accurate, reliable, and aligned with business and compliance goals.

Advanced AI observability has been benchmarked to cut downtime costs by 90% and speed up product launches by 60%.

Key Benefits of AI Observability

AI brings challenges that traditional monitoring can’t catch. Models may give different results for the same input, lose accuracy over time due to data drift, or generate false outputs.

GenAI and AI agents add risks like prompt injections, inconsistent behavior, and rising costs. Without observability, these issues often stay hidden until they harm users or the business.

AI observability solves these problems by making the system transparent:

- Improved Quality & Reliability: Detect and fix hallucinations, drift, and regressions before they affect users.

- Faster Root Cause Analysis: Pinpoint exactly where and why failures happen, whether in input data, model logic, or external integrations.

- Better Compliance & Governance: Ensure fairness, bias checks, and explainability for regulatory requirements.

- Cost & Performance Optimization: Monitor token usage, compute resources, and latency to balance efficiency with speed.

- Stronger Security & Trust: Catch adversarial prompts, misuse in AI agents, or data privacy risks in real time.

- Continuous Improvement: Use observability insights to refine prompts, retrain models, and adapt systems to real-world changes.

What Is AI Test Observability & Why It Matters

Test observability is the practice of capturing detailed insights from test executions, going beyond simple pass or fail results.

Instead of just knowing whether a test broke, teams can understand why it broke, where it failed, and what interactions led to the issue.

In practice, test observability means transporting the same signals used in production, such as logs, traces, and metrics, into the testing process.

- Database calls made during execution

- External API requests and responses

- Feature flag toggles triggered

- Model embeddings or prompt chains generated by AI systems

This level of visibility is especially important for AI applications, and these are non-deterministic and prone to issues such as data drift, prompt regression, hallucinations, or inconsistent agent behavior.

Classic testing approaches can miss these subtle failures because they often only validate expected outputs.

The key difference is simple:

- Traditional testing tells you what failed.

- AI test observability tells you why it failed and how to fix it.

Critical Components of AI Observability and Testing

Building reliable AI systems requires observability that goes deeper than infrastructure metrics. Both AI observability and test observability depend on capturing rich telemetry signals that reveal how models behave under different conditions.

The following components form the foundation:

- Input Monitoring: Validate schema, fields, drift, and outliers to prevent silent failures when production data shifts unexpectedly.

- Output Correctness: Detect hallucinations, incoherence, or bias; use semantic scoring and feedback to ensure reliable, meaningful responses.

- Performance Metrics: Track latency, throughput, GPU/CPU usage, and token costs to optimize performance and control budget overruns.

- Model Versioning: Record model lineage, track versions, and compare performance to prevent regressions and ensure transparent system behavior.

- Agent & Prompt Tracking: Capture prompts, retries, decisions, and tool calls to detect regressions, prompt injections, or failed workflows.

- Test Instrumentation: Enrich QA pipelines with logs, traces, and metrics, flagging flaky tests and drift for faster debugging.

How to Architect and Implement AI Observability

Designing AI observability isn’t just about picking a tool; it’s about building the right architecture that can scale from early tests to full production systems.

Let’s break it down:

1. Minimal Viable AI Observability Stack

Every team, no matter the size, can start small. A minimal stack typically includes:

- Data Monitoring Layer: Tracks data freshness, drift, and anomalies.

- Model Monitoring Layer: Captures performance metrics (accuracy, drift, latency, hallucinations).

- Agent Workflow Tracking: Logs prompts, tool calls, and reasoning paths for GenAI agents.

- Dashboard & Alerting: Centralized visibility with thresholds and anomaly alerts.

- Test Observability Hooks: Observability embedded into test pipelines for CI/CD.

Example Setup: A QA team using OpenTelemetry for logs, Prometheus for metrics, and Grafana dashboards to observe test runs before deploying to production.

2. Instrumentation: Logs, Traces, Events, Telemetry

Instrumentation is the backbone of observability. For AI systems, it means going beyond traditional logging.

- Logs: Capture inputs, outputs, errors, and hallucinations during test and production runs.

- Traces: Map multi-step reasoning in GenAI agents (prompt → tool → response).

- Events: Record significant state changes (e.g., data drift detected, fairness violation).

- Telemetry: Continuous signals like latency, throughput, GPU/CPU usage, and cost per inference.

Best Practice: Tag logs and traces with test IDs during CI/CD runs so failures can be correlated with observability data instantly.

3. Tools, Frameworks, Open Source vs Proprietary

Choosing the right mix depends on budget, compliance needs, and scale.

- Open Source

- OpenTelemetry: Standard for traces, logs, and metrics collection.

- Prometheus + Grafana: Metrics storage and visualization.

- WhyLabs, Evidently, Arize (OSS variants): Model monitoring and drift detection.

- Proprietary / Enterprise

- Dynatrace, New Relic, Datadog: Full-stack observability with AI insights.

- Arize AI, Fiddler AI, Weights & Biases: Specialized ML/LLM observability platforms.

- LambdaTest HyperExecute: AI native test intelligence extends observability into test automation pipelines.

Tip: Start with OSS for cost efficiency. As workloads scale or compliance becomes critical, layer enterprise tools.

4. Embedding Observability in CI/CD / Test Pipelines

This is where most competitors stop short, but it’s where teams gain the biggest quality wins.

- Step 1: Instrument Tests: Add logging, traces, and telemetry collection inside test cases.

- Step 2: Run in CI/CD: Connect test runs to observability backends (e.g., HyperExecute streaming logs to Grafana).

- Step 3: Monitor Flaky Outputs: Detect non-deterministic failures or regression drift early.

- Step 4: Feedback Loop: Feed production anomalies back into regression test suites.

Example: An LLM-powered chatbot is tested in CI/CD. During test runs, observability detects that latency doubles when prompts exceed 500 tokens. That insight helps teams optimize before going live.

Common Failure Modes & Risks Unique to AI Systems

AI systems don’t always fail like traditional software. Instead of throwing errors, they often produce wrong or unpredictable results that can go unnoticed.

This is why AI observability is so important. Below are the most common risks teams need to track.

1. Data Drift & Concept Drift

The data your model sees in production changes over time. Inputs may look different (data drift) or their meaning changes (concept drift).

- Why it matters: Even accurate models can lose performance silently as real-world data evolves.

- Example: A retail demand forecast trained on last year’s patterns may mispredict sales after a major event (like COVID-19). Observability helps detect these shifts early.

2. Model Regression & Prompt Regression

A new model version or a prompt change works worse in some cases, even if overall metrics improve.

- Why it matters: Regressions can quietly break business-critical workflows.

- Example: An updated chatbot answers general questions better but starts failing on refund-related queries. Test observability catches the regression by comparing outputs across versions.

3. Hallucinations & Misleading Outputs

Generative AI produces fluent but factually wrong answers.

- Why it matters: Hallucinations damage user trust and may cause compliance or legal risks.

- Example: A financial assistant confidently invents non-existent stock data. With Gen AI observability, these cases can be flagged during testing.

4. Bias & Fairness Issues

AI models may give results that are unfair to certain groups because of biased training data.

- Why it matters: Biased outputs harm users, reputations, and may violate regulations.

- Example: A hiring model ranks resumes differently based on gender. With observability, QA teams can test demographic slices and uncover bias early.

5. Performance Degradation & Latency

Models slow down, consume more compute, or fail under heavy load.

- Why it matters: Latency and downtime hurt user experience and increase costs.

- Example: An LLM responds in 2 seconds in testing but slows to 10 seconds during peak traffic. Observability surfaces these spikes so teams can fix scaling issues.

6. Agent & Orchestration Failures

Multi-step AI agents fail to complete workflows or call tools in the wrong order.

- Why it matters: Most real-world AI failures come from orchestration, not the model itself.

- Example: A travel-booking agent searches for flights but never confirms the booking. AI agent observability traces each step, making it clear where the workflow broke.

7. Security & Privacy Risks

Attacks like prompt injection or accidental leaks of sensitive data (PII).

- Why it matters: Security and privacy failures can cause compliance violations (GDPR, HIPAA) and major reputational damage.

- Example: A malicious user enters a crafted prompt to reveal hidden system instructions. Test observability simulates such attacks before they reach production.

AI Observability for Agentic Workflows & GenAI Systems

GenAI is shifting from static models to autonomous agent workflows, adopted across startups and enterprises.

Powering use cases like AI chatbots in customer support, lead qualification in sales, IT ticket resolution, virtual care in healthcare, fraud monitoring in finance, and shopping assistants in e-commerce, and so much more.

Wherever these agents interact, with humans or other agents, they must remain observable, auditable, and reliable

And as discussed in the previous section, these AI systems don't fail like traditional software; they often exhibit unpredictable behaviors, a lack of context awareness, hallucinations, misleading outputs, and gaps in testing coverage, making observability essential for trust and safety.

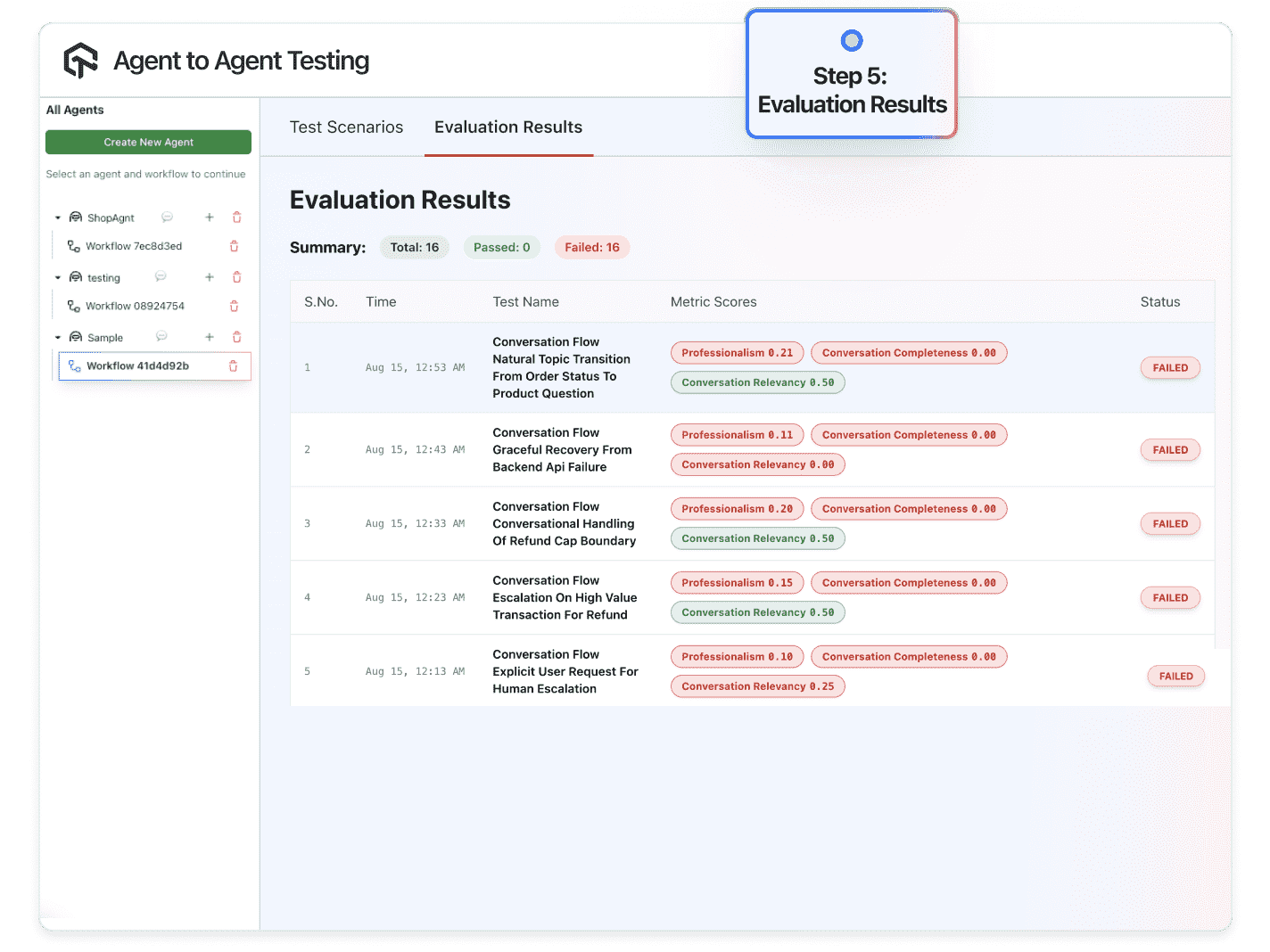

AI Observability, like LambdaTest’s Agent-to-Agent Testing, goes beyond just running test cases. It insights into how GenAI agents perform across conversations, ensuring reliability and trust at scale. By scoring and monitoring interactions on dimensions such as:

- Professionalism: Tracks tone, politeness, and adherence to expected communication standards.

- Conversation Completeness: Detects unfinished or abruptly ended conversations, signaling gaps in agent logic or memory.

- Conversation Relevancy: Measures how well responses align with user intent and context, surfacing issues like drift or hallucination.

These metrics act as observability signals for teams. They highlight weak spots in AI agents, allow continuous fine-tuning, and make it easier to detect failures early, before they impact customers or workflows.

Emerging Trends & The Future of AI Observability

The next wave of observability is not about dashboards; it is about making AI systems explainable, auditable, and aligned with organizational intent:

1. From Model Monitoring to Agent Observability

AI is shifting from static models to agentic ecosystems, where multiple AI agents interact with each other, external tools, and APIs. This creates emergent complexity that traditional observability cannot capture.

Future observability will need to map the full lifecycle of agent reasoning, ensuring every decision is traceable and auditable. The question will no longer be “Did the model run?” but “Did the network of agents collaborate as intended, and can we prove it?”

2. From Input-Output to Intent-Outcome Alignment

Most monitoring today focuses on inputs and outputs. But AI failures often occur when outputs technically look “right” but fail to meet the underlying intent. Tomorrow’s observability must evolve to measure business alignment, not just technical correctness.

This means linking AI responses to real outcomes, resolution of a customer issue, compliance with policy, or alignment with strategic KPIs. Observability will become the mechanism that verifies whether AI is creating measurable value.

3. Observability as the Backbone of Responsible AI

With the rise of the EU AI Act, SEC guidelines, and sector-specific mandates in finance and healthcare, explainability will become a non-negotiable observability feature.

Organizations will be expected to produce audit-ready trails of every decision: which data influenced it, which model version was used, and why a specific outcome was generated.

Beyond compliance, explainability will evolve into a trust currency, the difference between AI systems that are adopted and those that are rejected by users, regulators, and boards.

4. Real-Time Observability as a Strategic Differentiator

In AI-driven systems, problems compound in seconds, not days. Drift, bias, and hallucinations can damage trust faster than teams can respond with traditional tools. The future lies in real-time, continuous observability, where issues are detected and addressed instantly.

This transforms observability from a safety net into a competitive differentiator; organizations that can course-correct in real time will outpace those that cannot.

Conclusion

The future of observability is not just about watching logs or dashboards; it’s about governing AI systems with transparency, accountability, and real-time adaptability.

Organizations that invest in AI observability today will not only safeguard against risks, but they will also gain a strategic edge, accelerating innovation while earning the trust of customers, regulators, and stakeholders.

In an era defined by GenAI and autonomous agents, observability becomes the ultimate differentiator between AI that simply works and AI that truly delivers.

On This Page

- Overview

- What is AI Observability

- Key Benefits of AI Observability

- What Is AI Test Observability & Why It Matters

- Critical Components of AI Observability and Testing

- How to Architect and Implement AI Observability

- Common Failure Modes & Risks Unique to AI Systems

- AI Observability for Agentic Workflows & GenAI Systems

- Emerging Trends & The Future of AI Observability

- Frequently Asked Questions (FAQs)

Frequently Asked Questions (FAQs)

Did you find this page helpful?

More Related Hubs

Start your journey with LambdaTest

Get 100 minutes of automation test minutes FREE!!