Quality Engineering Using Human & Machine Interactive: Chaitanya Kolar [Testμ 2022]

LambdaTest

Posted On: August 25, 2022


On this beautiful evening, Mudit Singh, Marketing Head at LambdaTest, welcomed Chaitanya Kolar, Managing Director at Deloitte, to discuss how humans and machines can interact to deliver quality engineering.

His expertise includes leading the quality engineering practice and specialized testing initiatives for clients, especially around test automation, performance and resilience, service virtualization, test engineering, and test data management.

In this session, they discussed enabling quality engineering functions by adopting artificial intelligence, specifically how machines and humans can work together.

Chaitanya started by describing how panic spread among testers when automated tests started replacing manual tests, and how things became even more complicated when AI entered the picture.

Chaitanya strongly believes that AI is here to stay, and that testers’ jobs will become more interesting in the coming years.

In his view, although AI already existed in the 1960s and 1970s, there was not enough data for it to evolve significantly. Today, with so much data available, you can build a lot more. He emphasized that we are gradually reaching a stage where humans cannot work alone but need machines to augment their thinking.

“Tester’s role will change when you funnel all of the AI around us to our daily ways of working. You will see that. Even a tester will start doing things very differently because now he has a support system,” he said.

Many people use AI for test coverage and optimization. Deloitte, the biggest professional services firm, has also seen many people leverage AI for reporting and analytics. Deloitte conducted a survey on AI, and he shared the results.

When asked where they expect cognitive technologies to have the biggest impact on QE/software testing practice, the respondents replied as follows (multiple answers were allowed):

- 30% said requirement analysis and test design.

- 53% said better coverage and optimization.

- 56% said better test data.

- 68% said better reporting and analytics.

When asked where they are applying cognitive technologies in quality engineering/software testing practice, they replied:

- 51% find it useful for generating test data.

- 49% find it helps generate insights for optimizing and prioritizing test scenarios based on production data.

- 32% are still exploring this area.

When asked, “What challenges do you see in implementing AI/ML?”, the audience answered:

- 55% cited a lack of skilled resources.

- 50% cited a lack of suitable tools and platforms.

He mentioned that typical AI test use cases include testing AI applications, using AI to automate the testing life cycle, and correlating voice of the customer (VOC) data with testing, for example by analyzing customer feedback from social media.

Speaking about how testing AI systems differs from testing traditional apps, Chaitanya said there would be significant changes in requirement gathering, data and code validation, testing the model for accuracy, and end-to-end testing.

He said there would be no changes in areas such as change management, domain capability, system integration, regulatory submission, and risk assessment.

“Traditional systems are deterministic. But AI is a moving goalpost with a range of possible outcomes,” he said.
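To make the contrast concrete, here is a minimal sketch, not taken from the session, of how a check changes when the system under test is no longer deterministic. The names `add_tax`, `predict_score`, and `within_tolerance` are hypothetical, and `predict_score` is only a toy stand-in for an AI model whose output varies from run to run. The traditional function gets a single exact assertion, while the model is sampled several times and accepted only if every outcome stays inside an agreed tolerance band.

```python
import random
import statistics


def add_tax(price: float) -> float:
    """A deterministic, traditional function: the same input always gives the same output."""
    return round(price * 1.2, 2)


def predict_score(features: list[float], seed: int) -> float:
    """Hypothetical stand-in for an AI model whose output varies slightly between runs."""
    rng = random.Random(seed)
    base = sum(features) / len(features)
    return base + rng.uniform(-0.05, 0.05)


def within_tolerance(values: list[float], expected: float, tolerance: float) -> bool:
    """Accept only if every observed output falls within the agreed tolerance band."""
    return all(abs(v - expected) <= tolerance for v in values)


# Traditional test: one exact expected value.
assert add_tax(10.0) == 12.0

# AI test: sample several runs and check the range of outcomes instead of one value.
runs = [predict_score([0.6, 0.8, 0.7], seed=s) for s in range(20)]
print(f"min={min(runs):.3f} max={max(runs):.3f} mean={statistics.mean(runs):.3f}")
print(within_tolerance(runs, expected=0.7, tolerance=0.1))
```

The tolerance of 0.1 is an arbitrary illustrative value; in practice it would come from the boundaries the product team agrees to accept.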

The primary areas he mentioned for AI-infused applications include:

- Mechanical & Electrical

- Information Processing

- Machine Intelligence

- Business Impact

- Social Impact

He also spoke about how traditional testing methods would need to change and adapt for each of these areas.

He suggested that during the requirement-gathering phase, teams need to understand which AI model they will implement and what boundaries and tolerances the product managers will accept, based on their expectations. Because models have to be trained and then tested, you suddenly need to think about two separate data sets: one for training and another for testing.
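The idea of separate training and testing data sets, checked against a tolerance agreed with product managers, can be sketched as follows. This is an illustrative example under stated assumptions, not the approach presented in the session: the data is synthetic, the "model" is a toy threshold classifier, and `MIN_ACCURACY`, `train_test_split`, `train_threshold`, and `accuracy` are hypothetical names. Only the Python standard library is used.

```python
import random

# Hypothetical acceptance boundary agreed with product managers during requirement gathering.
MIN_ACCURACY = 0.8


def train_test_split(rows, test_ratio=0.25, seed=42):
    """Shuffle a copy of the data and split it into disjoint training and testing sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]


def train_threshold(train):
    """Toy 'model': the midpoint between the mean score of each class in the training set."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, test):
    """Fraction of held-out rows that the threshold classifies correctly."""
    correct = sum(1 for x, y in test if (x >= threshold) == (y == 1))
    return correct / len(test)


# Synthetic labelled data: class 1 tends to score higher than class 0.
data_rng = random.Random(0)
data = [(data_rng.gauss(0.7, 0.1), 1) for _ in range(100)] + [
    (data_rng.gauss(0.3, 0.1), 0) for _ in range(100)
]

train, test = train_test_split(data)
threshold = train_threshold(train)  # learned only from the training set
score = accuracy(threshold, test)  # evaluated only on data the model never saw
print(f"threshold={threshold:.2f} test accuracy={score:.2f}")
print("accept" if score >= MIN_ACCURACY else "reject")
```

Keeping the test rows out of training is what makes the accuracy figure a fair check against the agreed boundary; evaluating on the training data would overstate how well the model generalizes.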

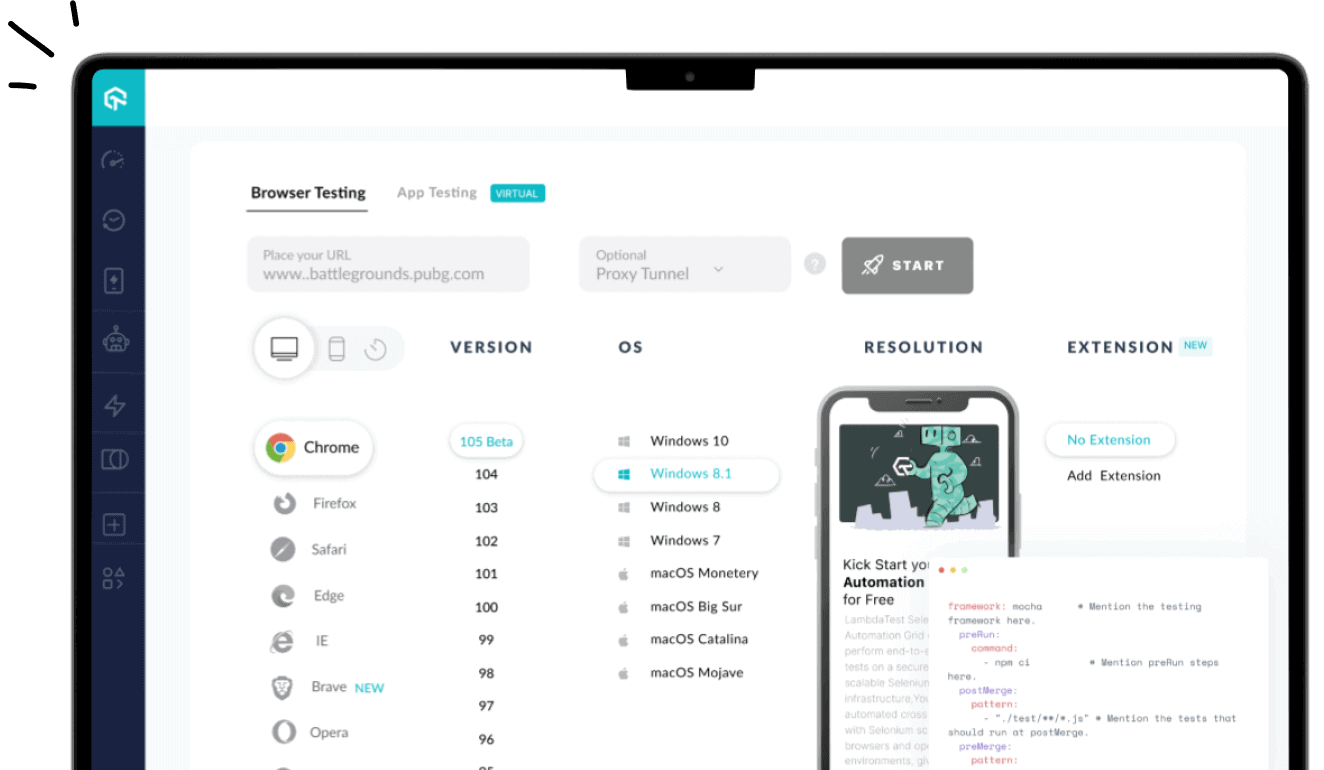

He explained how to build solutions that can autonomously perform all testing activities, from understanding requirements to generating reports. Using JIRA, you can collect requirements from different sources, and with the help of design, automation, execution, and reporting tools, you can quickly build models based on those requirements.

You can also use Selenium to automate your processes and digital logs to collect feedback.

While building these AI-based models, you can point testers to bugs and errors that need to be fixed, such as slowness in the system. You can also look at when your customers are active on your web app and how they interact with your brand on the Google Play Store and Apple App Store. You can then use AI to correlate these different data points and provide a better customer experience.

He spoke about use cases he has delivered for different clients, enabling test engineers, test leads, test managers, directors, senior directors, CEOs, and CXOs to understand both internal and external feedback. He added that this depends on many factors.

When asked about the maturity of AI tooling in the testing space, he was optimistic: there are many AI tools to help testers at every stage of testing. However, he cautioned that this optimism will not pay off unless you train the tools. With that training, you will probably have a highly capable AI tool in 6 to 8 weeks, or perhaps 15 weeks. He added that it is up to the user to use it efficiently, and suggested that testers learn the basics of data science, since that would help them build their own tools. Over time, the senior leadership team can help you secure the right budget.

Mudit asked him what strategy should be adopted to test an AI-based application, and whether teams could depend only on codeless testing tools. He said no: such tools won’t work unless they are trained. He said that the testing strategy should always convince the traditional world. He noted that if you have AI applications, you will have to rework your strategy starting from requirement analysis, assess how large the gap is, and research thoroughly to close it.

It was an extremely interesting session!

We were extremely happy to have him at Testμ 2022.

Testμ Conference 2022 was a success, with thousands of testers, QA professionals, and developers from around the world joining together to discuss the future of testing.

The testing revolution is happening, and you don’t want to be left behind. Join us at LambdaTest Testμ Conference 2023 and learn how to stay ahead of the curve. Register now and be a part of the revolution.
