110+ Docker Interview Questions & Answer Guide for 2025

Crack your next interview with these 110+ Docker interview questions and answers, covering fundamentals, CI/CD, security, and real-world use cases for 2025.

Last Modified on: September 26, 2025

Docker plays a central role in modern software delivery. From streamlining development environments to powering large-scale microservices and CI/CD pipelines, it has become a foundational tool across DevOps and cloud engineering teams.

This collection of 110+ Docker interview questions and answers is structured to help you showcase both technical depth and practical thinking. Topics range from core Docker concepts to advanced production usage, including container orchestration, security, networking, and CI/CD integration. It's designed for developers, DevOps engineers, and platform teams preparing for interviews in 2025 and beyond.

Overview

Docker is a platform that simplifies the process of building, running, and shipping applications in containers. This guide covers over 110 curated interview questions across various levels, from freshers to experienced DevOps professionals, categorized by topic for structured preparation.

Docker Basics Interview Questions for Freshers

- What is Docker, and how is it different from a virtual machine?

- What are the main components of Docker architecture?

- How do you install Docker on Linux or Windows?

Docker Images and Containers Interview Questions

- What is the difference between a Docker image and a Docker container?

- How do you list all running and stopped containers in Docker?

- What happens when you run docker run?

Dockerfile and Image Optimization Interview Questions

- What is a Dockerfile, and how does it work?

- How can you reduce the size of a Docker image?

- What is the difference between CMD and ENTRYPOINT in a Dockerfile?

Docker Networking Interview Questions

- What types of Docker networks are available?

- How do containers communicate with each other by default?

- What is port forwarding in Docker?

Docker Volumes and Storage Interview Questions

- What are Docker volumes, and why are they used?

- What is the difference between a bind mount and a named volume?

- How can you back up data from a Docker volume?

Docker Compose and Orchestration Interview Questions

- What is Docker Compose, and how does it help in managing multi-container applications?

- How do you scale services using Docker Compose?

- What is Docker Stack, and how does it relate to Compose?

Docker Security Interview Questions

- Why is it unsafe to run containers as root?

- What is the role of seccomp and AppArmor in Docker security?

- How does Docker Content Trust (DCT) enhance image integrity?

Docker in CI/CD Pipelines Interview Questions

- How do you use Docker in Jenkins or GitLab CI pipelines?

- What are the benefits of using Docker for test automation in CI/CD?

- How can you optimize image build and caching in a CI/CD workflow?

Real-World Scenario-Based Questions

- You notice your container is consuming too much memory. How would you troubleshoot and fix it?

- How would you set up persistent storage for a containerized database?

- What steps would you take to secure a Dockerized production environment?

Docker Interview Questions for Experienced

- How do Linux namespaces and cgroups contribute to container isolation?

- What is user namespace remapping, and how does it improve security?

- How do you manage Docker resources in large-scale, multi-host production environments?

Docker Basics Interview Questions for Freshers

1. What is Docker, and why is it useful?

Docker is an open-source tool that packages applications and their dependencies into containers, ensuring they run consistently across different environments. It is lightweight, efficient, and ideal for microservices, scalable deployments, and CI/CD pipelines, eliminating the “it works on my machine” problem.

2. What is a Docker container?

A Docker container is a runtime instance of a Docker image. It’s the running, isolated process that holds an application and all its dependencies. Containers share the host OS kernel but have their own file system, network stack, and process space, thanks to Linux kernel features (namespaces and control groups).

In practical terms, a container is what you get when you run a Docker image. Containers are portable and consistent, meaning a containerized app will behave the same on any host (development, test, or production) as long as Docker is available.

3. What is a Docker image?

A Docker image is a read-only template that contains everything needed to run an application, including code, libraries, and settings. It acts like a blueprint for containers. Built from layers using a Dockerfile, images are immutable and tagged for versioning. They can be shared through registries like Docker Hub for easy distribution.

4. What’s the difference between a Docker image and a container?

A Docker image is a read-only template with the app and its environment, like a recipe. A container is the running instance of that image, with a writable layer on top. You can run many containers from one image, and changes in containers don’t affect the original image. To save a container’s state, you can create a new image, though most images are built using Dockerfiles.

5. How does Docker compare to a virtual machine (VM)?

VMs run full operating systems with their own kernels, making them heavier and slower to start. Docker containers share the host OS kernel, isolating only the app environment, which makes them lightweight and fast. VMs provide stronger isolation, but containers are more efficient and ideal for high-density deployments.

6. What are the main components of Docker’s architecture?

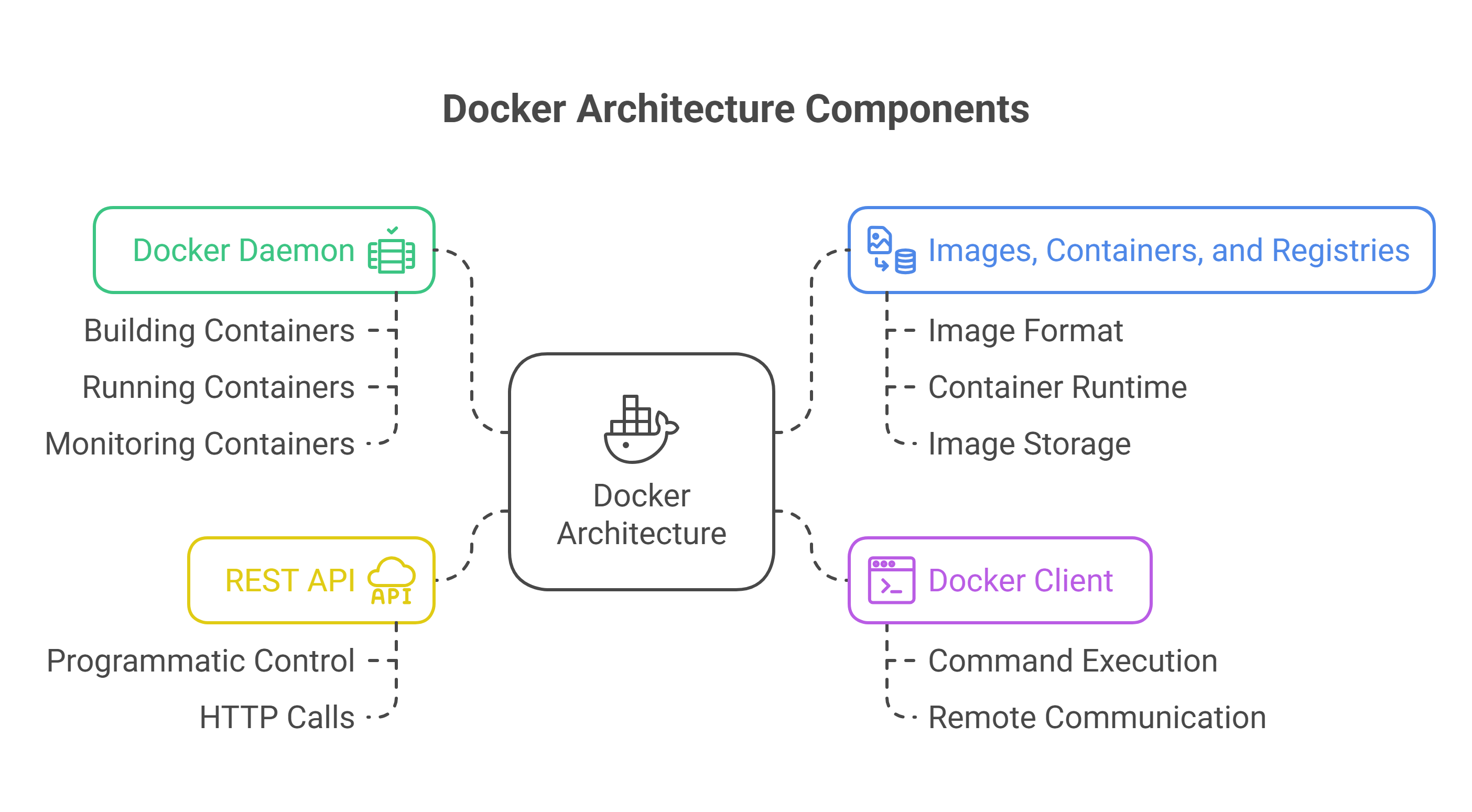

Docker follows a client-server architecture comprising:

- Docker Daemon (dockerd) – the server process that does the heavy lifting of building, running, and monitoring containers. It runs on the host (typically as root) and exposes a REST API.

- Docker Client (CLI) – the command-line tool (docker) that users interact with. When you run Docker commands, the CLI sends them to the Docker daemon’s API. The CLI can run on the same host as the daemon or communicate with it remotely.

- REST API – Docker’s remote API that the CLI and other tools use to communicate with the daemon. It allows you to control Docker programmatically or via HTTP calls.

- Images, Containers, and Registries – These are not processes but core concepts in the architecture. The Image format (with a layered filesystem) and Container runtime are handled by the daemon (often leveraging containerd and runc under the hood – see advanced topics). Registries (like Docker Hub) store images; the daemon interacts with registries to pull/push images as needed.

7. What is Docker Hub (or a Docker registry)?

A Docker registry is a storage and distribution system for Docker images. Docker Hub is the most popular public registry, hosting official and community images. When you run a command like docker pull ubuntu, the image is fetched from Docker Hub. You can also push your own images there. Other options include private registries or services like GitHub Container Registry or AWS ECR.

8. How do you run a Docker container?

docker run creates and starts a container from a specified image. For example, docker run hello-world runs the "hello-world" image. Common options include:

- -d: run in background

- -it: interactive mode with terminal

- --name: set a custom name

- -p: map ports

- -v: mount volumes

It creates a writable layer, sets up networking, and runs the default or specified command. If no image is found locally, it pulls from Docker Hub.

9. What is the difference between docker run and docker start?

docker run creates and starts a new container from an image, generating a new container ID each time. docker start restarts an existing stopped container without creating a new one. Use docker stop or docker kill to halt a container, and docker start to resume it later. Containers persist after stopping unless removed with docker rm or run with --rm.

10. How can you see what containers and images are on your system?

Docker provides commands to list containers and images:

- Use docker ps to list running containers; docker ps -a shows all, including stopped ones.

- Use docker images or docker image ls to list available images.

- docker inspect <name_or_id> gives detailed info.

- docker history <image> shows image layers.

- docker volume ls and docker network ls list volumes and networks.

11. How do you remove Docker containers and images?

Use docker rm to delete stopped containers, or docker rm -f to force-remove running ones. To delete images, use docker rmi, which works only if no containers are using them. For cleanup, docker system prune removes stopped containers, unused networks, dangling images, and build cache; adding -a also removes every image that no container uses. Use it with care, especially in production.

12. What is container “exit code 0” (or other exit codes)?

When a Docker container stops, it returns an exit code. Code 0 means success, while non-zero codes indicate errors (e.g., 137 for forced stop, 139 for segmentation fault). You can view exit codes with docker ps -a. These codes help in debugging and are used by tools to decide on container restarts.
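The codes above follow a common shell convention: a value above 128 usually means the main process was ended by a signal, with the signal number being the code minus 128. The helper below is a minimal sketch of that decoding. The function name and the small signal table are illustrative assumptions, not part of Docker, and the signal numbers are the usual Linux values.

```python
# Conventional Linux signal numbers (assumed; only a few common ones listed).
SIGNAL_NAMES = {2: "SIGINT", 9: "SIGKILL", 11: "SIGSEGV", 15: "SIGTERM"}


def describe_exit_code(code: int) -> str:
    """Return a short, human-readable reading of a container exit code."""
    if code == 0:
        return "success"
    if code > 128:
        # Convention: 128 + N means the process was terminated by signal N.
        signal_number = code - 128
        name = SIGNAL_NAMES.get(signal_number, f"signal {signal_number}")
        return f"terminated by {name}"
    return f"application error (exit status {code})"


if __name__ == "__main__":
    for code in (0, 1, 137, 139, 143):
        print(code, "->", describe_exit_code(code))
```

Read this way, 137 is 128 + 9 (SIGKILL, often a forced stop or an out-of-memory kill) and 139 is 128 + 11 (SIGSEGV).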

13. What is a Dockerfile?

A Dockerfile is a text file with step-by-step instructions to build a Docker image. It defines the base image, app files, dependencies, environment settings, and startup commands. Running docker build processes the Dockerfile to create an image. It ensures consistency, automation, and supports best practices like multi-stage builds.

Docker Images and Containers Interview Questions

14. How are Docker images layered, and why does it matter?

Docker images are built in layers. Instructions that change the filesystem (such as RUN, COPY, and ADD) each create a new read-only layer on top of the base image set by FROM, while instructions such as ENV or CMD only add metadata. These layers are stacked using a union file system and cached for reuse, improving efficiency. When a container runs, Docker adds a writable top layer for runtime changes.

Layering enables faster builds, smaller updates, and shared base layers across images, saving time and storage. For example, if only one layer changes in a 5-layer image, Docker pushes just that layer, making deployments faster and more bandwidth-efficient. Ordering instructions wisely helps optimize build caching.
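To make the "push only the changed layer" idea concrete, here is a toy model in which each layer is identified by the SHA-256 hash of its content. It is a simplified sketch: the function names are hypothetical, and real layer identifiers are computed over layer archives rather than short byte strings.

```python
import hashlib


def layer_id(content: bytes) -> str:
    """Identify a layer by the SHA-256 of its content (content addressing)."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def layers_to_push(image_layers: list[bytes], registry_has: set[str]) -> list[str]:
    """Return IDs of layers the registry does not already store, in image order."""
    return [lid for lid in map(layer_id, image_layers) if lid not in registry_has]


if __name__ == "__main__":
    v1 = [b"base os", b"system packages", b"dependencies", b"app code v1", b"config"]
    v2 = [b"base os", b"system packages", b"dependencies", b"app code v2", b"config"]
    registry = {layer_id(layer) for layer in v1}  # v1 was pushed earlier
    missing = layers_to_push(v2, registry)
    print(f"{len(missing)} of {len(v2)} layers need uploading")
```

Because unchanged layers hash to the same identifier, the registry already has them and only the layer holding the new application code is uploaded.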

15. What is a Docker tag?

A Docker tag is a label used to identify different versions of an image, like nginx:1.25 or myapp:1.0. If no tag is specified, Docker uses :latest by default, though this doesn’t always mean the newest version. Tags are mutable, meaning they can be updated to point to new builds. They help manage versions during development and deployment. For consistency, it's best to use specific version tags rather than relying on :latest. You can create or change tags with docker tag and use them when pushing images to a registry.

16. How do you pull and push images from/to a Docker registry?

docker pull downloads an image from a registry to your local machine. For example, docker pull redis:7-alpine fetches that image from Docker Hub. docker push uploads a tagged image to a registry. To push:

1. Tag the image (docker tag image:tag username/repo:tag)

2. Log in (docker login)

3. Push it (docker push username/repo:tag)

For private registries, include the registry URL in the tag (e.g., myregistry.com/myimage:tag). These commands are essential for sharing and managing images across teams and environments.

17. What does the Docker image naming convention repository:tag mean?

Docker images follow the format [registry_hostname/]repository:tag. If no registry is specified, Docker defaults to Docker Hub. The repository usually includes a namespace (like username/image) unless it's an official image (e.g., library/ubuntu). The tag, added after a colon, identifies the image version or variant, such as python:3.11-slim. If no tag is provided, Docker uses :latest by default. Tags can be any string and are commonly used for versions or environment labels like dev, alpine, or prod.
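The naming rules above can be sketched as a small parser. This is an illustrative approximation, not Docker's own implementation: the function name is hypothetical, digests (name@sha256:...) are not handled, and docker.io is assumed as the default registry host for Docker Hub.

```python
def parse_image_reference(reference: str) -> dict:
    """Split an image reference into registry, repository, and tag."""
    name, tag = reference, "latest"
    # A colon after the last slash separates the tag; a colon before it
    # belongs to a registry port such as localhost:5000.
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    if last_colon > last_slash:
        name, tag = reference[:last_colon], reference[last_colon + 1:]

    first, _, rest = name.partition("/")
    # The first path component counts as a registry host only if it looks
    # like one: it contains a dot or a port, or it is "localhost".
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            repository = "library/" + repository  # official images
    return {"registry": registry, "repository": repository, "tag": tag}


if __name__ == "__main__":
    for ref in ("ubuntu", "python:3.11-slim", "myregistry.com/myimage:tag"):
        print(ref, "->", parse_image_reference(ref))
```

A quick check such as parse_image_reference("ubuntu") shows how a bare name expands to the library namespace with the latest tag.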

18. Can multiple Docker containers run from the same image?

Yes, you can run many containers from the same Docker image. Each container is an isolated instance with its own writable layer, while sharing the base image layers to save resources. For example, running multiple containers from a web service image allows you to scale horizontally by handling more traffic across ports or load balancers. Each container has a unique ID and operates independently. This lightweight model makes Docker ideal for deploying multiple instances of the same app efficiently.

19. What is an ephemeral container and what happens to data when a container exits?

Docker containers are designed to be ephemeral: you should be able to stop, remove, and replace one at any time. Data written inside a container survives a stop and restart, but it is lost when the container is removed unless it is stored in a volume or bind mount. This promotes stateless design, where important data lives outside the container. While containers can run long processes, you should be able to replace any container without losing critical data. The --rm flag auto-deletes a container after it stops, which is useful for temporary tasks.

20. How do you get a shell inside a running container (for debugging)?

You can access a running container using docker exec -it <container_id_or_name> /bin/bash to open an interactive shell inside it. If bash isn't available, use sh or ash depending on the image. This lets you inspect files, run commands, and debug issues without stopping the container. Unlike docker attach, which connects to the main process, docker exec runs a new process inside the container and is safer for troubleshooting. If the image lacks a shell, you may need to debug using volumes or other tools.

21. What is an image digest or content-addressable identifier?

A Docker image digest is a unique SHA256 hash that identifies the exact contents of an image. Unlike tags, which can point to different versions over time, digests are immutable. You can use them to pull or run a specific image version, like docker pull nginx@sha256:<hash>. This ensures consistency and is often used in production or Kubernetes to avoid unexpected changes from tag updates.
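The difference between a movable tag and a fixed digest can be illustrated with a toy in-memory registry. Everything here (the ToyRegistry class, its methods, the sample byte strings) is hypothetical and only models the idea; a real registry computes digests over image manifests.

```python
import hashlib


def digest(content: bytes) -> str:
    """Content-addressable identifier: the SHA-256 of the bytes."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


class ToyRegistry:
    """Hypothetical in-memory registry: tags are movable, digests are not."""

    def __init__(self) -> None:
        self.manifests: dict[str, bytes] = {}  # digest -> manifest bytes
        self.tags: dict[str, str] = {}         # tag -> digest

    def push(self, tag: str, manifest: bytes) -> str:
        image_digest = digest(manifest)
        self.manifests[image_digest] = manifest
        self.tags[tag] = image_digest  # re-pushing a tag silently moves it
        return image_digest

    def pull(self, reference: str) -> bytes:
        if reference.startswith("sha256:"):
            return self.manifests[reference]
        return self.manifests[self.tags[reference]]


if __name__ == "__main__":
    registry = ToyRegistry()
    pinned = registry.push("1.25", b"manifest of the first build")
    registry.push("1.25", b"manifest of a later rebuild")
    print("tag now returns:", registry.pull("1.25"))
    print("digest still returns:", registry.pull(pinned))
```

Pulling by tag returns whatever was pushed last under that tag, while pulling by the saved digest keeps returning the original content.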

22. What are official images and how are they different from user images on Docker Hub?

Official images on Docker Hub are maintained by Docker or trusted sources and follow best practices, security updates, and multi-architecture support. They appear without a username, like nginx (short for library/nginx). User images are published under individual or organization namespaces, like john/myapp, and may not be verified. For reliability and security, it’s best to use official or verified images as base images in your Dockerfiles.

23. What does it mean that Docker containers are isolated?

Docker uses Linux kernel features to isolate containers. Namespaces restrict what a container can see (its own processes, network interfaces, and filesystem), while control groups (cgroups) limit resource usage such as CPU and memory. Each container gets its own network namespace by default, so its ports are not reachable from outside the host unless you publish them. This setup makes containers feel like separate systems, though they share the host kernel. For stronger security, tools like seccomp, AppArmor, and rootless mode can add extra protection.

24. What is a container ID and how do you use it?

A Docker container ID is a unique hexadecimal identifier assigned to every container when it is created, whether or not it is currently running. Commands like docker ps show a shortened 12-character form, while the full ID is 64 characters long. You can use it to manage containers directly, such as docker logs <container_id>. Docker also assigns a random name if you don’t set one with --name, but it always tracks the container by its ID under the hood. IDs are unique on a given Docker host, which makes them useful for scripting or when no custom name is given.
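In practice you rarely type a whole ID, because the Docker CLI accepts any prefix that matches exactly one container. The sketch below models that lookup. The function name and the sample IDs are made up for illustration and this is not Docker's actual code.

```python
def resolve_container(prefix: str, container_ids: list[str]) -> str:
    """Return the single container ID that starts with `prefix`."""
    matches = [cid for cid in container_ids if cid.startswith(prefix)]
    if not matches:
        raise LookupError(f"no such container: {prefix}")
    if len(matches) > 1:
        raise LookupError(f"ambiguous ID prefix: {prefix}")
    return matches[0]


if __name__ == "__main__":
    # Shortened, made-up IDs standing in for real 64-character ones.
    ids = ["3f2a9c1d7b4e", "3fb07e5521aa", "a91c44d0e6f2"]
    print(resolve_container("a9", ids))
    print(resolve_container("3f2", ids))
```

A prefix such as "3f" would be rejected here because it matches two containers, which is why scripts usually pass the full ID.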

25. What happens when you run docker run -d -p 80:80 nginx?

This command runs an Nginx container in the background (-d) and maps the container’s port 80 to the host’s port 80 (-p 80:80). If the nginx:latest image isn’t available locally, Docker pulls it from Docker Hub. Once running, you can access the Nginx welcome page at http://localhost:80. Use docker ps to confirm the container is running and port mapping is active.

Dockerfile and Image Optimization Interview Questions

26. How do you write a basic Dockerfile?

A basic Dockerfile defines how to build a Docker image. It starts with FROM to set the base image, then includes steps like setting a working directory (WORKDIR), copying files (COPY), installing dependencies (RUN), exposing ports (EXPOSE), and setting the default command (CMD). Example:

FROM node:18-alpine

WORKDIR /app

COPY package.json ./

RUN npm install

COPY . .

EXPOSE 3000

CMD ["node", "server.js"]

After writing it, run docker build -t myimage . to create the image (the trailing dot sets the current directory as the build context). Order instructions to improve build caching and performance.

27. What is the difference between COPY and ADD in a Dockerfile?

Both COPY and ADD copy files into a Docker image, but ADD has extra features. COPY simply transfers files from your local build context to the image. ADD can also extract local tar archives and download files from URLs. Because of its added behavior, ADD can lead to unexpected results. Best practice is to use COPY unless you specifically need ADD for features like auto-extracting archives or fetching remote files. For better control over downloads, it's recommended to use RUN curl or wget instead of ADD.

28. What is the difference between CMD and ENTRYPOINT in a Dockerfile?

Both CMD and ENTRYPOINT define what runs when a container starts. ENTRYPOINT sets a fixed executable that always runs, treating the container like a specific app. Arguments passed during docker run are passed to it. CMD provides default commands or arguments, which can be overridden when running the container. If both are used, CMD sets default arguments for the ENTRYPOINT. Use ENTRYPOINT for fixed behavior and CMD for optional defaults. For example:

ENTRYPOINT ["ping"]

CMD ["localhost"]

This pings localhost by default, but you can override the default argument with docker run image 8.8.8.8.
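The way ENTRYPOINT, CMD, and docker run arguments combine can be modelled in a few lines. This is a simplified sketch for the exec (JSON array) form only; the function name is hypothetical and the --entrypoint override is left out.

```python
from typing import Optional


def effective_command(entrypoint: list[str], cmd: list[str],
                      run_args: Optional[list[str]] = None) -> list[str]:
    """Return the argument vector the container starts with (exec form only)."""
    # Arguments given after the image name replace CMD entirely...
    arguments = run_args if run_args else cmd
    # ...while ENTRYPOINT stays in front of them.
    return entrypoint + arguments


if __name__ == "__main__":
    print(effective_command(["ping"], ["localhost"]))               # docker run image
    print(effective_command(["ping"], ["localhost"], ["8.8.8.8"]))  # docker run image 8.8.8.8
```

With no ENTRYPOINT set, the same rule means that arguments after the image name replace the whole default command.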

29. How does Docker build cache work and how can you leverage it?

Docker caches image layers during builds by checking if each Dockerfile instruction and its context match a previous build. If unchanged, it reuses the cached layer to speed up the process. To optimize caching:

- Place frequently changing steps (like COPY for app code) near the end.

- Keep static steps (like installing system packages) at the top.

- Use .dockerignore to exclude unnecessary files.

- Combine related RUN steps to reduce layers.

If you need a clean build, use --no-cache. Efficient caching can greatly reduce build time, especially during development.
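The reason instruction order matters is that the first changed step invalidates the cache for every step after it. The toy planner below illustrates this. It is a sketch under stated assumptions: plan_build is a hypothetical name, and each string stands in for an instruction plus a checksum of its inputs (for example, the files a COPY brings in).

```python
def plan_build(previous: list[str], current: list[str]) -> list[tuple[str, str]]:
    """Label each step in `current` as 'cached' or 'rebuild'."""
    plan = []
    cache_valid = True
    for index, step in enumerate(current):
        same_as_before = index < len(previous) and previous[index] == step
        # The first mismatch invalidates the cache for every later step too.
        cache_valid = cache_valid and same_as_before
        plan.append((step, "cached" if cache_valid else "rebuild"))
    return plan


if __name__ == "__main__":
    last_build = ["FROM node:18-alpine", "COPY package.json@v1", "RUN npm install", "COPY src@v1"]
    code_change = ["FROM node:18-alpine", "COPY package.json@v1", "RUN npm install", "COPY src@v2"]
    deps_change = ["FROM node:18-alpine", "COPY package.json@v2", "RUN npm install", "COPY src@v1"]
    for label, steps in (("code change", code_change), ("dependency change", deps_change)):
        print(label, [status for _, status in plan_build(last_build, steps)])
```

In this model a source-code change rebuilds only the final step, while a dependency change forces the install step and everything after it to rerun.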

30. What is a multi-stage build and why is it useful?

A multi-stage build uses multiple FROM statements in a Dockerfile to separate the build and runtime environments. This helps reduce the final image size by keeping only the necessary runtime files and discarding build tools and source code. For example, you can compile an app in a full-featured image, then copy just the binary into a minimal runtime image like alpine or openjdk-slim. This approach improves security, speeds up deployment, and keeps Dockerfiles clean without needing extra cleanup steps. It’s widely used in production to build efficient and lightweight containers.

31. How can you reduce the size of a Docker image?

To reduce Docker image size:

- Use smaller base images like alpine or slim variants.

- Use multi-stage builds to exclude build tools and keep only runtime code.

- Clean up caches (e.g., apt-get clean) and remove temporary files in the same layer.

- Use .dockerignore to exclude unnecessary files from the build context.

- Minimize layers by combining related RUN commands.

- Copy only needed files using targeted COPY instructions.

- Use minimal images like scratch or distroless if suitable.

- Use tools like docker-slim for automatic size reduction.

These strategies can significantly reduce image size and improve performance.

32. What is the purpose of the .dockerignore file?

The .dockerignore file tells Docker which files and folders to exclude from the build context during docker build. This helps speed up builds, reduce image size, and avoid including unnecessary or sensitive files. It works like .gitignore and uses patterns to ignore items like .git/, node_modules/, *.log, or secrets.env. While it doesn’t directly change the image, it limits what can be copied into it, making builds cleaner and more secure.
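As a rough illustration of how ignore patterns trim the build context, the sketch below filters a file list with Python's fnmatch. It only approximates Docker's matching: the function names are hypothetical, negation patterns (!) and ** are not supported, and fnmatch lets * match across directory separators, which Docker's matcher does not.

```python
from fnmatch import fnmatch


def is_ignored(path: str, patterns: list[str]) -> bool:
    """Return True if the path, or any directory above it, matches a pattern."""
    parts = path.strip("/").split("/")
    # Check the path itself and each parent directory, so that ignoring
    # "node_modules/" also ignores everything beneath it.
    candidates = ["/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    return any(fnmatch(candidate, pattern.rstrip("/"))
               for candidate in candidates for pattern in patterns)


def build_context(files: list[str], patterns: list[str]) -> list[str]:
    """Return the files that would still be sent to the builder."""
    return [f for f in files if not is_ignored(f, patterns)]


if __name__ == "__main__":
    patterns = [".git/", "node_modules/", "*.log", "secrets.env"]
    files = ["src/app.js", ".git/config", "node_modules/x/index.js",
             "debug.log", "secrets.env", "package.json"]
    print(build_context(files, patterns))
```

Only the source file and package.json remain in the context, so the ignored files cannot end up in the image through a broad COPY . . step.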

33. How can you pass build-time variables or arguments into a Docker build (and what is ARG)?

Docker supports build-time variables using ARG in the Dockerfile and --build-arg during docker build. For example, declare ARG APP_VERSION in the Dockerfile, then build with --build-arg APP_VERSION=1.0. These variables exist only during the build and are not set in the running container unless you copy them into ENV. They can still show up in docker history, so avoid passing secrets this way. Use them for customizing builds, like selecting versions or toggling features. Unlike ENV, which sets runtime environment variables, ARG is only for the build phase. Changing an ARG value can invalidate Docker’s build cache from that layer onward.

34. What is the difference between CMD ["myapp"] and CMD ["sh", "-c", "myapp"] in Dockerfile?

CMD ["myapp"] uses the exec form, which runs myapp directly as PID 1. It's preferred for better signal handling and efficiency. CMD ["sh", "-c", "myapp"] runs through a shell, similar to the shell form (CMD myapp). This allows shell features like variable expansion or chaining commands, but introduces an extra shell process. Use the exec form unless you specifically need shell capabilities.

35. How do you set an environment variable in a Docker container?

You can set environment variables in two ways:

- At build time using ENV in the Dockerfile (e.g., ENV DB_HOST=postgres), which bakes the variable into the image.

- At runtime using -e or --env with docker run (e.g., -e APP_MODE=production), or by using --env-file to load multiple variables from a file.

Runtime variables override those set in the Dockerfile. For sensitive data, avoid hardcoding values in the Dockerfile; use runtime flags or Docker secrets instead.

36. What does the EXPOSE instruction do, and is it necessary to publish ports?

The EXPOSE instruction in a Dockerfile documents which ports a container is expected to listen on, such as EXPOSE 8080. It doesn’t actually open or publish ports to the host. To make a container's port accessible externally, you must use -p or -P with docker run. While EXPOSE isn't required for port publishing, it’s good practice for clarity and is used by docker run -P to map exposed ports to random high host ports.

37. How can you run a command every time a container starts (not during build)?

Use ENTRYPOINT or CMD in the Dockerfile to run a command at container startup. Unlike RUN, which runs during build, ENTRYPOINT executes each time the container starts. The common approach is to create an entrypoint script that performs setup tasks (like reading env vars or generating configs) and then starts the main app. You can also use sh -c "command && exec app" for simple cases. For recurring tasks, consider schedulers, but for one-time startup commands, ENTRYPOINT is the standard solution.

38. What is an ONBUILD Dockerfile instruction?

ONBUILD is a Dockerfile instruction used in base images to define commands that run automatically when that image is used as a parent in another build. For example, ONBUILD COPY . /app will trigger during a future docker build, not when the image is run. It’s helpful for setting defaults in language stacks but is less common now, as it can be unclear or hard to trace. Modern best practices prefer explicit build steps over using ONBUILD.

39. Why might you have multiple FROM lines in a Dockerfile?

Multiple FROM lines indicate a multi-stage build. Each FROM starts a new stage, often used to separate build and runtime environments. For example, you might build an app in golang:1.20 and copy the output into a minimal alpine image. This reduces image size and improves security. You can also copy files between stages using COPY --from=<stage>. The last FROM defines the final image unless you build a specific stage with --target. Multi-stage builds are a powerful way to streamline Docker images.

40. Is it possible to reduce build times for Docker images? If so, how?

Yes, Docker build times can be reduced using several strategies:

- Optimize Dockerfile for caching: Place static steps early and frequently changing ones later.

- Use .dockerignore: Exclude unnecessary files to reduce build context size.

- Enable BuildKit: Allows parallel builds, better caching, and mountable cache volumes.

- Cache layers in CI: Reuse layers between builds to avoid rebuilding unchanged steps.

- Use multi-stage builds: Run only required stages to save time.

- Leverage --cache-from: Use previous images as cache sources.

- Minimize RUN steps: Combine commands to reduce image layers.

- Use efficient hardware: SSDs and more CPUs speed up builds.

- Avoid re-downloading dependencies: Use language-specific caches.

Efficient Dockerfile design and smart caching are the most effective improvements.

Docker Networking Interview Questions

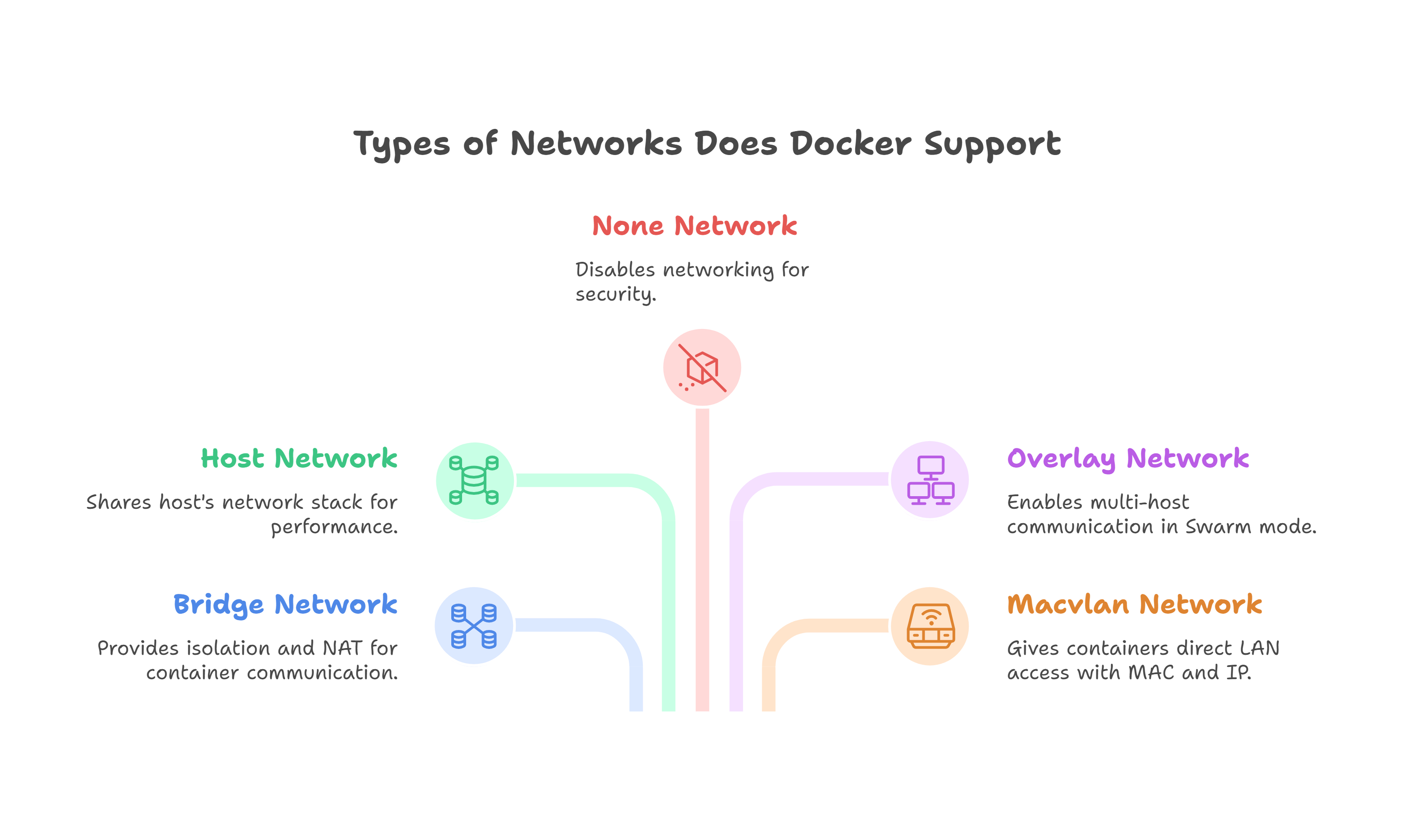

41. What types of networks does Docker support, and what are their differences?

Docker supports several network types:

- Bridge (default): Isolated internal network on a single host. Containers get private IPs and can talk to each other and the internet via NAT. Use custom bridge networks for better DNS-based container discovery.

- Host: Shares the host’s network stack. No isolation or port mapping needed, but less secure. Useful for performance or when direct access to host ports is required.

- None: Disables networking entirely. The container has no external access, only a loopback interface. Ideal for secure, isolated workloads.

- Overlay: Enables container communication across multiple hosts (Swarm mode). Used in clustered setups to simulate a shared network across nodes.

- Macvlan: Gives containers their own MAC and IP on the host’s LAN. Good for direct LAN access, but setup is more complex.

Each type serves different use cases depending on performance, isolation, and scalability needs.

42. How do you expose or publish ports for a Docker container?

To make a container’s internal service accessible from the host or outside network, you use the -p or --publish flag when starting the container. This maps a port on the host to a port inside the container.

For example, -p 8080:80 means host port 8080 forwards traffic to container port 80. This allows you to access the containerized service at localhost:8080.

You can also expose multiple ports by repeating the -p flag, and optionally specify an IP address (e.g., -p 127.0.0.1:8080:80 binds only to localhost). Without -p, the container’s ports remain isolated and unreachable from the host.
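The different shapes a -p value can take are easier to see when parsed into their parts. The sketch below is an illustrative parser, not Docker's own: the PortMapping type and parse_publish function are hypothetical, only IPv4 addresses and single ports are handled, and 0.0.0.0 (all interfaces) is assumed as the default bind address.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: str
    host_port: Optional[int]  # None means Docker picks a free host port
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Parse a -p value such as '8080:80' or '127.0.0.1:8080:80/udp'."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = "0.0.0.0", "", parts[0]
    elif len(parts) == 2:
        host_ip = "0.0.0.0"
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish spec: {spec}")
    return PortMapping(host_ip, int(host_port) if host_port else None,
                       int(container_port), protocol or "tcp")


if __name__ == "__main__":
    for spec in ("8080:80", "127.0.0.1:8080:80", "53/udp"):
        print(spec, "->", parse_publish(spec))
```

Note that the host side always comes first: in 8080:80, port 8080 belongs to the host and port 80 to the container.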

43. What is the difference between -p and -P when running a container?

The -p flag (lowercase) maps a specific container port to a specific host port (e.g., -p 8080:80), giving you full control. The -P flag (uppercase) automatically maps all exposed container ports to random available host ports. Use -p for precise access, and -P for quick, flexible testing.

44. How can containers on different hosts communicate with each other?

By default, Docker containers cannot communicate across different hosts using internal networking. To enable this, one common method is using Docker Overlay Networks in Swarm mode, which allows containers on different nodes to connect as if on the same virtual network. Alternatively, you can expose ports on each host and have containers communicate using standard TCP/IP through host IPs and ports. This is simpler but requires manual port management. Other options include third-party networking tools like Weave or Flannel, or orchestration platforms like Kubernetes, which provide built-in cross-host networking through cluster-wide IP allocation.

45. What is a Docker network and how do you create one?

A Docker network enables communication between containers and with the outside world. To create one, use docker network create <name>. Custom networks (like bridge or overlay) allow containers to communicate by name and offer better isolation and control than the default network.

46. How do containers find each other by name on a network?

When containers are on the same user-defined Docker bridge network (or overlay network in Swarm), Docker provides an internal DNS that lets them resolve each other by name. For example, if one container is named db, another container on the same network can connect to it using db as the hostname. This DNS resolution is scoped to the specific network, meaning containers on different networks can't see each other by name. On the default bridge network, this name resolution doesn’t work unless links are used. Best practice is to use a custom network to enable reliable name-based discovery.

47. What is the default Docker bridge network and what are its limitations?

The default Docker bridge network (bridge) is used when no custom network is specified. Containers on this network can communicate via IP addresses but not by name. It lacks automatic DNS-based service discovery and offers limited isolation: all containers on it can talk to each other. Advanced features like network aliases and scoped communication are only available in user-defined networks, making them better for most use cases.

48. What is port forwarding or NAT in context of Docker networking?

Port forwarding (NAT) in Docker maps a host port to a container port using the -p flag. This allows external traffic to reach services inside the container. Docker sets up network address translation so traffic sent to the host (e.g., localhost:8080) is forwarded to the container’s internal port (e.g., 80). Without this, containers are isolated and not accessible from the host or internet.

49. How would you configure a container to have access to the host network?

To give a container access to the host’s network, use the --network host flag when running the container. This bypasses Docker’s network isolation, allowing the container to use the host’s network stack directly. It can access host interfaces and ports but also exposes the container more broadly, so it should be used carefully and only when needed (e.g., for performance or low-level networking tasks).

50. How can you troubleshoot networking issues in Docker containers?

To troubleshoot Docker networking issues, start by inspecting the container’s network settings with docker inspect and verify port mappings using docker ps. Use docker exec to run tools like ping or curl inside the container to test connectivity. Check if the app is listening on the correct port and interface. Ensure firewall rules or host restrictions aren’t blocking access. You can also inspect Docker networks with docker network ls and docker network inspect to detect misconfigurations or conflicts.

Docker Volumes and Storage Interview Questions

51. What is a Docker volume and why would you use one?

A Docker volume is a persistent storage mechanism that exists outside a container's ephemeral file system. It allows data to be saved and reused even if the container is removed. Volumes are managed by Docker and stored on the host (e.g., /var/lib/docker/volumes/ on Linux), and can be shared between containers. They’re ideal for storing databases, logs, or user uploads. Volumes offer better performance for heavy I/O and support external drivers like NFS or cloud storage. Unlike the container’s writable layer, data in volumes remains intact across container rebuilds or restarts.

52. What’s the difference between a volume and a bind mount?

Volumes and bind mounts both let containers access host data, but they differ in how they're managed. Volumes are created and managed by Docker, stored in Docker’s internal directory (like /var/lib/docker/volumes/) and are ideal for persistent, portable data. They're isolated from the host's file structure and can use volume drivers. Bind mounts directly map a specific host path to a container path, allowing real-time sync between host and container. They're more flexible but less secure and harder to manage at scale. Volumes are better for production, while bind mounts are common in development or when accessing host files.

53. How do you create and use a volume in Docker?

You can create a volume with docker volume create <name> and use it by mounting it into a container with -v <name>:/path. This allows data to persist across container restarts or be shared between containers. Docker also auto-creates named or anonymous volumes when needed. Volumes are ideal for storing databases, logs, or user data outside the container’s ephemeral filesystem.
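A minimal sketch of those two steps, assuming a hypothetical helper name and placeholder volume, path, and image values:

```shell
# Hypothetical helper: create a named volume and mount it into a container.
# Arguments: <volume> <mount_path> <image>
run_with_volume() {
  docker volume create "$1" &&
    docker run -d -v "$1:$2" "$3"
}

# Example (assumed names): run_with_volume appdata /var/lib/app myimage:1.0
```

Running docker volume inspect on the volume afterwards shows where Docker stores it on the host.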

54. What happens to data in a container’s filesystem when the container is removed?

Data stored in a container’s default writable layer is deleted when the container is removed. This means any changes made inside the container that weren't stored externally will be lost. However, data saved in volumes or bind mounts remains intact. Volumes persist on the host even after the container is deleted, unless removed explicitly. Bind mounts point to existing host directories, so their data is never removed with the container. To ensure persistence, always store important data outside the container’s internal filesystem.

55. Can multiple containers share the same volume? If yes, how?

Yes, multiple containers can share the same Docker volume. You can mount a named volume into different containers, even at different paths. For example, docker run -v shareddata:/data and docker run -v shareddata:/app/data both attach the same shareddata volume. This allows containers to read or write shared files. However, Docker does not manage file-level concurrency, so if multiple containers write to the same files, you must handle conflicts in your application. This setup is useful for tasks like data sharing, logging, or initializing data across containers.

56. What is the difference between an anonymous volume and a named volume?

A named volume is explicitly created and referenced by name, making it easy to reuse and manage across containers (e.g., -v mydata:/app/data). An anonymous volume is created automatically when you mount a volume without specifying a name (e.g., -v /app/data). Docker assigns it a random name, and it’s typically used for temporary or implicit storage needs, such as when a Dockerfile defines a VOLUME. Named volumes are preferred for long-term use and sharing, while anonymous volumes can clutter your system if not pruned, since they’re harder to track and manage.

57. How do you back up data from a Docker volume?

To back up a Docker volume, the most reliable method is to run a temporary container that mounts the volume and a host directory, then archives the volume's contents into that directory. This approach is portable and doesn't rely on host-specific paths. Alternatively, you can use docker cp to copy files from a container that uses the volume, though this may be inconsistent if the container is active. Another option is to access the volume directly from the host’s filesystem, but it requires host access and caution to avoid corrupt data. Some volume drivers or third-party tools also support snapshots. For databases, it's best to create a dump file inside the container and back up that file to ensure data consistency.
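The temporary-container approach might look like the sketch below. The helper name backup_volume, the alpine image, and the /source and /backup mount points are assumptions chosen for illustration.

```shell
# Hypothetical helper: archive a named volume into a host directory.
# Arguments: <volume> <absolute_host_dir>
backup_volume() {
  # Mount the volume read-only at /source and the host directory at /backup,
  # then tar the volume contents from a throwaway container.
  docker run --rm \
    -v "$1:/source:ro" \
    -v "$2:/backup" \
    alpine tar czf "/backup/$1.tar.gz" -C /source .
}

# Example: backup_volume dbdata "$PWD/backups"
```

Mounting the volume read-only guards against accidental changes during the backup, but it does not make the copy consistent if another container is writing at the same time.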

58. What are tmpfs mounts in Docker?

A tmpfs mount in Docker is a temporary in-memory filesystem that stores data in RAM or swap instead of on disk. It is useful for storing non-persistent, fast-access data such as caches, session files, or sensitive information that should not remain after the container stops. You can define a tmpfs mount when running a container, and the data written to it disappears once the container exits. Tmpfs offers performance benefits due to reduced disk I/O and enhances security by leaving no data trace on disk, but it consumes host memory and should be used with size limits when needed.
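As a rough illustration, a size-limited tmpfs mount can be requested with the --tmpfs flag of docker run. The helper name and the 64 MB cap below are assumptions, and tmpfs mounts are only available for Linux containers.

```shell
# Hypothetical helper: run a container with an in-memory scratch directory.
# Arguments: <mount_path> <image>
run_with_tmpfs() {
  # size=64m caps how much host memory the mount may consume
  docker run -d --tmpfs "$1:rw,noexec,nosuid,size=64m" "$2"
}

# Example (assumed image): run_with_tmpfs /app/cache myimage:1.0
```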

59. How are Docker images stored on disk (storage drivers)?

Docker uses storage drivers like overlay2 to manage image layers on disk. Each image consists of read-only layers stored under /var/lib/docker/<driver>/, and containers get a writable top layer. Layers are stacked using a union filesystem, allowing multiple containers to share common image data. The storage driver handles how these layers are combined and managed, affecting performance and compatibility.

60. How do you clean up unused Docker data (images, containers, volumes)?

Docker can accumulate unused data over time, including stopped containers, old images, unused volumes, and networks. To clean up:

- Use docker system prune to remove stopped containers, dangling images, and unused networks. Add --volumes to also remove unused volumes.

- Use targeted commands like docker image prune, docker container prune, or docker volume prune for specific types of cleanup.

- For full manual cleanup, remove containers with docker rm, images with docker rmi, and volumes with docker volume rm.

In CI pipelines, docker system prune -af is common for full cleanup. On development machines, use caution to avoid deleting useful data. Also consider managing container logs, which can consume space if not rotated.
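A staged cleanup could be sketched as follows. The function name is hypothetical, and the -f flags skip the confirmation prompts, so this is better suited to disposable CI hosts than to a workstation.

```shell
# Hypothetical helper: report disk usage, then prune in stages.
cleanup_docker() {
  docker system df            # space used by images, containers, and volumes
  docker container prune -f   # remove stopped containers
  docker image prune -f       # remove dangling images
  docker volume prune -f      # remove unused volumes
}
```

Running docker system df before and after gives a quick view of how much space was reclaimed.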

Docker Compose and Orchestration Interview Questions

61. What is Docker Compose and what is it used for?

Docker Compose is a tool that lets you define and run multi-container applications using a docker-compose.yml file. Instead of running multiple docker run commands, you describe all services, networks, volumes, and configurations in one place. With a single docker-compose up command, all services start together with the correct settings. It’s ideal for development and testing setups like a web app with a database and cache, allowing services to communicate by name. While not a full orchestration tool, Compose simplifies container management on a single host and can also be used with Docker Swarm.

62. How do you start, stop, and scale services in Docker Compose?

To manage services with Docker Compose, use the following commands:

- Start services with docker compose up. Add -d to run in detached mode. You can also start a specific service, like docker compose up -d web.

- Stop and remove services with docker compose down, which removes containers and networks but keeps named volumes unless you add --volumes. Use docker compose stop to stop containers without removing them.

- Scale services using docker compose up -d --scale web=3 to run multiple instances of a service. Be sure services are defined to support scaling (avoid fixed host ports to prevent conflicts).

These commands work with Docker Compose V2 (docker compose) and help manage multi-container apps easily.

63. Can you give an example of a Docker Compose file for a simple web app with a database?

Yes. A simple docker-compose.yml might define a web service and a MySQL database like this:

version: "3.8"

services:
  web:
    build: .
    ports:
      - "8080:80"
    environment:
      - DB_HOST=db
    depends_on:
      - db
  db:
    image: mysql:8
    volumes:
      - dbdata:/var/lib/mysql
    environment:
      - MYSQL_ROOT_PASSWORD=example
      - MYSQL_DATABASE=myapp

volumes:
  dbdata:

Explanation:

- The web service is built from the current directory and maps container port 80 to port 8080 on the host.

- It connects to the db service via the internal hostname db. Because of depends_on, Compose starts the db container first, although it does not wait for MySQL to be ready to accept connections.

- The db service uses the official MySQL image with a named volume dbdata for data persistence and initializes with environment variables.

- Only the web app is exposed to the host, keeping the database internal.

Running docker compose up -d will launch both services together.

64. How does Docker Compose handle networking between containers?

Docker Compose automatically creates a dedicated network for all services in a Compose file, typically a bridge network named after the project (such as projectname_default). All containers in the project join this shared network and can communicate with each other using service names as hostnames. This allows seamless service discovery without publishing any ports to the host. For example, a web service can reach a database simply by referring to it as db, using its internal port. Compose networks are isolated by default between projects, preventing name clashes. You can also define custom networks for more complex setups if needed.

65. What is docker-compose up --build?

The --build flag with docker-compose up forces Docker Compose to rebuild service images before starting containers, even if those images already exist. This is useful when you've made changes to the Dockerfile or application code and want to ensure you're running the latest version. Without --build, Compose only builds if no image is available. It’s a shortcut for running docker-compose build followed by docker-compose up. This is commonly used in development to keep the environment up to date.

66. What is the difference between Docker Compose and Docker Swarm?

Docker Compose is a tool for defining and running multi-container applications on a single host, mainly used for development and small-scale setups. Docker Swarm is a built-in orchestration system for managing containers across multiple machines in a cluster. Compose works with containers directly, while Swarm operates at the level of services and tasks, offering features like scaling, service discovery, load balancing, and high availability. Although Swarm can use a similar Compose file format (as a stack file), it's meant for production clusters. In short, Compose simplifies local workflows, while Swarm manages distributed container deployments.

67. How do you use Docker Compose in a CI/CD pipeline or production?

In CI/CD, Docker Compose is used to spin up dependent services (like databases or caches) during test stages. You define services in a docker-compose.yml file and use commands like docker compose up -d before running tests. In production (single-host setups), Compose can manage multi-container apps with docker compose up, or be used with Swarm as a stack via docker stack deploy. It's best for simple environments; for larger deployments, Kubernetes is more suitable.

68. What are some alternatives to Docker Compose for orchestration?

The most widely used alternative is Kubernetes, which is the standard for container orchestration at scale. It supports multi-host deployments, auto-scaling, service discovery, and load balancing, but is more complex than Compose. Docker Swarm is a simpler, built-in clustering option for Docker, offering basic orchestration across hosts.

HashiCorp Nomad is another alternative, known for its simplicity and support for mixed workloads beyond containers. For local development, tools like Minikube or kind let you run lightweight Kubernetes clusters, offering a more production-like environment than Compose. Each tool suits different needs based on scale, complexity, and team workflow.

69. What is “Docker Stack” and how does it relate to Docker Compose?

Docker Stack is a way to deploy multi-service applications to a Docker Swarm cluster using a Compose-style YAML file. By running docker stack deploy, you can take a docker-compose.yml file and deploy its services as Swarm-managed containers.

While the file format is similar, not all Compose features are supported: Swarm focuses on keys like deploy and may ignore others like build. A "stack" refers to the full group of services that make up the app, each deployed with its own networking and scaling rules. In essence, Docker Stack bridges the gap between Compose for development and Swarm for production orchestration.

70. Have you worked with any orchestration beyond Docker Compose (like Kubernetes)? How do they differ?

Yes, Kubernetes is a widely used orchestration system that differs significantly from Docker Compose. While Compose is designed for single-host setups and quick development environments, Kubernetes is built for managing containerized applications across clusters with features like self-healing, auto-scaling, rolling updates, and high availability. Kubernetes uses a richer set of abstractions such as Pods, Deployments, Services, ConfigMaps, and Secrets, each defined through separate YAML files. Its learning curve is steeper, but it’s far more scalable and production-ready. Compose is simpler and ideal for small setups, while Kubernetes excels at managing large, distributed applications reliably.

Docker Security Interview Questions

71. What are some best practices for securing Docker containers?

Securing Docker containers involves protecting the image, container runtime, and host environment. Here are key best practices:

- Use minimal base images like Alpine or Distroless to reduce attack surface.

- Avoid running containers as root by specifying a non-root user in the Dockerfile.

- Apply least privilege by dropping unnecessary capabilities and avoiding --privileged mode unless absolutely required.

- Set resource limits for memory, CPU, and processes to prevent abuse or denial-of-service.

- Update images regularly and scan them using tools like Trivy or Docker Scout to catch known vulnerabilities.

- Enable Docker Content Trust to verify the authenticity of images through cryptographic signing.

- Restrict network access, use user-defined networks, and avoid exposing unnecessary ports.

- Manage secrets securely using Docker secrets or external vaults instead of embedding them in images or passing them as plain environment variables.

- Enforce kernel-level protections like Seccomp, AppArmor, or SELinux profiles to limit what containers can do.

- Use user namespaces or rootless mode to prevent container users from mapping directly to host root.

- Limit host access by minimizing bind mounts and avoiding exposure of critical paths like the Docker socket.

- Implement monitoring and logging to detect unusual behavior and maintain visibility into container activity.

These layered measures help minimize security risks and ensure containers operate safely within your infrastructure.

72. Why is running a container as root (inside the container) considered a bad practice?

Running a container as root is risky because, unless user namespaces are enabled, root inside the container is the same UID 0 as root on the host, restricted only by the container’s namespaces, capabilities, and security profiles. If an attacker escapes the container, they could gain full control of the host system. It also violates the principle of least privilege: most applications don’t need root and can run safely with limited permissions.

Using a non-root user reduces the potential damage from exploits or misbehaving scripts and helps prevent unauthorized changes to files or mounted volumes. Best practice is to define a non-root user in the Dockerfile or use the --user flag when running the container.
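The --user flag mentioned above can be sketched like this. The helper name is hypothetical and 1000:1000 is an assumed unprivileged UID:GID; pick values that match the file ownership your application expects.

```shell
# Hypothetical helper: run a command in a container as an unprivileged user.
# Arguments: <image> [command...]
run_as_nonroot() {
  image=$1
  shift
  # 1000:1000 is an assumed unprivileged UID:GID
  docker run --rm --user 1000:1000 "$image" "$@"
}

# Example: run_as_nonroot alpine id
```

In a Dockerfile, the equivalent is a USER instruction placed after any steps that still need root.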

73. What is Docker’s default seccomp profile?

Docker’s default seccomp profile is a built-in security feature that restricts containers from using potentially dangerous Linux system calls. It blocks around 40 to 50 syscalls, such as keyctl, mount, and kexec_load, which are rarely needed by typical applications but could be exploited to escape the container or harm the host.

The profile is designed to be permissive enough for most workloads while providing meaningful protection. Users can override it with a custom profile or disable it (not recommended) using the --security-opt flag. This seccomp profile is part of Docker’s layered security model.

74. What is the difference between --privileged and --cap-add flags in Docker run?

The --privileged flag gives a container full access to all Linux capabilities and host devices, removing most security restrictions. It's powerful but risky. In contrast, --cap-add lets you grant only specific capabilities (like CAP_NET_ADMIN) without exposing the container to unnecessary privileges. Always prefer --cap-add for safer, fine-grained control.
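A least-privilege alternative to --privileged is to drop every capability and add back only what the workload needs. In this sketch the helper name is hypothetical, and NET_BIND_SERVICE (binding to ports below 1024) is just an example of a single capability an application might require.

```shell
# Hypothetical helper: start a container with a minimal capability set.
# Argument: <image>
run_least_privilege() {
  # Drop all capabilities, then grant only the one assumed to be needed
  docker run -d --cap-drop ALL --cap-add NET_BIND_SERVICE "$1"
}

# Example (assumed image): run_least_privilege myimage:1.0
```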

75. How can you restrict the memory and CPU usage of a Docker container?

You can limit memory with the -m or --memory flag (e.g., -m 512m) and control CPU usage using --cpus (e.g., --cpus 1.5) or --cpu-shares for relative weighting. These flags prevent a container from consuming excessive host resources and help maintain system stability.
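Combined, these limits might be applied as in the sketch below. The helper name and the specific values are assumptions; --pids-limit is included as an additional guard against fork bombs.

```shell
# Hypothetical helper: start a container with memory, CPU, and process limits.
# Argument: <image>
run_limited() {
  docker run -d --memory 512m --cpus 1.5 --pids-limit 200 "$1"
}

# Example (assumed image): run_limited myimage:1.0
# Observe the effect with: docker stats --no-stream
```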

76. What is Docker Content Trust (DCT)?

Docker Content Trust (DCT) is a security feature that ensures the integrity and authenticity of Docker images through digital signatures. It uses Notary, based on The Update Framework (TUF), to verify that images come from a trusted source and haven’t been tampered with. When DCT is enabled, Docker will only allow pulling or running signed images.

Publishers sign their image tags with private keys, and users verify them using corresponding public keys. This helps prevent the use of malicious or altered images, especially in production or security-sensitive environments. DCT can be enabled by setting the environment variable DOCKER_CONTENT_TRUST=1.

77. What are Linux namespaces and cgroups, in the context of Docker security?

Linux namespaces and cgroups are core features that enable Docker's process isolation and resource control.

Namespaces isolate system resources like process IDs (PID), networking, filesystems, and user IDs. Each Docker container gets its own set of namespaces, ensuring it sees only its own processes, network stack, mounts, and hostname. This prevents containers from interacting with or accessing the host or other containers directly, forming a key part of Docker's security model.

Cgroups (control groups) limit and track resource usage such as CPU, memory, and I/O for each container. They protect the system by preventing any one container from consuming all resources and potentially causing denial-of-service.

Together, namespaces provide isolation, and cgroups provide containment. These are essential for securely running containers on a shared host, although they don’t replace the need for kernel hardening and other security layers since all containers still share the same host kernel.

78. What is user namespace remapping in Docker, and why use it?

User namespace remapping is a Docker security feature that maps user IDs inside a container to different, non-privileged user IDs on the host. For example, the root user (UID 0) inside the container might map to an unprivileged UID like 10000 on the host. This means that even if a container is compromised, processes running as root inside it won’t have root privileges on the host.

Enabling this feature with the daemon’s --userns-remap option (or the userns-remap key in daemon.json) adds a strong layer of protection by separating container and host privileges. However, it may introduce compatibility issues with volume mounts or specific features that require real root access. Despite these trade-offs, it is a recommended practice for enhanced container isolation and reduced risk of privilege escalation.

79. How can you securely allow a container to communicate with the Docker daemon (for example, Docker-in-Docker scenarios)?

Allowing a container to access the Docker daemon is risky, as it can grant full control of the host. To do this more securely:

- Use Docker-in-Docker (dind): Run a separate Docker daemon inside the container. This isolates access from the host but requires elevated privileges and adds complexity.

- Avoid mounting /var/run/docker.sock unless absolutely necessary. If you must, treat the container as fully trusted and restrict its capabilities using seccomp, AppArmor, or user namespaces.

- Consider alternatives like Kaniko or Buildah, which can build and push images without requiring access to the host Docker daemon. These are safer for CI/CD pipelines.

- Use Docker's remote API with TLS: In rare cases, run the Docker daemon with TLS enabled and provide containers with limited client certificates for authenticated access.

- Use access control tools like Sockguard to proxy and restrict what the container can do with the Docker socket.

In general, avoid exposing the host Docker socket to containers whenever possible. If required, apply strong isolation and treat the container as a privileged actor.

80. How do you keep the Docker host secure?

Securing the Docker host is critical since a container breach can affect the entire system. Key practices include:

- Keep Docker and the host OS updated to patch vulnerabilities, especially those affecting container isolation or the kernel.

- Secure the Docker daemon. If you expose the API over the network, always enable TLS and never leave it open without authentication.

- Restrict Docker group access, as Docker users effectively have root privileges. Only trusted users should have access.

- Harden the kernel with AppArmor, SELinux, or Seccomp to limit container actions and prevent privilege escalation.

- Monitor activity using audit logs or Docker event logs to track unexpected container behavior or administrative actions.

- Use a minimal host OS designed for containers to reduce the attack surface, such as Bottlerocket or Alpine.

- Apply network security controls, including limiting exposed ports, using firewalls, and isolating services with custom Docker networks.

- Enforce resource limits to prevent containers from exhausting host resources and causing denial-of-service.

- Enable user namespaces or rootless Docker to reduce risk if a container is compromised.

- Regularly scan images and configurations using tools like Docker Bench for Security or CIS benchmarks to identify weaknesses.

Combining these measures helps create a hardened, secure foundation for running containers in production.

Docker in CI/CD Pipelines Interview Questions

81. How is Docker used in CI/CD pipelines?

Docker standardizes build, test, and deployment environments by packaging applications and dependencies into containers. In CI/CD, it's used to create isolated environments for building code, running tests, building images, and deploying consistently across environments.

82. What are the benefits of using Docker in Jenkins/GitLab CI pipelines?

Docker provides isolated, consistent environments for builds and tests, reducing "it works on my machine" issues. It allows defining custom environments with all dependencies, speeds up setup with reusable images, and ensures reproducibility across stages. In Jenkins or GitLab CI, Docker enables clean, disposable runners and supports parallel test execution. It also simplifies deployment by building and pushing containerized apps directly from the pipeline.

- Consistency: Ensures the same environment across different stages and machines.

- Isolation: Prevents dependency conflicts by isolating builds.

- Portability: Docker images can be run anywhere, improving deployment flexibility.

- Speed: Faster build/test cycles using pre-built images and parallel jobs.

- Scalability: Easily run multiple jobs or services as containers in parallel.

- Reusability: Shared base images and reusable container setups reduce repetition.

83. How do you use Docker Compose in a CI pipeline?

Docker Compose can define and run multi-service setups in CI. In your pipeline config (e.g., .gitlab-ci.yml or Jenkinsfile), you start services using docker-compose up -d, run your tests or build steps, then tear down with docker-compose down. It's useful for integration testing where the app needs dependencies like a database or cache during the pipeline.
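The start, test, tear-down sequence could be wrapped as in this sketch. It uses the Compose V2 syntax (docker compose); the function name is hypothetical, and the test command is whatever the caller passes in.

```shell
# Hypothetical CI step: start dependencies, run tests, always tear down.
# Arguments: the test command and its arguments (e.g. make test)
ci_with_compose() {
  docker compose up -d || return 1
  "$@"                            # run the test command supplied by the caller
  status=$?
  docker compose down --volumes   # clean up even when the tests failed
  return "$status"
}

# Example: ci_with_compose make test
```

Returning the saved status ensures the pipeline still fails when the tests fail, even though the tear-down itself succeeded.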

84. What is the best way to cache Docker layers in CI pipelines?

- Use a shared cache registry like Amazon ECR, GitHub Packages, or GitLab Registry to pull and push intermediate images.

- Use --cache-from in docker build to reuse layers from previously built images.

- Structure Dockerfile wisely by placing stable layers (e.g., OS, dependencies) early and frequently changing ones (e.g., code) later.

- Enable layer caching in CI runners if supported (e.g., GitHub Actions cache, GitLab runner volume cache).

Efficient layer caching can significantly speed up Docker builds in CI pipelines.
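One way to combine a registry-hosted cache with --cache-from is sketched below. The helper name and the use of a latest tag as the cache source are assumptions; the BUILDKIT_INLINE_CACHE build argument asks BuildKit to embed cache metadata in the image so later builds can reuse it.

```shell
# Hypothetical helper: build an image, seeding the cache from the last pushed one.
# Arguments: <image_repo> <tag>
build_with_cache() {
  # Pull the previous image if it exists; a miss on the first build is fine
  docker pull "$1:latest" || true
  docker build \
    --cache-from "$1:latest" \
    --build-arg BUILDKIT_INLINE_CACHE=1 \
    -t "$1:$2" -t "$1:latest" .
}

# Example (assumed registry): build_with_cache registry.example.com/myapp abc123
```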

85. How do you version Docker images in CI/CD?

Docker images are typically versioned using tags based on the Git commit SHA, release version, or build number. For example, you might tag an image as myapp:1.2.0, myapp:latest, or myapp:commit-abc123. In CI pipelines, dynamic tags are often generated automatically using environment variables like CI_COMMIT_SHORT_SHA or BUILD_NUMBER. This approach ensures traceability and avoids confusion caused by ambiguous tags like latest.
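A tagging scheme along these lines can be generated with a few lines of shell. This is a sketch: the helper name, the sha- prefix, and the 8-character short SHA are assumptions, and in a real pipeline the inputs would come from CI variables such as CI_COMMIT_SHA.

```shell
# Hypothetical helper: derive image tags from a version and a commit SHA.
# Arguments: <repo> <version> <full_commit_sha>
image_tags() {
  short_sha=$(printf '%s' "$3" | cut -c1-8)   # first 8 characters of the SHA
  printf '%s:%s\n' "$1" "$2"
  printf '%s:sha-%s\n' "$1" "$short_sha"
}

# Example: image_tags myapp 1.2.0 abc123def4567890
# prints myapp:1.2.0 and myapp:sha-abc123de, one per line
```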

86. How do you scan Docker images in a CI pipeline?

To scan Docker images for vulnerabilities during CI, integrate tools like Trivy, Grype, Snyk, or Docker Scout. These tools analyze the image layers and dependencies for known CVEs. The scan step is added in the CI config after building the image and before pushing it. If vulnerabilities exceed allowed thresholds, the pipeline can fail automatically, enforcing security compliance.

87. How do you push a Docker image from a CI pipeline?

After building and optionally tagging the Docker image, the CI pipeline authenticates with a container registry (like Docker Hub, GitHub Container Registry, GitLab Registry, or AWS ECR) using credentials or tokens stored securely in the CI tool. Then, the image is pushed using docker push imagename:tag. This step is scripted into the CI pipeline and triggered only for deployable branches or tags (e.g., main, release/*).

88. How do you implement rollback in a CI/CD system using Docker?

Rollback is managed by deploying a previously tagged and tested Docker image. In the pipeline, all images are versioned and pushed to a registry. If a deployment fails or needs to be reverted, the orchestrator (e.g., Kubernetes, Docker Swarm) or CI system can redeploy an earlier image by specifying the desired tag (e.g., myapp:1.1.0). Rollbacks can be manual or automated via scripts, depending on the deployment setup.

Real-World Scenario-Based Questions

89. A container keeps restarting. How do you troubleshoot it?

Start by checking the container’s logs with docker logs <container_name> to see the error output. Then inspect the exit code using docker inspect. For example, code 137 (128 + 9, i.e., SIGKILL) often indicates that the container was killed for running out of memory. Confirm whether the main process exits immediately, whether required environment variables are missing, or whether a health check is failing. Also review Docker Compose or orchestrator settings (e.g., restart policy or resource limits) and ensure dependencies (like databases or network services) are accessible.
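Exit codes above 128 conventionally mean the process was terminated by a signal, which is why 137 points to SIGKILL. The small helper below is a hypothetical illustration of that arithmetic, not a Docker feature; the container name web in the comment is also an assumption.

```shell
# Hypothetical helper: print a hint for a container exit code.
# Argument: <exit_code>
explain_exit_code() {
  code=$1
  if [ "$code" -gt 128 ]; then
    # Codes above 128 conventionally mean "terminated by signal (code - 128)"
    echo "killed by signal $((code - 128))"
  elif [ "$code" -eq 0 ]; then
    echo "exited cleanly"
  else
    echo "application error $code"
  fi
}

# Read the exit code and OOM flag of a container (assumed name: web):
#   docker inspect -f '{{.State.ExitCode}} {{.State.OOMKilled}}' web
```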

90. Your container image is 2GB and takes too long to deploy. What do you do?

Reduce image size by:

- Using a minimal base image (like Alpine or Distroless).

- Removing unnecessary build tools or intermediate files via multi-stage builds.

- Minimizing layers by combining commands in Dockerfile.

- Excluding unneeded files using .dockerignore.

Once optimized, leverage layer caching and push images to a fast-access registry close to your deployment target (e.g., a regional ECR or GCR mirror).

91. Your app inside Docker runs fine locally but fails in CI. Why?

CI environments differ from local setups. Possible causes include:

- Missing environment variables or secrets in CI config.

- Network or service dependencies not started (e.g., DB not ready).

- File permission issues or missing volumes.

- Differences in Docker or OS versions.

- Dockerfile relying on local files not tracked in Git.

Check CI logs, verify environment parity, and ensure all dependencies are defined in the pipeline or Docker Compose file.

92. How do you share data between multiple containers?

Use named volumes or bind mounts to share files or persistent data. Volumes allow containers to read and write to a common storage path. In Docker Compose, define a volume and mount it at the needed paths in each service. For one-way communication (e.g., a data producer and a consumer), this is safe. For concurrent writes, you may need to manage access at the application level. Alternatively, for real-time communication, containers can also exchange data via networked APIs or message queues.

93. How do you run a database and web server together in Docker for testing?

Use Docker Compose to define both services in a single docker-compose.yml file. This sets up a shared network so the web app can connect to the database using the service name as the hostname. You can seed the database with test data using a volume or init script. Running docker compose up -d brings up the full test environment quickly and consistently.

94. You want to run integration tests every PR. How can Docker help?

Docker allows you to spin up isolated environments for every pull request. In your CI pipeline, use Docker or Docker Compose to launch required services (e.g., app, database, cache), run tests inside containers, and tear everything down after the job. This ensures consistent, reproducible test environments without polluting the CI host.

95. Your container has high memory usage. What steps will you take?

Start by monitoring the container with docker stats or reviewing metrics from your observability tools. Inspect logs and application behavior for memory leaks or large allocations. Set memory limits (--memory) to cap usage and prevent host exhaustion. If needed, run the app locally with profilers or inside the container with debugging tools to identify memory hotspots. Also consider optimizing base image or code paths causing bloat.

96. How do you debug a container that exits immediately after running?

First, check the logs using docker logs <container> to understand the error. Use docker inspect to review the exit code and container config. Often, the issue is a missing command, config error, or failed script. To debug interactively, override the entrypoint with a shell and start the container manually, for example docker run -it --rm --entrypoint /bin/sh image. This lets you explore the container state before it exits.
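The interactive step can be wrapped in a small helper. This is a sketch under the assumption that the image contains /bin/sh; distroless or scratch-based images do not, and need a different approach.

```shell
# Hypothetical helper: open a shell in an image instead of its normal entrypoint.
# Argument: <image>
debug_shell() {
  docker run -it --rm --entrypoint /bin/sh "$1"
}

# For a container that has already exited, read its exit code and last output:
#   docker inspect -f '{{.State.ExitCode}}' <container>
#   docker logs --tail 50 <container>
```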

Docker Interview Questions for Experienced

106. How do you run Docker in production securely?

- Use minimal base images to reduce attack surface.

- Avoid running containers as root; set a specific non-root user.

- Enforce resource limits (CPU, memory, PIDs) to prevent abuse.

- Use user namespace remapping or rootless Docker for isolation.

- Regularly scan images for vulnerabilities and apply updates.

- Secure the Docker daemon (enable TLS if remote access is needed).

- Control access to the Docker group, which has root privileges.

- Use AppArmor, SELinux, and Seccomp profiles to restrict container capabilities.

- Never mount the Docker socket (/var/run/docker.sock) unless absolutely necessary.

107. What is the difference between Swarm and Kubernetes in Docker contexts?

- Docker Swarm is Docker’s native orchestration tool. It’s simpler and easier to set up but less feature-rich.

- Kubernetes is the industry standard for container orchestration, offering more advanced features like auto-scaling, rolling updates, service discovery, and broader ecosystem support.

Swarm integrates tightly with Docker CLI, while Kubernetes has a steeper learning curve but better long-term scalability and flexibility for large-scale systems.

108. How does Docker interact with Kubernetes?

Kubernetes uses a container runtime to manage containers. Historically, this could be Docker Engine, connected through a component called dockershim. Since dockershim was removed in Kubernetes 1.24, clusters typically use a CRI-compatible runtime such as containerd (which also sits underneath Docker Engine) or CRI-O. Docker images remain fully compatible with Kubernetes: images built with Docker can be pushed to a registry and pulled into Kubernetes pods. You don’t need the full Docker daemon in Kubernetes, just a compatible runtime.

109. How would you manage secrets in production with Docker?

- In Docker Swarm, use the built-in Docker Secrets feature to securely store and inject secrets into containers at runtime.

- In Kubernetes, use Kubernetes Secrets with encryption at rest and RBAC controls.

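- Avoid hardcoding secrets in Dockerfiles or image layers, and prefer mounted files over environment variables, which are visible in docker inspect output and are inherited by child processes.

- Use secret management tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault, and deliver their secrets to containers as files (for example through a read-only mount or a volume driver).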

- Limit access by scoping secrets only to the containers that need them.
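
For the Swarm case, a minimal sketch could look like this. It assumes the node is already in swarm mode (docker swarm init) and uses the official postgres image, which reads its password from the file named in POSTGRES_PASSWORD_FILE. The secret name db_password, the service name db, and the function name are illustrative; DOCKER is a hypothetical override for previewing the commands.

```shell
#!/bin/sh
# DOCKER is a hypothetical override; set DOCKER=echo to print commands instead of running them.
DOCKER="${DOCKER:-docker}"

# create_db_with_secret <path-to-password-file>
create_db_with_secret() {
  password_file="$1"
  # Store the secret in the swarm's encrypted Raft log.
  "$DOCKER" secret create db_password "$password_file" &&
  # Grant only this service access; Swarm mounts it at /run/secrets/db_password.
  "$DOCKER" service create --name db \
    --secret db_password \
    --env POSTGRES_PASSWORD_FILE=/run/secrets/db_password \
    postgres:16
}
```

Only the path to the secret travels through the environment here, not the secret value itself.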

110. How do you handle logs and metrics for containers in production?

- For logs, configure a Docker logging driver (e.g., fluentd, syslog, or gelf) to ship logs to a central system such as ELK, Loki, or Graylog. If you stay on the default json-file driver, set max-size and max-file, because it does not rotate logs by default.

- For metrics, use tools like Prometheus and cAdvisor to collect resource usage data. In Kubernetes, tools like Metrics Server, Grafana, and Prometheus Operator are commonly used.

- Combine logs and metrics into a unified monitoring dashboard to detect issues, set alerts, and support debugging.

- Ensure logs and metrics are stored externally to avoid losing data if a container crashes or is redeployed.
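
As a sketch of the logging options above, the two helpers below start a container with either the fluentd driver or a rotated json-file driver. The Fluentd address localhost:24224, the rotation sizes, and the function names are assumptions for illustration; DOCKER is a hypothetical override for previewing the commands.

```shell
#!/bin/sh
# DOCKER is a hypothetical override; set DOCKER=echo to print commands instead of running them.
DOCKER="${DOCKER:-docker}"

# run_with_fluentd <image> <tag>: ship container logs to a Fluentd collector.
run_with_fluentd() {
  image="$1"
  tag="$2"
  "$DOCKER" run -d \
    --log-driver fluentd \
    --log-opt fluentd-address=localhost:24224 \
    --log-opt tag="$tag" \
    "$image"
}

# run_with_rotation <image>: keep json-file but cap it at 3 files of 10 MB each.
run_with_rotation() {
  image="$1"
  "$DOCKER" run -d \
    --log-driver json-file \
    --log-opt max-size=10m \
    --log-opt max-file=3 \
    "$image"
}
```

The same options can be set once for all containers in the daemon configuration instead of per container.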

111. How do you patch base images for security vulnerabilities?

To patch base images, keep the FROM instruction in your Dockerfile on a maintained, patched tag (e.g., node:18-alpine) and rebuild with docker build --pull so the newest copy of that tag is fetched rather than a stale local one. Rebuild and redeploy your application image whenever the base image ships security fixes. Use image scanning tools like Trivy, Grype, or Docker Scout to detect known vulnerabilities. Automate these scans in your CI pipeline and monitor upstream image changelogs for updates.
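
A CI step for the rebuild-and-scan loop might look like the following sketch. It assumes Trivy is installed and that the Dockerfile sits in the current directory; the function name rebuild_and_scan and the severity threshold are illustrative choices, and DOCKER and TRIVY are hypothetical overrides for previewing the commands.

```shell
#!/bin/sh
# DOCKER and TRIVY are hypothetical overrides; set them to echo to preview the commands.
DOCKER="${DOCKER:-docker}"
TRIVY="${TRIVY:-trivy}"

# rebuild_and_scan <image-tag>
rebuild_and_scan() {
  image="$1"
  # --pull forces a fresh pull of the base image named in FROM.
  "$DOCKER" build --pull -t "$image" . || return 1
  # --exit-code 1 makes the step fail when HIGH or CRITICAL findings exist.
  "$TRIVY" image --severity HIGH,CRITICAL --exit-code 1 "$image"
}
```

Because the function returns Trivy's exit status, a pipeline can gate the push or deploy stage on it.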

112. How can you limit outbound/inbound traffic for containers?

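- Inbound traffic can be restricted by not publishing ports, by binding published ports to a specific address (e.g., -p 127.0.0.1:8080:80), or by using firewall rules (e.g., iptables rules in the DOCKER-USER chain, or cloud security groups). Note that ports published by Docker bypass ufw rules by default.

- Outbound traffic can be blocked by attaching containers to an internal network (docker network create --internal), or filtered with external firewalls, host iptables rules, or third-party network plugins. To stop containers on the same bridge from talking to each other, disable inter-container communication (--icc=false on the daemon for the default bridge, or the com.docker.network.bridge.enable_icc=false option on a custom bridge).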

- In Kubernetes, use Network Policies to control ingress and egress at the pod level.

- For strict isolation, containers can run on separate networks with no internet access unless explicitly allowed.
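
Two of these controls can be sketched with plain Docker commands: an internal network with no route to the outside, and a published port bound to loopback only. The network name backend, the container names, the port numbers, and the function names are hypothetical; DOCKER is an override variable added here for previewing the commands.

```shell
#!/bin/sh
# DOCKER is a hypothetical override; set DOCKER=echo to print commands instead of running them.
DOCKER="${DOCKER:-docker}"

# run_isolated <image>: no outbound or inbound traffic beyond the internal network.
run_isolated() {
  image="$1"
  # --internal creates a network without external connectivity.
  "$DOCKER" network create --internal backend &&
  "$DOCKER" run -d --name db --network backend "$image"
}

# run_local_only <image>: reachable from the host itself, not from other machines.
run_local_only() {
  image="$1"
  "$DOCKER" run -d --name web -p 127.0.0.1:8080:80 "$image"
}
```

A container that needs both worlds can be attached to the internal network and to a regular bridge, so only that one service bridges the two.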

113. Describe a secure multi-tenant Docker setup.

In a secure multi-tenant setup:

- Use user namespace remapping so root in containers maps to non-root on host.

- Run rootless Docker for unprivileged tenants.

- Apply strict resource limits and cgroups per tenant.

- Use network isolation (separate networks or VLANs) to prevent cross-tenant access.

- Restrict shared volumes and avoid exposing the Docker socket.

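- Implement role-based access control (RBAC) and audit logs. Docker Engine has no built-in RBAC, so this comes from an authorization plugin or from the orchestration layer.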

- Consider using Kubernetes namespaces or virtual machines for stricter tenant separation if full isolation is required.

114. What are the challenges of using Docker in microservices?

- Complex orchestration: Managing many services requires tools like Kubernetes or Swarm.

- Networking overhead: Service discovery, DNS resolution, and inter-container communication can be complex.

- Resource sprawl: Each service may require its own container, increasing CPU/memory consumption.

- Security: More containers mean a larger attack surface.

- Logging and monitoring: Aggregating and correlating logs/metrics across services becomes critical.

- Data consistency and dependency management: Ensuring consistency across loosely coupled services can be tricky.

115. What is the role of OCI in the Docker ecosystem?

The Open Container Initiative (OCI) standardizes container image formats and runtimes. Docker donated its image format and its runtime (runc) to OCI to ensure interoperability across tools. This means any OCI-compliant image (e.g., a Docker-built image) can be run by any OCI-compliant stack, such as containerd or CRI-O on top of a low-level runtime like runc. OCI ensures consistency, avoids vendor lock-in, and promotes ecosystem-wide compatibility across Docker, Kubernetes, and other platforms.

Conclusion

Answering Docker interview questions effectively requires more than knowing commands. It’s about demonstrating how Docker fits into scalable, secure, and production-ready systems.

Use this guide to deepen your understanding, refine your answers, and strengthen your practical knowledge. Whether you're targeting DevOps, platform engineering, or cloud-native roles, confidently explaining how Docker works will set you apart in any technical interview. Keep learning, stay hands-on, and apply these insights in real projects to stand out.
