

Python Behave for BDD Testing: A Complete Guide

Learn how to use Python Behave for BDD testing. Write human-readable tests, run them efficiently, and follow best practices for seamless collaboration.

Last Modified on: November 9, 2025


Behavior-Driven Development (BDD) in Python is made simple and effective with Python Behave. This framework helps developers create automated tests that reflect real-world user behavior, ensuring that only relevant features are built and verified.

By combining structured test automation with human-readable scenarios, Python Behave helps teams maintain high-quality software while improving collaboration between technical and non-technical stakeholders.

Overview

What Is Python Behave?

Python Behave enables writing automated tests in a clear, structured format that both technical and non-technical team members can follow. It translates behavior specifications into executable Python code, helping ensure that software behaves as intended. By separating feature descriptions from implementation, Behave promotes collaboration between developers, testers, and stakeholders while improving test readability and maintainability.

How to Run Python Behave BDD Tests?

Python Behave lets you execute Behavior-Driven Development tests by translating human-readable scenarios into automated Python scripts. Running these tests efficiently requires setting up a proper environment, organizing feature files, and connecting step definitions to their corresponding scenarios.

- Install Dependencies: Use a virtual environment to isolate project packages. Install Behave and any required libraries like Selenium or Requests.

- Organize Feature Files: Place .feature files in a features directory. Each file describes a feature with scenarios using Gherkin syntax.

- Create Step Definitions: Implement Python functions under features/steps that correspond to the Given-When-Then steps in your feature files.

- Configure Environment Hooks: Use environment.py for setup and teardown actions, such as initializing browsers, connecting to APIs, or cleaning test data.

- Run Tests Locally: Execute behave from the project root to run all scenarios, or use tags to run specific features or scenarios.

- Integrate With CI/CD: Connect Behave tests to pipelines for automated execution across different environments, ensuring consistency and early detection of failures.

- Analyze Test Reports: Review the console output, or generate HTML, JUnit, or JSON reports (for example, with BehaveX), to inspect scenario outcomes, debug failures, and track results over time.

What Are the Common Troubleshooting Techniques for Python Behave?

Python Behave tests can fail due to environment issues, directory misconfigurations, or mismatched step definitions. Understanding common pitfalls and debugging effectively ensures stable BDD test execution.

- Behave Not Found: Activate the virtual environment, verify the installation, and reinstall Behave if it is missing so the command runs successfully.

- Directory Issues: Maintain correct features/steps structure with __init__.py and optional behave.ini for proper discovery.

- Step Definition Errors: Match Gherkin steps exactly, use correct placeholders and imports, and run behave --dry-run to detect undefined steps.

- Debugging Steps: Use print() statements or inspect the context object to track variables and the step execution flow.

- CI/CD Crashes: Set PYTHONUNBUFFERED=1, log outputs, minimize parallel execution, and validate environment.py hooks for stability.

- Reporting: Utilize --junit or --format=json.pretty flags to generate structured, analyzable test outputs and logs.

What’s the Best Way to Manage Reusable Step Definitions?

For large projects, managing step definitions efficiently is essential. The best practice is to modularize your step files by grouping related steps into separate Python modules (for example, login_steps.py, api_steps.py, ui_steps.py). Behave automatically discovers these modules as long as they're inside the steps directory. In line with Cucumber best practices, you should also avoid duplicating steps and use descriptive function names to improve readability and maintainability.
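As a quick guard against duplicated steps, a small audit script can scan the steps directory before Behave runs; Behave raises an AmbiguousStep error when two step definitions of the same type register the same pattern. The sketch below is a hypothetical helper, not part of Behave: find_duplicate_steps() uses a regular expression to find literal @given, @when, @then, and @step decorator strings and compares patterns per decorator keyword, so patterns built dynamically or split across lines are not detected.

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches decorators such as @given('...') or @then("...") with a literal string
DECORATOR = re.compile(r"""@(given|when|then|step)\(\s*(['"])(.*?)\2\s*\)""")


def find_duplicate_steps(steps_dir):
    """Return {(keyword, pattern): [locations]} for patterns defined more than once."""
    seen = defaultdict(list)
    for path in sorted(Path(steps_dir).glob("*.py")):
        lines = path.read_text(encoding="utf-8").splitlines()
        for lineno, line in enumerate(lines, start=1):
            for match in DECORATOR.finditer(line):
                keyword, pattern = match.group(1), match.group(3)
                seen[(keyword, pattern)].append(f"{path.name}:{lineno}")
    # Keep only patterns registered in more than one place
    return {key: locs for key, locs in seen.items() if len(locs) > 1}


if __name__ == "__main__":
    for (keyword, pattern), locations in find_duplicate_steps("features/steps").items():
        print(f"@{keyword}('{pattern}') defined in: {', '.join(locations)}")
```

Running it from the project root prints one line per duplicated pattern, listing each file and line number that defines it.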

What Is Python Behave?

Python Behave is a BDD framework that focuses on bridging the gap between business requirements and code. It uses plain English to define scenarios, making it easy for everyone to understand an application's expected behavior.

Scenarios written in the Gherkin language are then linked to Python step definitions, which implement the actual logic to execute and validate those behaviors.

Key Components of Python Behave

Python Behave relies on several essential components to enable Behavior-Driven Development (BDD) testing in Python. Understanding these elements helps in organizing and executing tests efficiently:

- Features and Feature Files: Contains a Features directory with *.feature files. Scenarios are written in Gherkin syntax, using keywords like Feature, Scenario, Scenario Outline, Given, When, Then, And, and But.

- Step Definitions: Python scripts in the Steps folder map Gherkin scenario steps to executable code. Each function corresponds to a scenario step and implements the logic to validate expected behavior.

- Environment Module and Hooks: The environment.py file contains setup/teardown functions such as before_step(), after_step(), before_scenario(), and after_scenario(). It also manages environment variables or connections to cloud testing platforms.

- Tags: Tags (e.g., @Chrome, @Edge) allow selective execution of tests. They can be applied to features or scenarios to control which tests run in development, staging, or production environments.
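To make the hook lifecycle concrete, the simplified model below lists the order in which Behave calls the main environment.py hooks for one feature. It is an illustration written for this guide, not Behave's runner: hook_order() and its input are hypothetical, and the before_tag/after_tag hooks are left out.

```python
def hook_order(scenarios):
    """Simulate the order of Behave hook calls for a single feature.

    `scenarios` maps scenario names to lists of step texts (hypothetical data).
    """
    calls = ["before_all", "before_feature"]
    for name, steps in scenarios.items():
        calls.append(f"before_scenario:{name}")
        for step in steps:
            # Each step is wrapped by its own before/after hooks
            calls += [f"before_step:{step}", f"after_step:{step}"]
        calls.append(f"after_scenario:{name}")
    calls += ["after_feature", "after_all"]
    return calls


if __name__ == "__main__":
    for call in hook_order({"posts/1": ["Given the API endpoint", "When I make a GET request"]}):
        print(call)
```

Setup that every scenario needs, such as starting a browser, therefore belongs in before_scenario(), and the matching cleanup in after_scenario().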


How to Run Python Behave BDD Tests?

For Python projects, the Behave framework streamlines Behavior Driven Development test execution and promotes better collaboration between developers, testers, and non-technical team members.

Prerequisites:

Ensure that the latest version of Python is installed on your local machine, along with the Python package manager, pip.

Use the commands below to verify both installations:

- Verify Python: Run the command below to check whether Python is installed:

python --version

- Verify pip: Run the command below to check whether pip is installed:

pip --version

After verifying that Python and pip are installed on your local machine, create a Python virtual environment and install the required project dependencies.

Create a Python Virtual Environment:

Follow the steps below to set up your project environment:

- Create Project Directory: Run the command below to create a new project folder:

mkdir behavior_driven_development_python_behave

- Navigate to the Project Directory:

cd behavior_driven_development_python_behave

- Create a Virtual Environment:

python -m venv env

- Activate the Virtual Environment (Windows; on Linux/macOS, run source env/bin/activate instead):

env\Scripts\activate

- Install Dependencies:

pip install behave behavex selenium requests

With the virtual environment ready and dependencies installed, the next step is to organize your project into the standard Behave directory structure.

While Behave is one of the most popular frameworks for Behavior Driven Development in Python, you can also use pytest-bdd, which combines the simplicity of pytest with BDD-style testing. If your team already relies on pytest for automation, pytest-bdd offers a seamless way to reuse existing fixtures and integrate BDD scenarios within the same testing ecosystem.

Project Structure

A Python Behave project requires a specific structure to organize tests:

- There must be a features directory.

- Inside features, there should be a steps directory for step definitions.

- The environment.py file is optional but useful for setup and teardown of BDD tests.

behavior_driven_development_python_behave/
└── features/
    ├── steps/
    ├── *.feature
    └── environment.py

This ensures that Behave can discover your feature files and execute the corresponding step definitions correctly.
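The three layout rules above can be checked with a short script before running behave. check_behave_layout() is a hypothetical helper written for illustration; it searches for .feature files recursively under features/ and deliberately ignores environment.py because that file is optional.

```python
from pathlib import Path


def check_behave_layout(root="."):
    """Return a list of problems with a Behave project layout (empty list means OK)."""
    features = Path(root) / "features"
    if not features.is_dir():
        return [f"missing directory: {features}"]
    problems = []
    if not (features / "steps").is_dir():
        problems.append(f"missing directory: {features / 'steps'}")
    if not any(features.rglob("*.feature")):
        problems.append(f"no .feature files in {features}")
    # environment.py is optional, so its absence is not reported
    return problems


if __name__ == "__main__":
    issues = check_behave_layout()
    print("Layout OK" if not issues else "\n".join(issues))
```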

Writing Feature Files (Gherkin Syntax)

Behave feature files are written using the Gherkin language, which is specifically used in BDD to describe the behavior of an application or software feature in plain English.

Gherkin has a standard syntax and keywords that provide a structured pattern for describing scenarios. Scenario steps are described using Given-When-Then, while And and But are sometimes used to provide extra details where necessary.

In every *.feature file, a software feature is described, and one or more scenarios give concrete examples of how the feature should behave. Each scenario essentially represents a Gherkin test case that can be executed to validate the behavior of the application.

Some Gherkin keywords:

- Feature: Names the software or application feature under test, followed by an optional description.

- Scenario: A concrete example of how the feature should behave. A feature can contain one or more scenarios.

- Scenario Outline: A scenario template with <placeholders> that runs once for each row of its Examples table, enabling parameterized testing.

- Given: Declares the initial state of the software. It describes the system context before a user begins interaction.

- When: Details the trigger action or interaction performed, which changes the current state of the system.

- Then: Specifies the results or outcomes from the previous interaction.

- And / But: Extend a previous Given, When, or Then step to provide additional context or information.

Using Gherkin this way keeps each test case readable in plain English and understandable by both technical and non-technical stakeholders.

API Endpoint Response Implementation:

In the features directory, create an api_status.feature file containing the scenarios for BDD testing of an API response. Python Behave uses the Gherkin language to write scenarios that describe specific behaviors of the software.

Python Behave provides the same approach as Cucumber testing in other languages, keeping scenarios human-readable and easy to understand. While Gherkin describes scenarios, the step definitions are the code implementation of those scenarios.

Feature: Public API - Post Retrieval

  @Chrome
  Scenario Outline:
    Given the API endpoint is "https://jsonplaceholder.typicode.com/<endpoint>"
    When I make a GET request
    Then the response status should be "<status_code>"
    And the response should contain the field "<field>"

    Examples:
      | endpoint  | status_code | field  |
      | posts/1   | 200         | userId |
      | posts/2   | 200         | title  |
      | posts/100 | 200         | body   |
      | posts/101 | 404         | error  |

Code Walkthrough:

- Feature Declaration: The api_status.feature file should begin with the Feature: keyword, followed by the title of the feature to be tested.

- Scenario Tagging: Prefix the Scenario Outline with the @Chrome tag. The hooks in environment.py read this tag to decide which browser session to start (here, Chrome) before the scenario runs.

- Scenario Outline Syntax: The Scenario Outline is written using the Given-When-Then syntax. Given declares the state of the API endpoint, When states the action taken, and Then gives the result, which is the status code in this case.

- Scenario Outline Dataset: Unlike Scenarios, Scenario Outlines come with a dataset for testing. The examples give different endpoints, status codes, and fields to validate that each endpoint behaves as expected.
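Behave turns each row of the Examples table into its own scenario by substituting the <placeholder> values into the steps. The sketch below models that substitution with a regular expression so you can see the concrete steps the outline above produces; expand_outline() is a simplified illustration, not Behave's parser, and uses only two of the four rows.

```python
import re

PLACEHOLDER = re.compile(r"<(\w+)>")


def expand_outline(steps, header, rows):
    """Return one list of concrete step texts per Examples row."""
    scenarios = []
    for row in rows:
        values = dict(zip(header, row))
        # Replace each <name> with the value from the current row
        scenarios.append([PLACEHOLDER.sub(lambda m: values[m.group(1)], step) for step in steps])
    return scenarios


steps = [
    'Given the API endpoint is "https://jsonplaceholder.typicode.com/<endpoint>"',
    "When I make a GET request",
    'Then the response status should be "<status_code>"',
    'And the response should contain the field "<field>"',
]
header = ["endpoint", "status_code", "field"]
rows = [["posts/1", "200", "userId"], ["posts/101", "404", "error"]]

for scenario in expand_outline(steps, header, rows):
    print("\n".join(scenario), end="\n\n")
```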

Creating Step Definitions in Python

After creating *.feature files, you need to create step definitions in Python code. The step definitions are Python functions that are mapped to the Scenarios written in Gherkin syntax. These functions define what each step outlined in the Given-When-Then format should do.

The Python files containing the step definitions live in the steps directory. Each function is decorated with @given, @when, or @then, matching the keyword of the step it implements.

API Endpoint Response Python Step Implementation:

After creating the feature file for the API endpoint response, create a steps file in the features/steps directory with the Python definitions for its steps. The requests library is imported alongside the given, when, and then decorators from the behave library.

import requests
from behave import given, when, then


@given('the API endpoint is "{endpoint}"')
def step_api_endpoint(context, endpoint):
    # Store the full URL so later steps can use it
    context.url = endpoint


@when('I make a GET request')
def step_get_request(context):
    # A timeout keeps a hung endpoint from stalling the whole run
    context.response = requests.get(context.url, timeout=10)


@then('the response status should be "{status_code}"')
def step_response_status(context, status_code):
    # Step parameters arrive as strings, so convert before comparing
    assert context.response.status_code == int(status_code), (
        f'Expected status code {status_code}, but got {context.response.status_code}'
    )


@then('the response should contain the field "{field}"')
def step_response_contains_field(context, field):
    try:
        json_data = context.response.json()
    except ValueError:
        # The body is not valid JSON
        json_data = {}

    # Optional: allow for failed responses that have no field
    if context.response.status_code == 404:
        assert field not in json_data or not json_data.get(field), f"Unexpected field '{field}' found in 404 response"
    else:
        assert field in json_data, f"Field '{field}' not found in response"

Code Walkthrough:

- Given Decorator Mapping: The @given decorator maps the Given step in the api_status.feature file to step_api_endpoint(), which stores the endpoint in the context.url attribute.

- When Step Implementation: The step_get_request() function implements the When step. It sets context.response to the response returned by requests.get(context.url).

- Then and And Assertions: The @then decorator is used for both the Then and And steps because Behave treats an And step as the same type as the step before it, which in this case is Then.

- Response Validation Assertions: step_response_status() asserts that context.response.status_code equals status_code, converted to an integer. In step_response_contains_field(), json_data is set to the parsed body from context.response.json(), or to an empty dictionary if the body is not valid JSON. The function then asserts that the field is present or, for a 404 response, that it is absent or empty.
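Behave passes the same context object to every step in a scenario, which is how context.url set in the Given step reaches the When step. The offline sketch below imitates that flow with types.SimpleNamespace; FakeResponse, fake_get(), and run_scenario() are hypothetical stand-ins for requests and Behave's runner, so it runs without network access and without Behave installed.

```python
from types import SimpleNamespace


class FakeResponse:
    """Hypothetical stand-in for requests.Response."""

    def __init__(self, status_code, payload):
        self.status_code = status_code
        self._payload = payload

    def json(self):
        return self._payload


def fake_get(url):
    # Pretend posts/1 exists and everything else returns 404 with an empty body
    if url.endswith("/posts/1"):
        return FakeResponse(200, {"userId": 1, "id": 1, "title": "t", "body": "b"})
    return FakeResponse(404, {})


def run_scenario(endpoint, status_code, field, get=fake_get):
    context = SimpleNamespace()           # shared state, like Behave's context
    context.url = endpoint                # Given
    context.response = get(context.url)   # When
    assert context.response.status_code == int(status_code)  # Then
    json_data = context.response.json()   # And
    if context.response.status_code == 404:
        assert not json_data.get(field)
    else:
        assert field in json_data
    return context


run_scenario("https://jsonplaceholder.typicode.com/posts/1", "200", "userId")
```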

Using environment.py for Setup and Teardown

Here, you must create an environment.py for setup and teardown, which will contain the code responsible for connecting your local script to a cloud testing platform.

Running tests locally means maintaining multiple browser versions, OS configurations, and devices yourself, which often leads to flaky tests and inconsistent results.

Local setups are slow, resource-heavy, and hard to scale for large test suites. Cloud testing solves these issues, enabling reliable Behave testing at scale across thousands of environments.

This guide uses LambdaTest as the cloud execution platform, which allows you to run Behave test automation at scale across 3000+ browser and OS combinations.

To get started with LambdaTest, follow these steps:

- Get Credentials: Navigate to Account Settings > Password & Security in LambdaTest to get your Username and Access Key.

- Get Capabilities: Use the Automation Capabilities Generator to configure the desired OS, browser, and version for your LambdaTest tests.

- Connect to LambdaTest Grid: Initialize a remote WebDriver session to run your tests on LambdaTest’s cloud infrastructure instead of locally. Connect to the LambdaTest Grid using your credentials for authentication:

context.browser = webdriver.Remote(
    command_executor=f"https://{username}:{authkey}@hub.lambdatest.com/wd/hub",
    options=options
)

This setup ensures that your Behave tests run reliably at scale, without worrying about local environment inconsistencies or resource limitations.

Code Implementation:

The environment.py file allows you to define functions that execute at specific points during your BDD tests with Python Behave. Setup and teardown logic is defined here. You should also import all dependencies required for the project setup and teardown in this file.

from behave.model_core import Status
from selenium import webdriver
from selenium.webdriver.chrome.options import Options as ChromeOptions
from selenium.webdriver.firefox.options import Options as FirefoxOptions
from selenium.webdriver.edge.options import Options as EdgeOptions
import os
import json

# Index of the capability set to use from CONFIG (defaults to the first entry)
INDEX = int(os.environ['INDEX']) if 'INDEX' in os.environ else 0

# In Jenkins, capabilities come from LT_BROWSERS; locally, from config/config.json
if os.environ.get("env") == "jenkins":
    desired_cap_dict = os.environ["LT_BROWSERS"]
    CONFIG = json.loads(desired_cap_dict)
else:
    json_file = "config/config.json"
    with open(json_file) as data_file:
        CONFIG = json.load(data_file)

# LambdaTest credentials
username = os.environ["LT_USERNAME"]
authkey = os.environ["LT_ACCESS_KEY"]


def before_scenario(context, scenario):
    try:
        desired_cap = setup_desired_cap(CONFIG[INDEX])

        # Pick the browser from the scenario's tags (@Chrome, @Firefox, @Edge)
        if 'Chrome' in scenario.tags:
            options = ChromeOptions()
            options.browser_version = desired_cap.get("version", "latest")
            options.platform_name = "Windows 11"
        elif 'Firefox' in scenario.tags:
            options = FirefoxOptions()
            options.browser_version = desired_cap.get("version", "latest")
            options.platform_name = "Windows 10"
        elif 'Edge' in scenario.tags:
            options = EdgeOptions()
            options.browser_version = desired_cap.get("version", "latest")
            options.platform_name = "Windows 8"
        else:
            raise ValueError("Unsupported browser tag")

        # Build and test names shown on the LambdaTest dashboard
        options.set_capability('build', desired_cap.get('build'))
        options.set_capability('name', desired_cap.get('name'))
        print("Browser Options:", options.to_capabilities())

        context.browser = webdriver.Remote(
            command_executor=f"https://{username}:{authkey}@hub.lambdatest.com/wd/hub",
            options=options
        )
    except Exception as e:
        print(f"Error in before_scenario: {str(e)}")
        scenario.skip(reason=f"Failed to initialize browser: {str(e)}")


def after_scenario(context, scenario):
    if hasattr(context, 'browser'):
        try:
            # Report the scenario result to the LambdaTest dashboard
            if scenario.status == Status.failed:
                context.browser.execute_script("lambda-status=failed")
            else:
                context.browser.execute_script("lambda-status=passed")
        except Exception as e:
            print(f"Error setting lambda status: {str(e)}")
        finally:
            context.browser.quit()


def setup_desired_cap(desired_cap):
    # Copy the capabilities, replacing any non-numeric 'connect' value with None
    cleaned_cap = {}
    for key, value in desired_cap.items():
        if key == 'connect':
            if not isinstance(value, (int, float)) or value is None:
                cleaned_cap[key] = None
            else:
                cleaned_cap[key] = value
        else:
            cleaned_cap[key] = value
    return cleaned_cap

Code Walkthrough:

- INDEX Initialization: The value of the constant INDEX is read from the INDEX environment variable; if it is not set, INDEX defaults to 0.

- Browser Capabilities Loading: The if/else statement that follows allows us to set browser capabilities. Capabilities are loaded from LT_BROWSERS if in a CI/CD pipeline, else from the local config/config.json file.

- Credentials Setup: The username and authkey variables are set to the LT_USERNAME and LT_ACCESS_KEY environment variables.

- Before Scenario Hook: A hook function, before_scenario(), is created to run before each scenario is tested.

- Desired Capabilities Setup: desired_cap, which holds the browser capabilities, is built by passing the selected config entry to setup_desired_cap(CONFIG[INDEX]).

- Browser Routing: Conditional statements route the browser based on tags in feature files, such as @Chrome, @Firefox, and @Edge.

- Selenium Options Configuration: Each Selenium Options object is set within the conditional code blocks.

- Test Metadata Setup: The name and build for each test on the LambdaTest automation dashboard are set using the options.set_capability() method.

- Remote Browser Launch: A remote browser is launched on the LambdaTest platform in the variable context.browser using the Selenium webdriver.Remote class.

- After Scenario Hook: A hook function, after_scenario(), is created to run after each scenario is tested.

- Failed Test Handling: If a scenario fails, the test status on the LambdaTest dashboard is set to failed using context.browser.execute_script("lambda-status=failed").

- Passed Test Handling: If a scenario passes, the test status on LambdaTest is set to “passed”.

- Set up Desired Capabilities Function: The function setup_desired_cap() returns a cleaned copy of the LambdaTest capabilities in which any non-numeric connect value is replaced with None.
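The article does not show config/config.json. Judging from how environment.py reads it (CONFIG[INDEX], then .get("version"), .get("build"), and .get("name")), it is presumably a JSON list of capability objects. The sketch below assumes that shape, with invented build and test names, to show how INDEX selects an entry and how setup_desired_cap() treats the connect key.

```python
import json
import os

# Hypothetical contents of config/config.json: a list of capability sets
SAMPLE_CONFIG = """
[
  {"build": "Behave API Demo", "name": "Chrome run", "version": "latest"},
  {"build": "Behave API Demo", "name": "Firefox run", "version": "latest", "connect": "none"}
]
"""


def setup_desired_cap(desired_cap):
    # Same behavior as in environment.py: non-numeric 'connect' values become None
    cleaned_cap = {}
    for key, value in desired_cap.items():
        if key == "connect" and not isinstance(value, (int, float)):
            cleaned_cap[key] = None
        else:
            cleaned_cap[key] = value
    return cleaned_cap


CONFIG = json.loads(SAMPLE_CONFIG)
INDEX = int(os.environ.get("INDEX", 0))  # same default as environment.py
desired_cap = setup_desired_cap(CONFIG[INDEX])
print(desired_cap)
```

Setting INDEX=1 before running behave would select the second entry, which is how a pipeline can reuse one config file for several browser runs.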

Running Python Behave Tests

To run Python behave tests, ensure that you’re in the root directory of your project (the one containing the `features` directory) and run the following command in the terminal:

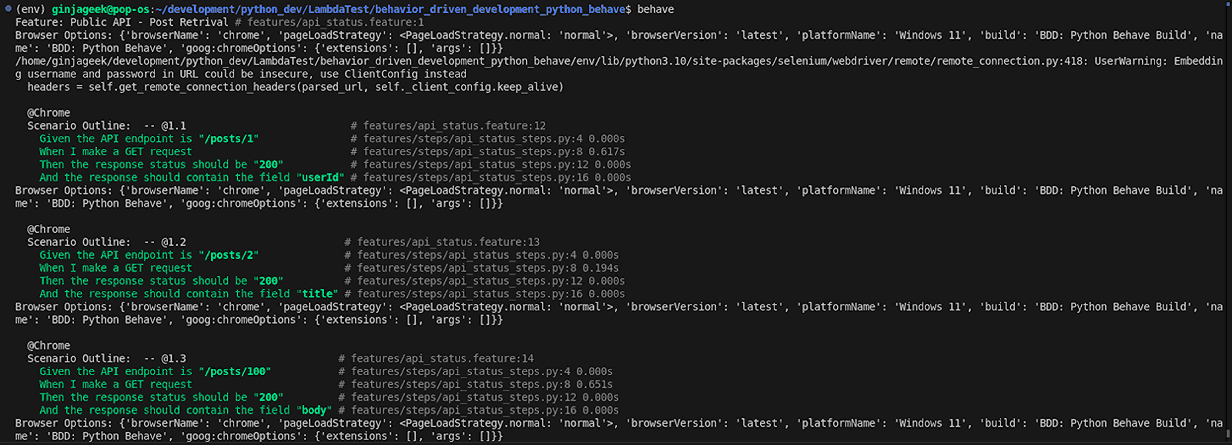

behave

Output on the local terminal:

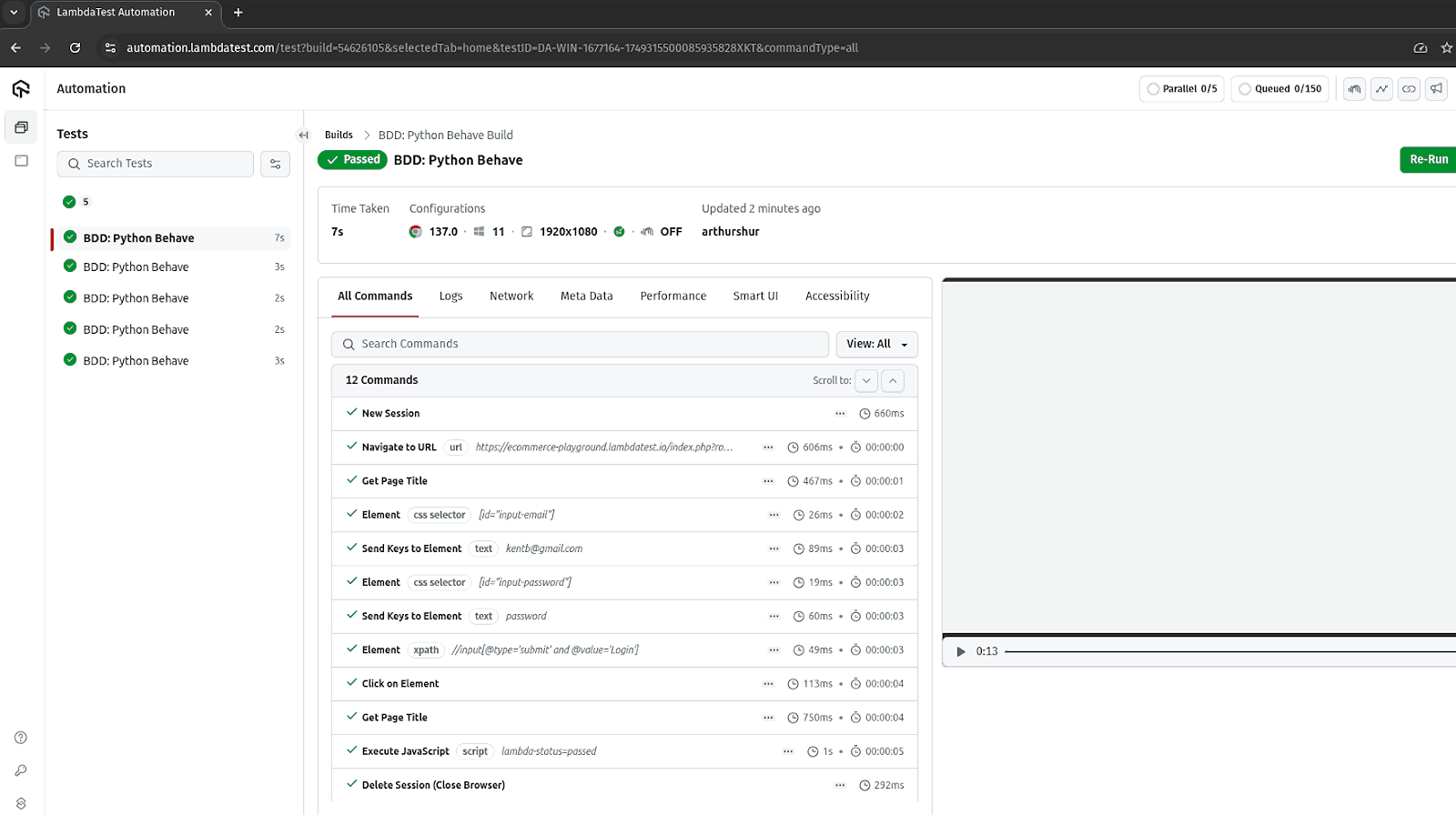

Result on the LambdaTest automation dashboard:

To learn how to configure and run your automation testing scripts on LambdaTest, follow this support documentation on the Python framework Behave.

Running Specific Feature Files in Python Behave:

To run specific feature files, in your terminal, specify the path to the exact feature file you want executed. To execute only the api_status.feature file, run the command below in the terminal:

behave features/api_status.feature

Running Specific Test Scenarios With Tags:

Tags allow you to run only specific scenarios that have been tagged. For example, you can run a scenario with the @Chrome tag using the command:

behave --tags=@Chrome

Running Python Behave in Parallel:

To speed up Behave test execution, run your tests in parallel. Behave does not provide parallel execution out of the box, so you need BehaveX, a wrapper framework around Behave that is notably used for running parallel test sessions, among other things.

To execute your Python Behave tests using 4 parallel processes, follow the command below:

behavex --parallel-processes 4

The --parallel-processes flag tells BehaveX to execute the tests in parallel, and the number 4 sets how many parallel processes to use.

If parallel execution raises an exception because a temporary file path exceeds 108 bytes, point TMPDIR to a shorter directory, as in the command below:

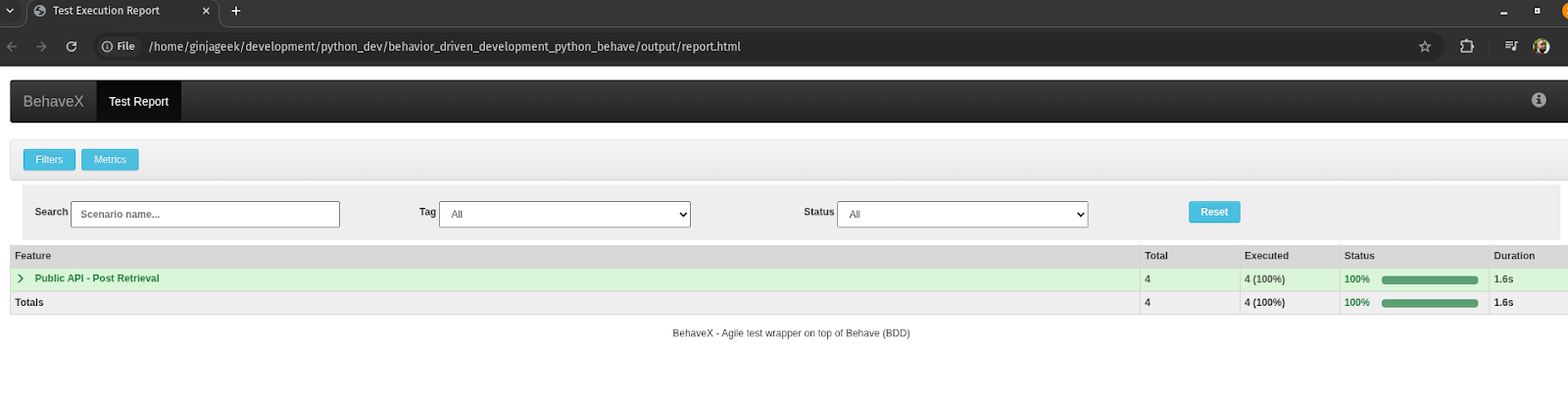

TMPDIR=/tmp behavex --parallel-processes 4

How to Generate a Test Report in Python Behave?

Another capability of BehaveX is report generation. BehaveX produces reports in three formats: HTML, JUnit, and JSON. No extra step is needed: running your tests with the behavex command generates the reports.

Run the following commands to generate, locate and view the report.

- Generate a report in Python Behave: Run the following command to generate a report:

behavex

- Locate the report: By default, the HTML report is saved at:

/path/to/behavior_driven_development_python_behave/output/report.html

- Customize the output folder: Run the following command to save the reports to a folder of your choice:

TMPDIR=/tmp behavex --output-folder my_reports

Use the --output-folder flag followed by a folder name, and the reports will be created in that folder, in this case my_reports.
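If you also want a quick pass/fail summary in a CI log, JUnit XML files can be totaled with the standard library. The sketch below assumes JUnit-style files whose testsuite elements carry tests, failures, errors, and skipped attributes, as written by Behave's --junit option (by default into a reports/ directory); summarize_junit() is a hypothetical helper, and the directory is a parameter because BehaveX may store its JUnit output elsewhere.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def summarize_junit(report_dir="reports"):
    """Sum test counts across all JUnit XML files in report_dir."""
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    for xml_file in sorted(Path(report_dir).glob("*.xml")):
        root = ET.parse(xml_file).getroot()
        # A file may hold a single <testsuite> or a <testsuites> wrapper
        suites = [root] if root.tag == "testsuite" else root.iter("testsuite")
        for suite in suites:
            for key in totals:
                totals[key] += int(suite.get(key, 0))  # missing attribute counts as 0
    return totals


if __name__ == "__main__":
    print(summarize_junit())
```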

Troubleshooting Python Behave

When writing and troubleshooting Python Behave tests, it’s helpful to follow practices inspired by Cucumber, another BDD framework.

Many Cucumber best practices, such as using clear Gherkin syntax, organizing step definitions logically, and maintaining consistent feature file structures, also apply to Python Behave, ensuring reliable, maintainable, and readable BDD tests.

Some of the common issues are mentioned below:

Problem: Behave command not recognized

This issue often occurs when the behave executable is not installed or is not on your PATH.

Solution:

- Activate Virtual Environment: Activate your project’s virtual environment.

- Verify Installation: Verify that Behave is installed by running behave --version.

- Check Executable File: Verify the Behave executable file in your virtual environment.

- For Windows: Navigate to C:\Path\To\Your\behave_project\env\Scripts\ (replace env with your env name).

- For Linux/macOS: Go to $HOME/Path/To/Your/behave_project/env/bin/ (replace env with your env name).

- Reinstall Behave: Try the command below if the Behave executable is not found:

pip install behave --upgrade --force-reinstall

The most common causes of the Behave command not recognized issue are a missing or broken Behave installation and a Python virtual environment that has not been activated.
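The same checks can also be run from Python with the interpreter you use for the project. behave_install_report() is a hypothetical helper: sys.prefix differs from sys.base_prefix when the interpreter belongs to a virtual environment, and shutil.which() returns None when no behave executable is found on the PATH.

```python
import shutil
import sys


def behave_install_report():
    """Collect hints for the 'behave command not recognized' problem."""
    in_venv = sys.prefix != sys.base_prefix  # True when this interpreter belongs to a venv
    executable = shutil.which("behave")      # None if behave is not on PATH
    return {"in_virtualenv": in_venv, "behave_executable": executable}


if __name__ == "__main__":
    report = behave_install_report()
    print(report)
    if not report["in_virtualenv"]:
        print("Activate the project's virtual environment first.")
    if report["behave_executable"] is None:
        print("behave is not on PATH; try: pip install behave --upgrade --force-reinstall")
```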

Problem: Directory Structure Issues

Directory structure issues arise when a project does not strictly follow Behave's expected folder layout.

Solution:

- Ensure Root Directory: Before running the behave command, ensure you’re in the root directory of your project. The root directory should be the one that contains the features folder.

- Add __init__.py Files: Ensure that your Python packages contain an __init__.py file so their modules can be imported.

- Create behave.ini File: Create a behave.ini file in your project’s root directory and define the paths option under a [behave] section:

[behave]
paths=features

Behave follows a strict folder structure; hence, any deviation from the expected structure results in directory structure issues.
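The [behave] section header matters because Behave reads its options from that section of the INI file. The sketch below uses Python's standard configparser, which follows the same INI section syntax, to show that the corrected file yields paths = features while a file containing only paths=features is rejected by the parser; read_behave_paths() is a hypothetical helper, not part of Behave.

```python
import configparser

VALID_INI = "[behave]\npaths=features\n"
INVALID_INI = "paths=features\n"  # no [behave] section header


def read_behave_paths(text):
    """Return the 'paths' option from the [behave] section of an INI string."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser.get("behave", "paths")


print(read_behave_paths(VALID_INI))  # features

try:
    read_behave_paths(INVALID_INI)
except configparser.MissingSectionHeaderError as err:
    print("Invalid behave.ini:", err)
```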

Problem: Step Definition Errors

Step definition errors occur when Behave cannot map feature file steps to their corresponding Python implementations. When this happens, Behave usually reports the affected steps as undefined in the terminal output.

Solution:

- Exact String Matching: Use an exact matching string from the feature file steps when creating a Python step definition. Ensure there are no extra spaces or quotes that could cause errors.

- Feature File Example: Then the response status should be "200"

- Step Definition Example: Define a Python function that matches the step text from your feature file using the appropriate decorator, such as @then, @given, or @when. This connects the Gherkin step to executable code.

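from behave import then

@then('the response status should be "{status_code}"')
def step_status_code(context, status_code):
    # The quotes in the pattern match the quotes in the step, so status_code is "200"
    assert context.response.status_code == int(status_code)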

Missing import statements, undefined steps, and syntax errors are the usual suspects for step definition errors encountered when running Behave.
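Behave's default step matcher is based on the parse library, where each {name} field captures text from the step. The regex-based sketch below is a simplified stand-in for that matcher (it ignores type specifiers such as {count:d}), written to show why the quotes belong in the pattern: with them, status_code is the string 200; without them, it captures "200" including the quote characters, and int(status_code) would then fail.

```python
import re

# {name} fields in a step pattern, as used by parse-style matching
FIELD = re.compile(r"\{(\w+)\}")


def compile_step_pattern(pattern):
    """Turn a parse-style pattern into a regex with one named group per {field}."""
    parts, pos = [], 0
    for match in FIELD.finditer(pattern):
        parts.append(re.escape(pattern[pos:match.start()]))  # literal text
        parts.append(f"(?P<{match.group(1)}>.+?)")           # captured field
        pos = match.end()
    parts.append(re.escape(pattern[pos:]))
    return re.compile("".join(parts))


def match_step(pattern, step_text):
    """Return the captured fields as a dict, or None if the step does not match."""
    match = compile_step_pattern(pattern).fullmatch(step_text)
    return match.groupdict() if match else None


step = 'the response status should be "200"'
print(match_step('the response status should be "{status_code}"', step))  # {'status_code': '200'}
print(match_step('the response status should be {status_code}', step))    # {'status_code': '"200"'}
```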

Problem: Crashes and Unexpected Behavior

Crashes and unexpected behavior often occur in CI/CD pipelines, which typically run on Unix-based agents whose environment can differ from your local machine.

Solution:

- Match Steps: Verify that steps in the *.feature files exactly match those in the step definition functions.

- Environment File Placement: Ensure that the environment.py file is placed in the features folder and that configurations and hooks are valid.

- Check Import Paths: Cross-check that import paths for modules and packages are correct to avoid import errors.

- Disable I/O Buffering: Disable I/O buffering by setting the environment variable PYTHONUNBUFFERED=1 in the config file or as a prefix before the behave command.

- Log Output: Log output results to a file like so: behave > behave_output.log 2>&1

- No-Capture Flag Caution: Avoid using the --no-capture flag in CI/CD pipelines unless necessary, as it may cause deadlocks in stdout.

- Parallel Execution Caution: Keep parallel execution minimal, as heavy I/O operations can trigger file descriptor exhaustion.

- Structured Output: Use the --junit or --format=json.pretty flags to produce structured output that CI tools can parse reliably.

Applying these checks reduces the chance of crashes and unexpected behavior when executing BDD tests with Python Behave in CI/CD pipelines.
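In a CI job, the unbuffered-output and log-file advice above can be combined in a small wrapper script. run_logged() is a hypothetical helper that runs a command with PYTHONUNBUFFERED=1 and writes stdout and stderr to one log file, roughly the Python equivalent of PYTHONUNBUFFERED=1 behave > behave_output.log 2>&1.

```python
import os
import subprocess


def run_logged(command, log_path="behave_output.log"):
    """Run a command with unbuffered Python output and save stdout+stderr to a log file.

    Returns the command's exit code so a CI script can pass it on.
    """
    env = dict(os.environ, PYTHONUNBUFFERED="1")
    with open(log_path, "w", encoding="utf-8") as log:
        # stderr is merged into stdout, like 2>&1 in a shell
        result = subprocess.run(command, stdout=log, stderr=subprocess.STDOUT, env=env)
    return result.returncode
```

For example, a CI script could call sys.exit(run_logged(["behave", "--junit"])) so the job fails whenever Behave reports failing scenarios.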

Conclusion

Behavior-driven development, as a software development methodology, is increasingly being adopted by developers and organizations due to its focus on communication among technical and non-technical members on a project. It helps everyone on the team understand and outline the desired behavior of an application or feature using human-readable language.

For Python-based projects, a recommended framework for executing BDD is Behave. A Behave project structure is easy to set up and get started with. It uses the Gherkin language for writing software use case scenarios in plain English with its syntax and keywords. Step definitions in Python files map each Gherkin step to executable Python code.

When the behave command is run, it discovers the feature files in the features directory along with their step definitions and executes them. For additional capabilities, such as report generation and parallel execution, BehaveX is used.

