A Guide to Effective AI UI Testing

Learn how AI UI testing boosts reliability, reduces maintenance, and accelerates test automation with tools like KaneAI, SmartUI, and HyperExecute.

Last Modified on: October 17, 2025


Traditional UI testing is notoriously fragile. Tests break with minor UI changes, maintenance eats up more time than writing new tests, and flaky tests erode team confidence in your test suite.

And it's only getting worse as applications grow more complex and release cycles accelerate.

AI-powered UI testing can help turn your automation from brittle and high-maintenance to resilient and self-healing. According to recent surveys, 60% of DevOps professionals identify testing as one of the key areas for AI implementation.

So, if you want to try AI-native UI testing in your organization, this article is for you. I'll dig into AI-native UI testing approaches, break down the techniques and tools that make your automated tests more intelligent, and offer straightforward methods for making your workflow more robust, efficient, and ready for whatever comes next.

Overview

AI UI Testing uses artificial intelligence to make UI automation more resilient, adaptive, and self-healing. Unlike traditional tests, AI-powered tests can automatically adjust to UI changes, reducing maintenance and improving reliability.

What Are the Key Aspects of AI UI Testing:

- Self-Healing Automation: Dynamically adapts locators when UI elements change.

- Computer Vision: Detects visual regressions across browsers and devices.

- Natural Language Processing (NLP): Enables test creation through plain English instructions.

- Predictive Analytics: Forecasts test failures to prioritize maintenance.

Which Tools Are Popular for AI UI Testing:

- KaneAI: AI-native test agent converting instructions into scripts.

- SmartUI: Visual regression testing across browsers and devices.

- HyperExecute: AI-driven test orchestration for faster execution.

What Are the Benefits of AI UI Testing:

- Faster testing cycles

- Reduced maintenance effort

- Higher accuracy and coverage

- Early defect detection

What Does the Future Hold for AI UI Testing:

Autonomous AI agents may perform exploratory testing and adaptive workflows with minimal human intervention.

What Does AI Bring to UI Testing Workflows?

AI adds several new capabilities to traditional UI testing and validation workflows. For instance, machine learning algorithms can analyze application behavior patterns, predict potential failure points, and automatically "heal" tests when the interface is updated.

Then there's computer vision. These systems examine screenshots pixel-by-pixel to catch visual regressions that selector-based checks might miss. Add natural language processing, and you can create tests through conversational instructions instead of complex scripting.

Together, these capabilities mean you can often write the prompt for a test once and rarely need to touch it again, even as your app changes.

The takeaway, though, is that you still need human oversight. AI is powerful, but you have to balance its capabilities with good judgment to keep testing quality high.

How Do You Implement AI in UI Testing?

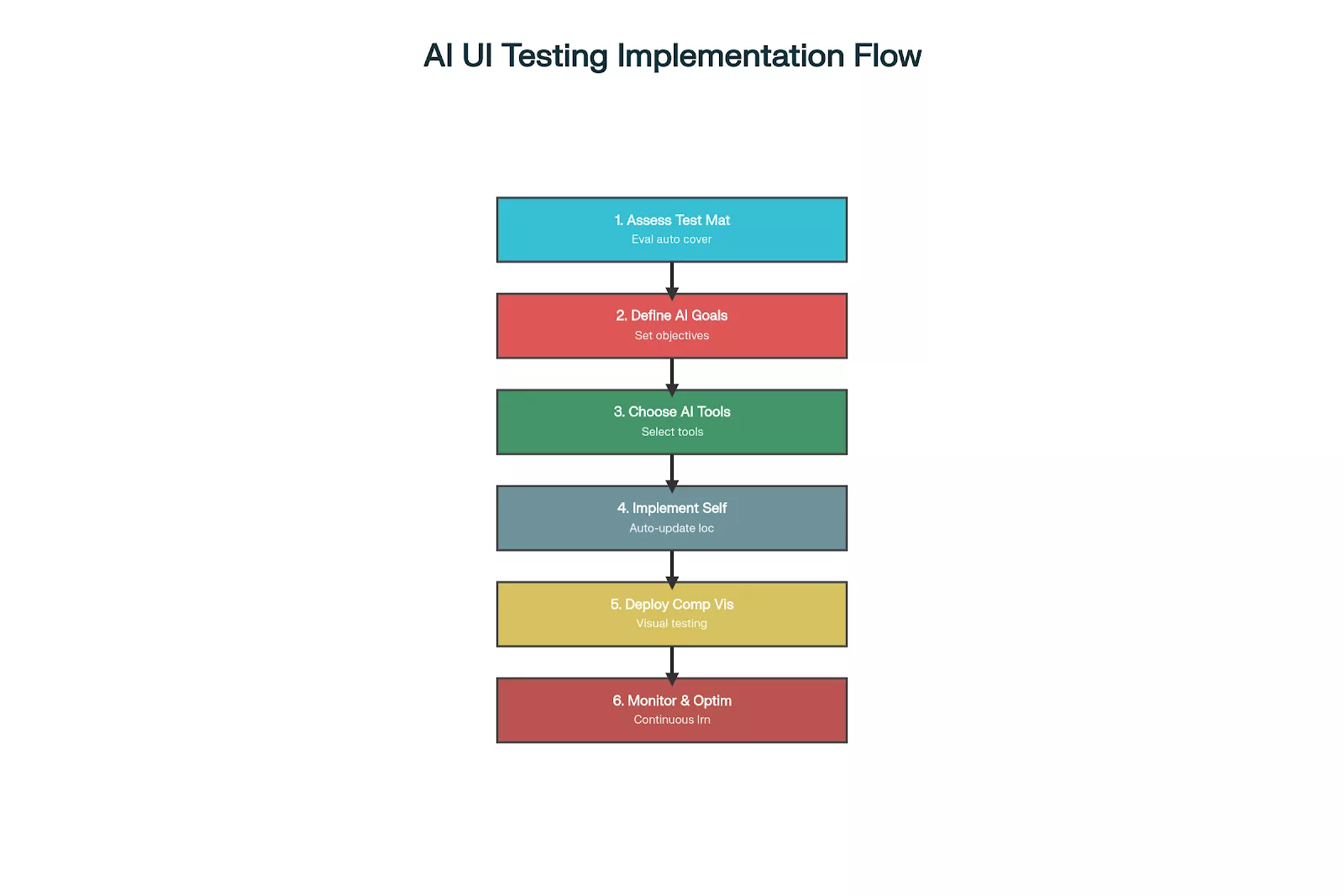

To actually implement AI in your UI testing, you need systematic planning and gradual adoption. So here’s a simple step-by-step guide to the implementation.

Step 1: Assess Current Testing Maturity

Begin by taking a close look at where your testing automation stands today.

You'll want to get a sense of how frequently your tests actually run, how much time you're spending keeping them up to date, and what your failure rates look like. Pay particular attention to how much effort goes into fixing tests that break after you make changes to your application. This is often where teams lose the most time.

As you dig into this, you'll start to notice patterns around which types of tests demand the most hands-on attention. This understanding becomes your roadmap, helping you figure out which AI tools will actually solve your problems and where you should focus your implementation efforts first.
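
To make that baseline concrete, here is a minimal sketch of the kind of summary you might compute from your own run history. The `RunRecord` shape, the failure cause labels, and the sample numbers are all assumptions for illustration; your CI system will expose this data in its own format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunRecord:
    """One execution of one test (hypothetical record shape)."""
    test_name: str
    passed: bool
    failure_cause: Optional[str] = None  # e.g. "locator", "assertion", "timeout"
    fix_minutes: float = 0.0             # time spent repairing the test afterwards


def baseline_report(runs):
    """Summarize the failure rate and the repair time per failure cause."""
    failures = [r for r in runs if not r.passed]
    minutes_by_cause = {}
    for r in failures:
        cause = r.failure_cause or "unknown"
        minutes_by_cause[cause] = minutes_by_cause.get(cause, 0.0) + r.fix_minutes
    return {
        "total_runs": len(runs),
        "failure_rate": len(failures) / len(runs) if runs else 0.0,
        "fix_minutes_by_cause": minutes_by_cause,
    }


# Made-up sample data, only to show the shape of the report.
runs = [
    RunRecord("login", True),
    RunRecord("login", False, "locator", 30),
    RunRecord("checkout", False, "locator", 15),
    RunRecord("search", False, "assertion", 10),
]
report = baseline_report(runs)
print(report["failure_rate"], report["fix_minutes_by_cause"])
```

If most of the repair time lands under a cause like "locator", that is a hint that self-healing is where you would see the quickest return.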

Step 2: Define Clear AI Testing Goals

Once you understand your baseline, it's time to set some concrete goals for what you want AI to accomplish. Think in terms of specific, measurable outcomes.

For example, you may want to:

- Cut test maintenance time by 70%

- Reduce false positives by 50%

- Increase test coverage to 95%+ consistently

- Include 1000 more devices in your tests

Having these clear targets serves two important purposes. First, it gives you a way to objectively assess whether the AI tools are delivering real value. Second, it provides the concrete evidence you'll need when explaining the investment to stakeholders who control the budget.
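
One way to keep those targets honest is to record them next to your baseline and check progress against them. The sketch below assumes a hypothetical `Goal` type and made-up baseline numbers (40 maintenance hours per sprint, 80% coverage); only the 70% and 95% targets come from the list above.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    """A measurable target (hypothetical structure for illustration)."""
    name: str
    baseline: float
    target: float
    lower_is_better: bool = True

    def met(self, current: float) -> bool:
        """True once the current value has reached the target."""
        if self.lower_is_better:
            return current <= self.target
        return current >= self.target

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far, clamped to [0, 1]."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return max(0.0, min(1.0, (current - self.baseline) / gap))


# Cutting 40 hours by 70% means a target of 12 hours.
maintenance = Goal("maintenance hours per sprint", baseline=40, target=12)
coverage = Goal("test coverage %", baseline=80, target=95, lower_is_better=False)

print(maintenance.met(26), maintenance.progress(26))
print(coverage.met(96), coverage.progress(96))
```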

Step 3: Choose the Right AI Testing Tools

When it comes to selecting tools, focus on what your team actually needs rather than getting swept up in marketing promises. Think through the practical realities:

- How complicated will it be to integrate this tool with your existing setup?

- Does your team have the technical skills to work with it effectively?

- Will it play nicely with your application's technology stack?

The best way to answer these questions is to run pilot programs with a few promising options. This hands-on testing lets you see whether a tool actually delivers on its promises in your specific environment before you commit significant resources to rolling it out across your entire testing operation.

Step 4: Start with Self-Healing Automation

Self-healing automation is a great place to start your AI implementation because it builds directly on the tests you already have. The idea is to add intelligence that helps your tests adapt when the application changes, rather than breaking immediately.

Here's how to make it work:

- Configure your systems to capture multiple attributes for each UI element when you're creating tests. This gives the AI multiple ways to recognize an element if one method fails.

- Then turn on automatic locator updating so that when the primary way of finding an element stops working, the system can try alternative approaches.

- As this runs, keep an eye on how often the healing actually succeeds and use that information to fine-tune the algorithms based on the specific patterns in your application.
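
The three steps above can be sketched in a few lines. This is a simplified illustration, not how any particular tool implements healing: the page is modeled as a plain list of attribute dictionaries instead of a real DOM, and `HealingLocator` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class HealingLocator:
    """Ordered (attribute, value) pairs captured at test-creation time.

    The first pair is the primary locator; the rest are fallbacks.
    """
    candidates: list
    heal_attempts: int = 0
    heal_successes: int = 0

    def find(self, page):
        """Return the matching element, trying fallbacks if the primary fails."""
        for index, (attr, value) in enumerate(self.candidates):
            matches = [el for el in page if el.get(attr) == value]
            if len(matches) == 1:  # accept only an unambiguous match
                if index > 0:
                    self.heal_successes += 1
                    # Promote the locator that worked so it is tried first next time.
                    self.candidates.insert(0, self.candidates.pop(index))
                return matches[0]
            if index == 0:
                self.heal_attempts += 1  # primary locator no longer works
        return None


locator = HealingLocator([
    ("id", "submit-btn"),
    ("data-testid", "checkout-submit"),
    ("text", "Place order"),
])

# After a release, the id changed but the other attributes did not.
new_page = [
    {"id": "btn-4f2a", "data-testid": "checkout-submit", "text": "Place order"},
    {"id": "cancel", "text": "Cancel"},
]
element = locator.find(new_page)
print(element["id"], locator.heal_attempts, locator.heal_successes)
```

The two counters give you the healing success rate mentioned in the last step: successes divided by attempts.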

Step 5: Deploy Computer Vision Testing

Once you have self-healing in place, the next step is to layer in visual validation alongside your functional tests.

Start by capturing baseline screenshots when you run your tests for the first time. These become your reference points. Then you'll need to set tolerance levels that define what counts as an acceptable visual variation.

Think of this as teaching the system the difference between a meaningful change (like a button that's moved to a different location) and trivial rendering differences (like a font that's one pixel off due to browser quirks).

After you've configured these settings, integrate the visual testing into your continuous integration pipelines so it happens automatically with every build.
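
Here is a rough sketch of what two tolerance settings can look like in practice. It assumes screenshots are already decoded into equal-sized grids of grayscale values (0 to 255); the function names and default thresholds are illustrative, and real tools use more sophisticated comparisons.

```python
def diff_ratio(baseline, candidate, pixel_tolerance=8):
    """Fraction of pixels whose value differs by more than pixel_tolerance."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("screenshots must have identical dimensions")
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > pixel_tolerance
    )
    return changed / total if total else 0.0


def is_regression(baseline, candidate, pixel_tolerance=8, max_changed_ratio=0.01):
    """Flag a regression only when enough pixels changed by a visible amount."""
    return diff_ratio(baseline, candidate, pixel_tolerance) > max_changed_ratio


baseline = [[200] * 10 for _ in range(10)]

# A one-pixel rendering quirk: slightly different shade, within tolerance.
quirk = [row[:] for row in baseline]
quirk[0][0] = 203

# A meaningful change: a 2x2 dark block appears where there was none.
moved = [row[:] for row in baseline]
for r in (4, 5):
    for c in (4, 5):
        moved[r][c] = 0

print(is_regression(baseline, quirk), is_regression(baseline, moved))
```

The per-pixel tolerance absorbs small shade differences, while the changed-pixel ratio decides how much of the screen has to change before the build is flagged.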

Step 6: Monitor and Optimize Performance

The final step is to establish ongoing monitoring so you can see whether your AI testing investment is actually paying off. Keep an eye on key metrics like how often the self-healing successfully adapts to changes, how accurate your visual comparisons are, and whether your overall test execution time is improving.

But don't just look at the numbers. Talk to your team about how well the tools are working in practice and whether they're actually making the workflow easier. Use all this information to continuously refine your AI models, adjusting them as your application evolves and new testing patterns emerge.

What Tools Are Available for AI UI Testing?

Several platforms offer AI-enhanced UI testing capabilities, each with distinct strengths and implementation approaches.

1. SmartUI: Visual Regression Testing Platforms

SmartUI provides pixel-perfect visual regression testing integrated with the HyperExecute test orchestration platform.

The system performs automated screenshot comparisons across multiple browsers and devices simultaneously. SmartUI captures baseline images during initial test execution, then compares subsequent runs against these references. The platform identifies visual discrepancies while ignoring minor browser-specific rendering differences.

SmartUI integrates with popular frameworks, including Selenium, Cypress, and Playwright. Teams can configure tolerance levels for visual comparisons, allowing flexibility for acceptable variations while flagging significant changes. The system supports cross-browser testing across 10,000+ browser and real-device combinations, helping keep visual presentation consistent across different environments.

2. KaneAI: AI-Native Testing Agents

KaneAI is LambdaTest's GenAI-native testing agent that enables test creation through natural language instructions.

The platform allows teams to describe testing requirements conversationally, then automatically generates executable test scripts across multiple programming languages and frameworks.

KaneAI features include intelligent test generation from plain English descriptions, automatic test planning based on high-level objectives, and multi-language code export supporting Java, Python, JavaScript, and other popular languages. The system handles complex testing scenarios, including API validation alongside UI flows, database testing integration, and accessibility compliance checking.

The platform's test authoring capabilities extend beyond simple automation. KaneAI records user interactions in real-time, converting them into reusable test components. Teams can approve AI-generated test plans before execution, ensuring alignment with testing objectives. The system adapts to different environments automatically, customizing tests for development, staging, and production deployments.

3. HyperExecute: Test Orchestration Platforms

HyperExecute functions as an AI-native test orchestration platform that accelerates test execution through intelligent distribution and parallel processing.

The system replaces traditional hub-and-node architectures with advanced orchestration techniques that eliminate network latency and optimize resource utilization.

HyperExecute achieves up to 70% faster execution compared to traditional cloud grids by merging all testing components into unified execution environments. The platform automatically analyzes test histories, reorders execution sequences to surface failures earlier, and distributes tests optimally across available resources.
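
To illustrate the general idea of reordering tests so failures surface earlier, here is a minimal sketch. It is not HyperExecute's algorithm, which isn't described here; it simply sorts tests by their recent failure rate, and the function name, window size, and sample history are assumptions.

```python
def order_by_failure_history(history, recent_window=20):
    """Return test names sorted so the most failure-prone run first.

    history maps a test name to a list of booleans (True = passed), oldest first.
    """
    def recent_failure_rate(name):
        recent = history[name][-recent_window:]
        # Tests with no history are treated as highest risk and run first.
        return recent.count(False) / len(recent) if recent else 1.0

    # Ties are broken alphabetically so the order is deterministic.
    return sorted(history, key=lambda name: (-recent_failure_rate(name), name))


history = {
    "checkout": [True, False, False, True],
    "login": [True, True, True, True],
    "search": [True, True, False, True],
    "new_feature": [],
}
print(order_by_failure_history(history))
```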

The system provides comprehensive reporting through AI-generated build analysis, eliminating manual data gathering from multiple sources. HyperExecute integrates with over 120 tools supporting continuous integration pipelines, project management systems, and codeless automation platforms.

Teams can execute tests across Windows, macOS, and Linux containers with built-in compatibility for Selenium, Cypress, Playwright, and Puppeteer frameworks.

What Are Some Advanced AI UI Testing Techniques?

Beyond basic automation, AI enables sophisticated testing approaches that address complex validation scenarios.

1. Adaptive Element Recognition

Computer vision systems identify UI elements through visual characteristics rather than code-based selectors. Instead of relying on brittle element IDs or CSS classes, these systems analyze what elements actually look like—their shapes, colors, text content, and where they sit relative to other elements.

This approach maintains accurate identification even when the underlying DOM structure changes, which makes visual recognition particularly valuable for testing legacy applications, virtualized environments like Citrix, or applications where identifiers change frequently.
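
As a simplified illustration of finding an element by appearance, the sketch below slides a small reference image over a screenshot and picks the position with the lowest mean absolute difference. This basic template matching is a stand-in for the richer models real tools use, and it assumes both images are grayscale grids; `locate_template` and its threshold are hypothetical.

```python
def locate_template(screen, template, max_mean_diff=10.0):
    """Return (row, col) of the best match of template in screen, or None."""
    th, tw = len(template), len(template[0])
    sh, sw = len(screen), len(screen[0])
    best_pos, best_score = None, float("inf")
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            total = sum(
                abs(screen[r + i][c + j] - template[i][j])
                for i in range(th)
                for j in range(tw)
            )
            score = total / (th * tw)  # mean absolute difference per pixel
            if score < best_score:
                best_pos, best_score = (r, c), score
    # Reject weak matches so a missing element is reported as missing.
    return best_pos if best_score <= max_mean_diff else None


button = [
    [10, 200, 10],
    [200, 10, 200],
]

# A white screen with the button drawn at row 3, column 4, slightly brighter
# than the reference to mimic rendering differences.
screen = [[255] * 8 for _ in range(6)]
for i, row in enumerate(button):
    for j, value in enumerate(row):
        screen[3 + i][4 + j] = value + 2

print(locate_template(screen, button))
```

Because the lookup never touches an ID or CSS class, it keeps working when the DOM changes, as long as the element still looks roughly the same.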

2. Predictive Test Maintenance

Machine learning algorithms analyze test execution patterns, code changes, and historical failure data to predict which tests require updates before they actually break. These predictive models can identify tests most likely to fail after application changes, which allows teams to prioritize maintenance efforts where they'll have the biggest impact.

Instead of waiting for tests to break and then scrambling to fix them, these systems suggest specific test modifications ahead of time, cutting down on reactive debugging work.
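
A trained model is beyond the scope of a short example, but a hand-weighted heuristic shows the shape of the idea: combine how much of a test's surface a change touches with how often that test has failed before. Everything here is an assumption for illustration, including the `touches` mapping, the file names, and the 0.4 weight.

```python
def maintenance_risk(tests, changed_files, history_weight=0.4):
    """Rank tests by a simple risk score, highest first.

    tests maps a test name to {"touches": set of source files,
    "failure_rate": float in [0, 1]}.
    """
    changed = set(changed_files)
    scores = {}
    for name, info in tests.items():
        touches = info["touches"]
        overlap = len(touches & changed) / len(touches) if touches else 0.0
        score = (1 - history_weight) * overlap + history_weight * info["failure_rate"]
        scores[name] = round(score, 3)
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


tests = {
    "checkout_flow": {"touches": {"cart.js", "payment.js"}, "failure_rate": 0.2},
    "login_flow": {"touches": {"auth.js"}, "failure_rate": 0.5},
    "search_flow": {"touches": {"search.js", "cart.js"}, "failure_rate": 0.0},
}
ranked = maintenance_risk(tests, changed_files=["cart.js", "payment.js"])
for name, score in ranked:
    print(name, score)
```

A real system would learn the weighting from historical data instead of fixing it by hand, but the output is the same kind of thing: a prioritized list of tests to review before they break.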

3. Intelligent Test Data Generation

AI systems generate realistic test data based on production patterns and application requirements. Machine learning models analyze existing data structures, user behavior patterns, and business logic to create representative test datasets that actually make sense for your application.

The generated data maintains referential integrity, meaning relationships between data points stay valid, while providing comprehensive coverage of edge cases and boundary conditions that you might not think to test manually.
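
Here is a small sketch of what referential integrity plus boundary coverage means for generated data. It uses seeded random values instead of a learned model, and the users/orders schema and the boundary totals are made up for the example.

```python
import random


def generate_dataset(num_users=5, num_orders=12, seed=42):
    """Generate users and orders where every order points at a real user."""
    if num_users < 1:
        raise ValueError("at least one user is needed to own the orders")
    rng = random.Random(seed)  # seeded so test runs are reproducible
    users = [
        {"user_id": i, "email": f"user{i}@example.test"}
        for i in range(1, num_users + 1)
    ]
    boundary_totals = [0.00, 0.01, 9999.99]  # edge cases included on purpose
    orders = []
    for order_id in range(1, num_orders + 1):
        if order_id <= len(boundary_totals):
            total = boundary_totals[order_id - 1]
        else:
            total = round(rng.uniform(1, 500), 2)
        orders.append({
            "order_id": order_id,
            "user_id": rng.choice(users)["user_id"],  # always a valid foreign key
            "total": total,
        })
    return users, orders


users, orders = generate_dataset()
valid_ids = {u["user_id"] for u in users}
print(all(o["user_id"] in valid_ids for o in orders))
```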

4. Multi-Modal Testing Integration

Advanced platforms can combine functional testing, visual validation, performance monitoring, and accessibility checking all in a single test execution. AI orchestrates these different testing types and correlates the results to give you a comprehensive quality assessment.

Instead of running separate tests for each concern, you get unified reports showing functional failures alongside performance issues discovered during the same test run, making it much easier to understand the full picture of your application's health.

What Are the Common Implementation Challenges?

When you're implementing AI UI testing, you'll run into some practical challenges. Here's how to navigate them.

Technical Challenges

False Positive Management

AI systems sometimes flag legitimate application changes as test failures. For instance, visual comparison algorithms might detect acceptable design updates as regressions. Self-healing mechanisms might incorrectly update test logic based on temporary application states.

You'll need to configure appropriate tolerance levels and validation mechanisms to minimize false positives while keeping your testing effective.
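
One possible validation mechanism for self-healing is to persist a healed locator only after the same proposal has shown up in several consecutive runs, so a temporary application state can't rewrite your tests. The `HealGate` class below is a hypothetical sketch of that rule, not a feature of any specific tool.

```python
class HealGate:
    """Approve a healed locator only after repeated, identical proposals."""

    def __init__(self, required_confirmations=3):
        self.required = required_confirmations
        self._streaks = {}  # element name -> (proposed locator, consecutive count)

    def observe(self, element, proposed_locator):
        """Record one run's proposal; return True once it is stable enough to persist."""
        previous, count = self._streaks.get(element, (None, 0))
        count = count + 1 if proposed_locator == previous else 1
        self._streaks[element] = (proposed_locator, count)
        return count >= self.required

    def reset(self, element):
        """Call when the original locator works again, e.g. a transient state cleared."""
        self._streaks.pop(element, None)


gate = HealGate(required_confirmations=3)
decisions = [
    gate.observe("submit", "[data-testid=checkout-submit]"),
    gate.observe("submit", "[data-testid=checkout-submit]"),
    gate.observe("submit", "[data-testid=checkout-submit]"),
]
print(decisions)
```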

Model Training Requirements

Machine learning systems need sufficient training data to work accurately, and this can take time to build up. Visual recognition models, for example, need diverse screenshot samples across different browsers, devices, and application states.

Self-healing algorithms need to observe multiple UI change patterns before they can develop reliable adaptation strategies. The key is to plan for extended training periods and make sure you're providing comprehensive example data so the AI can reach optimal performance.

Organizational Challenges

Integration Complexity

Your existing testing infrastructure might need significant modifications to accommodate AI-powered tools. Legacy systems might lack the APIs or data formats required for AI integration, and team training requirements can delay implementation timelines.

The best approach is to budget adequate time and resources for integration planning and team skill development so you're not caught off guard by these challenges.

Performance Considerations

AI processing adds computational overhead to test execution. Image analysis for visual testing requires significant processing power and memory resources, and complex machine learning operations might slow overall test execution despite the automation benefits.

You'll need to balance AI capabilities with performance requirements. Sometimes it makes sense to use AI selectively rather than applying it everywhere, so you get its benefits where they matter without sacrificing speed.

What About Security and Privacy Considerations?

AI testing systems often need access to sensitive application data and user interfaces. Computer vision tools capture screenshots that might contain confidential information. Machine learning models process application behavior data that could reveal business logic or security vulnerabilities.

Make sure you implement appropriate data protection measures and ensure your AI testing complies with organizational security policies. Cloud-based AI testing platforms raise additional privacy concerns since application data might be processed on external systems.

When you're selecting AI testing tools, take a close look at data residency requirements, encryption standards, and access controls. Some teams prefer on-premises AI implementations despite potential scalability limitations, simply because they want to keep their data in-house and maintain tighter control over security.
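
One data protection measure you can apply regardless of tool is masking sensitive regions of a screenshot before it leaves your environment. The sketch below assumes the screenshot is a grid of pixel values and that you already know which rectangles hold confidential data; the `redact` helper and the region format are hypothetical.

```python
def redact(screenshot, regions, fill=0):
    """Return a copy of screenshot with each region overwritten by fill.

    Each region is (top, left, height, width). Regions that extend past the
    image edges are clipped.
    """
    redacted = [row[:] for row in screenshot]  # never modify the original
    for top, left, height, width in regions:
        for r in range(max(top, 0), min(top + height, len(redacted))):
            row = redacted[r]
            for c in range(max(left, 0), min(left + width, len(row))):
                row[c] = fill
    return redacted


shot = [[9] * 5 for _ in range(4)]
safe = redact(shot, [(1, 2, 2, 2)])  # hide a 2x2 block, e.g. an account number
for row in safe:
    print(row)
```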

How Can You Measure Your AI Testing Success?

To measure AI testing effectiveness, you need comprehensive metrics beyond traditional test execution counts. Key performance indicators include test maintenance time reduction, false positive rates, test coverage expansion, and overall testing cycle acceleration.

For self-healing automation, you'll want to keep an eye on how often the system successfully adapts to application changes without needing manual intervention. When it comes to visual testing, look at how accurate the comparisons are and what percentage of the flagged issues are actually meaningful regressions rather than false alarms.
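
These indicators reduce to a few simple ratios. The helper names and the sample figures below are assumptions for illustration; plug in the counts from your own runs and reviews.

```python
def healing_success_rate(heal_attempts, heal_successes):
    """Share of broken locators the system repaired without manual help."""
    return heal_successes / heal_attempts if heal_attempts else None


def visual_precision(review_outcomes):
    """Share of flagged visual diffs that a reviewer confirmed as real regressions.

    review_outcomes is a list of booleans, True when the flag was a real issue.
    """
    return sum(review_outcomes) / len(review_outcomes) if review_outcomes else None


def maintenance_reduction(before_hours, after_hours):
    """Relative drop in maintenance time compared with the baseline."""
    return (before_hours - after_hours) / before_hours


print(healing_success_rate(40, 34))
print(visual_precision([True, True, False, True]))
print(maintenance_reduction(40, 12))
```

Returning `None` when there is nothing to measure avoids reporting a misleading 0% or 100% for a week with no healing attempts or no flagged diffs.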

The real proof of AI implementation value shows up in your overall testing velocity: you should see faster feedback cycles and less time spent on manual maintenance work.

What Does the Future Hold for AI UI Testing?

What we're seeing now in AI testing is just the beginning. Natural language processing will enable more conversational test creation and modification. Advanced computer vision will provide semantic understanding of UI elements rather than just pixel-level analysis. Integrated AI testing platforms will orchestrate complex testing workflows automatically based on application changes and risk analysis.

Autonomous testing agents might eventually perform exploratory testing, generating new test scenarios based on application behavior analysis and user interaction patterns. These systems could identify untested application areas, suggest coverage improvements, and adapt testing strategies based on defect discovery patterns.

Ready to explore how AI can enhance your UI testing workflows? Book a demo with LambdaTest to see these capabilities in action and discover which AI testing approach best fits your team's requirements.
