Top 12 Mobile App Performance Metrics for Testing

Discover essential mobile app performance metrics for testing and learn how to track them effectively to boost app speed, stability, and overall quality.

Last Modified on: September 26, 2025


Mobile users expect fast, reliable, and seamless experiences across a wide range of devices and network conditions. Even minor performance issues, such as slow launch times, laggy interactions, or excessive resource consumption, can impact retention and user satisfaction. Tracking mobile app performance metrics during testing gives teams actionable insights to identify bottlenecks, optimize efficiency, and ensure consistent responsiveness.

Overview

Mobile app performance metrics in testing are measurable indicators used to evaluate how well a mobile application performs under varying load conditions. They help QA teams, developers, and product managers identify bottlenecks, improve user experience, and ensure the app meets quality standards before release.

Why Are Performance Metrics Important in Mobile App Testing?

Here’s why monitoring performance metrics can significantly enhance mobile app testing results:

- User Retention: Ensuring smooth, responsive app performance keeps users engaged longer. Testing identifies friction points, reduces drop-offs, and fosters positive experiences that drive loyalty and higher lifetime value.

- Issue Prioritization: Performance metrics highlight the most impactful bottlenecks. By focusing testing on these areas, teams can allocate resources strategically and resolve issues that affect critical workflows first.

- Experience Validation: Testing across devices, network conditions, and usage patterns ensures improvements translate into real-world benefits, confirming that optimizations meaningfully enhance user interactions and satisfaction.

- Competitive Advantage: High-performance apps differentiate in the market. Monitoring and testing key metrics enables teams to refine responsiveness, smoothness, and reliability, resulting in superior experiences compared to competitors.

- Preventive Maintenance: Continuous metric monitoring anticipates potential regressions. Integrating these insights into test cycles maintains stability, ensures consistent performance with updates, and reduces the risk of post-release failures.

What Are the Key Mobile App Performance Metrics to Track?

Here are some of the key mobile app performance metrics you can track when testing:

- Launch Time: Measures the time from app start until fully interactive. Testing identifies slow initialization routines, optimizes critical startup paths, and ensures fast, seamless user access.

- Frame Rate: Tracks animation smoothness and rendering consistency. Testing reveals stutters or dropped frames, ensures fluid UI interactions, and guides refinement of transitions for polished visual performance.

- CPU Usage: Evaluates processing demands during tasks and background operations. Monitoring prevents sluggish behavior, overheating, and inefficient processes that degrade responsiveness, multitasking, or overall app performance.

- Memory Consumption: Monitors allocation patterns and detects potential leaks. Testing ensures stability during extended sessions, prevents crashes, and optimizes memory usage across devices with varying RAM capacities.

- Network Latency: Measures API response and data request times. Testing ensures reliable connectivity, rapid content retrieval, and smooth app performance under fluctuating network conditions.

- Battery Usage: Evaluates energy impact of app features and background tasks. Testing prevents excessive drain, ensures prolonged device usability, and maintains a positive user experience efficiently.
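Launch time is noisy from run to run, so a single measurement says little. As a minimal sketch, assuming you have already collected cold-start durations in milliseconds from repeated runs (the sample values and function names below are hypothetical), you could summarize them with a median and a nearest-rank 90th percentile:

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_launch_times(samples_ms):
    """Condense repeated launch measurements into a few comparable numbers."""
    return {
        "runs": len(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "p90_ms": percentile(samples_ms, 90),
        "max_ms": max(samples_ms),
    }


# Hypothetical cold-start timings (ms) from ten runs on one device.
cold_starts_ms = [812, 790, 845, 1310, 801, 798, 830, 815, 2040, 808]
summary = summarize_launch_times(cold_starts_ms)
print(summary)
```

Reporting the median alongside a high percentile keeps occasional slow launches visible instead of letting an average hide them.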

What Are Mobile App Performance Metrics?

Mobile app performance metrics in testing quantify how your app behaves under real-world conditions, providing insight into responsiveness, stability, resource usage, and efficiency. Testers rely on these metrics to identify bottlenecks, measure improvements, and ensure that features work reliably across devices, OS versions, and network conditions, forming the backbone of performance validation.

These metrics include startup time, frame rate, CPU and memory usage, network latency, battery consumption, and error rates. Monitoring them during functional, stress, and real-world testing helps you prioritize optimizations, validate fixes, and maintain a consistent user experience. Accurate measurement allows for proactive improvements and supports a polished, responsive, and reliable mobile application.

To truly understand how these metrics behave under real user conditions, you need a structured approach that goes beyond isolated checks. This is where mobile performance testing becomes essential, since it brings all these measurements together under controlled scenarios and helps you see how the app holds up across different devices, networks, and usage patterns.

Why Are Mobile Performance Metrics Important?

Understanding performance metrics allows you to prioritize fixes and optimizations that have a real impact. Performance metrics help you validate improvements, compare devices, and measure the effect of updates over time, creating apps that feel polished, responsive, and reliable.

Key areas influenced by metrics include:

- User Retention: Better-performing apps keep users engaged. Testing ensures smooth, responsive experiences, reducing churn, increasing lifetime value, and encouraging positive reviews that amplify your app's reputation.

- Issue Prioritization: Metrics reveal the most critical bottlenecks. Leveraging these insights during testing focuses development efforts strategically, delivering maximum impact with minimal time or resources.

- Experience Validation: Performance data shows whether enhancements are meaningful for real users. Testing across devices and scenarios confirms that they translate into smoother, more enjoyable interactions in practice.

- Competitive Advantage: Optimized apps outperform competitors. Test metrics guide refinements in speed, responsiveness, and fluidity, delivering experiences that boost satisfaction and reinforce your app's market position.

- Preventive Maintenance: Continuous monitoring prevents regressions. Integrating metrics into test cycles helps maintain stability and performance during updates, ensuring long-term reliability even as new features are added.


What Are Key Mobile App Performance Metrics in Testing?

Monitoring performance metrics in testing gives actionable insight into your app's responsiveness, stability, efficiency, and overall user experience. Each metric guides decisions to optimize reliability and engagement.

Key metrics to monitor include:

- Launch Time: Measures duration from app start to readiness. Testing launch time ensures fast access, highlights slow initialization routines, and optimizes critical startup paths for better retention.

- Frame Rate: Tracks animation smoothness and rendering consistency. Testing ensures fluid interactions, identifies stutters or dropped frames, and guides UI optimization for a polished visual experience.

- CPU Usage: Evaluates processing demand during tasks. Monitoring prevents sluggish performance, device overheating, and inefficient processes that degrade multitasking or responsiveness.

- Memory Consumption: Monitors allocation and potential leaks. Testing ensures stability during prolonged sessions, prevents crashes, and optimizes resource usage across devices with varying RAM capacities.

- Network Latency: Measures response time of API calls and data requests. Testing ensures reliable connectivity, fast content retrieval, and smooth performance across varied network conditions.

- Battery Usage: Evaluates energy impact of the app. Testing ensures prolonged use does not drain devices excessively, maintaining positive user experience and efficient resource utilization.

- Error Rates / Crash Frequency: Tracks functional failures or crashes. Monitoring identifies unstable features, prioritizes fixes, and validates high reliability across devices and scenarios.

- App Responsiveness / Input Delay: Measures time between user action and system response. Testing ensures interactions feel instantaneous and guides optimization of UI code and background processes.

- Data Usage / Network Efficiency: Monitors app data consumption. Testing optimizes API calls, reduces network overhead, and ensures efficiency for users on limited or metered connections.

- Animation and Transition Smoothness: Assesses fluidity of UI animations. Testing reduces jank, refines transitions, and enhances perceived performance for visually engaging experiences.

- Load Handling / Stress Metrics: Measures behavior under high activity or heavy load. Stress testing ensures stability, validates scalability, and identifies breaking points for proactive optimization.

- Accessibility and Rendering Metrics: Assesses speed and accuracy of content display across devices and accessibility settings. Testing ensures consistent layout, usability, and experience for all users.
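To make the frame rate and smoothness metrics above concrete, here is a minimal sketch that summarizes per-frame render durations against a 60 fps budget. It assumes you already have frame durations in milliseconds from a profiler or device log; the sample values and the `frame_stats` name are hypothetical.

```python
def frame_stats(frame_times_ms, budget_ms=1000 / 60):
    """Summarize per-frame render durations against a frame budget."""
    if not frame_times_ms:
        raise ValueError("frame_times_ms must not be empty")
    total_ms = sum(frame_times_ms)
    # A frame that takes longer than the budget misses its display deadline.
    slow = [t for t in frame_times_ms if t > budget_ms]
    return {
        "avg_fps": 1000 * len(frame_times_ms) / total_ms,
        "slow_frames": len(slow),
        "slow_frame_pct": 100 * len(slow) / len(frame_times_ms),
        "worst_frame_ms": max(frame_times_ms),
    }


# Hypothetical frame durations (ms) captured during a scroll gesture.
frames_ms = [16, 16, 17, 16, 34, 16, 16, 50, 16, 16]
stats = frame_stats(frames_ms)
print(stats)
```

The share of slow frames is often more telling than average fps, because a handful of long frames is what users perceive as jank.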

When you track mobile app performance metrics, you're essentially looking at the results of your testing pipeline. But the accuracy, speed, and depth of those insights depend on how efficiently and consistently your tests run as your requirements scale.

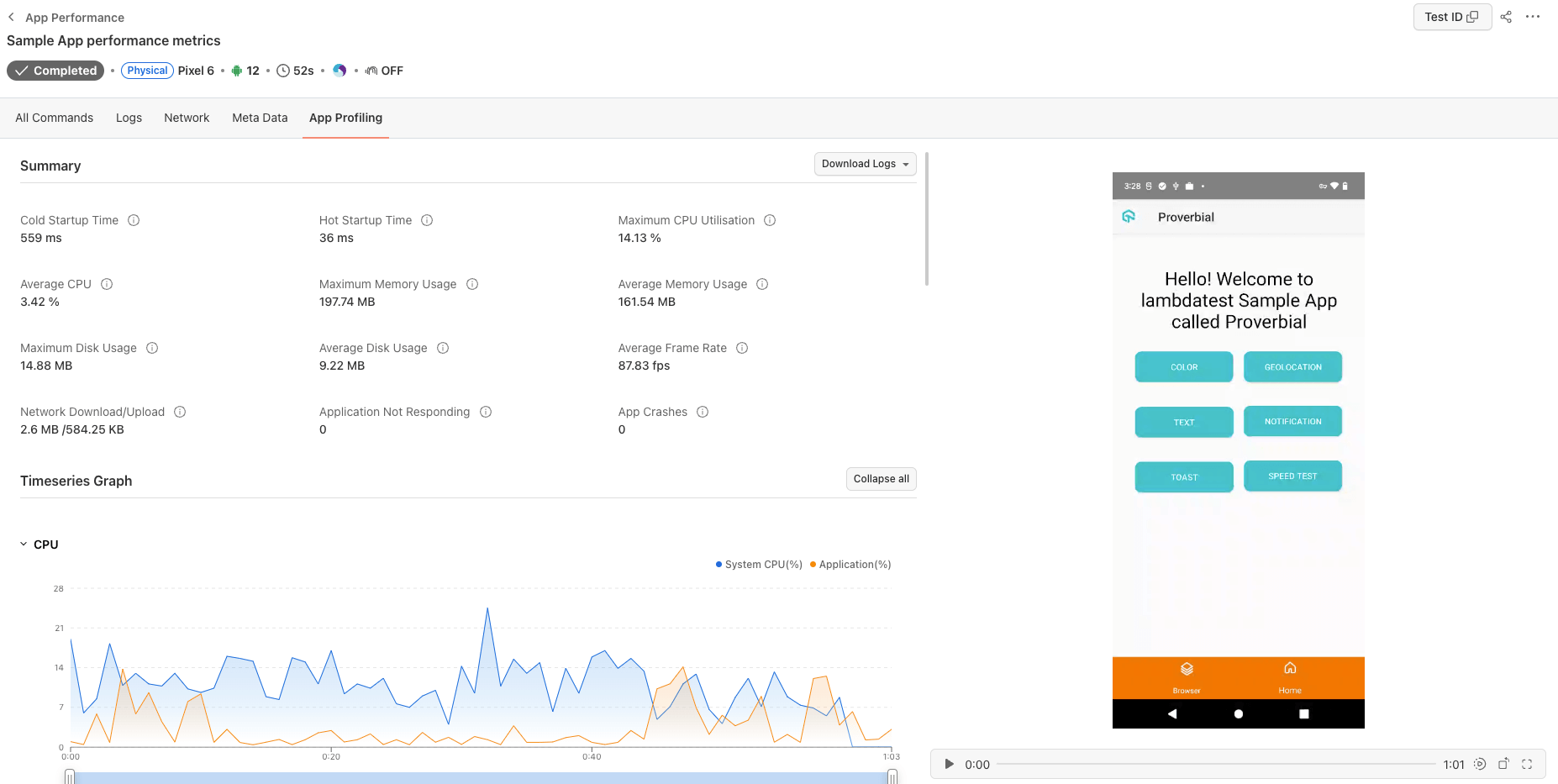

To help you scale your mobile app testing, platforms like LambdaTest offer an App Profiling feature that helps you detect and fix performance issues before release. It provides real-time insights into key metrics such as CPU usage and memory consumption on real Android and iOS devices.

Why Is Test Observability Critical for Mobile App Performance?

Test observability provides testers with a comprehensive, real-time view of how a mobile app behaves under diverse conditions. By collecting structured data like logs, traces, metrics, and telemetry, you can see not just whether a test passes or fails, but why it behaves that way.

This visibility spans application logic, infrastructure, and network interactions, allowing you to detect hidden bottlenecks, performance issues, or edge-case failures before they reach end users. For testers, this depth of insight is invaluable in designing precise, reliable, and proactive test strategies.

Key advantages:

- Quick Issue Identification: Observability allows tracing test execution across user interactions and system events. Testers can pinpoint the exact source of failures, reproduce issues reliably, and provide developers with actionable insights to fix problems faster.

- Proactive Detection of Risks: Monitoring trends such as rising error counts, slow responses, or resource spikes during tests helps identify potential failures before they appear in production, enabling proactive mitigation and more effective regression testing.

- Performance and Resource Optimization: Detailed metrics and traces reveal inefficient code paths or resource-heavy operations. Testers can refine test scenarios, validate performance optimizations, and ensure smoother, faster user experiences.

- Strategic Test Planning: Observability enables analysis of CPU, memory, and network trends under different scenarios. This supports smarter test coverage planning and better prioritization of critical paths under varying load conditions.

- Contextual Error Analysis: Provides contextual information for crashes and errors. Testers can prioritize fixes based on real-world impact, focusing on failures that affect most users rather than rare configurations.

- Network and Backend Insights: Distributed traces and network metrics help identify whether delays or failures originate from backend services, APIs, or client-side processing, enabling targeted remediation during testing.
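As an illustration of the network and backend point above, the following sketch attributes the latency of one user interaction to client, network, and backend layers. It assumes trace spans have been exported as simple records with a layer label and start and end times; the span format and names are hypothetical and not tied to any particular tracing tool.

```python
from collections import defaultdict


def latency_breakdown(spans):
    """Sum span durations per layer and return each layer's share of the total (%)."""
    totals = defaultdict(float)
    for span in spans:
        totals[span["layer"]] += span["end_ms"] - span["start_ms"]
    overall = sum(totals.values())
    if overall <= 0:
        raise ValueError("spans must cover a positive duration")
    return {layer: round(100 * ms / overall, 1) for layer, ms in totals.items()}


# Hypothetical, non-overlapping spans for one "load feed" interaction.
spans = [
    {"name": "build_request", "layer": "client", "start_ms": 0, "end_ms": 20},
    {"name": "network_transit", "layer": "network", "start_ms": 20, "end_ms": 140},
    {"name": "api_handler", "layer": "backend", "start_ms": 140, "end_ms": 700},
    {"name": "network_return", "layer": "network", "start_ms": 700, "end_ms": 780},
    {"name": "parse_and_render", "layer": "client", "start_ms": 780, "end_ms": 800},
]

shares = latency_breakdown(spans)
slowest_layer = max(shares, key=shares.get)
print(shares, slowest_layer)
```

With real traces, spans often overlap or nest, so a production version would need to account for that before summing durations.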

Testing mobile apps is largely about understanding how your app behaves under real-world conditions. Traditional reporting methods like spreadsheets, static dashboards, or fragmented logs often fail to capture the depth needed for actionable insights. Teams may spot failures, but they struggle to connect them to metrics that explain why the app feels slow, unstable, or unresponsive. This is where test observability platforms such as LambdaTest AI Test Insights help close that gap.

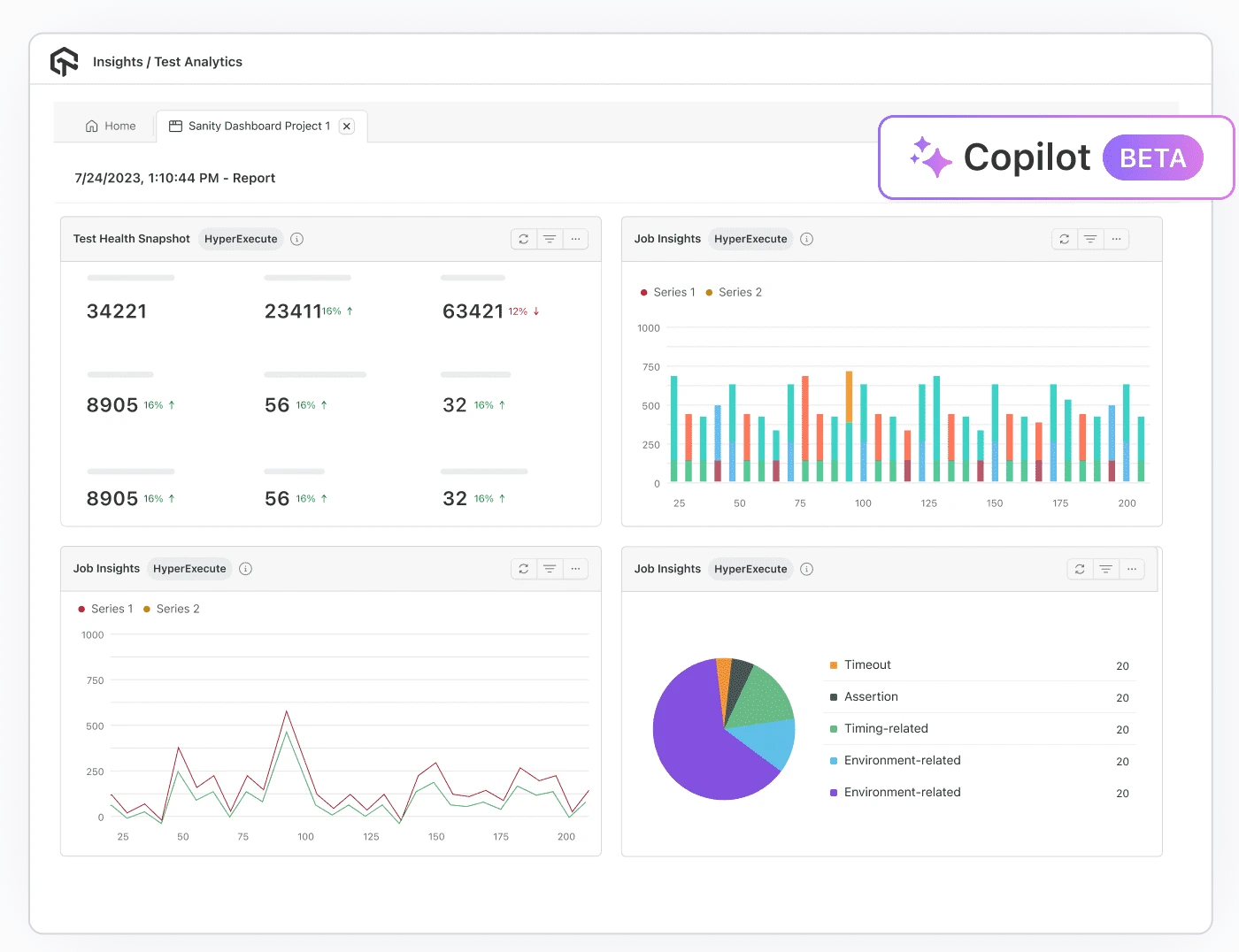

Optimize Mobile App Performance With LambdaTest AI Test Insights

LambdaTest offers AI-native Test Insights, which consolidates your real-time execution data into a single dashboard and turns raw test results into actionable intelligence.

It doesn't simply show pass/fail results; it helps you understand patterns, anomalies, flaky behavior, root causes, and historical trends across your test suites. The platform includes dashboards and widgets that let you track error and failure types, flaky tests, build comparisons, test case health, concurrency and resource use, and real-device performance. You can also customize these dashboards.

Key features:

- Performance Summary Report: Consolidates key metrics such as launch time, memory consumption, and CPU load. This provides an at-a-glance view of whether the app is performing within acceptable thresholds across devices.

- Error and Crash Insights: Clusters frequent failures and links them to performance data. Testers can quickly see whether crashes stem from memory leaks, high CPU usage, or network instability.

- Trend Analysis: Tracks long-term performance metrics like frame rate stability, latency variations, and resource utilization across builds. This enables QA teams to identify regressions early and measure improvements over time.

- Device and OS Report: Breaks down performance by operating system, device type, and screen resolution. This ensures the app is optimized for real-world usage patterns and prevents environment-specific slowdowns.

- Resource and Concurrency Insights: Surfaces how the app behaves under load such as heavy multitasking or parallel requests, helping testers spot bottlenecks that only appear under stress conditions.

- AI Recommendations: With test intelligence, the platform goes beyond raw numbers by providing context and suggesting next steps. For example, if frame drops increase under certain device/OS combinations, the dashboard can guide developers toward optimizing rendering or resource handling.

- Customizable Dashboards: Different stakeholders need different levels of visibility. Developers may want detailed logs tied to CPU spikes, while product managers may prefer high-level metrics like app responsiveness or release readiness indicators.
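The idea behind build-over-build trend analysis can be sketched independently of any dashboard. The example below is not LambdaTest's implementation or API; it is a hypothetical check that compares the newest build's value to the median of recent builds, assuming a metric where higher is worse and values are positive.

```python
import statistics


def check_latest_build(values, window=5, tolerance_pct=10.0):
    """Compare the newest value to the median of up to `window` preceding builds."""
    if len(values) < 2:
        raise ValueError("need at least one earlier build to compare against")
    *earlier, latest = values
    baseline = statistics.median(earlier[-window:])
    change_pct = 100 * (latest - baseline) / baseline
    return {
        "baseline": baseline,
        "latest": latest,
        "change_pct": round(change_pct, 1),
        # Flag only increases beyond the tolerance, since higher is assumed worse.
        "regressed": change_pct > tolerance_pct,
    }


# Hypothetical p90 API latency (ms) per build, oldest first.
p90_latency_ms = [210, 205, 215, 208, 212, 260]
result = check_latest_build(p90_latency_ms)
print(result)
```

Using a median of several earlier builds as the baseline makes the check less sensitive to one unusually fast or slow run.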

To get started, check out this LambdaTest AI Test Insights guide.

Best Practices for Optimizing Mobile App Performance

Optimizing mobile app performance is about building a responsive, stable, and efficient experience. These best practices provide actionable strategies to ensure apps perform reliably in real-world conditions.

- Minimize App Launch Time: Users judge apps immediately. Optimize startup routines, defer non-critical resources, and streamline splash screens to deliver faster access and stronger first impressions.

- Optimize Rendering and Frame Rate: Aim for a steady 60 fps, which leaves roughly 16.7 ms to render each frame. Profile animations carefully, reduce overdraw, eliminate unnecessary layers, and validate responsiveness across low-end, mid-tier, and flagship devices.

- Control CPU and Memory Usage: Prevent sluggish performance and crashes by profiling regularly, releasing unused objects promptly, and moving heavy computations away from the main thread where possible.

- Reduce Network Latency: Cache responses, batch requests, and paginate data. Plan for poor connectivity by using retries, fallbacks, and background sync to preserve functionality consistently.

- Manage Battery Consumption: Users quickly uninstall battery-draining apps. Schedule background tasks efficiently, limit GPS and sensor usage, and favor push notifications over frequent polling for sustainable performance.

- Optimize Data and Storage Usage: Prefer compact payloads, for example a binary format like Protobuf or JSON trimmed to the fields the app needs. Compress responses, and manage caches and logs carefully to prevent storage bloat across devices.

- Test Under Real-World Conditions: Simulate poor networks, low memory, and varied devices. Leverage a real device cloud to validate app responsiveness and stability in real-world conditions to build resilience users can trust daily.

- Leverage Observability and Metrics: Monitor key performance indicators like launch time, frame drops, and crashes. Use metrics proactively to prioritize fixes and validate that optimizations improve real experiences.

- Secure and Optimize Backend Dependencies: Backend delays degrade user experience. Monitor APIs, optimize infrastructure scalability, and leverage CDNs to deliver content faster while improving app reliability consistently.

- Continuously Monitor and Iterate: Treat performance optimization as ongoing. Set thresholds, integrate monitoring into CI/CD cycles, and use alerts to prevent regressions before reaching production environments.
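The last practice, setting thresholds and enforcing them in CI/CD, can be sketched as a small gate script. The budget values, metric names, and measured results below are hypothetical placeholders; real budgets depend on your app and target devices.

```python
# Hypothetical performance budgets; a value above its limit counts as a violation.
BUDGETS = {
    "cold_start_p90_ms": 1500,
    "slow_frame_pct": 5.0,
    "peak_memory_mb": 350,
    "crash_rate_pct": 0.5,
}


def find_violations(measured, budgets):
    """Return a message for every metric that is missing or exceeds its budget."""
    violations = []
    for name, limit in budgets.items():
        value = measured.get(name)
        if value is None:
            # Treat a missing measurement as a failure so gaps are not silently ignored.
            violations.append(f"{name}: no measurement recorded")
        elif value > limit:
            violations.append(f"{name}: {value} exceeds budget {limit}")
    return violations


def gate(measured):
    """Print each violation and return a process-style exit code (0 = pass)."""
    violations = find_violations(measured, BUDGETS)
    for line in violations:
        print(f"FAIL {line}")
    return 1 if violations else 0


# Hypothetical results from one test run (crash rate was not collected).
run = {"cold_start_p90_ms": 1310, "slow_frame_pct": 30.0, "peak_memory_mb": 290}
exit_code = gate(run)
print("exit code:", exit_code)
```

In a pipeline, a wrapper would typically pass the returned code to `sys.exit` so that any violation fails the build.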

Conclusion

Mobile performance metrics are the evidence of how well your app holds up against the real-world conditions your users face every day. Metrics like load time, responsiveness, battery consumption, and network efficiency reveal whether the experience feels smooth and reliable or frustrating and disposable. Teams that consistently measure and act on these signals position themselves to catch issues early, reduce costly post-release fixes, and build a reputation for quality that users trust.

What separates successful teams from the rest is not simply collecting metrics, but interpreting them in context and turning them into actionable improvements. It is easy to fall into the trap of tracking too many indicators without clarity on which ones actually impact the user experience. The real value comes when metrics are tied directly to user expectations and business goals, creating a feedback loop where every release is smarter than the one before.
