
Monkey Testing: A Comprehensive Guide With Best Practices

Monkey testing helps assess app stability by using random inputs and unpredictable actions to uncover hidden crashes, performance issues, and vulnerabilities.

Last Modified on: September 28, 2025


In software development, not every issue can be caught through structured or planned testing. Applications often face unexpected user actions, unusual inputs, or stress conditions that traditional test cases don’t cover.

This is where Monkey testing comes in. It evaluates how stable and reliable an application is under unpredictable usage, helping expose hidden flaws, crashes, or performance bottlenecks before users encounter them.

Overview

What Is Monkey Testing?

Monkey testing is a software testing method that checks an application’s stability by using random inputs and unpredictable user actions. It helps uncover hidden crashes, performance issues, and rare defects, especially in mobile apps, web apps, games, and IoT systems.

Key Features of Monkey Testing

- Random Inputs: Generates clicks, keystrokes, and gestures

- No App Knowledge Needed: Works without knowing internal logic

- Exploratory: Tests the app without a set plan

- Stress Testing: Checks stability under heavy load

- Automation Friendly: Can run via tools and scripts

- Security Check: Finds hidden vulnerabilities

- Hard to Reproduce Bugs: Issues may be tricky to replicate

- Multi-Domain Use: Effective for apps, games, IoT, and OS

Steps to Perform Monkey Testing

- Pick a Tool: Choose Android, web, or cloud-based tools for random testing.

- Set Up Environment: Install the app, enable logs, and prepare devices.

- Define Parameters: Set duration, input types, event frequency, and focus areas.

- Run Test: Inject random actions and watch app behavior.

- Log & Report: Capture errors, crashes, and performance data.

- Analyze Issues: Identify root causes and categorize defects.

- Iterate: Adjust parameters and retest for deeper coverage.

Pro Tip

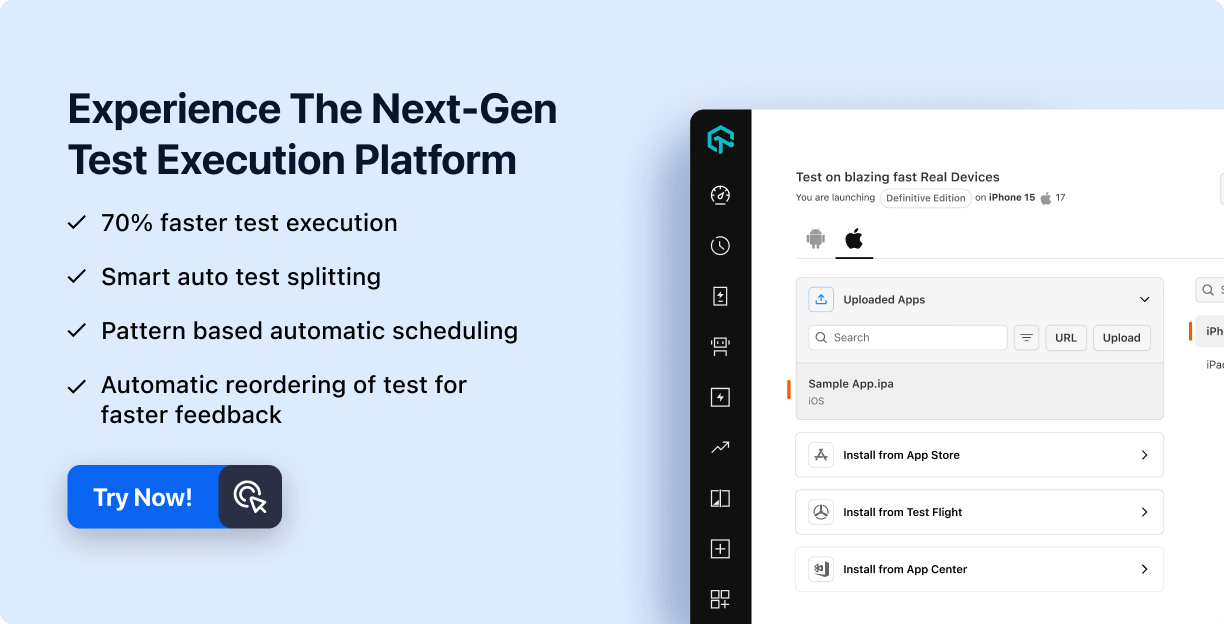

Scale tests using cloud platforms like LambdaTest to cover multiple devices, browsers, and OS combinations efficiently.

Infinite Monkey Theorem

The Infinite Monkey Theorem is a mathematical principle stating that a monkey typing randomly on a typewriter for an infinite amount of time would almost surely produce any given text, including an entire work of literature such as Shakespeare's Hamlet.

This illustrates a basic law of probability: given enough independent trials, any event with a nonzero probability becomes almost certain to occur. While largely theoretical, the theorem has been widely used as a metaphor across disciplines, including computer science and software testing.
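A short calculation makes "given enough trials" concrete. Assume, for simplicity, a typewriter with $k$ equally likely keys, independent keystrokes, and a random stream cut into consecutive, non-overlapping blocks of $n$ keystrokes, each compared with a target text of length $n$. One block matches with probability $p$, and the chance that none of the first $m$ blocks matches falls toward zero:

$$
p = k^{-n}, \qquad
\Pr[\text{no match in the first } m \text{ blocks}] = \left(1 - k^{-n}\right)^{m} \longrightarrow 0 \quad \text{as } m \to \infty
$$

Since $0 < 1 - k^{-n} < 1$, the probability of never producing the target shrinks toward zero as $m$ grows. The numbers also show why the theorem is only a metaphor for testing: with $k = 26$ and the six-letter word "banana", $p = 26^{-6} \approx 3.2 \times 10^{-9}$, so about $26^{6} \approx 3.1 \times 10^{8}$ blocks are needed on average before the first match.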

In software testing, this theorem serves as a metaphor for Monkey testing, where random inputs are injected into an application to evaluate its stability and performance.

Just as random typing could eventually produce meaningful text, unpredictable test inputs can uncover hidden bugs, crashes, or vulnerabilities that structured test cases might miss.

By simulating erratic user behavior, Monkey testing helps detect issues like memory leaks, unexpected crashes, and performance bottlenecks, making it an effective approach for stress testing software.

Unlike conventional testing, which relies on predefined test cases, Monkey testing does not require prior knowledge of the application’s inner workings.

This makes it particularly useful for uncovering edge cases and defects in complex systems where exhaustive testing isn’t feasible. Even though the process is random, it significantly contributes to software resilience by revealing hidden vulnerabilities and strengthening overall system reliability.

With this principle in mind, we can look at Monkey testing itself.

What Is Monkey Testing?

Monkey testing is a software testing technique where test cases are executed randomly without predefined input or logic. The primary goal is to check the application's stability by providing unexpected or arbitrary inputs, mimicking unpredictable user behavior. This technique helps uncover crashes, performance issues, and defects that structured test cases might overlook.

It is especially effective in stress testing scenarios because it mimics real-world situations where users can use the software in unexpected ways. It doesn't adhere to a test plan, so it's a great method for discovering system vulnerabilities that other testing may not catch.

Monkey testing is widely applied to mobile and web applications to check that they can withstand unexpected inputs without crashing.

There are two common variations of Monkey testing: Dumb Monkey testing, where inputs are completely random with no knowledge of the application, and Smart Monkey testing, where random actions are generated with some awareness of the system’s workflows and input constraints.

Smart monkeys are more effective for meaningful defect detection, while dumb monkeys are better for stress testing.


Features of Monkey Testing

Monkey testing is a unique and unstructured software testing technique designed to evaluate an application’s robustness against unpredictable user interactions.

Here are its key features:

- Random Input Generation: Generates random keystrokes, clicks, or gestures without predefined test cases. This helps uncover unexpected crashes, unhandled exceptions, and performance bottlenecks.

- No Prior Knowledge Required: Testers don’t need to know the internal functionality of the application, so the test behaves much like real users who interact in unpredictable ways.

- Unstructured and Exploratory: Doesn’t follow a set plan. Focuses on exploring the system’s response to random actions, which is effective for finding hidden bugs and vulnerabilities.

- Effective for Stress Testing: By sending large volumes of random inputs in quick succession, Monkey testing checks application stability under heavy input load and unusual scenarios. Commonly used for mobile and web apps.

- Automated Execution with Tools: Though it can be manual, Monkey testing is often automated using tools that simulate random user actions at scale.

- Helps Identify Security Vulnerabilities: Unexpected or malformed inputs can expose flaws such as buffer overflows, weak input validation, or other security gaps.

- Challenges in Bug Reproduction: Because inputs are random, reproducing issues can be difficult. Testers usually rely on logs, recorded random seeds, and detailed analysis of system behavior to trace problems.

- Applicable Across Multiple Domains: Beyond mobile and web apps, Monkey testing is also applied in games, operating systems, and IoT devices where unpredictable interactions matter.
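Two of the features above, random input generation and the difficulty of reproducing bugs, both come down to how the random events are produced. The Python sketch below is a minimal, hypothetical dumb monkey: it generates random taps, long presses, and text entries at arbitrary screen coordinates with no knowledge of the app. The event format and the 1080×1920 screen size are illustrative assumptions, not the format of any real tool. Because each run draws from a private `random.Random(seed)`, logging the seed is enough to replay the exact event sequence that triggered a crash.

```python
import random
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class UiEvent:
    kind: str        # "tap", "long_press", or "text" (illustrative event types)
    x: int           # screen coordinates in pixels
    y: int
    text: str = ""   # only used by "text" events

def dumb_monkey(seed: int, count: int, width: int = 1080, height: int = 1920) -> List[UiEvent]:
    """Generate `count` random UI events with no knowledge of the app under test."""
    rng = random.Random(seed)  # private generator: same seed, same event sequence
    events = []
    for _ in range(count):
        kind = rng.choice(["tap", "long_press", "text"])
        x, y = rng.randrange(width), rng.randrange(height)
        text = ""
        if kind == "text":
            # Random keystrokes, including characters an input field may not expect
            text = "".join(rng.choice("abcXYZ019 !@#%'\"<>") for _ in range(rng.randint(1, 12)))
        events.append(UiEvent(kind, x, y, text))
    return events

# Usage: log the seed with every run; replaying it reproduces the same events.
first_run = dumb_monkey(seed=42, count=500)
replay = dumb_monkey(seed=42, count=500)
print(first_run == replay)  # True: identical sequences, so a crash can be replayed
```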

Types of Monkey Testing

Monkey testing is one approach among the many types of software testing, each designed to evaluate applications differently based on objectives and methods.

Within Monkey testing itself, there are three primary approaches:

- Dumb Monkey testing: Testers provide random inputs without any knowledge of the application's functionality. The objective is to observe how the system handles unexpected or arbitrary actions, potentially uncovering crashes or major bugs. This method is straightforward but may not effectively identify complex issues due to its lack of direction.

- Smart Monkey testing: Testers conducting Smart Monkey testing possess a basic understanding of the application's functionality. They produce inputs that are more likely to be valid and useful, mimicking normal user behavior. This focused strategy improves the chances of finding defects in typical usage patterns, making the testing process more effective.

- Brilliant Monkey testing: This advanced form involves testers with in-depth knowledge of the application and its domain. They create sophisticated and targeted test cases to assess certain functionalities and edge conditions. Brilliant Monkey testing aims to find subtle defects that other types of tests could overlook, providing a more thorough analysis of the software.
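The practical difference between a dumb and a smart monkey is whether the next action is chosen with any awareness of what the app currently allows. In the hypothetical sketch below, a small shopping app is modeled as a dictionary of screens, the actions valid on each, and the screen each action leads to; a real smart monkey would derive such a model from the UI hierarchy or a workflow specification. The dumb strategy picks from every action name in the app, so many of its actions do nothing on the current screen, while the smart strategy picks only valid actions and spends its whole budget moving through real workflows.

```python
import random
from typing import Callable, Set, Tuple

# Hypothetical app model: screen -> {action: next screen}.
APP_MODEL = {
    "login":   {"enter_credentials": "login", "submit": "home"},
    "home":    {"search": "results", "open_cart": "cart", "logout": "login"},
    "results": {"select_item": "item", "back": "home"},
    "item":    {"add_to_cart": "cart", "back": "results"},
    "cart":    {"checkout": "home", "back": "home"},
}
ALL_ACTIONS = sorted({action for actions in APP_MODEL.values() for action in actions})

def dumb_choice(rng: random.Random, screen: str) -> str:
    return rng.choice(ALL_ACTIONS)               # ignores the current screen

def smart_choice(rng: random.Random, screen: str) -> str:
    return rng.choice(list(APP_MODEL[screen]))   # only actions valid on this screen

def explore(choose: Callable[[random.Random, str], str], seed: int,
            steps: int = 1000) -> Tuple[Set[str], int]:
    """Run one monkey session; return the screens visited and the number of wasted actions."""
    rng = random.Random(seed)
    screen, visited, wasted = "login", {"login"}, 0
    for _ in range(steps):
        action = choose(rng, screen)
        if action in APP_MODEL[screen]:
            screen = APP_MODEL[screen][action]
            visited.add(screen)
        else:
            wasted += 1  # the action has no effect on this screen
    return visited, wasted

dumb_visited, dumb_wasted = explore(dumb_choice, seed=1)
smart_visited, smart_wasted = explore(smart_choice, seed=1)
print(smart_wasted)  # 0: every smart action is valid where it is taken
```

The dumb strategy is still useful for stress, since its invalid actions are exactly the kind of input a real app must ignore gracefully.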

Each of the Monkey testing approaches has unique benefits and is selected depending on the goals of testing and on the complexity of the application. All these methods can add strength and reliability to software applications.

Where to Use Monkey Testing?

Monkey testing supplies random inputs to an application to assess its stability and uncover unexpected bugs. It works best where user input may be random or where writing exhaustive test cases is not feasible.

Some of the areas where Monkey testing proves useful are:

- Mobile Application Testing: In mobile app testing, users engage with apps in unpredictable and varied ways. Monkey testing mimics random taps, swipes, and gestures to identify bugs related to user interaction, memory management, and overall stability across different phones and operating systems.

- Web Application Testing: In web application testing, applications face a broad spectrum of user actions. Monkey testing can mimic random clicks, form submissions, and navigation patterns to detect issues such as broken links, unhandled exceptions, or performance bottlenecks, helping ensure a robust user experience.

- Game Testing: Games involve numerous possible user interactions. In game testing, Monkey testing generates random inputs to identify glitches and unexpected behavior, checking that the game handles unpredictable actions without crashing or malfunctioning.

- Internet of Things (IoT) Testing: In IoT testing, devices interact with multiple sensors and inputs, making them prone to unexpected events. Monkey testing simulates random sensor inputs and events to evaluate system stability and reliability under unusual conditions.

- Security Testing: In security testing, Monkey testing helps uncover vulnerabilities by feeding random inputs to applications. This can expose weak input handling that may point to issues such as SQL injection, cross-site scripting, or improper data handling, enabling teams to address security flaws before they are exploited.

- Usability Testing: In usability testing, Monkey testing evaluates the user interface by simulating random user actions. This helps identify issues with navigation, responsiveness, and overall user experience, checking that the application handles unexpected interactions smoothly.
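The web and security bullets above share one mechanism: feed a component input it was never designed for and watch for unhandled failures. The sketch below applies that idea to a single input handler. `parse_quantity` is a hypothetical form-field parser with a deliberate bug (it reads the first character without checking for empty input), and `random_input_monkey` is an illustrative helper that throws random short strings at it and records every input that raises an exception instead of being rejected cleanly. Real security testing relies on dedicated fuzzers and scanners; this only shows the mechanism.

```python
import random
import string
from typing import Callable, List, Optional, Tuple

def parse_quantity(text: str) -> Optional[int]:
    """Hypothetical form-field handler; returns None for rejected input."""
    if text[0] == "-":      # deliberate bug: IndexError when text is empty
        return None         # negative quantities are rejected
    if text.isdigit():
        return int(text)
    return None             # any other non-numeric input is rejected

def random_input_monkey(target: Callable[[str], object], trials: int = 300,
                        seed: int = 7, max_len: int = 5) -> List[Tuple[str, str]]:
    """Call `target` with random strings; collect (input, exception name) for every crash."""
    rng = random.Random(seed)
    alphabet = string.ascii_letters + string.digits + string.punctuation + " "
    failures = []
    for _ in range(trials):
        text = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, max_len)))
        try:
            target(text)
        except Exception as exc:  # an unhandled exception is a finding, not a test error
            failures.append((text, type(exc).__name__))
    return failures

findings = random_input_monkey(parse_quantity)
```

With roughly one generated input in six being empty, the run reports a batch of ("", "IndexError") findings that point straight at the missing length check.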

Integrating Monkey testing in these areas increases the stability and reliability of software programs by subjecting them to random situations that regular testing processes may not be able to simulate.

Some of the Best Monkey Testing Tools

Several software testing tools facilitate this approach across different platforms, helping testers evaluate application stability and uncover unforeseen bugs.

Some of them are mentioned below:

- MonkeyRunner: A tool in the Android SDK that automates Android app testing by simulating taps, swipes, and text entries using Python scripts. It allows testing on multiple devices without modifying the app’s code and can capture screenshots during execution.

- Monkey Tool (UI/Application Exerciser Monkey): A built-in Android tool, launched from the command line with adb shell monkey, that generates pseudo-random events like touches, keystrokes, and device rotations. It is especially effective for stress testing to uncover crashes, slowdowns, and memory leaks that structured testing might miss.

- Appium: An open-source automation framework for Android and iOS. While not a dedicated Monkey testing tool, Appium can be scripted to send random gestures such as taps, scrolls, and text inputs. Its cross-platform support and ability to run tests on real devices and emulators make it a versatile option.

- MonkeyTalk: An older open-source tool for automating native and hybrid apps on iOS and Android. It supported recording, playback, and simple scripting for both functional and stress testing. Although useful historically, MonkeyTalk is no longer actively maintained and has largely been replaced by modern tools.

- MonkeyTestJS: A JavaScript-based library for web testing. It simulates random user actions like mouse clicks, keyboard inputs, and form submissions to identify UI bugs and functional issues, particularly under stress conditions.

How to Perform Monkey Testing?

Monkey testing involves injecting random inputs into an application to assess its stability, uncover hidden defects, and identify how it handles unexpected user interactions.

Follow these steps to execute it effectively:

- Choose the Right Testing Tool: Selecting the right tool is critical. For Android apps, use MonkeyRunner or UI/Application Exerciser Monkey to create random clicks, gestures, and key presses. For web apps, Selenium or WebDriverIO can simulate unexpected actions. For automation testing across multiple devices, cloud platforms like LambdaTest provide scalable environments with access to real devices.

- Prepare the Test Environment: Install the application on the target device, emulator, or cloud platform. Configure debugging tools and enable logging to track unexpected behaviors. If using a cloud service, select multiple device configurations to simulate real-world conditions and detect device-specific crashes or performance issues early.

- Define Testing Parameters: Establish control over randomness by setting key parameters:

- Test Duration: How long the random events should run.

- Types of Interactions: Taps, swipes, multi-touch gestures, or random text input.

- Event Frequency: Adjust the rate of interactions to avoid overwhelming the system.

- Seed Values: Fix or record the random seed so a run can be replayed with the same sequence of events.

- Target Areas: Limit testing to certain modules if needed.

- Execute the Monkey Test: Use the Monkey testing tool to generate random inputs and simulate user interactions. Monitor system performance, responsiveness, and stability. Note crashes, freezes, or unusual behaviors. Repeat tests under different conditions to uncover more weak points.

- Capture Logs and Reports: Collect logs, error messages, screenshots, and performance data. Logs are critical for debugging, providing insights into the causes of crashes or slowdowns. Ensure integrated error reporting gathers sufficient detail.

- Analyze Issues and Debug: Examine the logs for failure patterns. Identify if crashes are due to memory leaks, uncaught exceptions, or UI rendering errors. Classify defects by severity and work with developers to replicate and fix critical issues before re-testing.

- Refine Test Strategy and Repeat: Adjust testing parameters based on results and rerun tests. Changing event types or input frequency can explore additional edge cases. Repeating tests ensures fixes work and evaluates application resilience against unpredictable user behavior.

Defining the testing parameters carefully helps balance randomness against meaningful test coverage.
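For Android apps, the parameters above map fairly directly onto options of the UI/Application Exerciser Monkey, which is started with adb shell monkey. The helper below is a sketch that builds that command from a target package (target area), a seed, a throttle in milliseconds (event frequency), an optional touch-event percentage (types of interaction), and an event count derived from a desired duration. The package name com.example.app is a placeholder, and `events_for_duration` is a hypothetical approximation: the throttle is a delay between events, so real runs take somewhat longer than computed.

```python
import shlex
from typing import List, Optional

def events_for_duration(duration_s: int, throttle_ms: int) -> int:
    """Rough event count for a target duration, ignoring time spent executing events."""
    return duration_s * 1000 // throttle_ms

def build_monkey_command(package: str, event_count: int, seed: int,
                         throttle_ms: int = 300, pct_touch: Optional[int] = None,
                         verbose: bool = True) -> List[str]:
    """Build an `adb shell monkey` argument list from the test parameters."""
    cmd = [
        "adb", "shell", "monkey",
        "-p", package,                   # restrict events to this package
        "-s", str(seed),                 # fixed seed so the run can be replayed
        "--throttle", str(throttle_ms),  # delay between events, in milliseconds
    ]
    if pct_touch is not None:
        cmd += ["--pct-touch", str(pct_touch)]  # share of touch events, in percent
    if verbose:
        cmd.append("-v")
    cmd.append(str(event_count))         # the event count comes last
    return cmd

cmd = build_monkey_command("com.example.app", events_for_duration(600, 300),
                           seed=42, pct_touch=60)
print(shlex.join(cmd))
# adb shell monkey -p com.example.app -s 42 --throttle 300 --pct-touch 60 -v 2000
```

On a machine with a connected device, passing the list to subprocess.run would start the run; keeping the seed in the test report is what makes a crash found during execution replayable.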

For effective Monkey testing, consider ways to scale across multiple devices and environments. Cloud testing platforms can simulate diverse real-world conditions, run tests in parallel, and capture detailed logs and performance data, reducing the need for a full in-house device lab.

One such platform is LambdaTest, which allows teams to run manual and automated web and mobile app testing, including Monkey testing scripts that generate random input scenarios via tools like Appium, Selenium, or WebDriverIO, across 3000+ real browsers and OS combinations.

While it's not a dedicated Monkey testing platform, it enables scalable execution, parallel testing, and detailed reporting, helping teams uncover hidden defects and ensure application reliability without maintaining an extensive on-premise device lab.
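Whatever platform runs the sessions, two habits make parallel Monkey runs useful: give each session its own seed, and keep that seed next to the result. The sketch below shows the pattern locally with Python's concurrent.futures. `run_session` is a hypothetical stand-in that simulates crashes with a random draw; in a real setup it would open a remote driver session for the given browser and platform and drive it with random actions. The browser and platform names are illustrative and are not LambdaTest capability values.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, List

BROWSERS = ["chrome", "firefox", "edge"]     # illustrative names only
PLATFORMS = ["windows-11", "macos-14"]

def run_session(browser: str, platform: str, seed: int, events: int = 2000) -> Dict:
    """Hypothetical stand-in for one remote monkey session."""
    rng = random.Random(seed)  # per-session generator, so sessions never share state
    # Simulation only: each random event "crashes" the app with probability 0.1%.
    crashes = sum(1 for _ in range(events) if rng.random() < 0.001)
    return {"browser": browser, "platform": platform, "seed": seed, "crashes": crashes}

def run_matrix(base_seed: int = 100, workers: int = 4) -> List[Dict]:
    """Run every browser/platform pair in parallel, each with a distinct, recorded seed."""
    jobs = [(browser, platform, base_seed + i)
            for i, (browser, platform) in enumerate(product(BROWSERS, PLATFORMS))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in job order, regardless of completion order
        return list(pool.map(lambda job: run_session(*job), jobs))

for result in run_matrix():
    if result["crashes"]:
        print(f"{result['browser']} on {result['platform']}: rerun with seed {result['seed']}")
```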

Monkey Testing vs Gorilla Testing vs Ad-Hoc Testing

Monkey testing, Gorilla Testing, and Ad-Hoc Testing each serve distinct purposes. Monkey testing uses random inputs to uncover unexpected crashes and defects, Gorilla Testing repeatedly tests a specific module to ensure its stability, and Ad-Hoc Testing relies on tester intuition to find hidden issues.

Key differences between these testing approaches are summarized in the table below:

| Aspect | Monkey testing | Gorilla Testing | Ad-Hoc Testing |
|---|---|---|---|
| Definition | A type of random testing where automated tools or testers generate random inputs to check for crashes or stability issues. | A repetitive testing approach where the same module is tested multiple times with different test cases to ensure robustness. | An unscripted and exploratory approach where testers use domain knowledge, intuition, and experience to identify defects without predefined test cases. |
| Testing Approach | Unstructured and exploratory, with no predefined test cases. | Highly structured, focusing on a specific module with repeated testing. | Unstructured but intentional, testers target potential weak points based on an understanding of the software. |
| Objective | To identify unexpected crashes, bugs, or performance issues that structured tests may miss. | To ensure that a specific functionality or module remains stable after repeated execution of the same test case. | To find hidden defects by exploring the system beyond predefined test cases using tester insight. |
| Test Coverage | Broad but unpredictable, covering various parts of the application randomly. | Narrow but deep, focusing on one module or functionality with exhaustive testing. | Broad and flexible; coverage depends on the tester's intuition and experience rather than randomness or repetition. |
| Execution | Usually performed using automated tools that generate random events. | Conducted manually or through automated scripts with predefined test cases. | Performed manually based on the tester's discretion; no formal scripts are required. |
| Use Case | Suitable for stress testing and finding unexpected defects in the overall system. | Best for validating the stability of critical features by repeatedly executing test cases. | Effective for exploring untested areas or uncovering subtle issues that structured tests may overlook. |
| Defect Identification | Finds crashes, UI issues, and performance slowdowns through random inputs. | Detects deep-seated bugs in a specific module by testing it repeatedly. | Identifies defects that require insight or experience to notice, including logic, usability, or workflow issues. |
| Testers Involved | It can be performed by non-expert testers or automated tools with no prior knowledge of the application. | Requires experienced testers or automated scripts that focus on a specific feature. | Requires skilled testers with domain knowledge and understanding of the application. |
| Example | A testing tool randomly tapping, swiping, and entering text in a mobile app to check for unexpected failures. | Running the same login test case 100 times to ensure it always functions correctly. | Tester navigating an e-commerce app intuitively, trying uncommon flows to find hidden bugs. |
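The Gorilla Testing example in the table, running the same login test 100 times, is easy to sketch, and it also shows the kind of defect that repetition finds and a single pass misses. `SessionStore` below is a hypothetical login module with a deliberate bug: it never releases sessions, so it only fails once a pool of 50 is exhausted. The illustrative `gorilla` helper simply repeats one fixed test case and records the runs that fail; a monkey test would instead vary the inputs on every run.

```python
from typing import Callable, List, Tuple

class SessionStore:
    """Hypothetical login module with a deliberate bug: sessions are never released."""
    MAX_SESSIONS = 50

    def __init__(self) -> None:
        self.active = 0

    def login(self, user: str, password: str) -> bool:
        if self.active >= self.MAX_SESSIONS:
            raise RuntimeError("session pool exhausted")
        self.active += 1            # bug: no matching release when the session ends
        return password == "correct-horse"

def gorilla(test: Callable[[], bool], runs: int = 100) -> List[Tuple[int, str]]:
    """Repeat the same test case `runs` times; return (run index, reason) for each failure."""
    failures = []
    for run in range(runs):
        try:
            if not test():
                failures.append((run, "returned False"))
        except Exception as exc:
            failures.append((run, type(exc).__name__))
    return failures

store = SessionStore()
failures = gorilla(lambda: store.login("alice", "correct-horse"))
print(failures[0], len(failures))  # (50, 'RuntimeError') 50
```

The first 50 repetitions pass, so a single run of the login test would never reveal the leak.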

Best Practices for Monkey Testing

Monkey testing is a valuable technique for uncovering unexpected bugs and ensuring application stability under unpredictable user behavior. To maximize its effectiveness, consider the following best practices:

- Monitor and Capture Logs: During testing, continuously monitor the application for any anomalies. Capture logs, screenshots, and error messages to document unexpected behaviors. These logs are crucial for identifying the root causes of issues and for reproducing bugs.

- Combine with Other Testing Methods: While Monkey testing is effective in uncovering hidden issues, it should complement other testing methods. Integrate it with structured testing approaches like functional, regression, and performance testing to ensure comprehensive test coverage.

- Automate Where Possible: Automating Monkey testing can save time and resources, especially for repetitive tasks. Use automation frameworks and tools to simulate random user inputs across different devices and environments. Automation also facilitates integration into continuous integration/continuous deployment (CI/CD) pipelines.

- Analyze and Prioritize Findings: After completing the tests, analyze the captured data to identify patterns and prioritize issues based on their severity and impact. Not all discovered bugs may be critical; focus on those that affect user experience, security, or system stability.

- Iterate and Refine: Monkey testing is an iterative process. Based on the findings, refine your testing parameters and repeat the tests to explore different scenarios. Continuous refinement helps in uncovering a broader range of potential issues.

- Educate and Train Testers: Ensure that testers understand the purpose and methodology of Monkey testing. Provide training on using the selected tools, interpreting results, and reporting issues effectively. Well-informed testers can conduct more efficient and insightful tests.

- Document and Share Results: Maintain detailed documentation of the testing process, including objectives, tools used, parameters set, and issues discovered. Sharing these results with the development team fosters collaboration and aids in addressing identified problems promptly.

By following these best practices, you can make Monkey testing more effective and build more robust and reliable applications.
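The logging and analysis practices above, capturing logs and then analyzing and prioritizing findings, can be partly automated. The sketch below groups crash and ANR ("application not responding") reports from a monkey run by type and orders them by frequency as a first cut at prioritization. The sample lines imitate the "// CRASH:" and "// Short Msg:" style of the Android monkey's output; treat that format as an assumption and adjust the patterns to whatever your tool actually prints. The `triage` function and the sample log are illustrative.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

CRASH_RE = re.compile(r"^// CRASH: (\S+)")
SHORT_MSG_RE = re.compile(r"^// Short Msg: (\S+)")
ANR_RE = re.compile(r"^// NOT RESPONDING: (\S+)")

def triage(log_lines: Iterable[str]) -> List[Tuple[Tuple[str, str], int]]:
    """Count crashes by exception type and ANRs by package, most frequent first."""
    findings: Counter = Counter()
    in_crash = False
    for raw in log_lines:
        line = raw.strip()
        if CRASH_RE.match(line):
            in_crash = True                  # the exception name follows on a later line
        elif in_crash and (msg := SHORT_MSG_RE.match(line)):
            findings[("crash", msg.group(1))] += 1
            in_crash = False
        elif (anr := ANR_RE.match(line)):
            findings[("anr", anr.group(1))] += 1
    return findings.most_common()  # ties keep first-seen order

sample_log = """
:Monkey: seed=42 count=500
// CRASH: com.example.app (pid 4312)
// Short Msg: java.lang.NullPointerException
// Long Msg: java.lang.NullPointerException: Attempt to invoke a method on a null object
// NOT RESPONDING: com.example.app (pid 4390)
// CRASH: com.example.app (pid 4401)
// Short Msg: java.lang.IllegalStateException
// CRASH: com.example.app (pid 4455)
// Short Msg: java.lang.NullPointerException
""".splitlines()

for (kind, detail), count in triage(sample_log):
    print(f"{count} x {kind}: {detail}")
```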

Conclusion

Monkey testing is highly effective for uncovering unexpected bugs and improving application stability by simulating random user inputs. Unlike structured testing, it can expose hidden vulnerabilities that predefined test cases miss, making it well suited to stress testing.

While it does not replace formal testing methods, Monkey testing complements them by evaluating how applications handle unpredictable user behavior, crashes, or failures. When applied correctly, it enhances reliability, strengthens user experience, and contributes to building more robust, resilient software.

