
Pilot Testing in Software Testing: Guide for QA Teams and Developers

Learn how to run pilot testing with real users to catch bugs, improve usability, and validate ideas. Includes tools, steps, templates, and expert tips.

Last Modified on: September 26, 2025


Pilot testing is a preliminary stage designed to evaluate a product, process, or methodology on a smaller scale before full-scale implementation. It’s a small, low-risk test that helps identify problems, confirm feasibility, and make improvements before a full rollout.

Pilot testing allows you to refine designs based on real-world feedback. In software, it helps spot bugs and UX issues that may not appear in a lab, reducing uncertainty before a full launch.

Overview

Pilot testing is a software testing approach that involves releasing a product or feature to a small, representative group of users under near-real conditions before a full-scale launch.

Why pilot testing matters:

- Catches bugs and usability issues early

- Improves product quality with real user feedback

- Reduces post-launch costs and risks

- Builds stakeholder confidence with real data

How to run pilot testing:

- Define the pilot's scope: time frame, features, audience, or geography.

- Set clear success metrics.

- Recruit real, representative users.

- Simulate real-world scenarios.

- Track key metrics and feedback.

- Prioritize fixes and iterate before rollout.

What Is Pilot Testing?

Pilot testing is a controlled, small-scale version of a larger rollout. It’s used to uncover flaws, measure effectiveness, and prepare for full deployment. The pilot is intentionally limited, either in time, scope, audience, or geography, so you can manage risk while learning from real interactions.

Pilot testing also builds confidence. When you share results with stakeholders (completion rates, issue logs, and user feedback), you give them data, not guesses. That trust leads to faster approvals and fewer surprises.

Key Applications of Pilot Testing

- Software Development: Identifies bugs and UX issues early to confirm the app works as intended, which smooths launches and saves time and resources.

- Research & Surveys: Refines survey questions for clarity and neutrality, helping ensure reliable responses before broader distribution.

- Product Development: Assesses prototypes and designs, collecting feedback to refine products before they move to mass production.

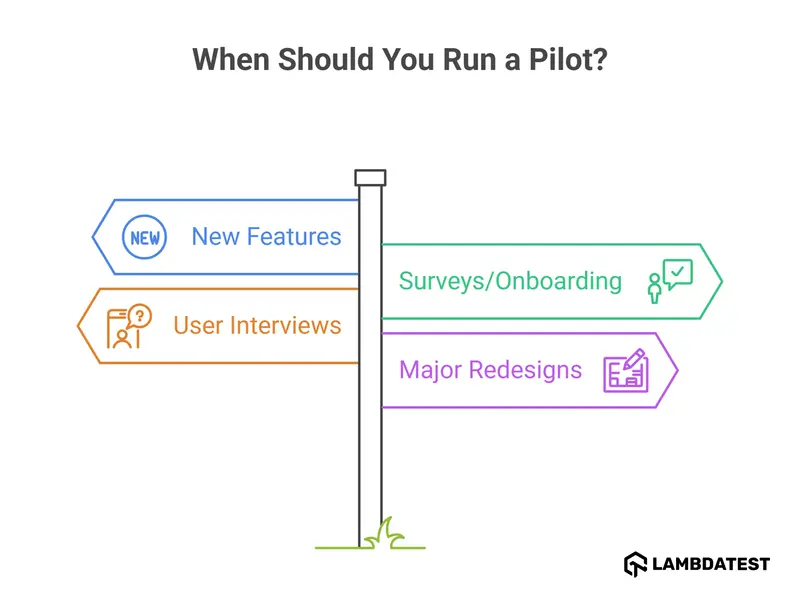

When Should You Run a Pilot?

You run a pilot when the stakes are high and certainty is low. This includes:

- Before launching new features

- Before releasing surveys or onboarding flows

- Before user interviews, if the script is new

- After major redesigns

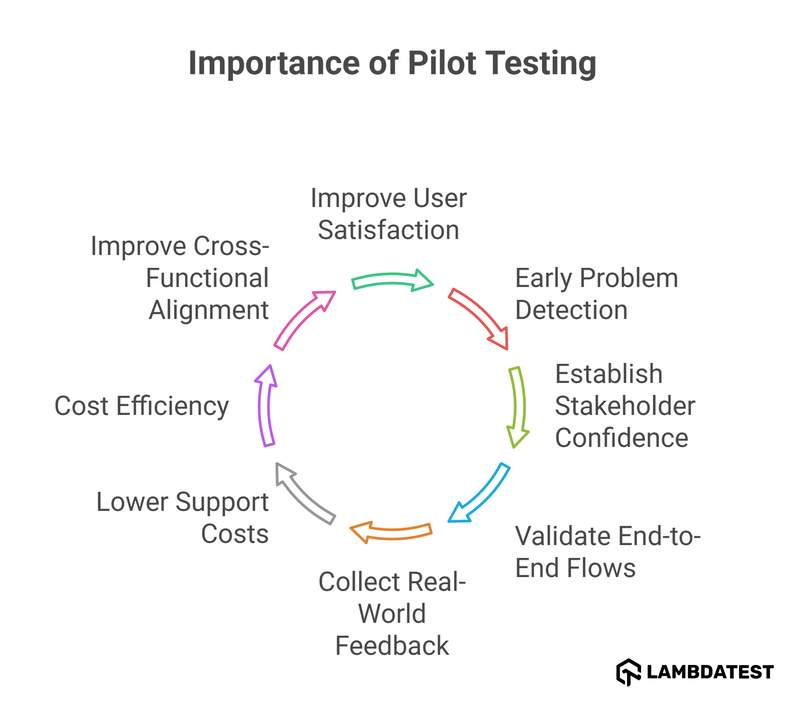

Why Pilot Testing Matters

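Usability research popularized by Jakob Nielsen suggests that testing with just five participants can uncover around 85% of usability issues, so even a small pilot surfaces a large share of them. When done correctly, pilot testing not only strengthens your product but also minimizes rollout risks. Here’s what you gain: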

- Improved User Satisfaction: Pilot testing gathers valuable user feedback, helping you shape a product that meets real-world needs.

- Early Detection of Problems: Identify issues that staging environments often miss, like integration failures, edge-case conditions, or browser-specific errors.

- Cost Efficiency: Fixing problems at the pilot stage is far cheaper than addressing them after launch.

- Validate End-to-End Flows: Make sure users can complete tasks without confusion, roadblocks, or unexpected detours.

- Collect Real-World Feedback: Learn how actual users behave and where they struggle, giving you qualitative input alongside metrics.

- Lower Post-Release Support Costs: Pilot testing often uncovers usability problems that would otherwise turn into support tickets. Fixing them early reduces downstream churn.

- Establish Confidence with Stakeholders: When you present metrics from a successful pilot, you reduce decision friction.

- Improve Cross-Functional Alignment: Sharing pilot results across QA, product, design, and engineering teams keeps everyone grounded in real usage, not assumptions.

Step-by-Step: How to Run a Pilot Test

1. Define What Success Looks Like

Success starts with clarity. What does “ready for release” mean to your team? Set measurable targets before you test. For example:

- 90% of users complete a task in under 3 minutes

- <5% error rate

- Positive qualitative feedback on feature flow

These goals turn your pilot into a decision tool, not a vague exercise.
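You can make the example targets above checkable with a short script. The following is a minimal Python sketch. The `TaskResult` schema and the `meets_targets` helper are hypothetical. Measuring error rate as errors per user action is also an assumption. Qualitative feedback on the feature flow still needs human review.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """One participant's attempt at a pilot task (hypothetical schema)."""
    completed: bool
    seconds: float
    errors: int   # errors the participant hit during the attempt
    actions: int  # total actions taken; assumed denominator for the error rate


def meets_targets(results, max_seconds=180.0, min_fast_share=0.90, max_error_rate=0.05):
    """Compare pilot results with the example targets: 90% of users
    complete the task in under 3 minutes, and the error rate stays below 5%."""
    fast = sum(1 for r in results if r.completed and r.seconds < max_seconds)
    fast_share = fast / len(results)
    total_actions = sum(r.actions for r in results)
    error_rate = sum(r.errors for r in results) / total_actions if total_actions else 0.0
    return {
        "fast_share": fast_share,
        "error_rate": error_rate,
        "passed": fast_share >= min_fast_share and error_rate < max_error_rate,
    }


# Example: nine quick completions and one slow abandonment.
pilot = [TaskResult(True, 95, 0, 20) for _ in range(9)] + [TaskResult(False, 240, 3, 25)]
print(meets_targets(pilot))
```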

2. Choose the Right Participants

Sample selection matters. Internal testers are fine for early validation, but for usability or market feedback, recruit real users who match your audience. Keep the group small: 5 to 10 users are often enough to reveal most usability issues.
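This rule of thumb comes from the Nielsen-Landauer model. The model estimates the share of usability problems that n users will find as 1 - (1 - p)^n, where p is the probability that a single user runs into a given problem. The sketch below assumes Nielsen's reported cross-project average of p = 0.31. With that value, five users find roughly 84% of problems, close to the commonly quoted 85% figure. The model assumes every problem is equally likely to be hit and that users act independently. Treat it as a rough sizing guide, not a guarantee.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Nielsen-Landauer estimate of the share of usability problems found by n users.

    p_detect is the chance that one user runs into a given problem. 0.31 is the
    cross-project average Nielsen reported; your product's value will differ.
    """
    return 1 - (1 - p_detect) ** n_users


for n in (1, 3, 5, 10):
    print(f"{n:>2} users -> {share_of_problems_found(n):.0%}")
```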

3. Set Up Tools and Scenarios

The pilot environment should mirror production as closely as possible. Otherwise, the feedback won’t reflect what users will actually face.

Tools to consider:

- Surveys: LimeSurvey, Typeform, SurveyMonkey

- UX testing: Maze, UXtweak

- Automation + CI: LambdaTest

- Session replay & logging: FullStory, Hotjar, LogRocket

Build out realistic scenarios like:

- Common flows: account creation, form submission, checkout, etc.

- Edge cases: slow network, mobile interactions, error paths

Create task instructions or scripts. Brief moderators if it’s a guided session. Provide fallback support.
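One way to keep scenario coverage systematic is to pair each common flow with each test condition and then add the error paths. Each resulting scenario gets a task instruction. The following is a minimal Python sketch. The flow, condition, and error-path names are hypothetical placeholders based on the examples above.

```python
from itertools import product

# Hypothetical placeholders; replace with your product's flows and conditions.
FLOWS = ["account creation", "form submission", "checkout"]
CONDITIONS = ["desktop, fast network", "mobile, fast network", "mobile, slow network"]
ERROR_PATHS = {"form submission": ["invalid input"], "checkout": ["payment declined"]}


def build_scenarios(flows, conditions, error_paths):
    """Return one happy-path scenario per (flow, condition) pair plus one per
    (error path, condition) pair, each with a participant task instruction."""
    scenarios = [
        {"flow": f, "condition": c, "path": "happy path"}
        for f, c in product(flows, conditions)
    ]
    for flow, paths in error_paths.items():
        for path, cond in product(paths, conditions):
            scenarios.append({"flow": flow, "condition": cond, "path": path})
    for s in scenarios:
        s["instruction"] = f"Complete {s['flow']} ({s['path']}) on {s['condition']}."
    return scenarios


for s in build_scenarios(FLOWS, CONDITIONS, ERROR_PATHS):
    print(s["instruction"])
```

Generating the full cross-product makes gaps visible. You can then drop combinations that don't apply instead of forgetting ones that do.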

4. Run the Pilot

Treat it like a live test. Provide the same instructions and support you would in a full rollout. Observe how users interact, where they hesitate, and what breaks. If possible, record sessions for later analysis; tools like Lookback or Loom make this easy. Be transparent: always ask for consent before recording.
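If you capture your own interaction events alongside a recording tool, you can put the consent check directly in the logging call. The following is a hypothetical, minimal Python event log. The `PilotSession` class and the event names are assumptions. They are not the API of Lookback, Loom, or any other tool.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PilotSession:
    """Event log for one pilot participant (hypothetical schema)."""
    participant_id: str
    consented: bool
    events: list = field(default_factory=list)

    def log(self, kind: str, target: str = "", timestamp: Optional[float] = None) -> bool:
        """Append an event such as 'task_start', 'step', 'click', 'error', or
        'task_complete'. Nothing is stored unless the participant consented."""
        if not self.consented:
            return False
        t = time.time() if timestamp is None else timestamp
        self.events.append({"t": t, "kind": kind, "target": target})
        return True


session = PilotSession("p01", consented=True)
session.log("task_start")
session.log("click", "#submit")
print(len(session.events))
```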

5. Collect and Analyze Data

Instead of focusing solely on success or failure, dive deeper into the overall experience. Track key metrics like time on task, task success rate, drop-off points, and rage clicks (rapid, repeated clicks on the same element, usually a sign of frustration).
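Given time-ordered interaction events for each participant, you can compute these four metrics directly. This Python sketch assumes a hypothetical event schema: `t` in seconds, `kind`, and `target`. A "drop-off point" is taken to be the last step a non-completing participant reached. A rage click is taken to mean three or more clicks on the same element within one second. That heuristic is an assumption, and session replay tools may define rage clicks differently.

```python
from collections import Counter
from statistics import median


def rage_click_bursts(events, min_clicks=3, window=1.0):
    """Count bursts of at least `min_clicks` consecutive clicks on the same target
    within `window` seconds. These thresholds are assumptions."""
    clicks = [e for e in events if e["kind"] == "click"]
    bursts, i = 0, 0
    while i < len(clicks):
        j = i
        while (j + 1 < len(clicks)
               and clicks[j + 1]["target"] == clicks[i]["target"]
               and clicks[j + 1]["t"] - clicks[i]["t"] <= window):
            j += 1
        if j - i + 1 >= min_clicks:
            bursts += 1
            i = j + 1  # skip past the burst so it is counted once
        else:
            i += 1
    return bursts


def summarize(sessions):
    """sessions maps participant id -> time-ordered events (hypothetical schema)."""
    durations, drop_offs, rage = [], Counter(), 0
    for events in sessions.values():
        start = next(e["t"] for e in events if e["kind"] == "task_start")
        done = [e["t"] for e in events if e["kind"] == "task_complete"]
        if done:
            durations.append(done[0] - start)  # time on task
        else:
            steps = [e["target"] for e in events if e["kind"] == "step"]
            drop_offs[steps[-1] if steps else "before first step"] += 1
        rage += rage_click_bursts(events)
    return {
        "task_success_rate": len(durations) / len(sessions),
        "median_time_on_task": median(durations) if durations else None,
        "drop_off_points": dict(drop_offs),
        "rage_click_bursts": rage,
    }
```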

When tagging issues, categorize them by severity (Critical, High, Medium, Low), type (UI glitch, logic error, confusion, crash), and cause (design flaw, outdated content, API failure). Organize these findings into a shareable dashboard or spreadsheet, and prioritize fixes based on risk and frequency.
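To make "prioritize by risk and frequency" concrete, you can score each tagged issue by a severity weight multiplied by the share of participants who hit it. The following Python sketch is hypothetical. The numeric weights are assumptions, and the tags reuse the categories above.

```python
from dataclasses import dataclass

# Assumed weights; adjust to your team's severity scale.
SEVERITY_WEIGHT = {"Critical": 4, "High": 3, "Medium": 2, "Low": 1}


@dataclass
class Issue:
    title: str
    severity: str    # Critical, High, Medium, Low
    issue_type: str  # UI glitch, logic error, confusion, crash
    cause: str       # design flaw, outdated content, API failure
    affected: int    # number of participants who hit the issue


def prioritize(issues, participants):
    """Rank by risk x frequency: severity weight times share of participants
    affected, breaking ties in favor of the more severe issue."""
    def key(issue):
        weight = SEVERITY_WEIGHT[issue.severity]
        return (weight * issue.affected / participants, weight)
    return sorted(issues, key=key, reverse=True)


issues = [
    Issue("Typo on confirmation page", "Low", "UI glitch", "outdated content", 8),
    Issue("Checkout crashes on Safari", "Critical", "crash", "API failure", 3),
    Issue("Unclear shipping options", "Medium", "confusion", "design flaw", 5),
]
for issue in prioritize(issues, participants=10):
    print(issue.severity, issue.title)
```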

6. Iterate and Improve

Review your pilot data and fix the most critical issues first. Focus on what impacts the user experience and core workflows. Keep a simple changelog to track updates.

If needed, rerun the pilot with a fresh group to validate improvements. Then, check your success metrics:

- If goals are met, move forward.

- If key issues remain, refine and retest.

- If results fall short entirely, pause and reassess.
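You can encode these three outcomes as a simple decision rule, so the team agrees on the decision before results arrive. This Python sketch is hypothetical. Two thresholds in it are assumptions you should tune: treating any open Critical issue as a blocker, and treating "no targets met" as falling short entirely.

```python
def pilot_decision(targets_met: int, targets_total: int, open_critical: int) -> str:
    """Map pilot results to the three outcomes listed above.

    Treating open Critical issues as the 'key issues' is an assumption.
    """
    if targets_total <= 0:
        raise ValueError("define at least one success target before deciding")
    if targets_met == 0:
        return "pause and reassess"   # results fall short entirely
    if targets_met == targets_total and open_critical == 0:
        return "move forward"         # goals met and nothing blocking
    return "refine and retest"        # partial success or key issues remain


print(pilot_decision(targets_met=3, targets_total=3, open_critical=1))
```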

Note: Run your pilot test on real browsers and devices with LambdaTest to catch environment-specific issues early.

Wrap it up with a short report that highlights what was tested, what changed, and whether you’re ready to launch.

Pilot Testing Report (with Template)

A pilot testing report is a concise summary of what was tested, what was learned, and what actions were taken. It helps stakeholders make data-informed decisions before full-scale rollout.

The report generally captures:

- Objectives

- User feedback and metrics

- Issues found and their severity

- Fixes implemented

- Final recommendation (Go / No-Go / Iterate)
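Generating the report from the same data you analyzed keeps its sections consistent from one pilot to the next. The `PilotReport` class below is a hypothetical Python sketch. It renders the five sections listed above as plain text and rejects any recommendation other than Go, No-Go, or Iterate.

```python
from dataclasses import dataclass, field

RECOMMENDATIONS = {"Go", "No-Go", "Iterate"}


@dataclass
class PilotReport:
    objectives: list
    metrics: dict
    issues: list          # (title, severity) pairs
    fixes: list
    recommendation: str
    feedback: list = field(default_factory=list)  # short user quotes

    def render(self) -> str:
        """Render the report sections as plain text."""
        if self.recommendation not in RECOMMENDATIONS:
            raise ValueError(f"recommendation must be one of {sorted(RECOMMENDATIONS)}")
        lines = ["Objectives:"] + [f"- {o}" for o in self.objectives]
        lines += ["User feedback and metrics:"] + [f"- {k}: {v}" for k, v in self.metrics.items()]
        lines += [f'- "{q}"' for q in self.feedback]
        lines += ["Issues found:"] + [f"- [{sev}] {title}" for title, sev in self.issues]
        lines += ["Fixes implemented:"] + [f"- {f}" for f in self.fixes]
        lines.append(f"Final recommendation: {self.recommendation}")
        return "\n".join(lines)


report = PilotReport(
    objectives=["Validate the checkout flow"],
    metrics={"task success rate": "90%"},
    issues=[("Checkout crashes on Safari", "Critical")],
    fixes=["Patched Safari payment handler"],
    recommendation="Iterate",
)
print(report.render())
```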

Here’s a quick, ready-to-use Pilot Testing Report Template to align teams and document findings clearly.

Pilot Surveys to Validate User Experience Before Full Release

A pilot survey is a small-scale test of a questionnaire or form before large-scale deployment. In software, this might mean testing in-app feedback forms or onboarding surveys with a small user group.

Why pilot surveys matter:

- Ensure questions are clear and unbiased

- Spot drop-off points or confusion in the survey interface

- Validate that the response data is meaningful and actionable
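To find drop-off points, a per-question answer rate computed from the pilot responses is often enough. This Python sketch assumes each response is a dict that maps question IDs to answers. A missing key or an empty string counts as unanswered. The function names are hypothetical.

```python
def answer_rates(responses, questions):
    """Share of pilot respondents who answered each question, in survey order."""
    n = len(responses)
    rates = []
    for q in questions:
        answered = sum(1 for r in responses if r.get(q) not in (None, ""))
        rates.append((q, answered / n))
    return rates


def biggest_drop(rates):
    """Question whose answer rate falls most compared with the previous one."""
    drops = [(rates[i - 1][1] - rates[i][1], rates[i][0]) for i in range(1, len(rates))]
    return max(drops)[1] if drops else None


questions = ["q1_role", "q2_goal", "q3_rating"]
responses = [
    {"q1_role": "QA", "q2_goal": "speed", "q3_rating": "4"},
    {"q1_role": "Dev", "q2_goal": ""},
    {"q1_role": "PM"},
]
rates = answer_rates(responses, questions)
print(rates)
print("Largest drop at:", biggest_drop(rates))
```

A sharp fall between consecutive questions usually points to a confusing question or interface step. Review that spot before the full release.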

Here’s a quick Pilot Testing Questionnaire Template to collect structured feedback during your pilot. It covers usability, performance, satisfaction, and more.

Pretesting vs. Pilot Testing vs. Beta Testing

Here is a detailed comparison table for pretesting vs. pilot testing vs. beta testing, covering their key differences across multiple attributes:

| Aspect | Pretesting | Pilot Testing | Beta Testing |
|---|---|---|---|
| Primary Goal | Validate individual elements (e.g., questions, UI components, instructions) | Test the full product flow or feature in a real-world, small-scale setting | Collect broad user feedback at scale under near-final or production conditions |
| Focus Area | Clarity, logic, and usability of specific parts | End-to-end experience, functionality, stability, and user satisfaction | Market readiness, performance under load, long-tail issues, and adoption patterns |
| Audience | Internal team, usability experts, or limited stakeholders | Real users from target segments, recruited intentionally | General public, early adopters, or opt-in customers |
| Sample Size | Very small (1–3 people) | Small but representative (usually 5–15 users) | Large and diverse (hundreds to thousands) |
| Environment | Lab-like, highly controlled | Simulated or near-production | Real-world, production, or near-production |
| Test Scope | Isolated elements or modules | Entire workflows, cross-functional dependencies, and real use conditions | Full product, across environments, devices, and user scenarios |
| Duration | Short, often hours or a few days | Short to medium, typically 1–2 weeks | Medium to long, can last several weeks to months |
| Feedback Type | Immediate expert feedback, usability observations | Structured and unstructured feedback, metrics, and behavioral observations | Real-world feedback, bug reports, support queries, usage analytics |
| Tools Involved | Wireframes, clickable prototypes, survey drafts, static mockups | Feature-complete test builds, session replay tools, and test management dashboards | Final builds, bug tracking tools, telemetry/analytics platforms |
| Risk Exposure | Very low | Low to medium | Medium to high |
| Output/Deliverable | Refined content, UI components, survey questions | Pilot report with success metrics, issue log, and go/no-go decision | Public release notes, changelogs, backlog of feedback |
| Best Used When | Designing surveys, new UI elements, or instructions | Validating newly developed features, workflows, or platforms before rollout | Gearing up for the final launch or stress testing a release with mass adoption |
| Objective | Reduce friction in design or content before development starts | Ensure stability, usability, and performance before wide rollout | Detect scalability or edge-case issues in real-world conditions |
| Common Mistakes | Overreliance on internal bias, skipping expert reviews | Using internal testers only, skipping success metrics, treating it like a demo | Launching without moderation, under-supporting feedback channels, and ignoring data from early adopters |

Common Mistakes (and How to Avoid Them)

- Using internal testers only: Biases results. Always test with real users.

- Skipping success criteria: Without targets, you can't measure effectiveness.

- Treating it as a formality: Pilots are experiments, not demos. Expect things to break.

- Not iterating: A pilot isn’t useful unless it leads to change.

Best Practices for Pilot Testing

1. Define Clear Goals: Set measurable, achievable objectives for what you want to learn or validate.

2. Choose a Representative Sample: Ensure your sample group reflects your target audience for relevant feedback.

3. Collect Quantitative & Qualitative Feedback: Combine data with user comments for a complete picture of performance.

4. Act on Feedback: Use insights to make meaningful improvements and adjust your product or process accordingly.

Additional Resources

- Download Examples of Software Pilot Testing: Real-world example of Healthcare Appointment App pilot testing in a clinical setting

- Download UX Testing Checklist: Step-by-step guide to ensure usability coverage during pilot tests

Final Thoughts

Pilot testing is a practical insurance policy against avoidable failure. Before launching software, a survey, or a service workflow, test it with a small group first. Observe. Learn. Adjust. Then scale.

The most polished products aren’t built in a vacuum. They’re rehearsed. And pilot testing is how you do that well.

