How False Positive and False Negative Affect Product Quality

Yatish Jhamb

Posted On: September 18, 2025


False positives and false negatives can undermine modern software testing. With fast CI/CD cycles and high customer expectations, testing is about trust, not just bug-hunting. Wrong signals waste developer time, frustrate QA, block pipelines, and delay releases. This blog explores their causes, their team-wide impact, and strategies to reduce them, including AI-powered tools like LambdaTest’s Test Insights for detecting flaky tests and improving test reliability.

Overview

False positives and false negatives are two common types of errors in testing and classification. Both affect the accuracy of results, but in different ways. A false positive incorrectly flags a problem that does not exist, while a false negative misses a real issue, giving a false sense of security.

False Positive (Type I Error)

Key details about false positives:

- Definition: A test indicates a bug or condition that is not actually present.

- Medical Example: A test shows a disease when the person is healthy.

- Software Example: A UI regression test fails due to a harmless CSS change.

- Impact: Wasted time, unnecessary debugging, and reduced trust in automation.

False Negative (Type II Error)

Key details about false negatives:

- Definition: A test fails to detect a real bug or condition that is present.

- Medical Example: A test shows negative results, but the disease actually exists.

- Software Example: A performance test misses a memory leak under load.

- Impact: Bugs slip into production, causing reliability issues and potential business loss.

Key Differences

How false positives and negatives differ:

- False Positive: Indicates a bug when none exists (a false alarm).

- False Negative: Misses a bug that exists (a missed detection).

- Consequences: False positives waste resources, while false negatives risk product quality and customer trust.

TABLE OF CONTENTS

- What Are False Positive and False Negative?

- Why False Negatives Are More Dangerous

- Why They Matter Beyond QA

- Real-World Impact Scenarios – False Negative and Positive

- What Causes False Positives

- What Causes False Negatives

- Strategies to Minimize False Positives

- Strategies to Minimize False Negatives

- Stop Wasting Time on Flaky Tests with LambdaTest Test Insights

- Cross-Team Benefits of Reducing False Positives and Negatives

- Frequently Asked Questions (FAQs)

What Are False Positive and False Negative?

False positives and false negatives are common types of errors in software testing that affect the accuracy of test results. A false positive occurs when a test reports a problem or defect that does not actually exist, leading teams to investigate issues that are not real. A false negative happens when a test fails to detect an actual defect, allowing bugs to pass through undetected. Both types of errors reduce confidence in testing, waste effort, and undermine the reliability of development and release processes. Understanding them is important for improving test design, ensuring accurate results, and maintaining efficient workflows.

- False Positive: A test indicates a defect when the system is actually working correctly.

- Example: A visual regression test fails because of a harmless CSS change.

- False Negative: A test passes when there is, in fact, a real defect.

- Example: A performance test misses a memory leak because it was not run under high load conditions.

Here is a quick comparison:

| Aspect | False Positive | False Negative |
|---|---|---|
| What happens | Test flags a bug that does not exist | Test misses a real bug |
| Example | UI test fails due to a dynamic element mismatch | Security vulnerability goes undetected |
| Short-term impact | Wasted developer time, slowed releases | Bug leaks into production |
| Long-term impact | Reduced trust in automation, flaky pipelines | Customer dissatisfaction, reputational damage |

Both can severely degrade your release velocity, but false negatives are the more damaging of the two.
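To make the two definitions concrete, here is a minimal Python sketch that sorts test outcomes into the four possible cells and computes a false positive rate and a false negative rate. The `Outcome` record, the `SAMPLE` data, and the idea that the ground truth (`defect_present`) comes from later manual triage are all assumptions made for illustration, not part of any specific tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    test_failed: bool     # what the test reported
    defect_present: bool  # ground truth, assumed to come from later triage


def classify(outcome: Outcome) -> str:
    """Map one outcome onto a confusion-matrix cell."""
    if outcome.test_failed and outcome.defect_present:
        return "true_positive"
    if outcome.test_failed and not outcome.defect_present:
        return "false_positive"   # false alarm
    if not outcome.test_failed and outcome.defect_present:
        return "false_negative"   # missed detection
    return "true_negative"


def error_rates(outcomes):
    """Return (counts, false positive rate, false negative rate)."""
    counts = Counter(classify(o) for o in outcomes)
    fp, tn = counts["false_positive"], counts["true_negative"]
    fn, tp = counts["false_negative"], counts["true_positive"]
    # FPR: share of healthy cases that were flagged anyway.
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    # FNR: share of real defects that the tests missed.
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return counts, fpr, fnr


# Hypothetical sample data for illustration only.
SAMPLE = [
    Outcome("login_ui", test_failed=True, defect_present=False),
    Outcome("checkout_api", test_failed=True, defect_present=True),
    Outcome("memory_under_load", test_failed=False, defect_present=True),
    Outcome("search", test_failed=False, defect_present=False),
    Outcome("profile", test_failed=False, defect_present=False),
]

if __name__ == "__main__":
    counts, fpr, fnr = error_rates(SAMPLE)
    print(dict(counts), fpr, fnr)
```

Tracking these two rates separately is useful because the fixes differ: a high false positive rate points at unstable tests, while a high false negative rate points at coverage gaps.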


Why False Negatives Are More Dangerous

While both errors are problematic, false negatives are more costly:

- They allow bugs to ship undetected into production.

- They lead to user-facing defects that damage trust.

- They create expensive firefighting for DevOps and engineering.

A false positive wastes hours. A false negative can cost millions.

Think of it like security: a false alarm is annoying, but failing to detect a real breach can be catastrophic.

Why They Matter Beyond QA

False positives and false negatives are often discussed in QA contexts, but their consequences ripple across the entire software delivery ecosystem.

For Developers

- False Positives: Developers lose time investigating tests that fail for no reason. This kills momentum during sprint cycles.

- False Negatives: Developers unknowingly ship defective code, leading to late-stage debugging when the cost of fixing is highest.

For DevOps Teams

- False Positives: Pipelines fail unnecessarily and become flaky, which wastes compute resources and delays deployments.

- False Negatives: Weak test coverage lets regressions sneak into production, making rollback procedures or hotfixes more frequent.

For Product Managers and Business Leaders

- False Positives: Cause slower release velocity, meaning features take longer to reach users.

- False Negatives: Put customer trust and revenue at risk. Bugs slipping into production lead to churn, poor reviews, and reputational hits.

Real-World Impact Scenarios – False Negative and Positive

Scenario 1: The Developer’s Perspective

A frontend engineer runs the CI pipeline and sees 12 failing UI tests. After hours of debugging, it turns out all were caused by a dynamic element ID mismatch. This is a classic case of false positives. Productivity drops, sprint velocity slows, and frustration builds.

Scenario 2: The DevOps Pipeline Perspective

A fintech team runs automated API regression tests in GitHub Actions. A misconfigured test case keeps failing despite no real issue. Deployments get blocked multiple times a week, reducing trust in the pipeline.

Scenario 3: The Product Manager’s Nightmare

An e-commerce platform skips extensive load testing. During Black Friday sales, the system crashes under heavy concurrency. The test suite passed while a real defect was present, and this false negative results in abandoned carts, lost sales, and a hit to the brand’s reputation.

How False Positives and Negatives Erode Trust

The biggest problem is not just wasted time. It is trust erosion.

- Developers stop trusting test results and start ignoring them.

- QA engineers spend cycles triaging noise instead of writing better tests.

- DevOps treats flaky pipelines as expected.

- Product managers question the ROI of test automation.

Once trust is broken, the entire testing process loses credibility.

What Causes False Positives

False positives often come down to poorly designed or unstable tests.

- Brittle Test Scripts: Hard-coded waits, fragile selectors, and environment assumptions make tests fail even when the app is fine.

- Environment Issues: Differences between staging and production environments create mismatches, especially in UI and API tests.

- Overly Strict Assertions: Assertions that flag even non-critical changes, such as font size or element position, as defects generate unnecessary noise.

- Tooling Limitations: Basic automation frameworks without intelligent test validation lack the context to differentiate between real bugs and harmless changes.
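As an illustration of the hard-coded wait problem listed above, the following Python sketch replaces a fixed `time.sleep` with a polling wait that returns as soon as a condition holds. The helper name `wait_until` and its default timings are assumptions for this example; UI frameworks typically ship their own explicit-wait helpers that serve the same purpose.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False on timeout. Unlike a fixed
    sleep, this does not fail when the app is merely slower than usual, and
    it does not waste time when the app is faster.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    # Simulated element that becomes "visible" about 0.2 seconds from now.
    visible_at = time.monotonic() + 0.2
    print(wait_until(lambda: time.monotonic() >= visible_at, timeout=2.0))
```

A test built on a fixed sleep fails whenever the environment is slower than the sleep allows, which is a false positive; polling with a generous timeout removes that source of noise while still failing if the condition never becomes true.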

What Causes False Negatives

False negatives typically stem from gaps in test coverage or weak execution.

- Incomplete Test Coverage: Critical scenarios are not automated, so bugs in those paths slip through.

- Poor Data Variety: Tests pass with happy-path inputs but fail to explore edge cases.

- Mocking Overuse: Excessive reliance on mocks makes tests blind to real integration issues.

- Ignoring Scale and Load: Performance or concurrency issues are missed because tests are not run under real-world loads.
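The mocking overuse point above can be illustrated with a small Python sketch. Everything here is hypothetical: `order_total_cents` stands in for code under test, and `FakeOrderService` stands in for a real service that, in this scenario, has renamed a response field. The mocked test still passes because the mock encodes the same outdated assumption as the code.

```python
from unittest.mock import Mock


def order_total_cents(client) -> int:
    """Hypothetical code under test: assumes the payload has 'amount_cents'."""
    payload = client.get_order()
    return payload["amount_cents"]


class FakeOrderService:
    """Stand-in for the real service, which (in this sketch) now returns
    'total_cents' instead of 'amount_cents'."""

    def get_order(self) -> dict:
        return {"total_cents": 1999}


def mocked_test_passes() -> bool:
    # The mock mirrors the code's assumption, so the defect stays invisible.
    client = Mock()
    client.get_order.return_value = {"amount_cents": 1999}
    return order_total_cents(client) == 1999


def integration_test_passes() -> bool:
    # Exercising the realistic response shape exposes the mismatch.
    try:
        return order_total_cents(FakeOrderService()) == 1999
    except KeyError:
        return False


if __name__ == "__main__":
    print("mocked:", mocked_test_passes())
    print("integration:", integration_test_passes())
```

The mocked test is a false negative in miniature: it reports success while the integration is broken. Keeping at least a few tests that run against the real dependency, or a contract-verified stand-in, is one way to close that gap.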

Strategies to Minimize False Positives

1. Smarter Test Design

- Use stable locators such as data-test attributes instead of brittle XPath.

- Write assertions that validate intent, not cosmetic details.

2. Environment Consistency

- Keep test and staging environments closely aligned with production.

- Use containerization with Docker or Kubernetes for reliable test infrastructure.

3. Flaky Test Management

- Quarantine flaky tests until fixed.

- Use AI-driven detection to auto-identify unstable patterns.
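For the flaky test management step above, a simple rule-based starting point is to flag any test that both passed and failed on the same commit, since the code did not change between those runs. The Python sketch below assumes run history is available as `(test_name, commit_sha, passed)` tuples; that format and the sample data are assumptions for illustration. This is a basic heuristic, not a description of how any AI-driven product detects flakiness.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple

Run = Tuple[str, str, bool]  # (test_name, commit_sha, passed)


def find_flaky_tests(runs: Iterable[Run]) -> List[str]:
    """Return tests that have both a pass and a fail on the same commit."""
    results = defaultdict(set)
    for name, sha, passed in runs:
        results[(name, sha)].add(passed)
    flaky = {name for (name, _sha), seen in results.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical history for illustration only.
HISTORY: List[Run] = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),     # same commit, different result
    ("test_checkout", "abc123", False),
    ("test_checkout", "abc123", False),  # consistently failing, not flaky
    ("test_search", "abc123", True),
    ("test_search", "def456", False),    # different commit, may be a real regression
]

if __name__ == "__main__":
    print(find_flaky_tests(HISTORY))
```

Tests returned by this function are candidates for quarantine. Consistent failures and results that change only across commits are deliberately left out, because they may indicate real defects.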

Strategies to Minimize False Negatives

1. Broaden Test Coverage

- Use equivalence partitioning and boundary value analysis.

- Automate edge cases, not just happy paths.

2. Data-Driven Testing

- Expand test datasets with varied inputs.

- Simulate real-world traffic, devices, and browsers.

3. Continuous Testing in CI/CD

- Run tests on every pull request.

- Integrate pipelines with real device and browser testing for accuracy.
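The boundary value analysis mentioned under the first strategy above can be sketched in a few lines of Python. The validation rule `is_valid_quantity` (quantities from 1 to 100) is a hypothetical example, and `boundary_values` generates the usual candidates for an inclusive integer range: just outside, on, and just inside each bound.

```python
from typing import List


def boundary_values(low: int, high: int) -> List[int]:
    """Boundary test inputs for an inclusive integer range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def is_valid_quantity(qty: int) -> bool:
    """Hypothetical rule under test: order quantity must be 1 to 100."""
    return 1 <= qty <= 100


def check_boundaries(low: int, high: int) -> List[int]:
    """Return the boundary inputs where the rule disagrees with the range."""
    return [
        value
        for value in boundary_values(low, high)
        if is_valid_quantity(value) != (low <= value <= high)
    ]


if __name__ == "__main__":
    print(boundary_values(1, 100))
    print(check_boundaries(1, 100))
```

A happy-path test with a quantity of 5 would pass even if the rule were mistakenly written as `1 < qty < 100`. The boundary inputs catch that off-by-one error, which is exactly the kind of defect that otherwise becomes a false negative.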

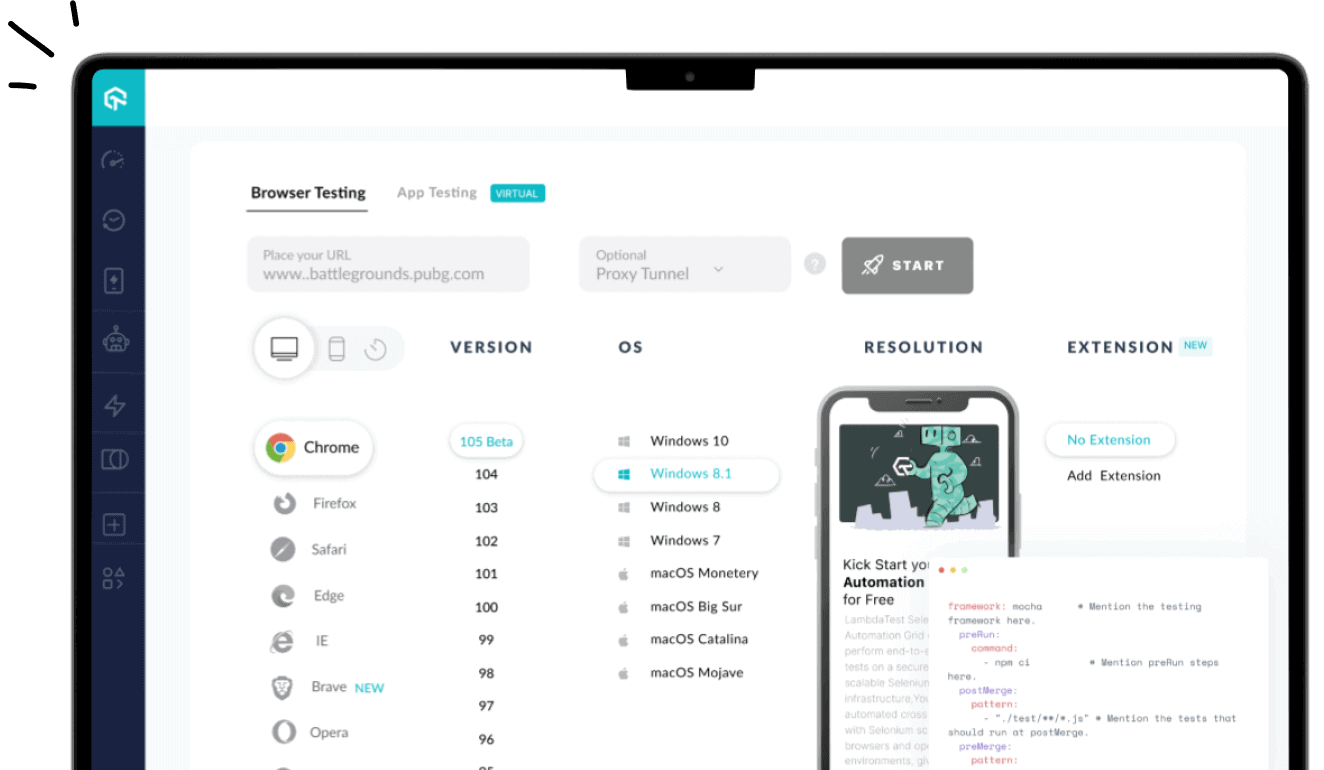

Stop Wasting Time on Flaky Tests with LambdaTest Test Insights

Flaky tests and unreliable automation can slow development and block CI/CD pipelines. LambdaTest Test Insights uses AI to turn test data into actionable intelligence, helping teams detect issues faster and ship with confidence. Key benefits include:

- AI-Native Root Cause Analysis: Quickly pinpoint why a test failed and distinguish real defects from environmental glitches.

- Flaky Test Detection: Automatically identify unstable tests and reduce false positives that waste time.

- Classify Failed Actions: Categorize failed steps to focus on critical issues and cut down repetitive debugging.

- Analyze Test Cases: Get insights into test coverage, execution trends, and gaps to improve overall reliability.

- Detect Anomalies in Test Execution: Flag unexpected behaviors across devices, browsers, and environments.

- Test Failure Categorization AI: Group similar failures and prioritize fixes for faster, smarter triage.

With LambdaTest Test Insights, teams can reduce false positives and negatives, optimize test suites, and accelerate release cycles, making every test run more meaningful and productive.

Cross-Team Benefits of Reducing False Positives and Negatives

Minimizing false positives and false negatives is not just a QA concern. It benefits the entire software delivery ecosystem. Here is how different teams gain from more reliable testing:

- QA Engineers: With fewer false alarms and flaky test results, QA engineers can spend more time designing meaningful test cases instead of triaging noise. Reliable automation increases efficiency, reduces frustration, and allows QA to focus on uncovering real defects that matter.

- Developers: Accurate test results provide faster and more trustworthy feedback on code changes. Developers waste less time investigating phantom failures and can concentrate on delivering high-quality features. This leads to improved sprint velocity and reduced debugging cycles.

- DevOps Teams: CI/CD pipelines become more stable when test results are reliable. DevOps teams can trust automated checks to validate deployments. This reduces rollback incidents, minimizes blocked releases, and ensures smoother continuous delivery.

- Product Managers: Reliable test results accelerate release cycles and reduce the risk of shipping buggy features. Product managers can confidently launch features faster, maintain high product quality, protect brand reputation, and minimize revenue impact from post-release defects.

When false positives and negatives are minimized, the entire organization operates more efficiently. Teams collaborate better, pipelines remain stable, releases are faster, and everyone, from QA to leadership, can move forward with confidence knowing that the software meets both quality and business expectations.

Conclusion

False positives and false negatives play a critical role in modern software testing. Software teams today ship fast with CI/CD pipelines, shorter release cycles, and rising customer expectations. In this setup, testing is not just about finding bugs; it is about trust. When tests give wrong signals, developers chase phantom issues, real defects slip by, and pipelines lose credibility. These errors may seem minor, but they can derail product strategies, frustrate QA with unreliable automation, waste developer time, block DevOps pipelines, and delay releases. This blog explored their causes, their impact across different teams, and the strategies to minimize them using best practices and smarter test insights.

Frequently Asked Questions (FAQs)

What is a false positive in software testing?

It is when a test incorrectly reports a defect that does not exist, leading to wasted debugging effort.

What is a false negative in CI/CD?

It is when a test passes despite a real defect, allowing bugs to escape into production.

Which is worse: false positives or false negatives?

False negatives are more dangerous because they hide real issues that can impact users and business outcomes.

How can teams reduce false positives?

By writing stable tests, aligning environments, and using AI-based test intelligence to filter out noise.

How can DevOps pipelines avoid false negatives?

By running comprehensive tests in CI/CD with real devices and browsers, and incorporating load and performance testing.

Why do false positives and false negatives matter to product managers?

Because they directly affect release velocity, product quality, and customer trust, which are key drivers of business success.
