Rainbows & Unicorns: Testing Serverless Applications in AWS [Testμ 2023]

LambdaTest

Posted On: August 25, 2023


In an engaging session led by Anaïs van Asselt, we delved into the world of quality assurance within the startup realm. At Choco, a Berlin-based startup with unicorn status, the approach to quality assurance was anything but conventional. We also explored the intricacies of test automation in a serverless architecture on AWS, revealing the unique challenges it presents.

Anaïs’ session offered a wealth of knowledge crucial for thriving in this ever-changing landscape.

About the Speaker

Anaïs van Asselt knows her way around testing and automation. She’s spent ten years working with different organizations and using various testing tools for both the front and back ends of software. Her main goal is to ensure automated testing is done smartly and sustainably. She’s not just about testing; she works closely with developers to ensure everything runs smoothly. Recently, she took on a mission at Choco in Berlin to help create a supply chain that’s good for the planet and reduces food waste. It’s a big challenge, but she’s up for it!

If you couldn’t catch all the sessions live, don’t worry! You can access the recordings at your convenience by visiting the LambdaTest YouTube Channel.

Anaïs van Asselt discussed how working at a startup affects quality and test automation, along with the challenges of test automation in a serverless setup on AWS.

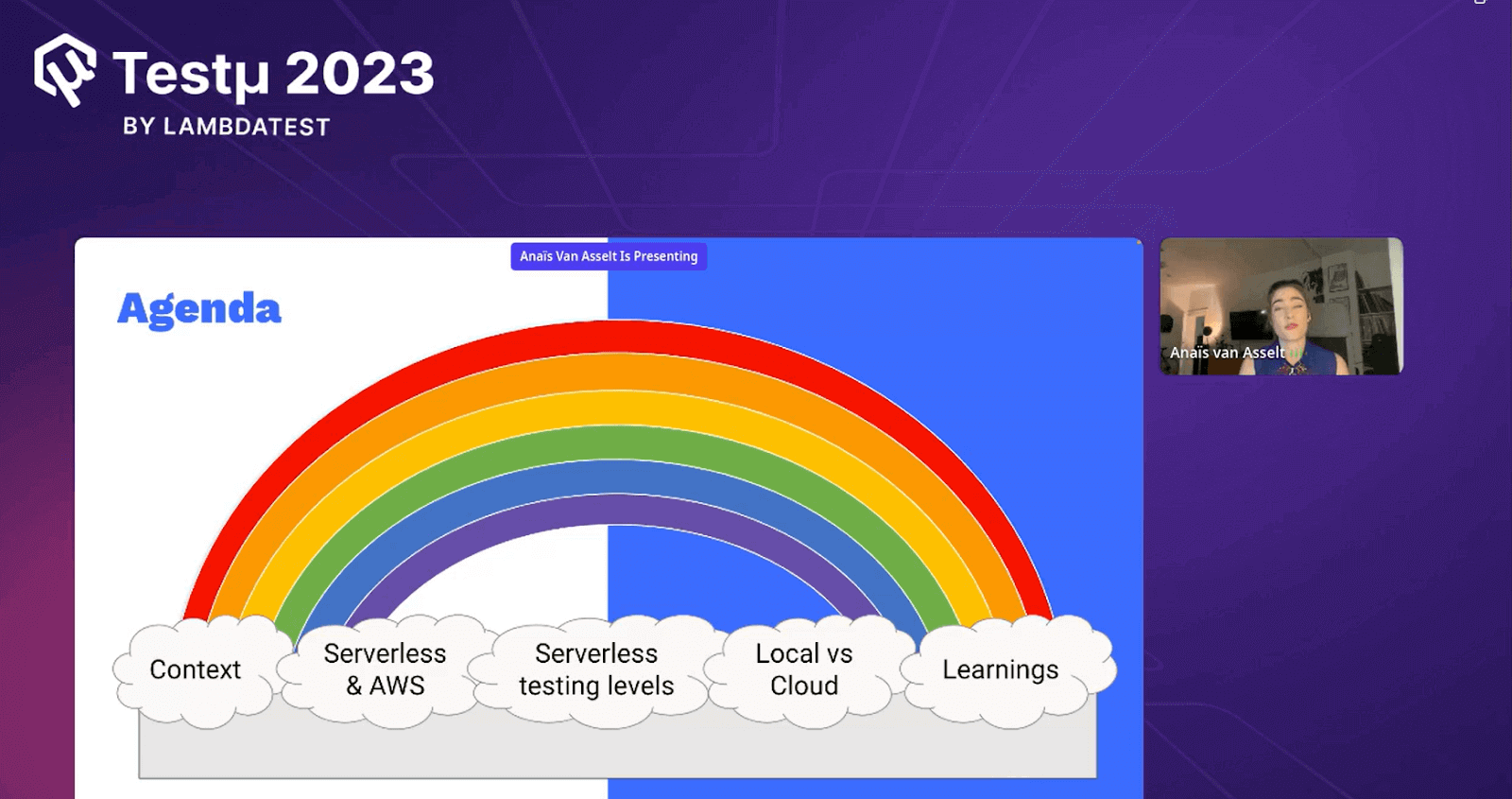

Agenda

- Context

- Serverless & AWS

- Serverless testing levels

- Local vs Cloud

- Learnings

Choco

Anaïs spoke briefly about the application called Choco, highlighting that this application brought together food suppliers and restaurants to manage and help reduce food wastage.

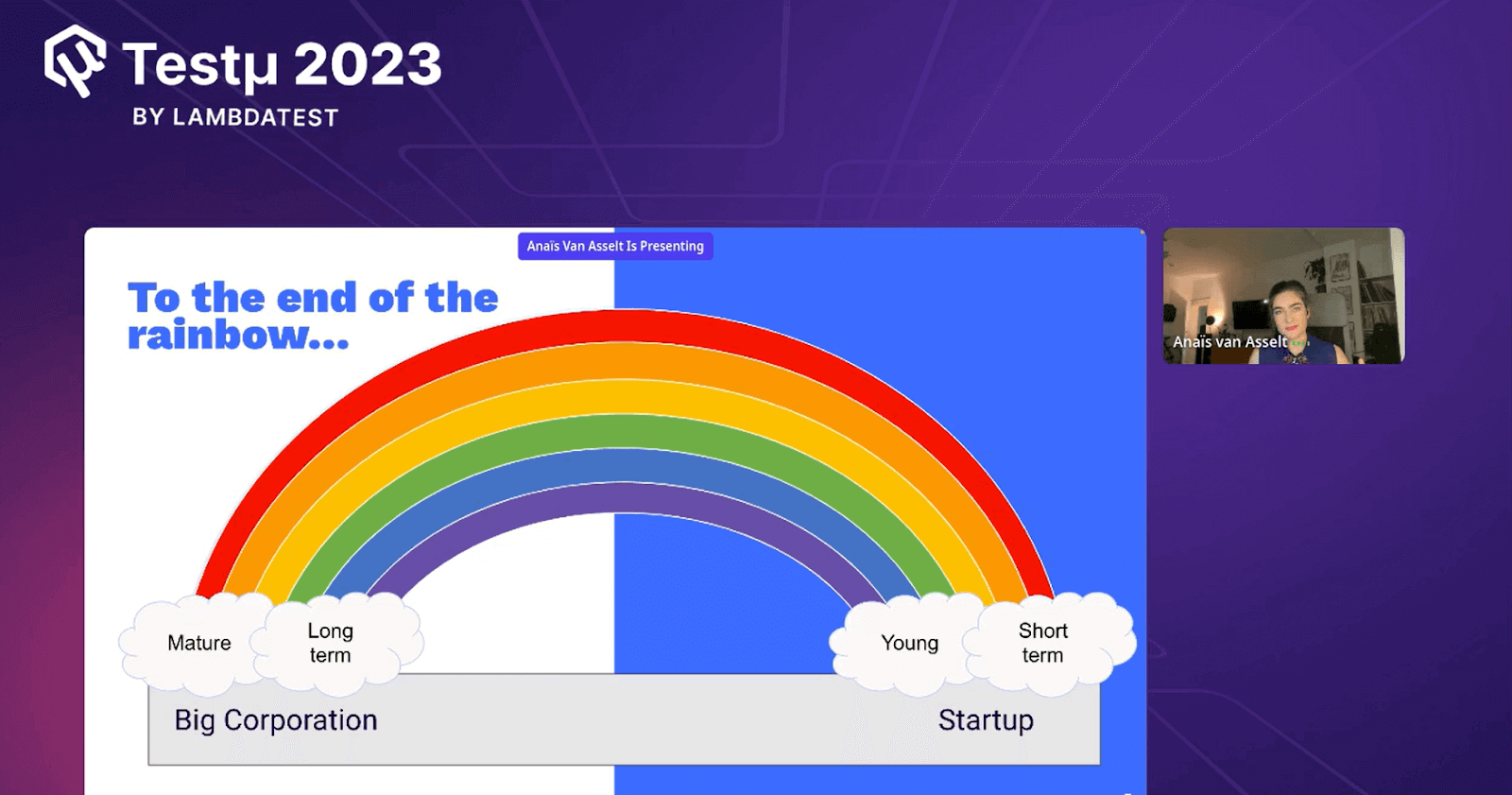

To the end of the Rainbow (Big Corporate – Startups)

Anaïs discussed the contrasting perspectives of big corporations and startups during her presentation. She elaborated on how big corporations tend to have a mature outlook, focusing on long-term strategies and stability. In contrast, relatively young and dynamic startups often prioritize short-term goals and rapid growth.

Anaïs highlighted the differences in their approaches to quality assurance and test automation, emphasizing the need for adaptability in the fast-paced startup environment while valuing established corporations’ stability and long-term vision.

To the end of the Rainbow (Server-based vs Serverless)

During her session, Anaïs delved into the distinction between server-based and serverless architectures. She provided insights into the challenges and considerations associated with test automation in a serverless setup, particularly on AWS (Amazon Web Services).

She shared strategies and best practices for effectively testing serverless workflows and functions, emphasizing the importance of adaptability and innovation in this rapidly evolving technology landscape.

Anaïs discussed how serverless architectures, known for their scalability and cost-efficiency, introduce unique testing challenges compared to traditional server-based systems.

Serverless Computing

Anaïs delved into the realm of serverless computing using the AWS (Amazon Web Services) cloud platform. She discussed how serverless architecture, which involves deploying code without managing traditional server infrastructure, has gained popularity for its scalability and cost-effectiveness.

Anaïs shared valuable insights into the intricacies of testing serverless applications and workflows in the AWS environment.

Her talk revolved around the challenges and strategies for ensuring the reliability and quality of serverless solutions, highlighting the need for innovative testing approaches to adapt to this evolving technology landscape.

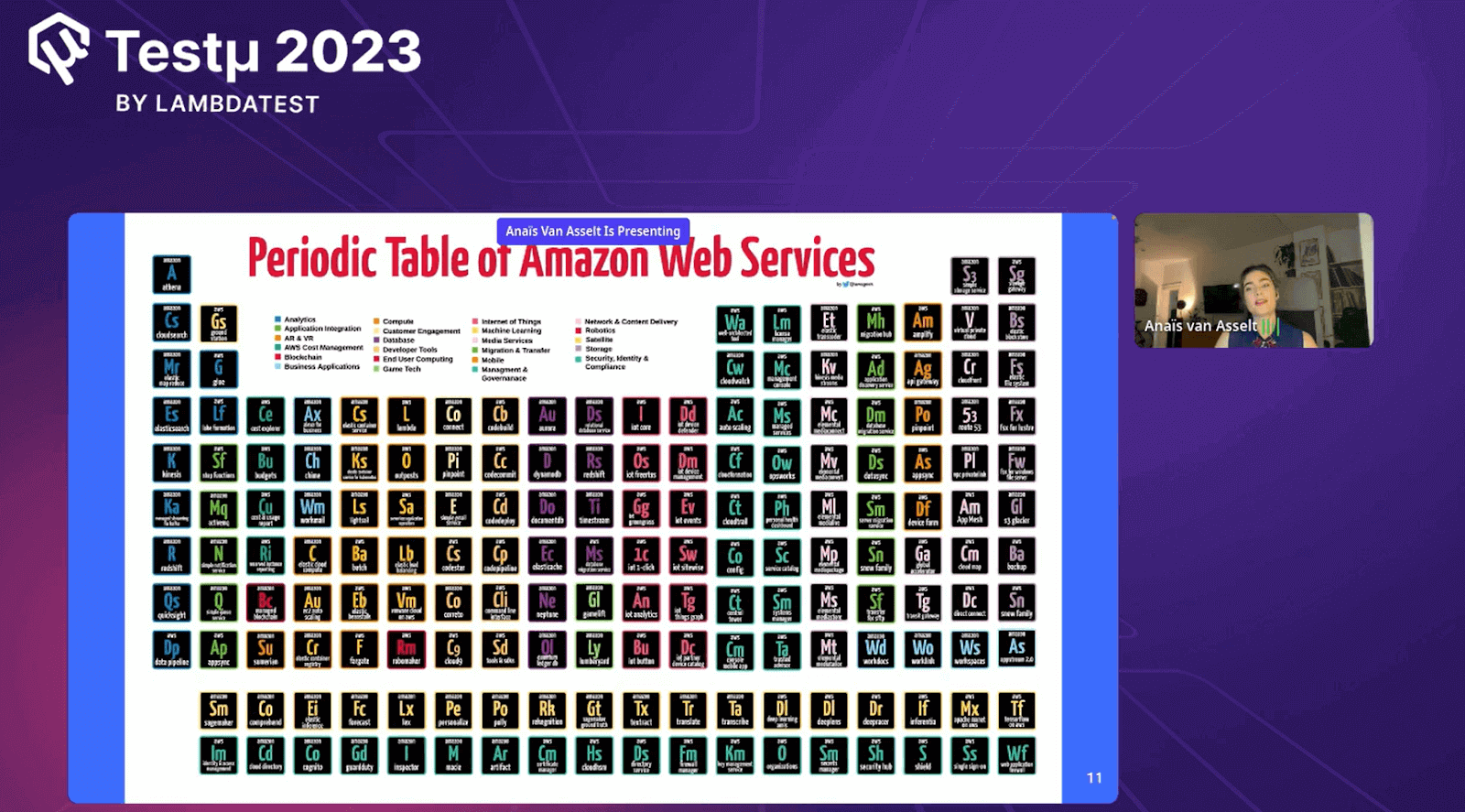

AWS Services

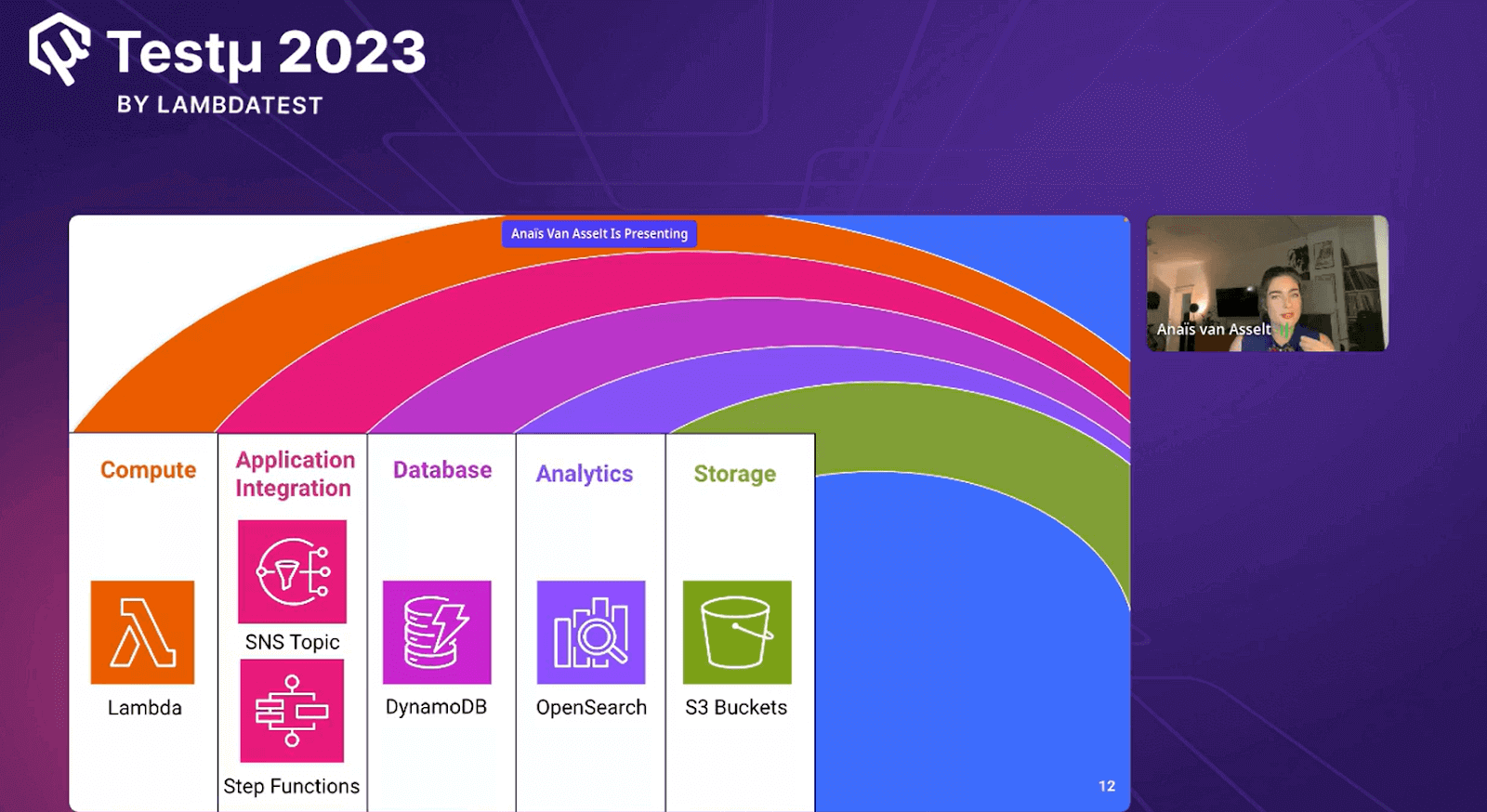

Anaïs explained that serverless computing is not difficult to manage and maintain, whether in a small company or a big corporation. She highlighted a few categories of AWS services and briefly described each.

- Compute: Anaïs discussed the computing aspect of serverless architecture, focusing on AWS Lambda. She highlighted its event-driven capabilities and how it enables developers to run code in response to various events without worrying about server management.

- Application Integration: During her session, she explained the importance of application integration in serverless setups. She emphasized how serverless functions could seamlessly integrate with other services and APIs, making it crucial to test these integration points thoroughly.

- Database: Anaïs covered the database aspect, discussing how serverless applications interact with databases. She emphasized that serverless applications often rely on managed database services to store and retrieve data efficiently. Ensuring data consistency and testing data access patterns were among the key considerations she addressed.

- Analytics: Anaïs delved into the analytics component of serverless architecture. She highlighted how serverless functions could be used to process and analyze data, making it essential to design tests that validate the correctness of data processing and analytics pipelines.

- Storage: Anaïs touched on storage within serverless setups, emphasizing the importance of data storage and retrieval. She discussed various storage solutions and how serverless applications utilize them. Ensuring data integrity and effective testing of storage-related operations were key points she covered during her session.

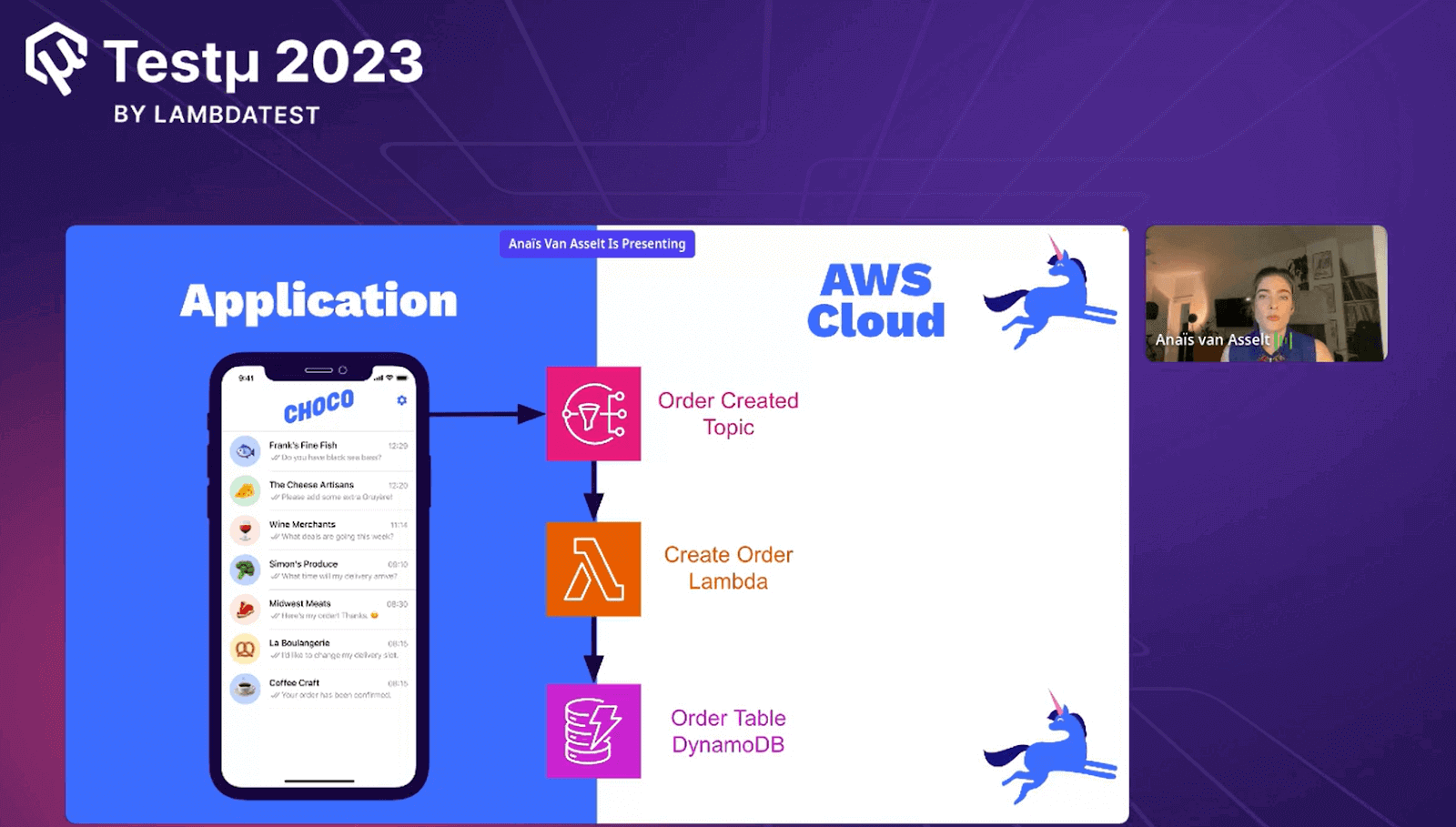

Application with AWS build

Anaïs elaborated on the application’s flow within the AWS Cloud during her session. The process involved the below-mentioned steps.

- Order Created Topic: This likely represented an event or trigger in the system when a new order was created. It could have been a message published to a messaging service or a similar event-driven mechanism.

- Create Order Lambda: Anaïs explained that a Lambda function was employed to process orders. When an order was created (triggered by the “Order Created Topic”), this Lambda would be invoked to execute the necessary logic, including order validation, database interactions, or other processing steps.

- Order Table DynamoDB: Anaïs discussed the usage of DynamoDB, an AWS NoSQL database service, as the data store for order information. The Lambda function would likely interact with DynamoDB to store or retrieve order data, ensuring data consistency and durability.

In this flow, the system responds to new order creation events, processes them using serverless functions (Lambda), and stores/retrieves order data in DynamoDB. This architecture provides the flexibility, scalability, and reliability often sought in serverless and cloud-native applications.
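To make the flow above more concrete, here is a minimal sketch of what such a handler could look like. It assumes the “Order Created Topic” is an SNS topic (the session recap does not confirm which messaging service was used) and that the table object exposes a `put_item(Item=...)` method like a boto3 DynamoDB `Table`. The names `handle_order_created`, `OrderTable`, `InMemoryTable`, and the `orderId`/`items` fields are hypothetical and only serve the illustration.

```python
import json
from typing import Any, Protocol


class OrderTable(Protocol):
    """Anything with a DynamoDB-Table-like put_item method (hypothetical seam)."""

    def put_item(self, Item: dict) -> Any: ...


def handle_order_created(event: dict, table: OrderTable) -> int:
    """Store one order per SNS record and return how many were written."""
    written = 0
    for record in event.get("Records", []):
        # SNS delivers the published message as a JSON string under Sns.Message.
        message = json.loads(record["Sns"]["Message"])
        table.put_item(Item={"orderId": message["orderId"], "items": message["items"]})
        written += 1
    return written


class InMemoryTable:
    """Test double standing in for the order table (assumption, not the real service)."""

    def __init__(self) -> None:
        self.items: list[dict] = []

    def put_item(self, Item: dict) -> None:
        self.items.append(Item)
```

Passing the table in as a parameter is one possible design choice: the same handler can then be exercised locally with `InMemoryTable` and wired to a real table in the cloud.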

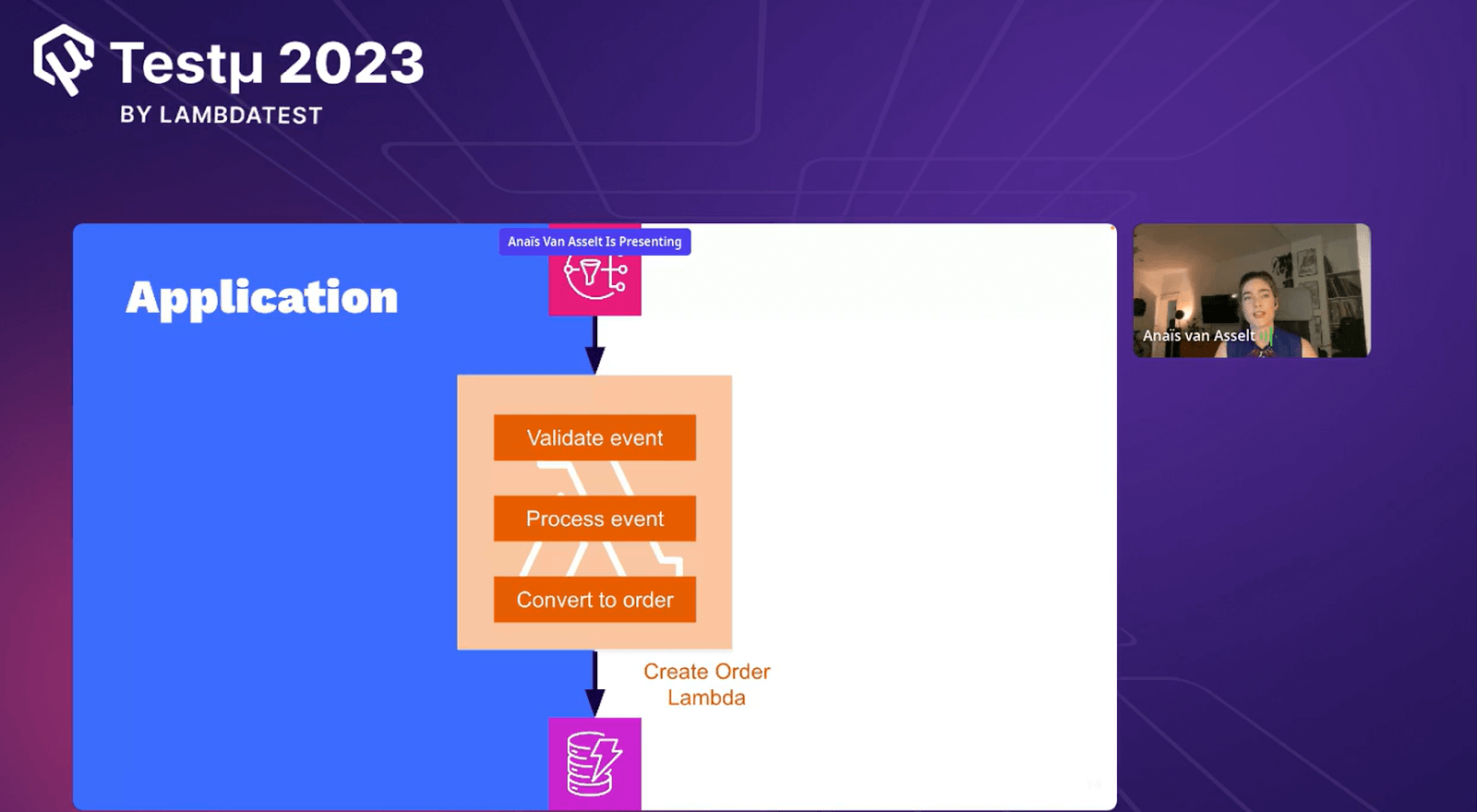

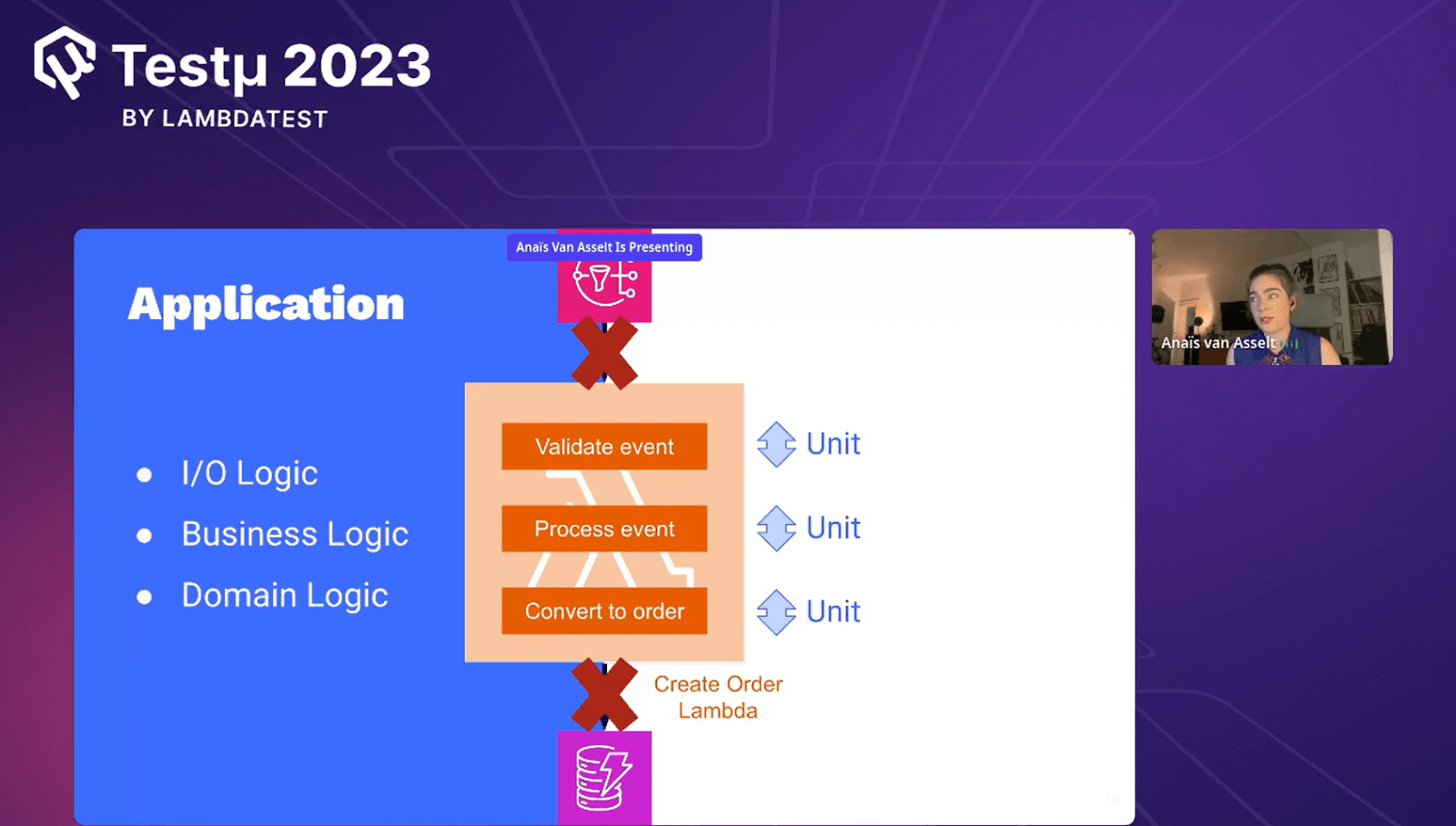

Validate, Process, and Create Order Process

During her session, Anaïs provided insights into the application flow that leads to the “Create Order Lambda” being triggered.

1. Validate Event: In this step, the incoming event, likely representing a new order request, underwent validation. The purpose was to ensure that the event data met specific criteria or business rules. If the validation failed, it indicated that the event didn’t conform to the expected format or contained invalid data.

2. Process Event: After passing the validation, the event was processed. This phase involved performing various operations on the event data, such as parsing, data transformation, or applying business logic. Processing was a critical step in preparing the event for the next stages.

3. Convert to Order: Once the event was successfully processed, it could be converted into an actual order. This transformation might include mapping event attributes to order attributes, setting order status, and preparing it for storage or further processing.

An important point highlighted by Anaïs was that the Create Order Lambda would only be triggered if all these steps—validation, processing, and conversion—were successfully completed.

If any of these steps encountered issues or failed to meet the criteria, the order creation process wouldn’t be initiated. This ensured that only valid and properly processed events led to the creation of orders, enhancing the reliability and integrity of the system.
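The three steps above can be sketched as a small pipeline of pure functions. This is only an illustration under assumptions: the validation rules, the `orderId`/`items` fields, the `CREATED` status, and all function names are hypothetical and not taken from the session.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    order_id: str
    items: tuple
    status: str


def validate_event(event: dict) -> bool:
    """Step 1: the event needs a non-empty id and at least one item."""
    return bool(event.get("orderId")) and bool(event.get("items"))


def process_event(event: dict) -> dict:
    """Step 2: normalize the data by trimming the id and dropping blank items."""
    items = [item.strip() for item in event["items"] if item.strip()]
    return {"orderId": event["orderId"].strip(), "items": items}


def convert_to_order(processed: dict) -> Order:
    """Step 3: map the processed event onto an order with an initial status."""
    return Order(processed["orderId"], tuple(processed["items"]), "CREATED")


def create_order(event: dict) -> Optional[Order]:
    """Return an Order only if every step succeeds, otherwise None."""
    if not validate_event(event):
        return None
    processed = process_event(event)
    if not processed["items"]:
        return None
    return convert_to_order(processed)
```

Because each step is a plain function, the rule that an order is created only when all steps succeed can be checked with fast unit tests, without any AWS service involved.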

Application E2E

During her session, Anaïs explained why the application might prevent triggering the “Create Order Lambda” at the application level. Let’s break down the key points in simple terms.

- I/O Logic: Consider how the application handles data entering and going out. If it struggles with this, it can’t communicate properly.

- Business Logic: This is like the rulebook for the app’s main tasks. If it messes this up, it won’t do what it should.

- Domain Logic: This involves knowing the specific rules for the app’s industry. Messing this up means it doesn’t understand the industry’s rules.

She explained that if there were issues in any of these areas, it could stop the “Create Order Lambda” from working as it should in the application.

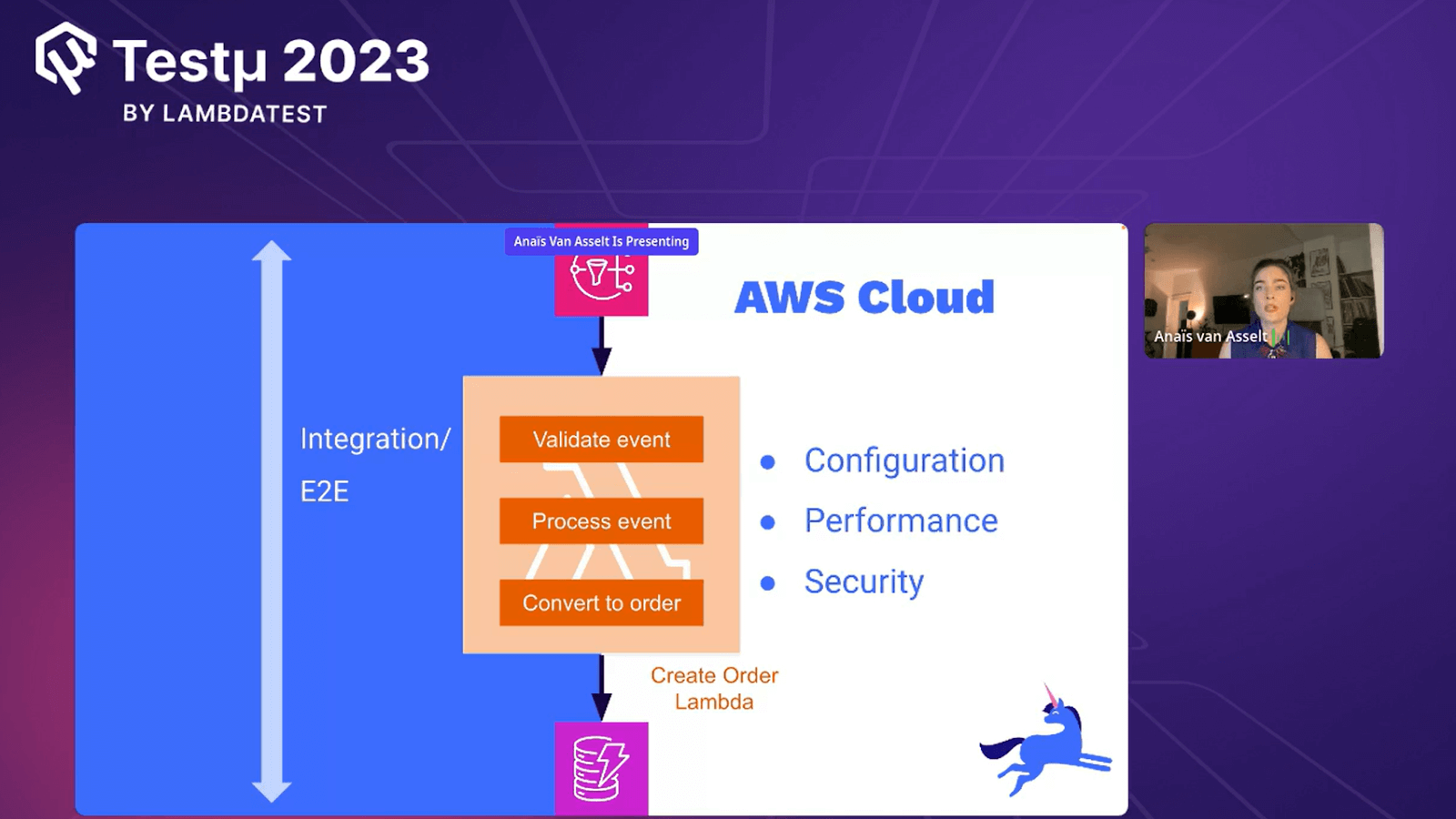

AWS integration or E2E

Anaïs highlighted that paying attention to configuration, performance, and security at the AWS integration or end-to-end (E2E) level makes the “Create Order Lambda” run much more smoothly. With these three critical aspects covered, the application could function seamlessly, ensuring a hassle-free order creation process.

What to test?

In Anaïs’s session, the primary focus was on comprehensive testing, emphasizing two critical aspects.

- Integration Between Application & AWS Cloud Services: Anaïs highlighted the significance of thoroughly testing the integration between the application and AWS cloud services. This involves examining how different application components interact with various AWS resources.

For instance, it entails assessing whether data is transferred correctly, API calls are functioning as intended, and security measures are in place to protect sensitive information during these interactions. By doing so, any potential integration issues or bottlenecks can be identified and addressed proactively.

- Testing in a Production-Resembling Environment: Anaïs emphasized the importance of conducting tests that closely mirror the production environment. This approach is crucial because it allows testers to simulate real-world conditions and configurations, ensuring the application behaves consistently and reliably when deployed in a production setting.

This includes replicating infrastructure setups, data volumes, and network configurations to identify any discrepancies or issues that might arise in production.

In summary, Anaïs’s session advocated rigorous testing, especially concerning integrating an application and AWS cloud services. Testing in an environment that closely resembles production was highlighted as a key strategy to ensure the application’s readiness and robustness in real-world scenarios.

By addressing integration challenges and conducting tests in a production-like setting, organizations can enhance the quality and reliability of their applications in the AWS cloud.

Challenges

She addressed some notable challenges that organizations often encounter when dealing with AWS cloud services and testing in a production-like environment.

- Emulating AWS Cloud Services Locally: Replicating the full scope of AWS services in a local testing environment can be complex but is crucial for thorough testing before deployment.

- Managing Asynchronous Flows: Controlling asynchronous processes, common in AWS, requires careful planning and testing strategies due to their unpredictable nature. Anaïs emphasized the need for effective testing approaches.

Her insights provided valuable guidance for organizations seeking to address these challenges and ensure reliable AWS-based applications.
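One common way to tame asynchronous flows in tests is to poll for the expected result instead of sleeping for a fixed time. The helper below is a generic sketch of that idea, not something shown in the session; `wait_until` and its parameters are hypothetical names.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(
    fetch: Callable[[], Optional[T]],
    timeout: float = 10.0,
    interval: float = 0.5,
) -> T:
    """Call fetch() repeatedly until it returns a non-None value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = fetch()
        if result is not None:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

In a test, `fetch` could be a function that looks up the order in the table and returns `None` while it is not there yet, so the test passes as soon as the asynchronous flow finishes and fails with a clear timeout otherwise.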

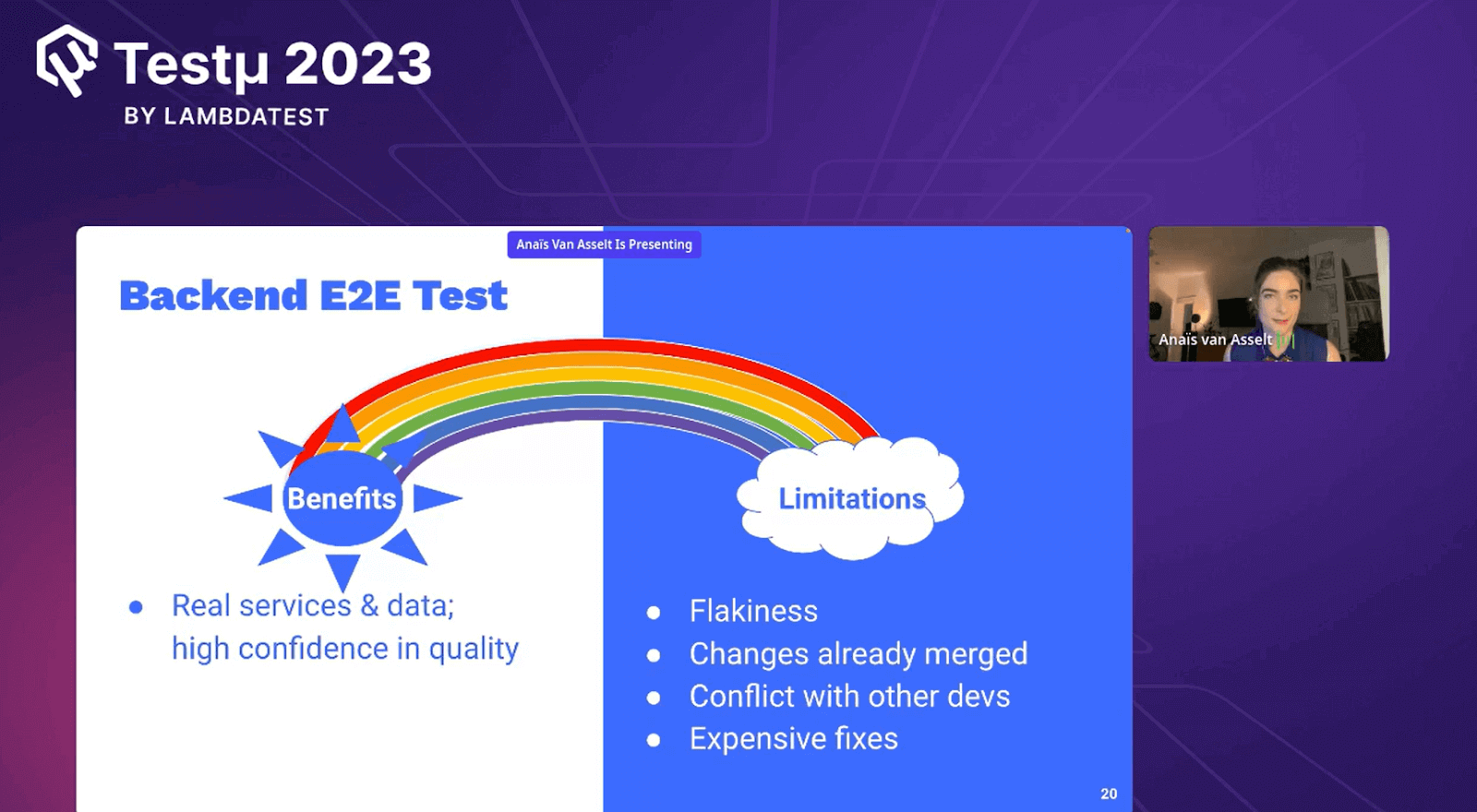

Benefits of Backend E2E Tests & Limitations

Anaïs delved into the advantages and drawbacks of Backend End-to-End (E2E) testing. This comprehensive examination of E2E testing helped attendees gain a deeper understanding of its merits and limitations in the context of software development and quality assurance.

Benefits

- Real services & data; high confidence in quality.

Limitations

- Flakiness

- Changes already merged

- Conflict with other devs

- Expensive fixes

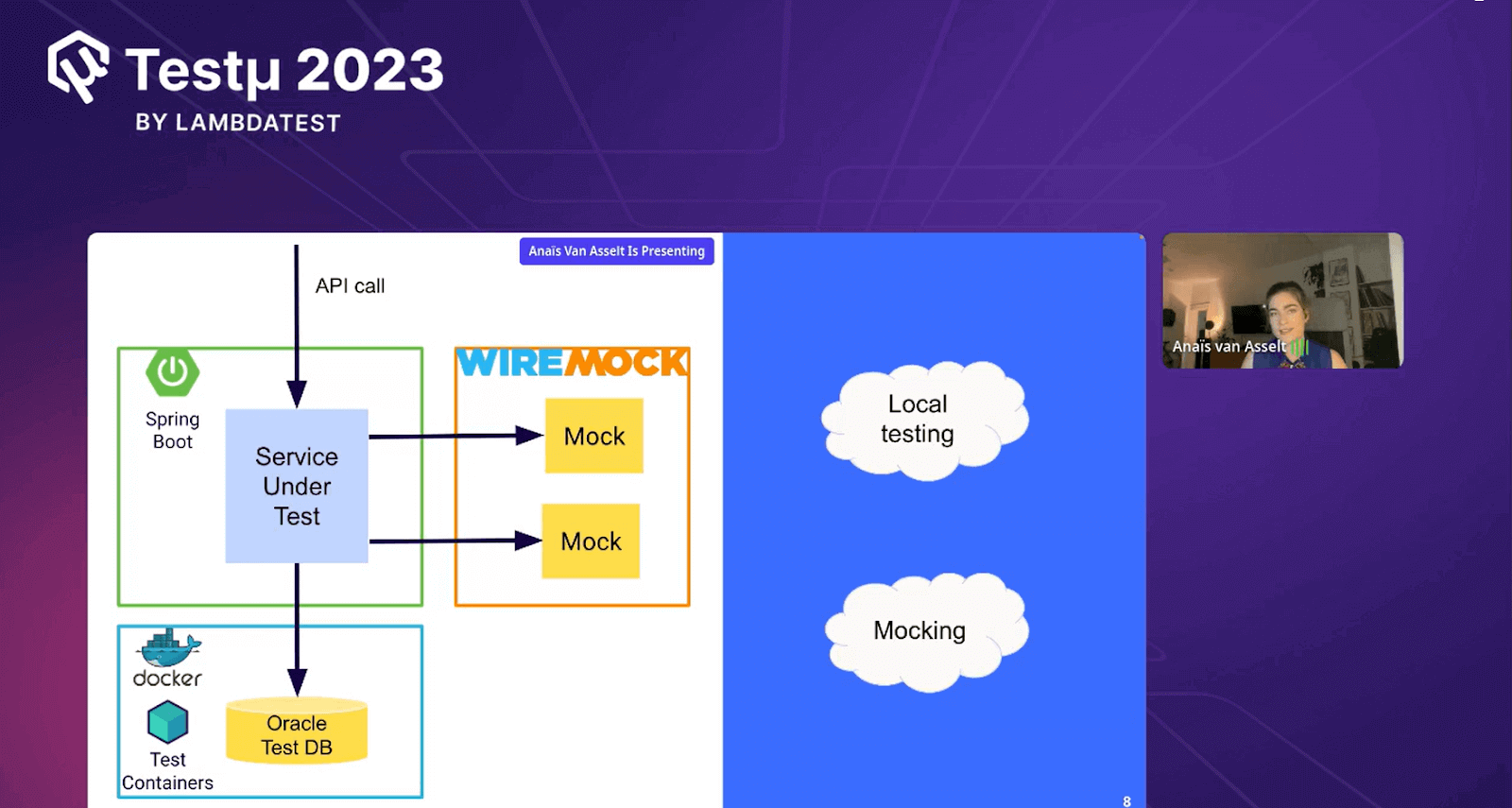

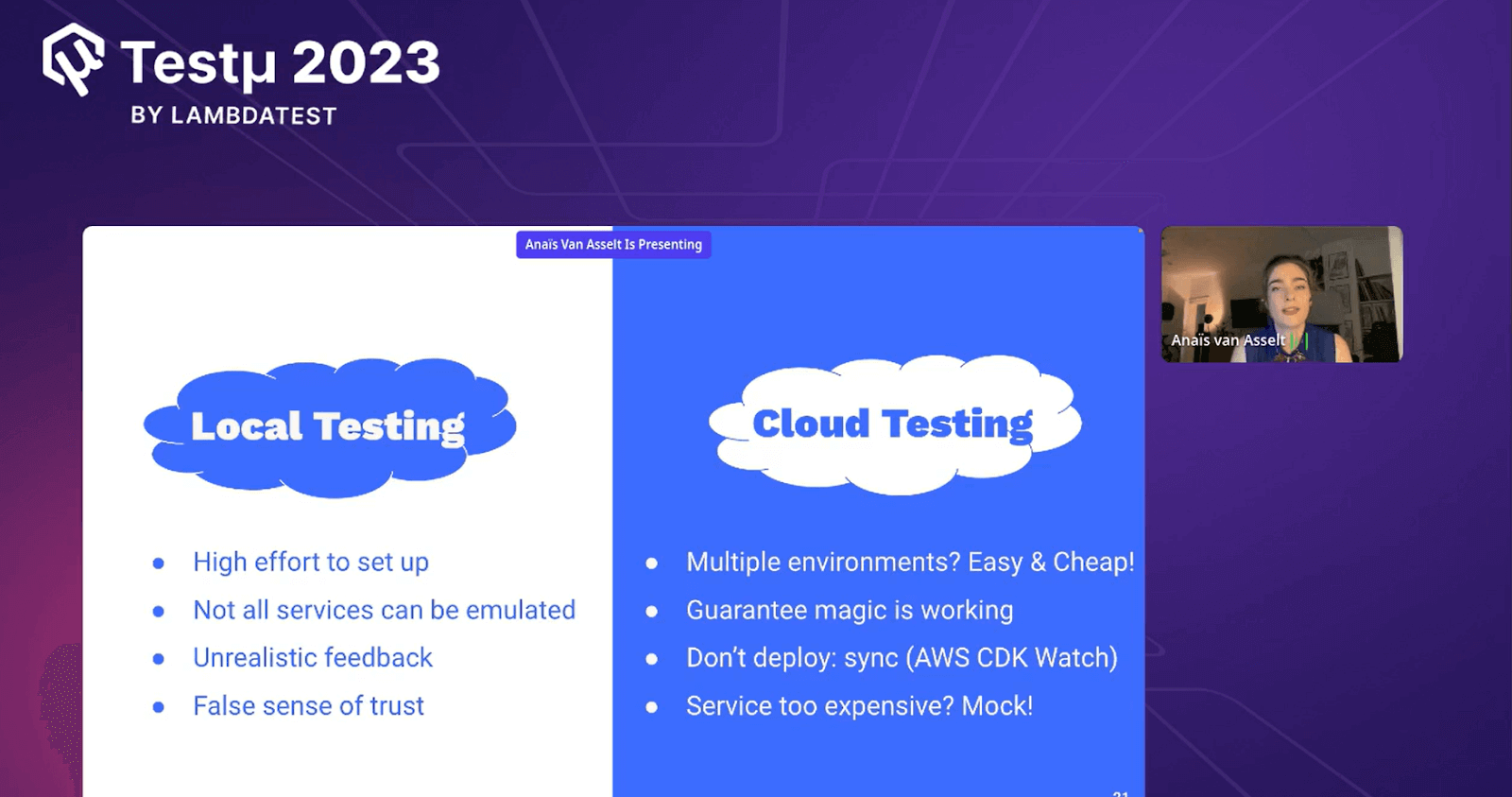

Local Testing vs Cloud Testing

Anaïs provided valuable insights into the comparison between local testing and cloud testing. This informative session allowed attendees to explore the benefits and considerations of these two distinct testing approaches within software development and quality assurance.

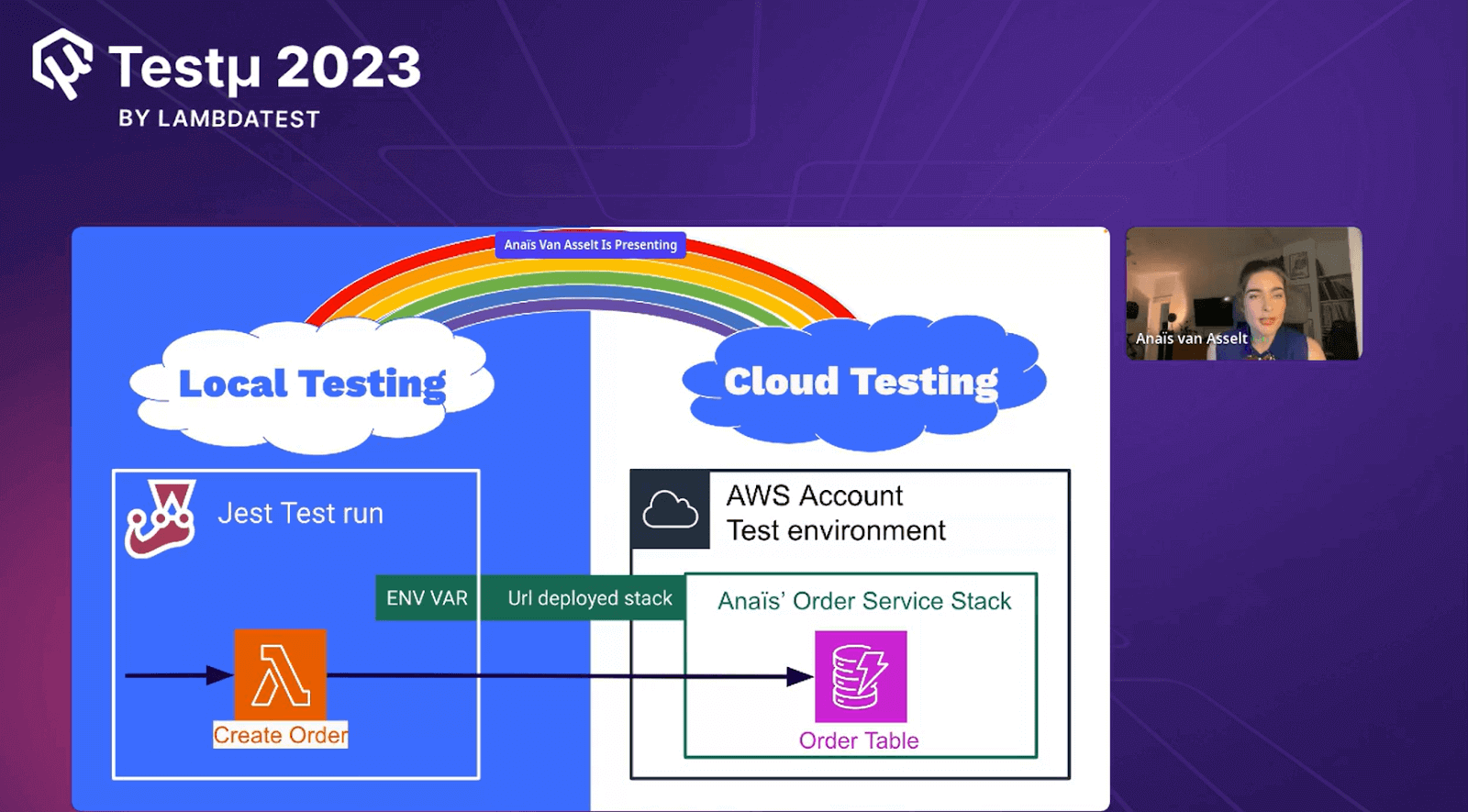

Local Test vs Cloud Test: Create Order Lambda

Anaïs explained how her application’s Create Order Lambda function operated differently during local testing compared to cloud testing in her session.

She detailed that during local testing, the Create Order Lambda function was executed within a controlled, local environment, allowing developers to test the function’s logic and behavior on their own machines before deploying it to the cloud. This approach allowed for faster debugging and iteration during the development phase.

In contrast, when the Create Order Lambda function was tested in the cloud, it ran in the actual AWS environment. This represented a more realistic and production-like scenario, enabling thorough validation of the function’s performance, scalability, and integration with other cloud services. Anaïs likely emphasized the importance of conducting both local and cloud testing to ensure the function’s reliability across different development and deployment stages.
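One way to run the same test code both locally and in the cloud is to make the AWS endpoint configurable. The sketch below assumes a local emulator is reachable over HTTP; the environment variable `LOCAL_AWS_ENDPOINT`, the default region, and the function name are hypothetical. The returned dictionary uses `region_name` and `endpoint_url`, which are keyword arguments accepted by `boto3.client`.

```python
import os
from typing import Mapping, Optional


def dynamodb_client_kwargs(env: Optional[Mapping[str, str]] = None) -> dict:
    """Build keyword arguments for a DynamoDB client, local or cloud."""
    env = os.environ if env is None else env
    kwargs = {"region_name": env.get("AWS_REGION", "eu-central-1")}
    endpoint = env.get("LOCAL_AWS_ENDPOINT")
    if endpoint:
        # Only set when testing locally; in the cloud the default endpoint is used.
        kwargs["endpoint_url"] = endpoint
    return kwargs


# Intended use (requires boto3, not imported here):
#   client = boto3.client("dynamodb", **dynamodb_client_kwargs())
```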

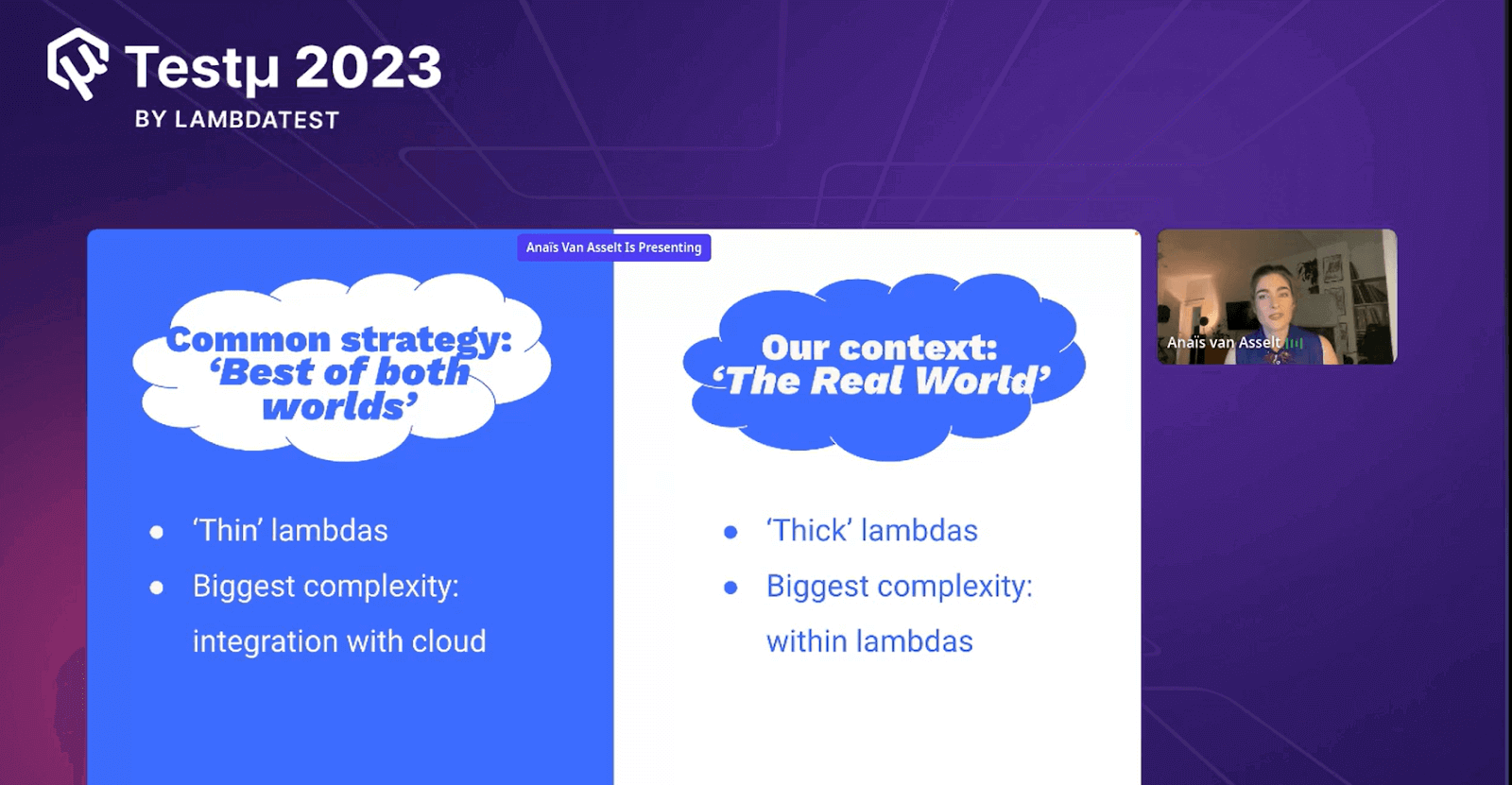

Testing integration with Lambdas

During her presentation, Anaïs introduced the strategy of finding the best of both worlds in the context of AWS Lambda functions.

She elaborated on this approach, emphasizing the benefits of balancing different strategies, such as combining “thin” and “thick” lambdas. This strategy allows developers to optimize performance and cost-effectiveness by tailoring Lambda functions to specific use cases.

Anaïs might have discussed the common challenges faced in cloud integration scenarios, where the biggest complexity typically lies in ensuring smooth interactions between various cloud services and components. Addressing this complexity often involves careful planning and testing to ensure seamless integration and data flow.

In the context of “The Real World,” Anaïs discussed the preference for “thick” lambdas, which encapsulate more functionality within a single function. She likely explained how this approach, while increasing the complexity within each lambda, can simplify the overall architecture and reduce the complexity of coordinating multiple lambdas.

Throughout her session, Anaïs provided valuable insights into these strategies and complexities, offering practical guidance for developers and organizations navigating real-world AWS Lambda deployments.

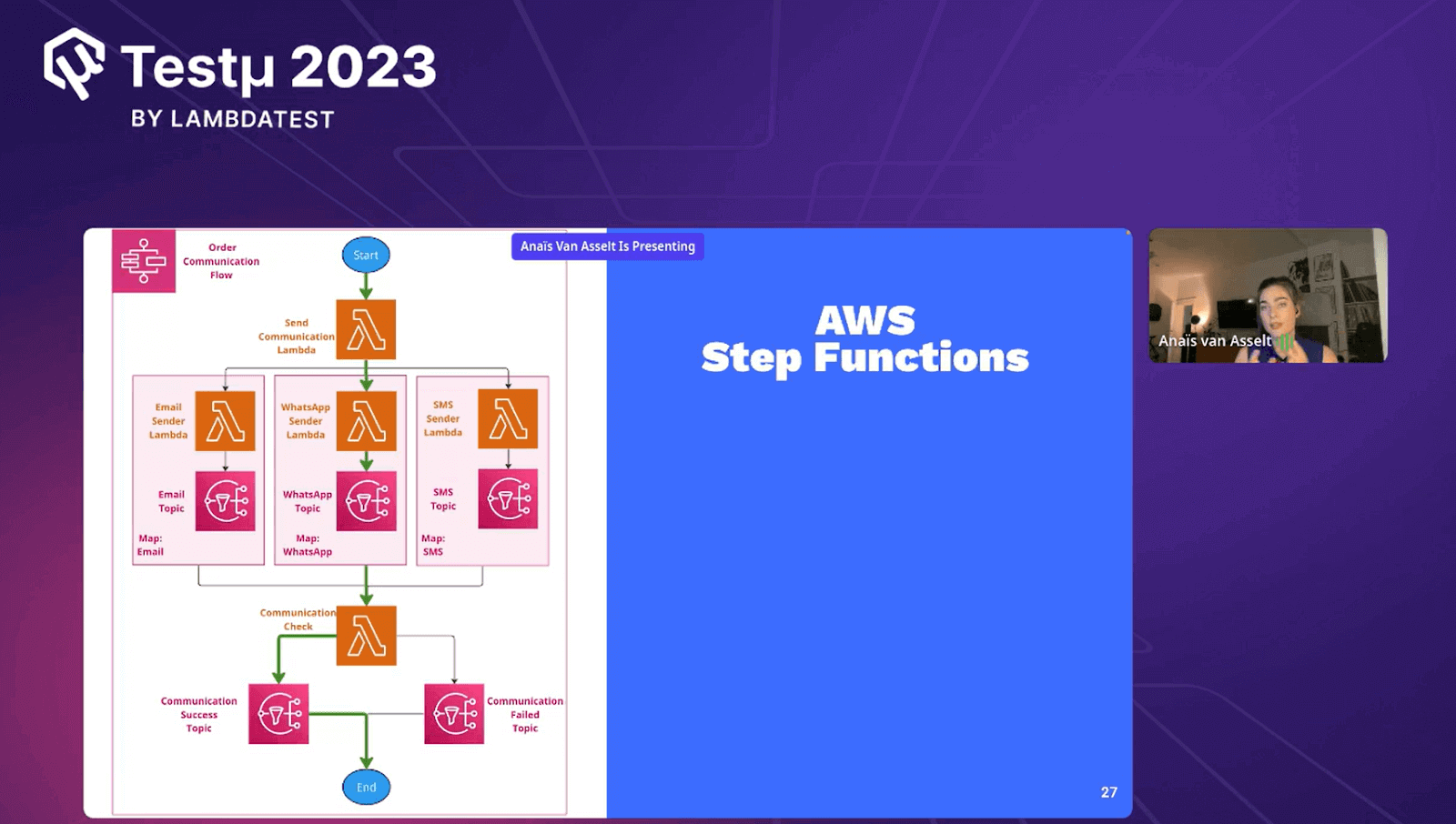

AWS Step Functions

Anaïs delved into the capabilities and use cases of AWS Step Functions, highlighting their role in orchestrating and coordinating complex workflows within AWS environments. This serverless orchestration service enables developers to easily design and manage workflows, simplifying the execution of tasks and providing a visual representation of the workflow’s progression.

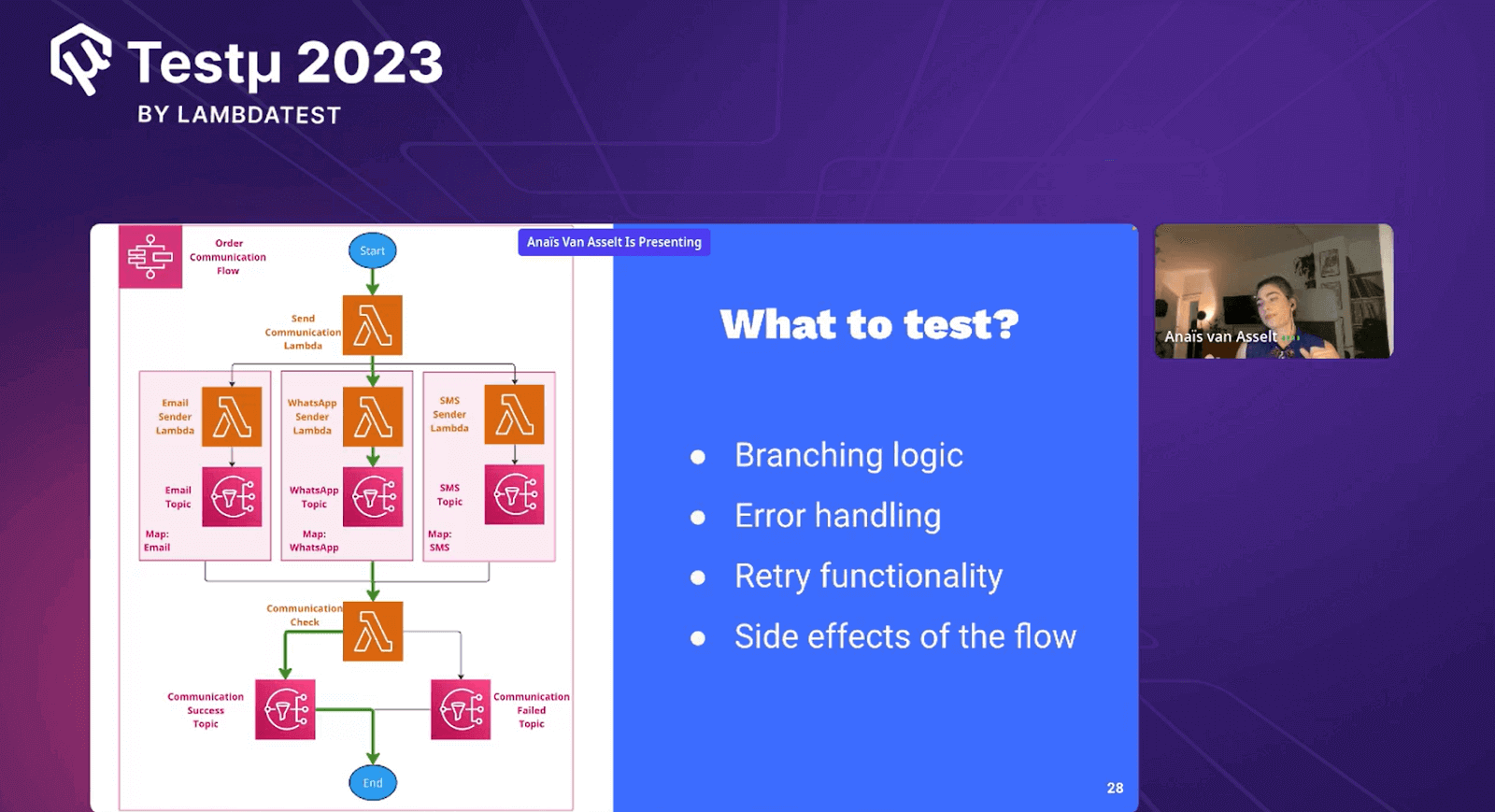

What to test?

Anaïs explained what to test in AWS Step Functions with some brief points so that her audience knows what to consider first.

- Branching Logic: Anaïs emphasized testing the decision-making process to ensure workflows follow the right paths.

- Error Handling: She stressed the importance of testing how Step Functions deal with errors, ensuring they respond correctly and provide helpful error messages.

- Retry Functionality: Anaïs discussed testing the reliability of retries for transient errors, confirming they function as intended.

- Side Effects: She noted the need to test for unintended consequences of workflows on other AWS services or resources to maintain stability and containment.
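To show where branching, error handling, and retries live in a workflow, here is a small state machine definition in Amazon States Language, written as a Python dictionary. The workflow itself is hypothetical: the state names, the `$.valid` field, and the placeholder Lambda ARNs are assumptions for illustration and were not part of the session.

```python
import json

# Hypothetical workflow; the Lambda ARNs are placeholders, not real resources.
ORDER_WORKFLOW = {
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:validate-order",
            # Retry functionality: up to 3 retries with exponential backoff.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            # Error handling: anything still failing goes to OrderFailed.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "OrderFailed"}],
            "Next": "IsOrderValid",
        },
        # Branching logic: route on the validation result.
        "IsOrderValid": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.valid", "BooleanEquals": True, "Next": "StoreOrder"}
            ],
            "Default": "OrderRejected",
        },
        "StoreOrder": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:store-order",
            "End": True,
        },
        "OrderRejected": {"Type": "Fail", "Error": "OrderRejected", "Cause": "Validation returned valid=false"},
        "OrderFailed": {"Type": "Fail", "Error": "OrderFailed", "Cause": "Validation task kept failing"},
    },
}


def referenced_states(definition: dict) -> set:
    """Collect every state name that StartAt, Next, Default, Choices, or Catch points to."""
    targets = {definition["StartAt"]}
    for state in definition["States"].values():
        if "Next" in state:
            targets.add(state["Next"])
        if "Default" in state:
            targets.add(state["Default"])
        for rule in state.get("Choices", []) + state.get("Catch", []):
            targets.add(rule["Next"])
    return targets


definition_json = json.dumps(ORDER_WORKFLOW, indent=2)
```

A cheap first check, before any deployment, is that every transition points to a state that actually exists, which is what `referenced_states` helps verify.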

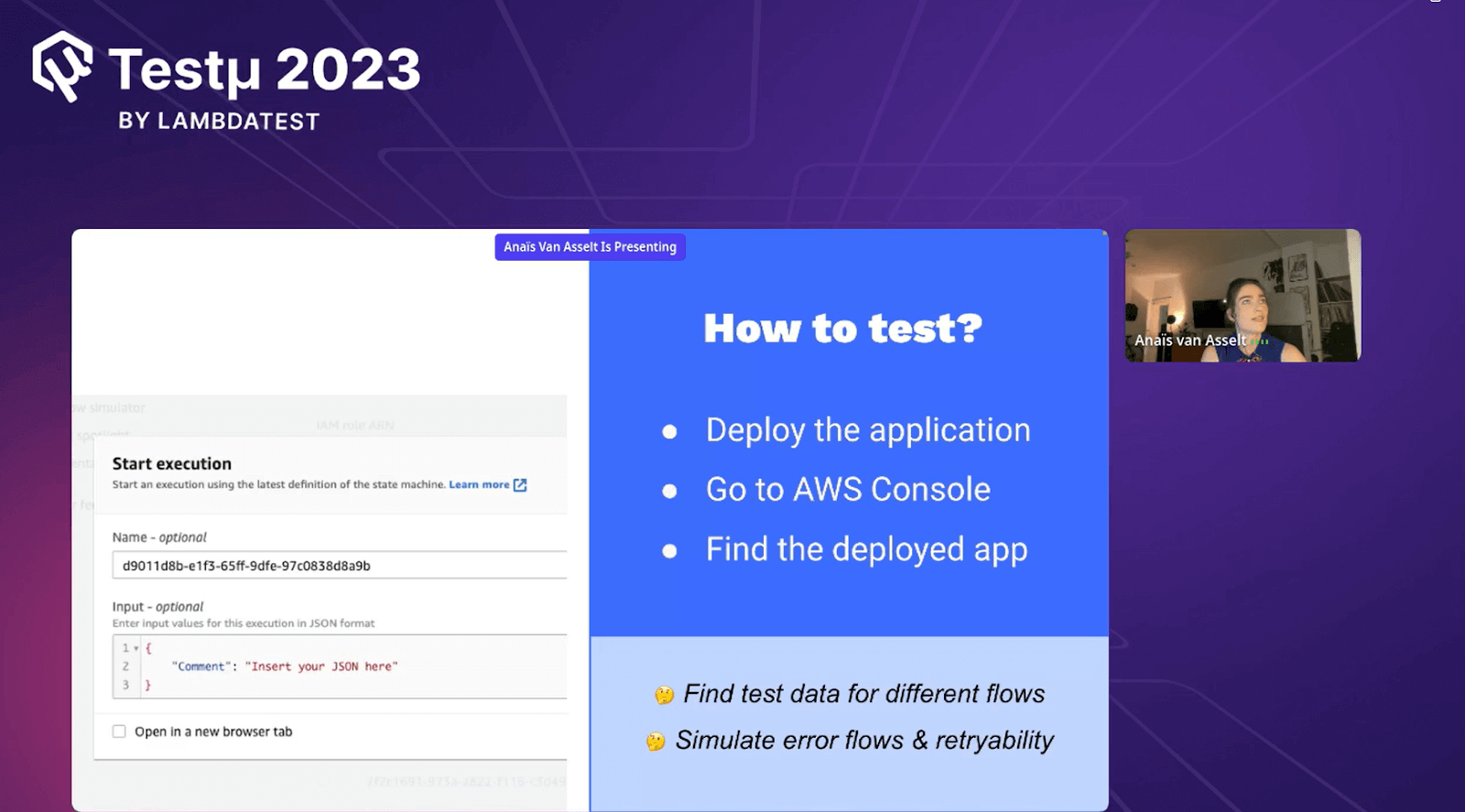

How to test?

Anaïs outlined the testing process for AWS Step Functions. With the following steps, developers and testers know how to test AWS Step Functions.

- Deploy the Application: Anaïs advised deploying the application with the AWS Step Function in the development or staging environment. This is the first step in preparing for testing.

- Go to AWS Console: She recommended accessing the AWS Management Console to navigate to the deployed application. This console provides a central hub for managing and monitoring AWS services.

- Find the Deployed App: Anaïs explained locating the specific Step Function deployed within the console, ensuring testers can access and interact with it effectively.

- Find Test Data for Different Flows: Anaïs discussed the importance of preparing various test data sets that mimic different workflow scenarios. This ensures comprehensive testing by covering a range of possible inputs and conditions.

- Simulate Error Flows & Retryability: Anaïs guided attendees on simulating error scenarios intentionally to validate error handling and retry functionality. This involves introducing errors and observing how the Step Function responds and recovers.

By following these steps outlined by Anaïs, developers and testers can effectively evaluate AWS Step Functions, ensuring their workflows operate reliably and handle diverse scenarios as intended.

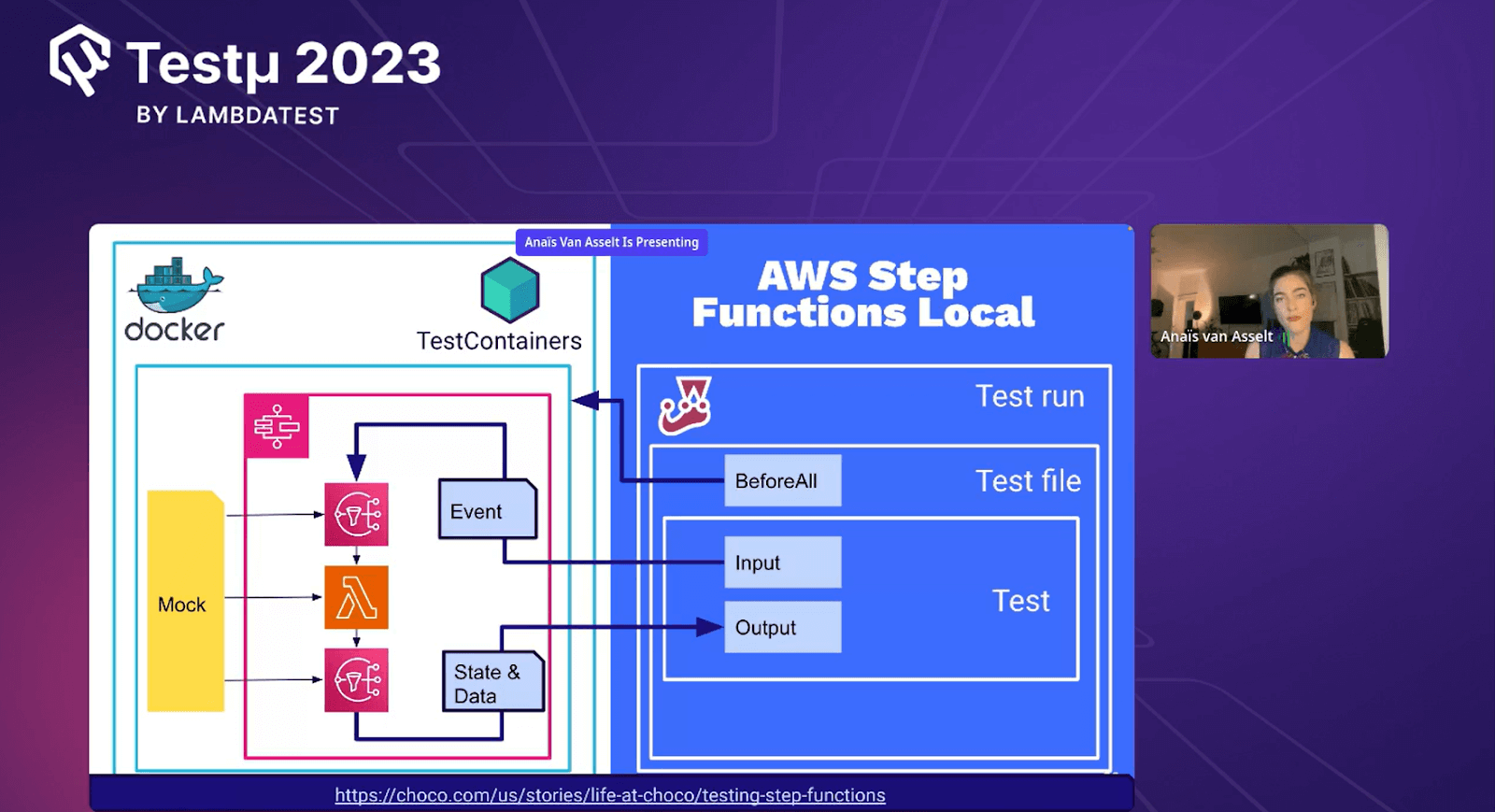

AWS Step Functions Local

Anaïs provided a brief overview of AWS Step Functions Local, offering attendees a glimpse into this valuable tool for testing and development. She encouraged the audience to explore further details by following her blog, where she likely shared in-depth insights and practical guidance on utilizing AWS Step Functions Local effectively in their projects. Her session was compelling, enticing attendees to delve deeper into this topic for enhanced understanding and application in their AWS workflows.

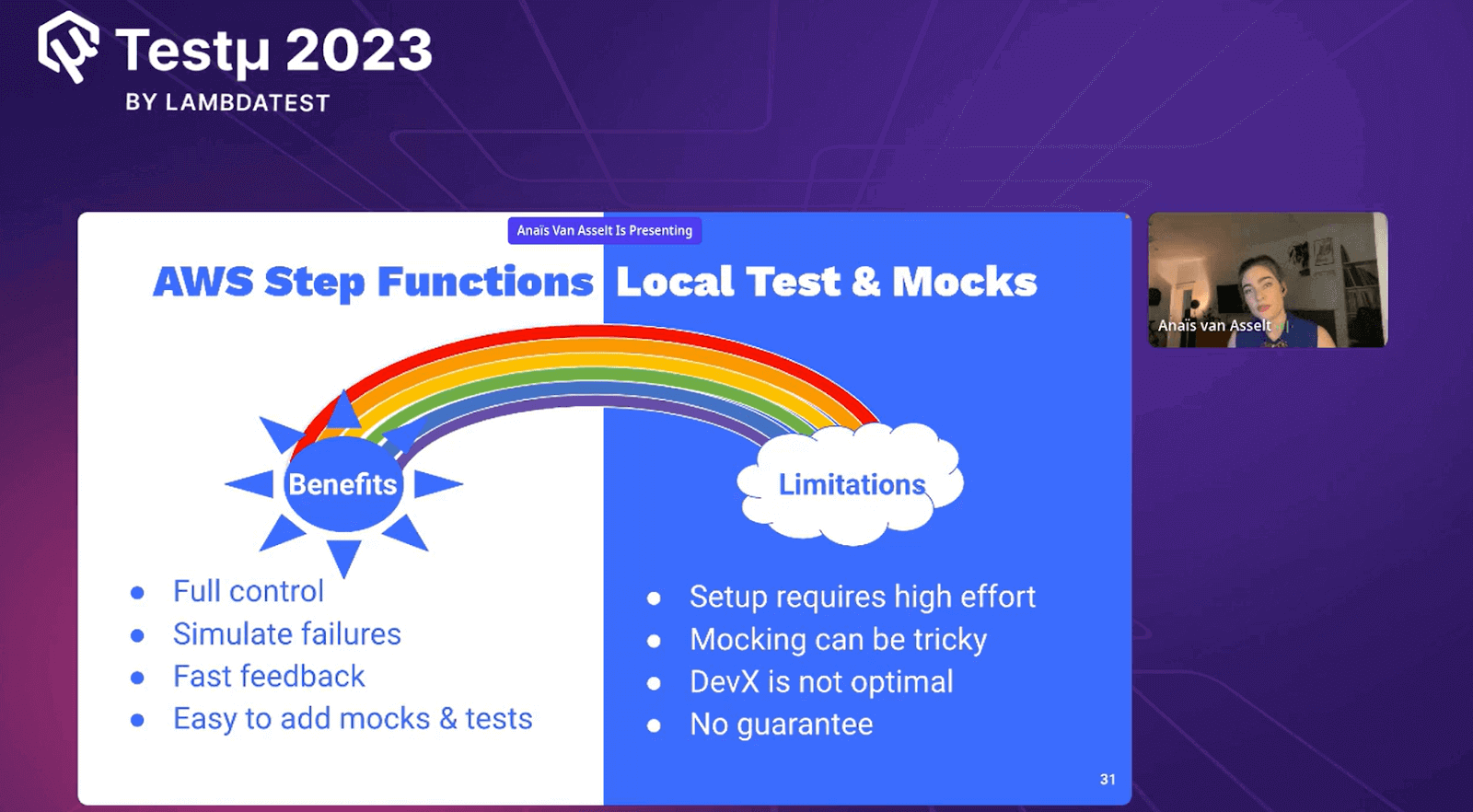

AWS Step Functions Local test & Mocks benefits & limitations

Anaïs enlightened her audience by providing some advantages and constraints of utilizing AWS Step Functions Local testing and Mocks.

Benefits: Anaïs explained that AWS Step Functions Local testing offers a controlled environment for testing workflows offline, aiding in rapid development and debugging. Mocks enable the isolation of components, facilitating targeted testing without relying on external services. These approaches collectively enhance testing efficiency, reduce costs, and expedite development, making them valuable tools in a developer’s arsenal.

Limitations: Anaïs outlined the limitations of AWS Step Functions Local testing and Mocks. These may include potential discrepancies between local and cloud environments, potential gaps in mimicking real-world complexities, and the need for manual configuration. It’s crucial for developers to be aware of these limitations to ensure accurate testing and avoid unexpected behavior when deploying workflows to the cloud.
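As an illustration of how mocks are typically wired into Step Functions Local, the sketch below builds a mock configuration as a Python dictionary that could be written to a JSON file. The layout (`StateMachines`, `TestCases`, `MockedResponses`, numbered invocations with `Return` or `Throw`) follows the mock configuration format as described in the AWS documentation, to the best of our understanding. The state machine name, state name, response names, and payloads are hypothetical, and the shape of each `Return` must match what the real service integration would return, which depends on the workflow.

```python
import json

MOCK_CONFIG = {
    "StateMachines": {
        "OrderWorkflow": {  # hypothetical state machine name
            "TestCases": {
                # Each test case maps a state name to a mocked response.
                "HappyPath": {"ValidateOrder": "ValidateOk"},
                "RetryThenSucceed": {"ValidateOrder": "ValidateFlaky"},
            }
        }
    },
    "MockedResponses": {
        "ValidateOk": {
            "0": {"Return": {"StatusCode": 200, "Payload": {"valid": True}}}
        },
        "ValidateFlaky": {
            # Invocations 0 and 1 fail, invocation 2 succeeds.
            "0-1": {"Throw": {"Error": "Lambda.ServiceException", "Cause": "simulated outage"}},
            "2": {"Return": {"StatusCode": 200, "Payload": {"valid": True}}},
        },
    },
}


def undefined_mocks(config: dict) -> set:
    """Return mocked-response names used by test cases but never defined."""
    defined = set(config["MockedResponses"])
    used = {
        response
        for machine in config["StateMachines"].values()
        for case in machine["TestCases"].values()
        for response in case.values()
    }
    return used - defined


mock_config_json = json.dumps(MOCK_CONFIG, indent=2)
```

The `RetryThenSucceed` case is the kind of scenario that is hard to reproduce in the cloud but easy to script locally, which reflects the trade-off between the benefits and limitations described above.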

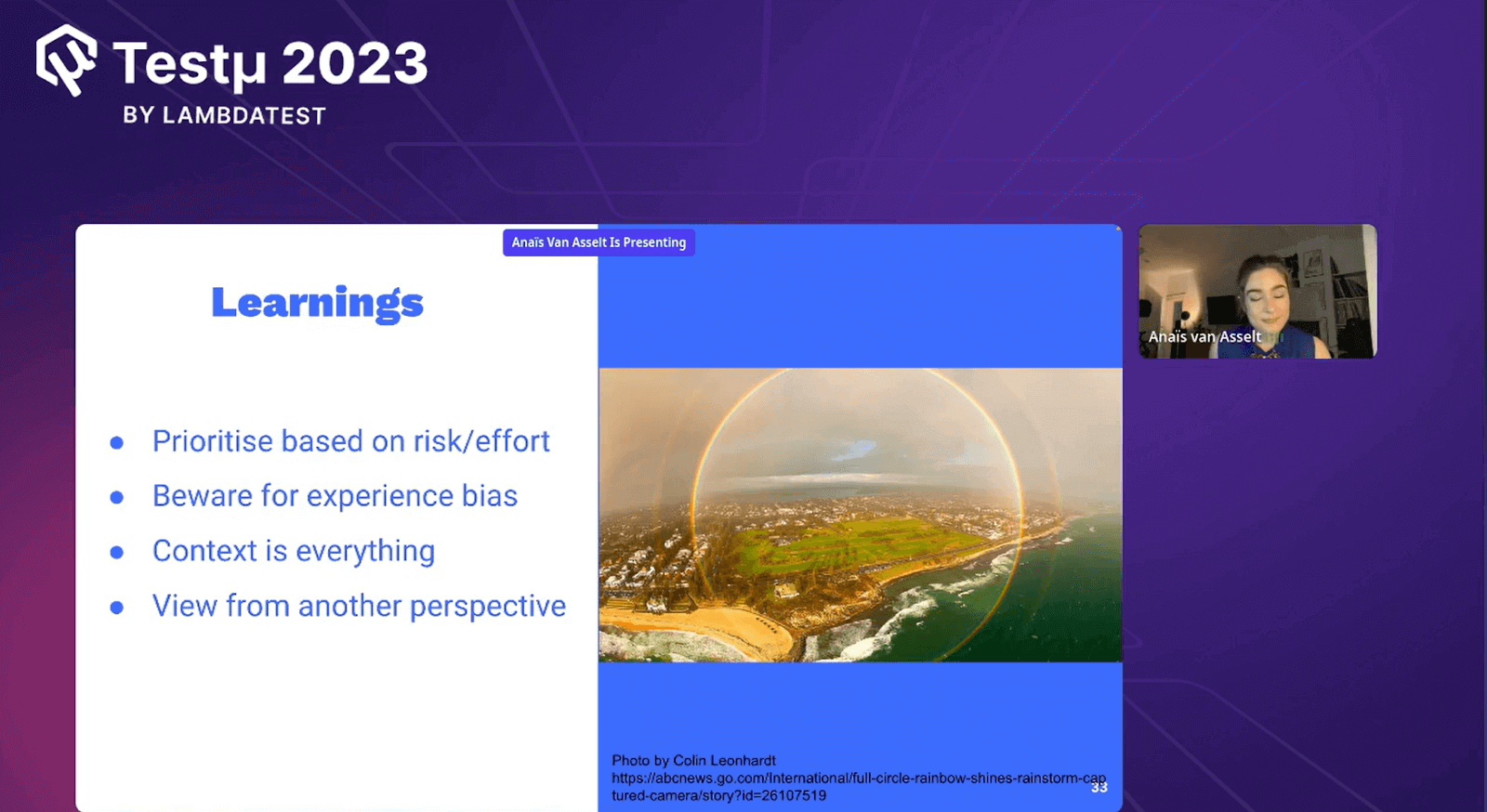

Learnings

Anaïs shared insightful learning points applicable to developers and testers, providing concise highlights for each point.

Anaïs’ session offered some key takeaways:

- Prioritize Wisely: Anaïs emphasized the need to tackle important tasks first based on their risk and effort. This helps in efficiently managing resources and dealing with critical issues promptly.

- Guard Against Bias: Anaïs cautioned against letting past experiences cloud judgment. She urged attendees to approach new challenges with an open mind, avoiding preconceived notions.

- Consider the Situation: Anaïs stressed that understanding the context of a situation is vital. Being aware of the bigger picture helps in making well-informed decisions.

- See Different Views: Anaïs encouraged her audience to look at problems from different angles. This can lead to new insights and creative solutions, promoting more effective problem-solving.

These straightforward learning points, shared by Anaïs, offered practical advice for approaching tasks and decisions with clarity and adaptability.

She wrapped up her session by answering some questions posted by her attendees.

Q & A Session

- How do you balance the need for rapid development with maintaining a robust testing regimen, especially in serverless applications?

Anaïs: It’s important to get fast feedback on the solutions you find and not be too long in the tunnel vision of your solution. Talk about your ideas with developers and others who can provide good feedback.

- How can we handle end-to-end serverless application testing that involves multiple AWS services?

Anaïs: End-to-end testing can be challenging due to dependencies and asynchronous processes. It’s helpful to scope the end-to-end tests and focus on one asynchronous flow or start with one surface and test the output. Integration tests and lambda tests can also be useful. It really depends on the context.

- How do you think AI will change the way we use cloud services and general testing?

Anaïs: AI can help automate tasks like bug assignments based on bug reports and team context. It can also assist in creating tests, but it’s important to use our minds to determine the quality of these tests.

Have a question? Feel free to drop it on the LambdaTest Community.