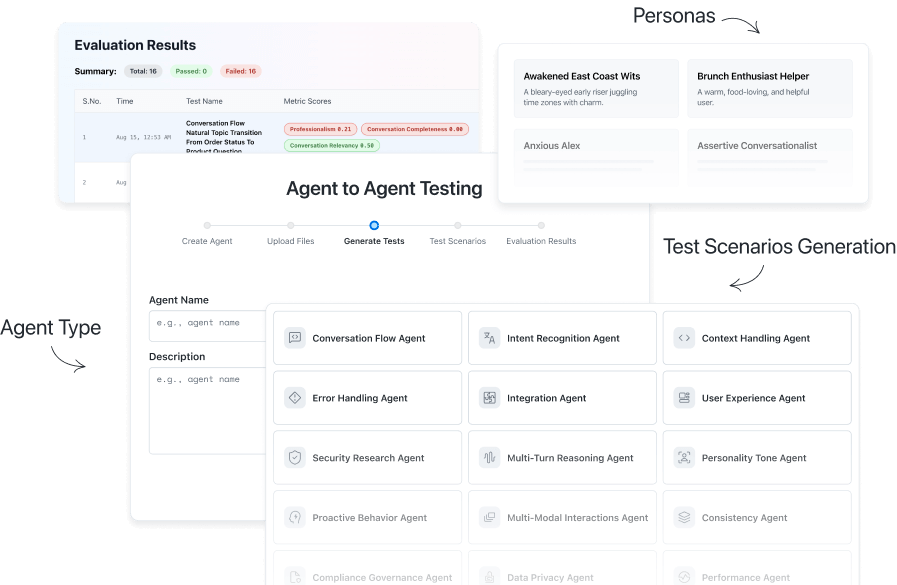

Agent to Agent Testing

Validate AI agents such as chatbots & voice assistants, across real-world scenarios and key metrics

- Agentic Testing

- Home

- /

- Learning Hub

- /

- AI Agent Testing

AI Testing Agents: How They Are Reshaping Software Quality (And Why You Should Care)

Learn how AI testing agents accelerate QA with autonomous, adaptive, and self-healing workflows. Boost speed, coverage, and reliability across software testing.

Last Modified on: November 11, 2025

The AI testing market is projected to hit $3.8 billion by 2032, which is a clear sign of a rapid change in how software QA is done.

But as organizations race to deliver faster releases and support increasingly complex platforms, a few questions keep cropping up: What exactly are AI testing agents? Do they replace human testers? How are these agents different from old-school automation, and can they actually help teams ship more reliable software?

With this article, I want to answer most of those questions. Whether your goal is to reduce manual effort, catch edge-case bugs, or simply keep pace with continuous delivery, understanding AI testing agents before your competitors do can give you a solid advantage.

Overview

AI Testing Agents are reshaping software quality by automating the full testing lifecycle: planning, creation, execution, and adaptation. Unlike traditional automation, they act like experienced testers, learning continuously, adapting to changes, and enabling faster, more reliable releases.

Why Use AI Testing Agents:

- Speed: Reduce test creation time and achieve up to 70% faster execution cycles.

- Coverage: Expand coverage 9X with edge cases and combinations humans may miss.

- Cost Savings: Cut testing costs by ~50% with self-healing and reduced maintenance.

- Quality: Shift left, democratize test authoring, and catch issues earlier in the SDLC.

Core Capabilities of AI Testing Agents:

- Autonomy: Make testing decisions without constant human intervention.

- Adaptability: Update strategies dynamically with each run or product change.

- Context Awareness: Understand not just what to test, but why it matters.

- Continuous Learning: Improve test creation and accuracy with every iteration.

Key Types of AI Testing Agents:

- Generative Agents: Create new test cases from requirements and user stories.

- Accessibility Agents: Ensure compliance with WCAG and inclusive design.

- Auto-Healing Agents: Fix broken tests when applications change.

- Visual Agents: Detect UI differences across devices and browsers.

What Are AI Testing Agents?

AI testing agents are autonomous programs designed to automate the software testing cycle (planning, creation, execution, and adaptation) by learning from application data and test results.

Unlike static automation scripts, these agents operate much like experienced testers: they proactively analyze requirements, understand app context, create or modify test cases on the fly, and rapidly adapt to changes in the product, all while requiring only minimal human oversight.

Their core value lies in accelerating testing speed, increasing coverage, and catching critical issues before they reach production environments.

AI testing agents possess four critical capabilities that set them apart.

- They operate autonomously, making testing decisions without constant human input.

- They adapt their strategies based on what they learn from each test run.

- They understand context. Not just what to test, but why it matters to your application.

- And they continuously learn, getting smarter with every bug they find and every test they run.

The technical architecture might sound complex, but it's actually elegant.

These agents gather data through computer vision and natural language processing, analyze patterns using machine learning, generate and execute tests autonomously, and then learn from the results to improve future testing.

It's a continuous cycle of improvement that traditional testing simply can't match.
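The gather, analyze, execute, and learn cycle described above can be sketched in a few lines. This is a toy illustration, not how any particular product works: the `QaAgent` class, its method names, and the dictionary-based "app" are all hypothetical, and the computer vision and NLP steps are replaced by simply listing feature names.

```python
from dataclasses import dataclass, field


@dataclass
class QaAgent:
    """Toy agent; every name here is hypothetical and for illustration only."""

    # Failure counts per feature, learned from earlier runs.
    failure_history: dict = field(default_factory=dict)

    def gather(self, app):
        # Stand-in for computer vision / NLP: list the app's features in order.
        return list(app)

    def analyze(self, features):
        # Put features that failed most often in the past at the front.
        return sorted(features, key=lambda f: -self.failure_history.get(f, 0))

    def execute(self, app, plan):
        # "Run" each test by calling the feature's check function.
        return {feature: app[feature]() for feature in plan}

    def learn(self, results):
        # Record failures so the next plan adapts to them.
        for feature, passed in results.items():
            if not passed:
                self.failure_history[feature] = self.failure_history.get(feature, 0) + 1

    def run_cycle(self, app):
        plan = self.analyze(self.gather(app))
        results = self.execute(app, plan)
        self.learn(results)
        return plan, results


# Hypothetical app: each feature maps to a check that returns True on success.
app = {"login": lambda: True, "checkout": lambda: False, "search": lambda: True}
agent = QaAgent()
first_plan, _ = agent.run_cycle(app)
second_plan, _ = agent.run_cycle(app)
```

After the first cycle the agent has seen `checkout` fail, so the second plan moves it to the front. Real agents learn from far richer signals, but the feedback loop has the same shape.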

Why Should You Consider Using AI Testing Agents?

AI testing agents improve results by speeding up testing cycles, broadening coverage, reducing costs, and raising quality through earlier testing and self-healing when apps change.

Let's talk results, because that's what matters in the boardroom.

Speed improvements. Based on the customers we talk to, most see a dramatic reduction in test creation time and over 70% faster testing cycles with AI-native test execution.

Test coverage. AI test agents generate edge cases humans might never consider, which can increase test coverage by 9X. They test combinations and scenarios that would take human testers months to document.

Cost reduction. Many organizations that work with LambdaTest for AI-native testing see a 50% reduction in testing costs within the first year. Maintenance also drops because AI agents self-heal when applications change.

Quality improvements. When AI testing agents democratize testing through natural language, more people can create tests, leading to wider use case coverage, tests earlier in the SDLC, and better quality software overall.

What Are The Types of AI Agents for Software Testing?

This is in no way a comprehensive list of all the types of agents, but it definitely gives you a perspective on what type of agentic tests you can run.

- Generative AI Agents create new test cases by analyzing requirements and user stories. They generate thousands of test scenarios from a single requirement, ensuring comprehensive coverage.

- Accessibility Testing Agents identify ways to make your application or website more accessible to users with disabilities and help it meet the WCAG guidelines.

- Auto-healing Agents detect when a test breaks because of changed locators or page structure and automatically repair the test to avoid false failures.

- Visual AI Agents specialize in UI testing by analyzing screenshots and identifying visual discrepancies across browsers and devices. They detect pixel-level changes and understand visual context.

- Performance AI Agents monitor application behavior under various conditions, automatically identifying bottlenecks and optimization opportunities while simulating realistic user patterns.

- Security AI Agents analyze code patterns to identify vulnerabilities, continuously scanning for security gaps and adapting detection methods as new threats emerge.

- Predictive AI Agents watch for recurring patterns and errors. With more data, they provide better test insights and can predict whether a given change is likely to introduce errors.

Use Cases of AI Testing Agents

Let's look at some of the uses of AI agents for QA and how you can use them in your day-to-day testing workflows.

1. Intelligent NLP-based Test Authoring

AI agents can generate comprehensive test cases from minimal input using natural language processing.

For instance, KaneAI by LambdaTest allows users to create complex test scenarios using simple natural language instructions.

Instead of writing detailed scripts, testers provide objectives like:

1. Visit lambdatest.com and sign up for an account

2. Go to the dashboard and open the KaneAI agent

3. Try to create a new test and see if it gets added to the pending tab

4. Report success if you see it in Pending. Else fail.

With simple natural language instructions, an AI agent can generate complete testing workflows for you. This democratizes test automation, removing barriers that previously limited participation to technical specialists.

AI agents can also analyze application architecture, user flows, and business logic to create test cases covering both happy paths and edge cases. They can automatically generate test data, identify validation points, and create assertions for comprehensive coverage.

Research also suggests that AI agents can generate test cases humans might miss, improving coverage. This capability is particularly valuable in agile environments where requirements change frequently.

2. Self-Healing Test Scripts

Traditional automation scripts break when applications change. For instance, it could be a change in button placement, element IDs, or the addition of new fields.

With self-healing tests, AI agents automatically adapt to these changes, maintaining test continuity without manual intervention. Instead of relying on specific locators, the agents work from an element's functional purpose.

Suppose you’ve prompted the AI testing agent to test your app's login functionality, and the login button is changed to “Sign in” and placed at a different location.

Unlike traditional scripts that would require edits, AI agents look around on the page to find a login button. Since they’re based on LLMs, AI testing agents also understand that “login” and “sign in” mean the same thing. And they continue testing with the changed button.
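The login example can be sketched as a lookup by purpose rather than by ID. This is a minimal illustration under stated assumptions: the page is a simplified list of dictionaries rather than a real DOM, `find_by_purpose` is a hypothetical helper, and the hand-written `SYNONYMS` table stands in for the language understanding an LLM-based agent would supply.

```python
# Hypothetical synonym table standing in for an LLM's language understanding.
SYNONYMS = {
    "login": {"login", "log in", "sign in", "signin"},
}


def find_by_purpose(page, purpose, role="button"):
    """Return the first element of the given role whose label matches the purpose.

    `page` is a simplified stand-in for a DOM: a list of dicts with
    "id", "role", and "text" keys. Returns None if nothing matches.
    """
    wanted = SYNONYMS.get(purpose, {purpose})
    for element in page:
        label = element["text"].strip().lower()
        if element["role"] == role and label in wanted:
            return element
    return None


old_page = [{"id": "btn-login", "role": "button", "text": "Login"}]
new_page = [
    {"id": "nav-home", "role": "link", "text": "Home"},
    {"id": "cta-7f3a", "role": "button", "text": "Sign in"},
]

# A script pinned to the old ID finds nothing on the new page...
by_id = next((e for e in new_page if e["id"] == "btn-login"), None)
# ...while a purpose-based lookup still finds the renamed, relocated button.
by_purpose = find_by_purpose(new_page, "login")
```

Here `by_id` is `None` because the ID changed, while `by_purpose` resolves to the "Sign in" button, so the test can continue without an edit.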

3. Shift-Left Testing

Shift-left testing is the practice of moving testing activities earlier in the development life cycle. AI testing agents help your team shift left because developers can author tests in plain English, which encourages them to run as many tests as possible locally before pushing code to the pipeline.

The result is fewer bugs in production and improved DORA metrics for your team, especially a reduced change failure rate.

4. Predictive AI Testing Agents

These AI testing agents are changing how QA teams handle defects and project risk. Unlike traditional test suites that catch failures after the fact, predictive agents actively scan patterns in test results, code changes, and historical defects to flag tests most likely to fail in the future. This proactive approach enables you to address likely problem areas before they turn into production outages or customer complaints.

With more data over time, these agents learn how new features, user behaviors, and system dependencies affect software stability. They provide targeted recommendations on what to test, where regressions are probable, and which tests might be redundant—all tailored to your evolving application.

As a result, teams can allocate time more efficiently, prioritize critical paths, and minimize risk, leading to fewer escaped bugs and a more robust product release after release.
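One simple way to picture this kind of prioritization is a risk score that combines a test's historical failure rate with whether it covers files touched by the current change. The sketch below is an assumption-laden illustration, not a description of any vendor's model: the function names, the data layout, and the equal 50/50 weighting are all hypothetical.

```python
def failure_risk(test, changed_files, history):
    """Score a test from 0 to 1 using past failures and overlap with the change."""
    runs = history.get(test["name"], [])
    # Fraction of past runs that failed (0 if the test has never run).
    fail_rate = runs.count("fail") / len(runs) if runs else 0.0
    # 1.0 if the test covers any file touched by this change, else 0.0.
    touches_change = 1.0 if set(test["covers"]) & set(changed_files) else 0.0
    # Equal weighting is an arbitrary choice for this sketch.
    return 0.5 * fail_rate + 0.5 * touches_change


def prioritize(tests, changed_files, history):
    """Return test names ordered from highest to lowest risk."""
    ranked = sorted(
        tests,
        key=lambda t: failure_risk(t, changed_files, history),
        reverse=True,
    )
    return [t["name"] for t in ranked]


tests = [
    {"name": "test_search", "covers": ["search.py"]},
    {"name": "test_checkout", "covers": ["cart.py", "payment.py"]},
    {"name": "test_login", "covers": ["auth.py"]},
]
history = {
    "test_search": ["pass", "pass", "pass", "pass"],
    "test_checkout": ["pass", "fail", "fail", "pass"],
    "test_login": ["pass", "pass", "fail", "pass"],
}
order = prioritize(tests, changed_files=["payment.py"], history=history)
```

With a change to `payment.py`, the checkout test ranks first because it both covers the changed file and has failed before. A production system would likely learn its weights from data instead of fixing them by hand.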

Top AI-Native Testing Agents on The Market

KaneAI is a generative AI testing agent built for planning, creating, and editing tests using simple natural language. It allows testers to write test steps in plain English, then automatically converts those instructions into executable code for web, mobile, or API applications. This makes it possible to build and maintain complex test suites quickly, even without deep technical skills. KaneAI can also automatically update tests when applications change, reducing time spent on manual maintenance.

HyperExecute is a blazing-fast, AI-native test orchestration and execution platform. It manages the way tests are scheduled and run across thousands of environments, making sure you get the test results as quickly and reliably as possible. With HyperExecute, you can run your tests on cloud infrastructure that automatically scales to your needs, making it suitable for everything from quick local checks to large-scale, parallelized testing across different browsers and devices.

SmartUI is an AI visual testing agent. It detects changes in user interfaces by comparing screenshots, highlighting only the differences that matter while filtering out noise. This helps teams quickly spot unintended shifts in appearance or layout across browsers and devices without reviewing each change manually. SmartUI is especially helpful for releases with frequent frontend updates or when UI consistency is critical.
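To make "filtering out noise" concrete, here is a minimal sketch of a tolerance-based screenshot comparison. It is not SmartUI's algorithm, which is not described in this article. It assumes screenshots are same-sized grids of grayscale values, and the `tolerance` and `max_changed_ratio` thresholds are arbitrary illustrative defaults.

```python
def changed_pixels(baseline, candidate, tolerance=10):
    """Return (row, col) positions whose grayscale values differ by more than tolerance."""
    diffs = []
    for r, (row_a, row_b) in enumerate(zip(baseline, candidate)):
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > tolerance:
                diffs.append((r, c))
    return diffs


def is_visual_regression(baseline, candidate, tolerance=10, max_changed_ratio=0.05):
    """Flag a regression only if enough pixels changed by a meaningful amount."""
    total = sum(len(row) for row in baseline)
    changed = len(changed_pixels(baseline, candidate, tolerance))
    return changed / total > max_changed_ratio


baseline = [[200] * 4 for _ in range(4)]
# Rendering noise: every pixel shifts slightly, below the tolerance.
noisy = [[203] * 4 for _ in range(4)]
# A real change: one full row of the UI goes dark.
broken = [row[:] for row in baseline]
broken[1] = [20, 20, 20, 20]
```

The noisy screenshot is not flagged because no pixel moves by more than the tolerance, while the broken one is flagged because a quarter of its pixels changed substantially. AI-based tools go further by reasoning about layout and content, which a fixed threshold cannot do.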

Agent-to-Agent Testing is LambdaTest’s AI system for validating other AI agents, such as chatbots, voice assistants, and even phone assistants. This platform tests how AI models handle conversation, reasoning, intent, and context by generating real-world scenarios and measuring for accuracy, bias, and other critical factors. It’s designed to help teams ensure that their AI-powered applications work as intended and meet enterprise standards before going live.

Where AI Testing Agents Excel (and Where They Don’t)

AI testing agents shine in specific scenarios that have traditionally been testing nightmares.

- Regression testing: AI agents automatically identify what changed in your code and generate appropriate tests. They prioritize based on risk, running critical path tests first. API testing benefits from automatic test generation from specifications and intelligent handling of non-deterministic responses.

- Visual and UI testing: AI-powered visual comparison clearly sees the difference between acceptable variations and actual bugs. Mobile testing across hundreds of device configurations becomes manageable with natural language test creation that works across platforms.

- Performance testing: AI agents analyze historical data to anticipate bottlenecks before they happen. They simulate realistic load conditions based on actual user patterns, not theoretical models.

But let's be honest, there are still some limitations.

- AI agents struggle with subjective user experience evaluation. They can't tell you if something "feels" right to users. Creative testing scenarios that require human intuition remain challenging. Understanding complex business logic, especially when it's not well-documented, can trip them up.

- There’s data dependency. These systems need quality training data, and 54% of data teams still depend on manual testing for data quality validation. That's a circular dependency we're still solving.

- Integration with legacy systems can be complex. If your tech stack includes 20-year-old mainframe systems, AI testing integration won't be plug-and-play. Non-deterministic AI responses create validation challenges—how do you test something when correct answers vary?
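One common way to approach the last question is to assert on properties every acceptable answer must have, instead of comparing against a single expected string. The sketch below assumes a hypothetical chatbot that answers questions about a 30-day refund policy. The policy, the `check_refund_reply` helper, and its rules are invented for illustration.

```python
import re


def check_refund_reply(reply):
    """Validate properties of a chatbot reply instead of its exact wording.

    Returns a list of failed checks; an empty list means the reply passes.
    The specific rules are hypothetical examples.
    """
    failures = []
    text = reply.lower()
    if "refund" not in text:
        failures.append("must mention refunds")
    # Accept any phrasing that states the (assumed) 30-day window.
    if not re.search(r"\b30[- ]days?\b", text):
        failures.append("must state the 30-day window")
    if len(reply) > 300:
        failures.append("must be concise")
    return failures


replies = [
    "You can request a refund within 30 days of purchase.",
    "Sure! Refunds are available for 30 days after you buy.",
    "Please contact support for help with your order.",
]
results = [check_refund_reply(r) for r in replies]
```

The first two replies are worded differently but both pass, while the third fails two checks. Property checks like these tolerate variation in phrasing while still catching answers that miss the required facts.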

The Market for AI Test Agents Is Growing Fast

Enterprises are generally the slowest when it comes to adopting new technologies. But when adoption moves fast, you know something fundamental has shifted.

We're currently seeing the AI testing market grow from $856.7 million in 2024 to a projected $3.8 billion by 2032, a 20.9% compound annual growth rate (CAGR).

To put it in perspective, growth on that scale means the market more than quadruples in eight years.
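For readers who want to check growth figures like these, the standard compound annual growth rate formula is easy to apply. The sketch below uses only the two dollar amounts quoted above and assumes eight compounding years (2024 to 2032). Published CAGR figures often use a different base year or forecast window, so a small gap between this calculation and a quoted rate is expected.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in millions of US dollars.
start, end = 856.7, 3800.0
# Assumes compounding over the eight years from 2024 to 2032.
rate = cagr(start, end, years=2032 - 2024)
# Overall growth multiple across the whole period.
multiple = end / start
```

Under these assumptions the rate comes out at roughly 20% per year, and the market grows by a factor of about 4.4 over the period.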

But is it just the benefits of the technology driving this explosion?

I think it’s just perfect timing for the technology, along with these four points that keep coming up in every conversation I have with other leaders:

- Speed pressure from DevOps. Teams are deploying multiple times daily, and traditional testing can't keep up.

- Application complexity has reached a point where human testers can't possibly cover every scenario.

- AI testing now costs 30-90% less than traditional approaches.

- We're facing a massive skills gap. Good QA engineers are hard to find, and AI is democratizing testing for entire teams.

Gartner predicts 80% of enterprises will use AI-augmented testing by 2027. If you're not exploring this now, you could be in the minority by then.

Implementation Strategy Based on Market Experience

Start with assessment and planning (months 0-6). Evaluate your current testing maturity honestly and identify your biggest testing pain points. Is it speed, coverage, or maintenance? Then, define clear success metrics in terms of what would make the investment in AI testing agents worthwhile.

Begin with a pilot program. Budget $10,000–50,000 for initial implementation. At this stage, you might ask: do you build AI agents in-house, or leverage a proven platform? While building may sound tempting, it typically requires deep AI expertise, extended development time, and ongoing maintenance—and that’s before you ever see value in production. Most organizations find it far more efficient to work with a leading platform that offers AI-native testing agents out of the box, ready to deliver measurable results. For example, platforms like LambdaTest provide production-ready AI agents, so your team can skip the prolonged R&D phase and focus on deploying, measuring, and optimizing testing outcomes.

Choose one or two specific testing scenarios where AI can demonstrate clear value. Measure everything: time saved, bugs found, and costs reduced.

Focus on team development. Implementing AI agents is as much about upskilling and training your existing employees as it is about licensing software. This is a new way of thinking and working for your team.

Scale strategically (months 6–18). Expand from pilot to wider implementation gradually, and make sure you establish proper governance frameworks for AI use. As your teams mature, document best practices, and build feedback loops between AI results and human expertise. This documentation will serve as a valuable resource for new team members and help position your organization as a thought leader in AI-enabled QA.

The key thing to remember is that AI testing agents, no matter how sophisticated, still need a human in the loop (HITL) to verify findings, test cases, and scripts. AI agents are not here to replace your testing team—they’re here to empower your team to be faster and far more efficient. You want a human to give the final pass before any code goes to production, ensuring quality and confidence at every release.

Does Adopting AI Testing Agents Make Sense For You?

My answer is a resounding yes. But that's not because I work with LambdaTest and love our product.

It’s because we can clearly see AI agents aren’t a fleeting technological trend. They’re a complete workflow change and definitely for the better.

But the window for early advantage is closing quite fast. With 81% of teams already using AI in testing, being a fast follower means being average. You want to be AI-mature before your competitors adopt it.

Here's my advice after watching countless clients switch to AI-native testing: Start small, but start now.

The best time to plant a tree was 20 years ago. The second-best time is now. The same applies to AI testing agent adoption.
