Top 40 Test Engineer Interview Questions and Answers [2025]

Master 40 top test engineer interview questions, from basics to advanced, designed to help freshers and experienced engineers tackle interviews with confidence.

Last Modified on: September 26, 2025

OVERVIEW

Test engineering is a specialized domain in today’s tech landscape. Whether you're testing web or mobile-based applications, companies rely on skilled test engineers to ensure software quality and reliability.

A strong grasp of testing principles, tools, and methodologies can set you apart in interviews. Whether you're new to the field or an experienced professional, this guide is crafted to support your preparation for test engineer interview questions.

For freshers, we cover key areas like test plans, test cases, and automation basics. For those with more experience, we explore advanced topics such as continuous testing, risk-based testing, and integrating automation with CI/CD pipelines.

Understanding these questions and answers will enhance your ability to present your skills confidently and effectively. Let’s get started on your path to acing your test engineer interview.

Fresher-Level Test Engineer Interview Questions

If you're preparing for a career in software testing, this guide covers essential test engineer interview questions aimed at freshers. These questions focus on core testing principles, QA processes, and real-world scenarios you’re likely to encounter in a test engineering role.

Whether you're applying for manual or automation testing positions, mastering these topics will help you stand out in interviews.

1. What Is the Difference Between a Test Plan and a Test Strategy?

A test plan is a detailed document that outlines the specific testing activities for a project, including scope, approach, resources, and schedule. It provides actionable details such as test objectives, features to be tested, and success criteria.

In contrast, a test strategy is a high-level document that defines the overall testing approach and methodologies applied across multiple projects or the entire organization.

It typically includes testing types, tools, and standards. Understanding the difference between a test plan and a test strategy is essential for both manual and automation testers, and this topic frequently appears in test engineer interview questions.

2. How Do You Write a Test Case?

Writing a test case involves defining a structured set of steps to validate a specific feature or function of an application. A typical test case includes a unique ID, objective, preconditions, detailed test steps, expected results, actual results, and execution status.

Well-written test cases are clear, repeatable, and aligned with the requirements. Writing effective test cases is a core responsibility for any test engineer, and this question frequently appears in test engineer interview questions.

Steps to write a test case:

- Identify the Test Case Objective: Clearly define what the test case is intended to verify or validate.

- Define Test Case Title: Provide a concise and descriptive title that indicates the purpose of the test case.

- Specify Preconditions: Outline any conditions or prerequisites that must be met before executing the test case.

- Detail Test Steps: List the precise actions to be performed during the test.

- Outline Expected Results: Describe the expected outcome for each step or the overall result of the test case.

- Include Test Data: Specify any data required to execute the test case.

- Describe Postconditions: State the condition the system should be left in after execution (for example, test data that must be cleaned up), if applicable.

- Document Test Case ID: Assign a unique identifier for easy reference and tracking.

- Write Test Case Description: Provide a detailed explanation of what the test case is designed to verify or validate.

- Record Actual Result: Document the actual outcome observed when the test case is executed.

- Update Status: Indicate the current state of the test case, such as "Pass," "Fail," "In Progress," or "Not Executed."
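
To see how these fields fit together, here is a minimal Python sketch that models one test case as a structured record. The `DocumentedTestCase` class, its field names, and the sample login data are hypothetical illustrations, not the format of any particular test management tool, and the Pass/Fail rule is a simple exact-match comparison chosen for clarity.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    """Execution states mentioned in the steps above."""
    NOT_EXECUTED = "Not Executed"
    IN_PROGRESS = "In Progress"
    PASS = "Pass"
    FAIL = "Fail"


@dataclass
class DocumentedTestCase:
    """One test case with the fields a tester typically records (hypothetical structure)."""
    case_id: str
    title: str
    objective: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str
    test_data: dict[str, str] = field(default_factory=dict)
    postconditions: list[str] = field(default_factory=list)
    actual_result: str = ""
    status: Status = Status.NOT_EXECUTED

    def record(self, actual: str) -> None:
        """Store the observed outcome and set Pass/Fail by exact comparison."""
        self.actual_result = actual
        self.status = Status.PASS if actual == self.expected_result else Status.FAIL


login_case = DocumentedTestCase(
    case_id="TC-LOGIN-001",
    title="Login succeeds with valid credentials",
    objective="Verify that a registered user can log in",
    preconditions=["User account exists", "Login page is reachable"],
    steps=["Open the login page", "Enter a valid username and password", "Click Login"],
    expected_result="Dashboard is displayed",
    test_data={"username": "demo_user", "password": "valid-password"},
    postconditions=["Log the user out"],
)
login_case.record("Dashboard is displayed")
print(login_case.case_id, login_case.status.value)  # TC-LOGIN-001 Pass
```

Keeping the expected result as its own field is what makes the Pass/Fail decision repeatable: anyone executing the case compares against the same documented outcome.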

3. What Is a Test Scenario?

A test scenario is a high-level statement that defines what functionality or feature needs to be tested. It typically stems from use cases or user stories and serves as a foundation for writing multiple detailed test cases.

Test scenarios help ensure comprehensive coverage and make it easier to prioritize testing efforts. This concept comes up frequently in test engineer interviews.

4. What Is Automation Testing, and When Should It Be Used?

Automation testing uses tools to execute test cases without manual intervention. It's best used for repetitive tasks, regression testing, and large-scale test suites requiring consistent results.

Automation enhances speed, accuracy, and coverage. This concept is commonly asked in both beginner and advanced test engineer interview questions.

5. Explain the Benefits and Challenges of Automation Testing

Automation testing offers numerous advantages, including increased efficiency, consistency, and broader test coverage. However, it also presents certain challenges that need to be addressed.

Here’s a detailed look at the benefits and challenges associated with automation testing:

Benefits:

- Efficiency: Automation reduces the time and effort required to execute repetitive test cases.

- Consistency: Automated tests run the same steps the same way every time, removing human error from execution and providing consistent results.

- Coverage: Automation allows for extensive testing across various scenarios and data sets.

- Reusability: Test scripts can be reused across different test cycles and versions.

Challenges:

- Initial Cost: High setup costs for tools and initial development of test scripts.

- Maintenance: Test scripts require ongoing updates and maintenance as the application evolves.

- Complexity: Automation may struggle with highly dynamic or complex user interfaces.

- Skill Requirements: Requires knowledge of programming and the chosen automation tools.

6. What Are Some Popular Automation Testing Tools?

Automation testing encompasses various types of testing, each addressing different aspects of software quality.

Here’s a brief overview of key types of automation testing and some popular automation testing tools used in each area. For a fresher test engineer, knowledge of these tools is important, as questions about them come up frequently in test engineer interviews.

| Type of Automation | Description | Tools |
|---|---|---|
| UI Testing | Tests the user interface of an application to ensure it meets design specifications and functions correctly. | Selenium, Playwright, TestCafe, Cypress |
| API Testing | Verifies that APIs work as expected, handling requests and responses correctly. | Postman, Rest Assured, SoapUI, Insomnia REST |
| Performance Testing | Assesses the application's performance under various conditions, including load and stress scenarios. | JMeter, LoadRunner, Gatling, k6 |
| Unit Testing | Focuses on testing individual units or components of the application to ensure they function correctly. | JUnit, NUnit, TestNG, PyTest |
| Integration Testing | Ensures that different components or systems work together as expected. | TestNG, JUnit, Postman, Rest Assured |
| Mobile Testing | Validates that mobile applications perform well across different devices and operating systems. | Appium, Espresso, XCUITest, Detox |

7. What Is a Test Automation Framework?

A test automation framework provides a structured approach to writing and managing automated tests. It includes components such as test libraries, data management, execution strategies, reporting tools, and integration with CI/CD systems.

Frameworks enhance reusability, scalability, and team collaboration. For freshers, understanding what a test automation framework is can be crucial, as this topic is frequently highlighted in test engineer interview questions.

8. What Is a Bug Tracking Tool? Name Some Popular Ones

A bug tracking tool is software designed to help teams manage, track, and report on software defects or issues throughout the development lifecycle. It assists in organizing bugs by severity or priority, assigning them to specific team members, and tracking their progress until resolution.

Having solid knowledge of bug tracking tools is crucial, as they play a key role in defect management. This topic frequently appears in test engineer interview questions because it highlights the importance of maintaining transparency and ensuring that all issues are addressed promptly, ultimately improving the overall quality of the software.

Popular Bug Tracking Tools:

- JIRA: A widely used tool for bug tracking, issue management, and project planning. It supports workflows, agile project management, and integrates with numerous other tools.

- Bugzilla: An open-source bug tracking tool known for its advanced search capabilities, comprehensive bug management features, and customizable workflows.

- Mantis: A web-based bug tracking system with an intuitive, user-friendly interface. It supports collaboration, notifications, and role-based access control.

- Trello: Primarily a task management tool, but it can be adapted for bug tracking using its Kanban-style boards. It's popular for its simplicity and flexibility.

9. Explain the Importance of Version Control in Testing

Version control is essential in testing because it helps manage and track changes to test scripts, test cases, and other testing artifacts over time. Here’s why it matters:

- Consistency: Version control ensures the latest version of test scripts or documents is used, preventing errors caused by outdated files. For example, using Git, teams can pull the most recent test scripts before running tests, ensuring consistency.

- Collaboration: It allows multiple team members to work on the same test artifacts without conflict. In tools like Git or SVN, multiple testers can work on different parts of the same test suite. Changes can be merged into a common branch without overwriting each other’s work.

- History Tracking: Every modification is tracked, allowing the team to see who made changes and why. For example, if a new test script causes issues, you can use Git to compare it with the previous version and identify the problematic changes.

- Backup and Recovery: Version control serves as a backup for all previous versions of test artifacts. If a tester accidentally deletes a critical test script, Git lets you recover the file from a previous commit, whether the repository is hosted on GitHub, Bitbucket, or elsewhere.

This question is commonly featured in test engineer interview questions, as understanding version control is essential for maintaining quality and collaboration in real-world testing environments.

Example Tools:

- Git: A widely used version control system that tracks changes and allows collaboration among team members. Platforms like GitHub or GitLab help manage test scripts and versions.

- SVN (Subversion): Another version control system often used in larger organizations for managing versions of testing artifacts.

10. What Is Regression Testing?

Regression testing is a type of software testing aimed at ensuring that recent changes or updates to the software have not negatively impacted existing functionality. The main goal is to verify that previously developed and tested features continue to work as expected after any modifications, such as bug fixes, enhancements, or new features.

Essentially, regression testing helps catch any unintended side effects caused by recent changes, ensuring that the software maintains its reliability and stability over time.

This question is covered in most test engineer interviews, as it reflects a test engineer’s ability to safeguard existing functionality while accommodating updates.
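
A common way to automate regression checks is a baseline (golden-output) comparison: record outputs from the last known-good build, then re-run the same inputs after every change. The Python sketch below uses a hypothetical `price_with_tax` function, a deliberately broken variant, and a hand-written baseline purely for illustration.

```python
import math


def price_with_tax(amount: float, rate: float = 0.2, loyalty: bool = False) -> float:
    """Current build. The `loyalty` discount is the newly added feature."""
    total = amount * (1 + rate)
    if loyalty:
        total *= 0.95
    return round(total, 2)


def price_with_tax_broken(amount: float, rate: float = 0.2, loyalty: bool = False) -> float:
    """Simulated regression: the new discount is accidentally applied to everyone."""
    return round(amount * (1 + rate) * 0.95, 2)


# Outputs recorded from the previous, known-good release (inputs -> expected total).
BASELINE = {
    (100.0, 0.2): 120.0,
    (19.99, 0.2): 23.99,
    (0.0, 0.2): 0.0,
}


def run_regression(func, baseline):
    """Re-run every baseline input against `func` and collect mismatches."""
    failures = []
    for (amount, rate), expected in baseline.items():
        actual = func(amount, rate)
        if not math.isclose(actual, expected, abs_tol=1e-9):
            failures.append(f"{func.__name__}({amount}, {rate}) = {actual}, expected {expected}")
    return failures


print(run_regression(price_with_tax, BASELINE))              # []
print(len(run_regression(price_with_tax_broken, BASELINE)))  # 2
```

The new loyalty feature does not change any baseline result, so the current build passes, while the broken variant is caught on two of the three existing cases even though nobody wrote a new test for it.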

11. How Do You Ensure the Quality of a Product?

Ensuring the quality of a product involves a structured approach to testing and continuous improvement.

Here’s how you can achieve this:

- Planning: Start by developing a detailed test plan and strategy. This includes outlining the scope, objectives, resources, and schedule for testing activities.

- Execution: Conduct various types of testing, such as functional, non-functional, and performance testing, to cover different aspects of the product’s functionality and reliability.

- Review: Regularly review test results and defect reports to identify issues and assess the quality of the product. This helps in understanding where improvements are needed.

- Feedback: Incorporate feedback from users and stakeholders to address real-world concerns and refine the product based on actual use cases.

- Improvement: Continuously enhance your testing processes and methodologies by applying lessons learned from previous testing cycles and adapting to new challenges.

This approach is commonly referenced in test engineer interviews, as beginners are expected to have a practical understanding of quality assurance principles.

12. How Do You Prioritize Test Cases?

Prioritizing test cases helps ensure that the most critical aspects of the application are tested first, focusing on areas that impact the overall quality and functionality.

Here’s how you can prioritize test cases effectively:

Prioritization Criteria:

- Business Impact: Test cases related to critical business functions or key user workflows should be prioritized higher, as they directly affect the business operations and user experience.

- Risk: Areas with higher risk or potential for failure, such as new or complex features, should receive higher priority to mitigate the likelihood of significant issues.

- Frequency of Use: Features that are frequently used by end-users should be tested more thoroughly to ensure reliability and performance in high-traffic areas.

- Complexity: Complex features, or those that have undergone recent changes, may need more focus due to their higher likelihood of containing defects.

- Regulatory Compliance: Test cases related to regulatory and compliance requirements should be prioritized to ensure the product meets necessary legal and industry standards.

By considering these criteria, you can systematically address the most important aspects of your application first, making the testing process more effective and efficient. Test case prioritization is another question that often appears in test engineer interviews.
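
One simple way to apply these criteria is a weighted score per test case. The Python sketch below assumes each criterion is rated from 1 to 5; the weights, the `CandidateCase` class, and the sample test cases are hypothetical values chosen for illustration, not a standard formula.

```python
from dataclasses import dataclass

# Hypothetical weights for the criteria above; they should reflect your own project's risks.
WEIGHTS = {
    "business_impact": 0.30,
    "risk": 0.25,
    "frequency_of_use": 0.20,
    "complexity": 0.15,
    "regulatory": 0.10,
}


@dataclass
class CandidateCase:
    name: str
    ratings: dict[str, int]  # each criterion rated from 1 (low) to 5 (high)

    def score(self) -> float:
        """Weighted sum of the ratings; unrated criteria count as 0."""
        return sum(weight * self.ratings.get(criterion, 0) for criterion, weight in WEIGHTS.items())


def prioritize(cases: list[CandidateCase]) -> list[CandidateCase]:
    """Highest score first; ties keep their original order (sorted() is stable)."""
    return sorted(cases, key=lambda case: case.score(), reverse=True)


checkout = CandidateCase("checkout_payment", {"business_impact": 5, "risk": 4, "frequency_of_use": 5, "complexity": 4, "regulatory": 3})
export = CandidateCase("gdpr_data_export", {"business_impact": 3, "risk": 3, "frequency_of_use": 1, "complexity": 3, "regulatory": 5})
avatar = CandidateCase("profile_avatar_upload", {"business_impact": 1, "risk": 2, "frequency_of_use": 2, "complexity": 1, "regulatory": 1})

for case in prioritize([avatar, export, checkout]):
    print(f"{case.score():.2f} {case.name}")
```

A weighted sum is only one option. Some teams instead treat regulatory test cases as mandatory regardless of score and rank only the remaining cases.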

The test engineer interview questions covered above are fundamental and essential for any fresher to know, as they form the basic foundation of software testing and quality assurance. Understanding these basics is crucial for building a strong testing skill set and performing well in interviews.

As you progress, you'll encounter intermediate-level test engineer interview questions designed to deepen your knowledge and enhance your expertise in areas like automation, frameworks, and defect management. This will help you handle more complex testing scenarios and grow your career in the field.

Intermediate-Level Test Engineer Interview Questions

These test engineer interview questions cover more advanced topics and are ideal for candidates with some experience in software testing. They are designed to assess your ability to handle complex testing scenarios, implement automation, and optimize testing processes, helping you further enhance your skills.

13. Explain the Difference Between Verification and Validation

Understanding the difference between verification and validation is crucial for ensuring product quality. Verification focuses on confirming that the product is built according to specified requirements and design, while validation ensures that the product meets the needs and expectations of its end-users.

The following table highlights these distinctions:

| Aspect | Verification | Validation |
|---|---|---|
| Definition | The process of ensuring that the product is built according to the specified requirements and design. | The process of ensuring that the product meets the needs and expectations of the end-users. |
| Focus | Confirms that the product aligns with requirements and design specifications. | Confirms that the product fulfills its intended purpose and delivers value to the customer. |
| Primary Question | "Are we building the product according to the design and specifications?" | "Does the product meet the needs and expectations of the users?" |
| Objective | To ensure the correctness of the product in terms of adherence to specifications. | To ensure the product is useful and meets user expectations and requirements. |
| Process | Involves reviews, inspections, and testing during the development phase. | Typically involves testing in real or simulated environments. |

This distinction is asked about in most test engineer interviews, as understanding these concepts is vital for quality assurance professionals responsible for product reliability.

14. What Are the Key Elements of a Bug Report?

A comprehensive bug report helps ensure that issues are resolved efficiently and that all necessary information is communicated clearly. The following table outlines the key elements of a bug report, including additional components like Fix Version and Environment:

| Component | Description |
|---|---|
| Bug ID | A unique identifier assigned to the bug for tracking purposes. |
| Summary | A brief overview or title that describes the nature of the bug. |
| Description | A detailed explanation of the issue, including how it was discovered and its impact. |
| Steps to Reproduce | A list of actions or steps needed to replicate the bug. |
| Expected Result | The behavior that should occur if the bug did not exist. |
| Actual Result | The behavior that actually occurred. |
| Severity | An indication of the bug’s impact on the application (e.g., minor, major, critical). |
| Priority | Specifies the urgency for fixing the bug (e.g., low, medium, high). |
| Affected Version | Details the specific version(s) of the product affected by the bug. |
| Fix Version | The version in which the bug is expected to be fixed or has been resolved. |
| Assignee | The team member or individual responsible for addressing the bug. |
| Status | The current state of the bug (e.g., New, In Progress, Resolved, Closed). |
| Attachments | Relevant files or screenshots that help illustrate the problem (e.g., error logs, screenshots). |
| Environment | The environment in which the bug was encountered (e.g., operating system, browser version). |

A strong knowledge of these key elements helps you demonstrate your skills as a test engineer, and the topic comes up in most test engineer interviews.
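
The table translates directly into a structured record, which also makes it easy to reject incomplete reports before they reach developers. The Python sketch below is a hypothetical model (the `BugReport` class, the required-field list, and the sample bug are assumptions, not any tracker's schema). It also shows that severity and priority are independent: a cosmetic typo on a highly visible page is minor in impact but high in urgency.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    """Impact on the application."""
    MINOR = "Minor"
    MAJOR = "Major"
    CRITICAL = "Critical"


class Priority(Enum):
    """Urgency of the fix, decided independently of severity."""
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"


REQUIRED_TEXT_FIELDS = ("bug_id", "summary", "description", "expected_result",
                        "actual_result", "affected_version", "environment")


@dataclass
class BugReport:
    bug_id: str
    summary: str
    description: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    severity: Severity
    priority: Priority
    affected_version: str
    environment: str
    status: str = "New"
    assignee: str = ""
    fix_version: str = ""
    attachments: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """List required fields left blank, so incomplete reports can be bounced back early."""
        missing = [name for name in REQUIRED_TEXT_FIELDS if not getattr(self, name).strip()]
        if not self.steps_to_reproduce:
            missing.append("steps_to_reproduce")
        return missing


# Low severity but high priority: a cosmetic typo on a highly visible page.
typo_bug = BugReport(
    bug_id="BUG-1042",
    summary="Company name misspelled on home page banner",
    description="The banner reads 'Acme Corpration' instead of 'Acme Corporation'.",
    steps_to_reproduce=["Open the home page", "Look at the top banner"],
    expected_result="Banner shows 'Acme Corporation'",
    actual_result="Banner shows 'Acme Corpration'",
    severity=Severity.MINOR,
    priority=Priority.HIGH,
    affected_version="2.3.0",
    environment="",
)
print(typo_bug.missing_fields())  # ['environment']
```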

15. What Is Exploratory Testing, and When Is It Used?

Exploratory Testing is a testing approach where testers explore the application without relying on predefined test cases. Instead, testers use their knowledge, experience, and intuition to identify defects and verify functionality.

This approach is particularly valuable in several scenarios:

- Requirements Are Incomplete: When the documentation or requirements are lacking or unclear, exploratory testing allows testers to investigate the application based on their understanding and find issues that may not be covered by existing test cases.

- Testing Is Ad-Hoc: This approach is useful for discovering issues that may not be captured by formal test cases. Testers explore the application to uncover unexpected defects and assess the application's behavior in real-world scenarios.

- New Features: When new or changing functionality is introduced, exploratory testing helps quickly assess how these changes impact the application. It provides immediate feedback on the functionality and helps identify potential issues early in the development cycle.

Exploratory testing comes up in most test engineer interviews because it is a practical skill for situations where formal test scripts are insufficient or absent.

16. Describe the Process of Creating an Automated Test Script

Creating an automated test script involves a structured approach to ensure tests are efficient, maintainable, and deliver accurate results. Each step in the process plays a critical role in developing effective automation, from selecting the right test cases to analyzing results.

Below is an overview of the key steps involved in creating an automated test script:

- Select Test Cases: Begin by identifying which test cases are best suited for automation. Test cases that are repetitive, complex, or frequently executed (such as regression tests) are ideal candidates for automation. Prioritize based on factors like test coverage, potential time savings, and complexity.

- Set Up Environment: Configure the testing environment by setting up the necessary tools, frameworks, and dependencies. This includes installing automation tools (e.g., Selenium, Playwright), preparing the system under test, and ensuring proper access to environments, databases, and APIs.

- Develop Script: Write the automated test script using the selected tool or framework. In this step, you’ll define the test steps to interact with the application, verify expected outcomes, handle exceptions, and add necessary validations. Tools like Selenium, Rest Assured, or Appium can be used, depending on the type of testing (UI, API, or mobile).

- Execute Script: Run the test script in the prepared environment. During execution, the script will perform the specified actions, interact with the application, and capture test results. You can automate this process to run in different environments or across various devices.

- Analyze Results: After execution, review the test logs and results to verify whether the test passed or failed. Logs provide valuable insights into failures or errors, which can help in debugging and improving the script.

- Update Scripts: Over time, as the application evolves, test scripts will need to be updated to reflect changes in functionality or UI. Maintain the scripts regularly to ensure they stay relevant and functional across versions of the application.

For any automation tester, it's important to have a clear understanding of this process and its steps, as it is a core topic in automation testing and is covered in most test engineer interviews.
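
Here is a minimal sketch of those steps using Python's built-in `unittest` module. It assumes a hypothetical in-memory `LoginService` in place of a real application; a UI test would drive a browser through a tool such as Selenium or Playwright instead, but the set up, develop, execute, and analyze stages stay the same.

```python
import io
import unittest


class LoginService:
    """Hypothetical stand-in for the application under test."""

    def __init__(self, users):
        self._users = users

    def login(self, username, password):
        if self._users.get(username) != password:
            raise PermissionError("invalid credentials")
        return f"Welcome, {username}"


class LoginTests(unittest.TestCase):
    def setUp(self):
        # Set up environment: a fresh system under test for every test method.
        self.app = LoginService({"demo": "s3cret"})

    # Develop script: each method is one automated test case with its own validation.
    def test_valid_login_shows_welcome(self):
        self.assertEqual(self.app.login("demo", "s3cret"), "Welcome, demo")

    def test_wrong_password_is_rejected(self):
        with self.assertRaises(PermissionError):
            self.app.login("demo", "wrong")


def run_and_analyze():
    """Execute the script and return the result object plus the runner's log."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginTests)
    stream = io.StringIO()
    result = unittest.TextTestRunner(stream=stream, verbosity=2).run(suite)
    # Analyze results: result.failures and result.errors hold details of anything that broke.
    return result, stream.getvalue()


if __name__ == "__main__":
    result, log = run_and_analyze()
    print(log)
    print("All passed:", result.wasSuccessful())
```

When the login screen changes, only the test methods need updating, which is the "Update Scripts" step in practice.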

17. What Are the Best Practices for Maintaining Test Scripts?

Maintaining test scripts effectively ensures their reliability and relevance.

Here are some best practices with examples:

- Modularity: Design scripts in a modular fashion to enhance reusability and maintainability.

Example: Create separate functions for login, data entry, and validation tasks in a test script, allowing each function to be reused across multiple tests.

- Version Control: Use version control systems like Git to track changes and manage different versions of scripts.

Example: Commit your test scripts to a Git repository to keep a history of changes, collaborate with team members, and revert to previous versions if a new change introduces issues.

- Regular Updates: Keep scripts updated to reflect changes in the application or requirements.

Example: Update a test script that verifies a new feature, such as a redesigned login page, to ensure it tests the latest version of the feature accurately.

- Documentation: Document scripts and their purpose thoroughly.

Example: Include comments in your script explaining the purpose of each function and any specific parameters used, such as “// Function to verify user login using valid credentials.”

- Error Handling: Implement robust error handling and logging within your scripts.

Example: Add try-catch blocks to handle exceptions and log error messages, so if a test fails, you get a detailed error report indicating what went wrong and where.

These best practices come up often in test engineer interviews, as they demonstrate the ability to write maintainable and scalable automation scripts.
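
The modularity and error-handling practices can be combined as in the Python sketch below: small reusable step functions, plus a wrapper that logs which step failed before re-raising so the test still fails. `FakeApp`, the step functions, and the SKU values are hypothetical stand-ins so the example runs without a browser.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("checkout_tests")


class FakeApp:
    """Hypothetical application stub so the sketch runs without a browser."""

    def __init__(self):
        self.user = None
        self.cart = []

    def login(self, username, password):
        if password != "s3cret":
            raise PermissionError("login rejected")
        self.user = username

    def add_to_cart(self, sku):
        self.cart.append(sku)


# Modularity: each reusable step is one small, documented function.
def login(app, username="demo", password="s3cret"):
    """Log in; uses valid credentials by default."""
    app.login(username, password)


def add_items(app, skus):
    """Add every SKU in `skus` to the cart."""
    for sku in skus:
        app.add_to_cart(sku)


def verify_cart_count(app, expected):
    """Validation step with a descriptive failure message."""
    assert len(app.cart) == expected, f"cart has {len(app.cart)} items, expected {expected}"


def run_step(name, func, *args):
    """Error handling: log which step failed (with traceback), then re-raise."""
    try:
        func(*args)
        log.info("step passed: %s", name)
    except Exception:
        log.exception("step failed: %s", name)
        raise


def checkout_flow(app, password="s3cret"):
    """A test built entirely from reusable steps."""
    run_step("login", login, app, "demo", password)
    run_step("add items", add_items, app, ["SKU-1", "SKU-2"])
    run_step("verify cart", verify_cart_count, app, 2)


checkout_flow(FakeApp())
```

Calling `checkout_flow(FakeApp(), password="bad")` would log "step failed: login" with a traceback and then raise `PermissionError`, so the failure report points straight at the broken step.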

18. How Do You Handle Test Data Management in Automation?

Effective test data management is crucial for successful automation testing.

Here are some key strategies and frameworks that can be used:

- Data Parameterization: Use variables to input different data sets into test cases. This approach allows for testing with various inputs without changing the test script. A data-driven testing (DDT) approach supports this by defining and managing test data separately from test logic.

- Data Generation: Create synthetic data specifically for testing purposes. This can include generating data with specific attributes or patterns to test various scenarios. Tools and libraries can help automate the creation of such data.

- External Data Sources: Retrieve test data from external sources such as databases, spreadsheets, or configuration files. This approach can be enhanced by using frameworks like Apache POI for Excel files or SQL libraries for database interactions, allowing for dynamic and extensive data retrieval.

- Data Cleanup: Ensure that test data is cleaned up or restored to its original state after tests are completed. This helps maintain a consistent testing environment. Frameworks like Cucumber (with its After hooks) and JUnit/TestNG (with setup and teardown methods) can assist in managing data cleanup processes.

By leveraging these strategies and frameworks, you can manage test data more efficiently, enhance the reliability of your automated tests, and maintain a stable testing environment.

Every test engineer should know these test data management techniques, and they are commonly addressed in test engineer interviews.
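
The four strategies can be seen together in one small Python sketch using the standard library's `unittest`, `csv`, `random`, and `tempfile` modules. The inline CSV stands in for an external file or database, and `fake_login` and `make_user` are hypothetical helpers, not part of any framework.

```python
import csv
import io
import random
import string
import tempfile
import unittest
from pathlib import Path

# External data source: normally a CSV/Excel file or a database table; inlined here.
LOGIN_CSV = """username,password,expected
demo,s3cret,ok
demo,wrong,rejected
,s3cret,rejected
"""


def load_cases(text):
    """Read test data rows as dictionaries keyed by the header."""
    return list(csv.DictReader(io.StringIO(text)))


def make_user(seed):
    """Data generation: seeded synthetic user, so any failure is reproducible."""
    rng = random.Random(seed)
    name = "".join(rng.choices(string.ascii_lowercase, k=8))
    return {"username": name, "email": f"{name}@example.test"}


def fake_login(username, password):
    """Hypothetical system under test."""
    return "ok" if username == "demo" and password == "s3cret" else "rejected"


class LoginDataTests(unittest.TestCase):
    def setUp(self):
        # Isolated workspace for any files the test creates.
        self.tmp = tempfile.TemporaryDirectory()
        self.workdir = Path(self.tmp.name)

    def tearDown(self):
        # Data cleanup: remove everything the test created.
        self.tmp.cleanup()

    def test_login_matrix(self):
        # Parameterization: one test method, many data rows.
        for row in load_cases(LOGIN_CSV):
            with self.subTest(row=row):
                self.assertEqual(fake_login(row["username"], row["password"]), row["expected"])

    def test_generated_user_is_written(self):
        user = make_user(seed=42)
        path = self.workdir / "user.csv"
        path.write_text(f"{user['username']},{user['email']}\n")
        self.assertTrue(path.read_text().startswith(user["username"]))
```

Using `subTest` means a single bad data row is reported on its own without stopping the remaining rows from running.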

19. How Do You Use a Test Management Tool?

A test management tool helps streamline and organize the testing process, making it easier for teams to plan, track, and manage their testing efforts.

Here's how you typically use it:

- Test Case Management: You can organize and document test cases, test scenarios, and requirements. The tool lets you create structured test cases and associate them with specific features or modules of your application.

- Test Execution: These tools allow you to schedule and execute your test cases. As tests are run, you can record the results, whether they pass, fail, or need re-testing.

- Defect Tracking: If a test case fails, you can log the defects directly within the tool. Most test management tools integrate with bug tracking systems to streamline this process.

- Reporting: One of the biggest advantages is reporting. You can generate detailed reports on test coverage, execution results, and other metrics, helping you understand the quality of the application and identify areas that need improvement.

Examples:

- TestRail: Popular for organizing test cases and providing in-depth reporting.

- Zephyr: Integrates well with JIRA and offers full test management within agile workflows.

- qTest: A robust tool for managing test cases, defects, and reporting, often used in larger teams.

Understanding how to utilize these tools is essential for test engineers to make proper use of resources, and it's a common topic in most test engineer interview questions, especially for roles that require extensive collaboration and traceability.

20. How Do You Perform Cross-Browser Testing?

Cross-browser testing ensures that a web application works consistently across different browsers and versions. This testing is crucial for verifying that the application's behavior and appearance are uniform for all users, regardless of their browser choice.

Ways to perform cross-browser testing:

- Manually: This involves testing the application on various browsers and devices. You would need to install different browsers (like Chrome, Firefox, Safari, and Edge) and test the application on each one to check for compatibility issues. This method can be time-consuming, but it is useful for spot-checking and validating the user experience.

- Automated: Using specialized tools to automate cross-browser testing can save time and increase coverage. Frameworks such as Selenium, Cypress, and Playwright can be combined with cloud-based platforms like LambdaTest to run the same tests across many browser and OS combinations.

A cloud platform like LambdaTest helps address common cross-browser testing challenges, such as inconsistent rendering across browsers, limited local device and browser availability, time-consuming test cycles, and difficulty running tests in parallel. I have personally used LambdaTest to overcome these issues.

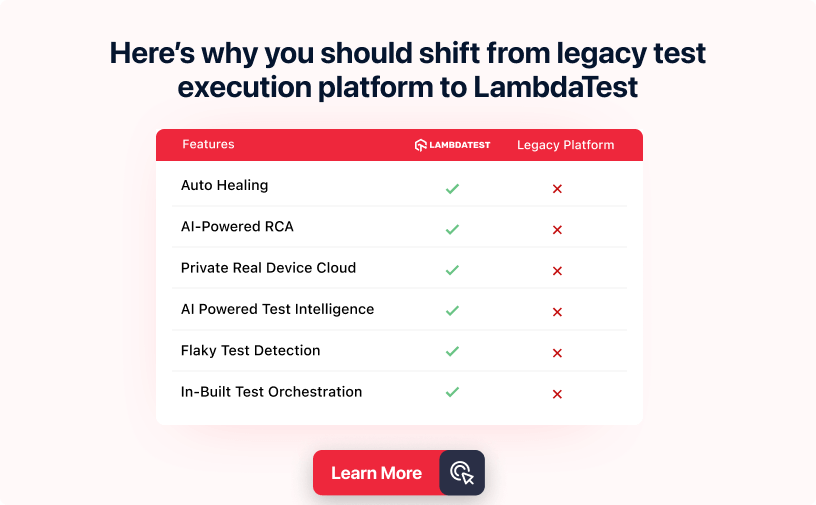

LambdaTest is an AI-native test orchestration and execution platform that lets you run manual and automated tests at scale across 3,000+ browser and OS combinations and 10,000+ real devices.

Since cross-browser compatibility is critical to user experience and a fundamental practice for testers, it’s often featured in test engineer interview questions to evaluate familiarity with both manual and automated testing approaches.
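
Automated cross-browser runs usually start from a browser/OS matrix that is executed in parallel. The Python sketch below builds such a matrix and runs it with a thread pool. The browser and platform lists are illustrative, the Safari-is-macOS-only rule is a stated assumption, and `run_smoke_test` is a hypothetical placeholder: a real version would open a Selenium remote session on a grid or cloud platform for each configuration.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "edge", "safari"]
PLATFORMS = ["Windows 11", "macOS Sonoma"]


def build_matrix(browsers, platforms):
    """Every browser/OS pair, skipping pairs that do not exist (assumes Safari is macOS-only)."""
    return [
        {"browser": browser, "platform": platform}
        for browser, platform in product(browsers, platforms)
        if not (browser == "safari" and not platform.startswith("macOS"))
    ]


def run_smoke_test(config):
    """Hypothetical placeholder for one remote browser session.

    A real version would start a remote WebDriver for `config`, run the checks,
    and quit the driver. Here it simply reports success.
    """
    return {**config, "passed": True}


def run_in_parallel(matrix, workers=4):
    """Run one session per configuration concurrently; results keep matrix order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_smoke_test, matrix))


results = run_in_parallel(build_matrix(BROWSERS, PLATFORMS))
for r in results:
    print(f"{r['browser']:8} {r['platform']:13} {'PASS' if r['passed'] else 'FAIL'}")
```

Threads fit here because each session mostly waits on a remote browser; the `workers` value would normally match the number of parallel sessions your grid or cloud plan allows.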

21. How Do You Perform Mobile Application Testing Using Appium?

Appium is a popular tool for automating mobile application testing.

Here’s how you can use it effectively:

- Setup: Install and configure Appium along with the required SDKs and dependencies for mobile platforms, such as Android Studio for Android or Xcode for iOS.

- Write Tests: Develop test scripts using Appium’s API. For example, you can use Appium's Java client to create a script that interacts with elements in your mobile application, such as clicking a login button or entering text into a field.

- Execute Tests: Run your tests on real devices or emulators/simulators. For instance, you might run tests on an Android emulator to check how your app performs in a simulated environment or on a real device to ensure compatibility.

- Analyze Results: Review the test results and logs to identify any issues. For example, if a test fails, the logs can help you understand whether it was due to a UI element not being found or a problem with the app's functionality.

Appium-related scenarios like these commonly appear in test engineer interview questions, not just for mobile automation testers, but also for web automation testers, as understanding mobile frameworks adds versatility and broader testing capabilities.
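
Much of the Setup step comes down to describing the target device and app as capabilities. The Python sketch below builds a capabilities dictionary using standard Appium W3C capability names (`platformName`, `appium:automationName`, `appium:deviceName`, `appium:app`, `appium:platformVersion`). The helper function, device names, and app paths are hypothetical; in a real test you would hand this dictionary to an Appium client library when opening a session.

```python
def appium_capabilities(platform, app_path, device_name, platform_version=None):
    """Build W3C-style capabilities for an Appium session (hypothetical helper)."""
    # Default automation driver per platform: UiAutomator2 for Android, XCUITest for iOS.
    automation = {"android": "UiAutomator2", "ios": "XCUITest"}
    key = platform.lower()
    if key not in automation:
        raise ValueError(f"unsupported platform: {platform}")
    caps = {
        "platformName": "Android" if key == "android" else "iOS",
        "appium:automationName": automation[key],
        "appium:deviceName": device_name,
        "appium:app": app_path,
    }
    if platform_version:
        caps["appium:platformVersion"] = platform_version
    return caps


android_caps = appium_capabilities("Android", "/path/to/app.apk", "emulator-5554")
print(android_caps)
```

Keeping capabilities in one helper makes it easy to point the same test at an emulator locally and at real devices in the cloud by changing only the arguments.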

22. What Are the Main Differences Between QA and QC?

Understanding the difference between Quality Assurance (QA) and Quality Control (QC) is essential for ensuring a comprehensive quality management approach.

While QA focuses on improving processes to prevent defects, QC is concerned with identifying and fixing defects in the final product. Knowing how these two work together can help optimize both product quality and development efficiency.

| Aspect | QA (Quality Assurance) | QC (Quality Control) |
|---|---|---|
| Focus | Ensures processes and practices prevent defects. | Identifies and fixes defects in the final product. |
| Activities | Setting up and maintaining standards, procedures, and guidelines. | Performing testing, defect management, and product inspections. |
| Objective | Prevent defects by improving processes and practices. | Detect defects in the final product and ensure it meets quality standards. |
| Approach | Process-oriented, aims to improve the overall development lifecycle. | Product-oriented, aims to verify the quality of the end product. |
| Examples | Developing a quality management system, process audits. | Conducting functional testing, regression testing, and defect tracking. |

23. How Do You Perform Security Testing for Web Applications?

Security Testing aims to identify and address vulnerabilities in web applications to protect against potential threats and attacks.

This process involves several key methods:

- Static Analysis: Analyze the source code of the application to detect security flaws before the application is run. Example: Checkmarx, SonarQube.

- Dynamic Analysis: Test the application while it is running to uncover vulnerabilities that might not be visible in the code alone. Example: OWASP ZAP, Burp Suite.

- Penetration Testing: Simulate real-world attacks to identify and assess security weaknesses that could be exploited by attackers. Example: Metasploit, Nessus.

- Vulnerability Scanning: Use automated tools to scan the application for known vulnerabilities and security issues. Example: Qualys, Nexpose.

- Review: Conduct thorough security reviews and audits of the application and its components to ensure overall security posture. Example: Manual code reviews, security audits.
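Dynamic analysis tools such as OWASP ZAP run many automated checks against a running application. The sketch below shows what one small check of that kind can look like in plain Java (11 or later, no external libraries). It requests a page and reports which commonly recommended security headers are missing from the response. The required-header list, the /login path, and the local stub server standing in for the application under test are all assumptions for illustration. This is not how ZAP or Burp Suite work internally, and it does not replace them.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class SecurityHeaderCheck {

    // Assumed policy for this sketch: every response must carry these headers.
    static final List<String> REQUIRED_HEADERS = List.of(
            "Content-Security-Policy",
            "X-Content-Type-Options",
            "Strict-Transport-Security");

    // Sends a GET request to the running application and lists the required headers it did not return.
    static List<String> missingHeaders(HttpClient client, URI url) throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(
                HttpRequest.newBuilder(url).GET().build(),
                HttpResponse.BodyHandlers.ofString());
        List<String> missing = new ArrayList<>();
        for (String header : REQUIRED_HEADERS) {
            // Header-name lookup in java.net.http is case-insensitive.
            if (response.headers().firstValue(header).isEmpty()) {
                missing.add(header);
            }
        }
        return missing;
    }

    // Hypothetical stand-in for the application under test; it deliberately omits HSTS.
    static HttpServer startStubApp() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/login", exchange -> {
            exchange.getResponseHeaders().set("Content-Security-Policy", "default-src 'self'");
            exchange.getResponseHeaders().set("X-Content-Type-Options", "nosniff");
            byte[] body = "<html>login</html>".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws Exception {
        HttpServer server = startStubApp();
        try {
            URI url = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/login");
            List<String> missing = missingHeaders(HttpClient.newHttpClient(), url);
            System.out.println("Missing security headers: " + missing);
        } finally {
            server.stop(0);
        }
    }
}
```

Running main should print Missing security headers: [Strict-Transport-Security], because the stub omits that header. Against a real test environment, point the check at an HTTPS URL, since browsers only honor Strict-Transport-Security on HTTPS responses.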

Questions about security testing techniques like these are common in test engineer interviews, especially when the role involves ensuring application security.

24. How Do You Handle Testing in an Agile Environment?

Handling testing in an Agile environment involves adapting to its iterative and collaborative nature:

- Collaboration: Engage with developers, product owners, and other stakeholders regularly to gain a clear understanding of requirements and priorities, ensuring alignment and effective testing.

- Iterative Testing: Conduct testing in each sprint or iteration. This allows you to provide timely feedback and address issues as they arise, keeping the development process agile and responsive.

- Automation: Leverage test automation to accommodate the frequent changes typical in Agile. Automation helps maintain consistent testing and provides continuous feedback throughout the development cycle.

- Adaptability: Remain flexible and adjust your testing strategies based on evolving requirements and feedback from the team. This adaptability ensures that testing remains relevant and effective in a dynamic environment.

These Agile testing practices are widely adopted in QA and are frequently covered in test engineer interview questions due to their importance in modern software development.

The intermediate-level test engineer interview questions listed above are curated to help both entry-level candidates and those with some hands-on experience prepare thoroughly for interviews.

As you advance, you’ll encounter more complex test engineer interview questions that are especially relevant for professionals with deeper expertise in automation, frameworks, and testing strategies.

Experienced-Level Test Engineer Interview Questions

Here, the focus shifts to topics essential for experienced test engineers. By exploring these advanced test engineer interview questions, you’ll gain a comprehensive understanding of complex testing frameworks, automation strategies, and QA processes, equipping you to tackle sophisticated testing challenges effectively.

25. What Is the Difference Between Smoke Testing and Sanity Testing?

Smoke testing and sanity testing are both crucial in the software testing process, each serving distinct purposes. Smoke testing ensures the basic functionality of the application is working, while sanity testing focuses on verifying specific components after changes.

The difference between these two is a recurring topic in test engineer interview questions.

Here’s a comparison:

| Aspect | Smoke Testing | Sanity Testing |
|---|---|---|
| Purpose | To check the basic functionality and stability of the application. | To verify that specific functionalities or bug fixes work as expected. |
| Scope | Broad, covers the critical functionalities of the application. | Narrow, focuses on specific areas related to recent changes or fixes. |
| When Performed | Early in the testing process, often after the initial build. | After bug fixes or specific changes have been implemented. |
| Objective | To ensure that the application is stable enough for more detailed testing. | To confirm that specific issues have been resolved and no new problems have been introduced. |
| Testing Depth | Shallow, preliminary checks. | Deep, focused checks on particular areas or functionalities. |
| Relation to BVT | Smoke Testing is also known as Build Verification Testing (BVT), focusing on verifying the critical functionalities of a new build. | Sanity Testing does not typically involve build verification, but ensures that specific fixes or changes work as intended. |

26. Explain the Concept of Continuous Testing in DevOps

Continuous testing is the practice of integrating testing into the DevOps pipeline to provide ongoing feedback throughout the development process. It is one of the most frequently asked test engineer interview questions, especially for roles aligned with DevOps or CI/CD environments.

This approach ensures that testing is not a one-time activity but an integral part of the entire software lifecycle.

Key aspects of continuous testing include:

- Automation: Automated tests are executed at various stages of the development lifecycle, such as during code commits, builds, and integrations. This helps in quickly identifying defects and issues as soon as they arise.

- Early Feedback: By continuously running tests, teams receive immediate feedback on the quality of the code, allowing for faster identification and resolution of problems.

- Faster Releases: Continuous testing supports more frequent and reliable software releases by integrating testing seamlessly into the continuous integration/continuous deployment (CI/CD) pipeline.

- Higher Quality: With continuous testing, teams can ensure that each change meets quality standards, leading to higher-quality software and fewer defects in production.

In essence, continuous testing helps teams maintain high-quality software while keeping up with the fast-paced demands of modern development and deployment cycles.

27. How Do You Implement Risk-Based Testing?

Risk-based testing focuses on prioritizing testing efforts based on potential risks associated with an application. It is a strategic topic that appears in many test engineer interview questions.

Here’s how to implement it effectively:

- Identify Risks: Analyze the application to uncover potential risks, considering factors such as functionality, complexity, and user impact. This helps pinpoint areas that may pose significant risks.

- Assess Risks: Evaluate the likelihood and impact of each identified risk to prioritize testing efforts. This prioritization determines which risks should be addressed first.

- Design Test Cases: Develop test cases that focus on high-risk areas. Ensure these cases thoroughly evaluate critical functionalities and features identified as high risk.

- Execute Tests: Perform tests based on the prioritized risk areas, addressing high-risk issues first to ensure they are thoroughly checked.

- Review and Adjust: Continuously review test results and adjust your testing strategy as needed. Update risk assessments and test cases based on new information or changes in the application.

28. Describe the Process of Performance Testing and the Tools Used

Performance testing aims to ensure that an application meets specific performance criteria and can handle expected loads efficiently.

It is a major topic in application testing and is frequently covered in test engineer interview questions, because test engineers need a solid understanding of performance metrics and tools.

Here’s a detailed process along with common tools used in performance testing:

- Define Objectives: Establish clear performance goals and criteria, such as acceptable response times, throughput, and resource usage. This sets the benchmarks for what constitutes successful performance.

- Design Test Scenarios: Develop test scenarios that mimic expected user load and usage patterns. These scenarios should reflect real-world conditions to accurately assess performance.

- Configure Environment: Set up the necessary testing environment, including test servers, network configurations, and performance testing tools. Ensure that the environment closely resembles the production environment to obtain valid results.

- Execute Tests: Run the performance tests under various conditions to measure key performance metrics such as response time, load handling, and system behavior under stress.

- Analyze Results: Review the performance data collected during testing to identify any bottlenecks, performance issues, or areas for improvement. Analyze metrics like response times, throughput, and resource utilization.

- Optimize: Based on the analysis, make adjustments to improve performance. This may involve optimizing code, improving infrastructure, or refining system configurations to address identified issues.

Tools Used:

- LoadRunner: A performance testing tool that helps simulate a large number of users and analyze system behavior under load.

- JMeter: An open-source tool for performance testing that supports various protocols and allows you to create and run performance tests.

- Gatling: An open-source load testing tool known for its scalability and detailed reports on performance metrics.

29. Explain the Architecture of a Test Automation Framework You Have Used.

In my experience, one of the test automation frameworks I've used is a Selenium-based framework. Questions about framework architecture come up frequently in test engineer interviews. Such a framework typically includes:

- Test Scripts: These scripts are written in programming languages like Java, Python, or C#. They define individual test cases, including steps to interact with web elements and verify expected outcomes. For instance, a script might navigate through a web application, fill out forms, and check if the results are as expected.

- Test Data: Managed separately from the test scripts, test data is often stored in external files like Excel, CSV, or databases. This separation allows for easier updates to test data without modifying the scripts. For example, different sets of input data can be used to test various scenarios.

- Libraries: The framework includes reusable libraries or utility classes that handle common tasks such as browser setup, element interaction, and logging. For example, a library might contain methods for clicking buttons or capturing screenshots, which are used across multiple test cases.

- Reporting: Tools or modules are integrated to generate detailed reports and logs of test execution. These reports include information about passed and failed tests, error messages, and execution times. Tools like TestNG or JUnit can be used for generating comprehensive test reports.

- Integration: The framework integrates with other tools to enhance its functionality. For example, it can be integrated with CI/CD tools like Jenkins for automated test execution during the build process and with version control systems like Git to manage changes in test scripts.

By leveraging these components, a Selenium-based framework provides a robust and scalable solution for automating web application testing, ensuring comprehensive coverage and efficient execution.

30. Describe a Challenging Automation Problem You Faced and How You Solved It.

Scenario questions like this are common in test engineer interviews, as they gauge problem-solving ability and practical experience with test automation tools and strategies.

- Problem and Challenge: Handling dynamic web elements in Selenium. These elements often changed attributes or position, making them difficult to interact with consistently.

- Solution:

  - Dynamic Locators: Used adaptable locators, like relative XPath based on stable attributes, to manage changes in web page structure.

    - Example: Replaced //button[@id='submit'] with //button[contains(@class, 'submit-btn')].

  - Explicit Waits: Applied explicit waits to handle elements that appear or change state asynchronously, using WebDriverWait and expected conditions.

    - Example (Selenium 4, Java): new WebDriverWait(driver, Duration.ofSeconds(10)).until(ExpectedConditions.visibilityOfElementLocated(By.id("dynamicElementId")));

  - Page Object Model (POM): Implemented POM to encapsulate elements and actions in separate classes, making the code more maintainable and reducing the impact of changes.

    - Example: Created a LoginPage class with methods for interacting with login elements.

These strategies effectively addressed the issue of dynamic web elements, enhancing the stability of the automation scripts.

31. How Do You Integrate Test Automation Into CI/CD Pipelines?

Integrating test automation into CI/CD pipelines streamlines the testing process and ensures that software quality is maintained throughout development.

This question comes up frequently in test engineer interviews.

Here's a step-by-step approach:

Integration Steps:

- Set Up a CI/CD Tool: Choose and configure a CI/CD tool like Jenkins, GitLab CI, or CircleCI to handle the build and deployment processes. This tool will manage the automation of code integration and deployment tasks.

- Automate Test Execution: Configure the pipeline to automatically run tests after each build or deployment. This ensures that any new code changes are immediately tested for issues.

  - Example: In Jenkins, set up a job to execute your test suite using a build script or a test runner command.

- Configure Reporting: Ensure that test results are collected and reported within the CI/CD pipeline. This allows for immediate visibility into test outcomes and facilitates quick feedback.

  - Example: Integrate reporting plugins in Jenkins or GitLab to generate test reports and display them on the pipeline dashboard.

- Monitor and Optimize: Continuously monitor the performance of the test automation within the CI/CD pipeline and make necessary adjustments to improve efficiency and reliability.

  - Example: Analyze test execution times and failure rates to optimize test suites and pipeline performance.

By following these steps, you can effectively integrate test automation into your CI/CD pipeline, ensuring that testing is an integral part of the development workflow.

32. How Do You Use Selenium WebDriver for Web Application Testing?

Using Selenium WebDriver for web application testing involves several key steps to automate interactions with web elements and verify application behavior. This topic features in many test engineer interview questions.

Here’s a concise overview:

Usage:

- Set Up WebDriver: Install and configure Selenium WebDriver for the browser you intend to test on (e.g., Chrome, Firefox). Ensure that the appropriate browser driver (e.g., ChromeDriver for Chrome) is set up and accessible.

  - Example: Download ChromeDriver and set its path in your test script or environment variables. With Selenium 4.6 and later, Selenium Manager can resolve a matching driver automatically.

- Write Scripts: Develop test scripts in a programming language such as Java, Python, or C#. These scripts will include commands to interact with web elements like clicking buttons, entering text, and navigating through pages.

  - Example: In Python, use driver.find_element(By.ID, 'loginButton').click() to click a login button.

- Execute Tests: Run your test scripts to perform automated testing on the web application. This will simulate user interactions and execute the defined test cases.

  - Example: Execute the script through your IDE or command line to start the test session.

- Validate Results: Review the application's behavior against the expected results. Verify that the application functions correctly and report any discrepancies or issues found during testing.

  - Example: Check if the login was successful by validating the presence of a user dashboard element, and log any test failures.

By following these steps, Selenium WebDriver enables effective automation of web application testing, providing efficient and reliable validation of application functionality.

33. Explain How You Use JUnit or TestNG for Test Management.

JUnit and TestNG are popular testing frameworks used for writing test scripts in Java. Understanding their usage is essential, and it is frequently asked about in test engineer interview questions.

Here’s how to use them effectively for test management:

JUnit/TestNG Usage:

- Test Annotations: Both frameworks use annotations to define the structure of tests.

  - JUnit: Uses annotations like @Test for test methods, @Before for setup, and @After for teardown (JUnit 4; JUnit 5 uses @BeforeEach and @AfterEach).

  - TestNG: Similar annotations include @Test, @BeforeMethod, @AfterMethod, and additional features like @DataProvider for parameterized tests.

- Test Suites: Organize tests into suites for efficient execution and reporting.

  - JUnit: In JUnit 4, use @RunWith(Suite.class) together with @Suite.SuiteClasses to specify which classes to include in the test suite; JUnit 5 uses @Suite with @SelectClasses or @SelectPackages.

  - TestNG: Define test suites and groups through XML configuration files (testng.xml) or annotations.

- Assertions: Use assertions to compare expected outcomes with actual results, ensuring that tests pass or fail as anticipated.

  - JUnit: Employ assertion methods such as assertEquals(), assertTrue(), assertFalse(), etc.

  - TestNG: Provides similar assertion methods to validate test results. Note that TestNG's assertEquals() takes the actual value first, while JUnit's takes the expected value first.

- Reporting: Both frameworks generate reports to review test results and coverage.

  - JUnit: Offers basic reporting features through integrated development environments (IDEs) or command-line tools.

  - TestNG: Provides detailed reports, including HTML and XML formats, which offer comprehensive views of test results.

34. What Is the Role of Jenkins in Test Automation?

Jenkins is a widely used tool in the CI/CD ecosystem and plays a crucial role in test automation. It’s one of the most important topics for testers and is frequently highlighted in test engineer interview questions.

Here’s its role:

- Continuous Integration: Jenkins automates the build and integration process, ensuring that code changes are integrated regularly and consistently. It includes running automated tests as part of the build process.

- Test Execution: Jenkins triggers automated test execution whenever a build is initiated. It integrates with various testing tools and frameworks, such as Selenium, JUnit, and TestNG, to execute tests and validate changes.

- Reporting: Jenkins provides detailed test results and metrics through various plugins and reporting tools. This helps in analyzing test outcomes, tracking test coverage, and identifying issues.

- Pipeline Management: Jenkins manages and orchestrates the entire CI/CD pipeline. It coordinates the sequence of steps, including build, test, and deployment, ensuring smooth and automated workflows.

35. How Do You Manage and Lead a Testing Team?

Managing and leading a testing team effectively involves several key strategies. This is a critical topic and is often covered in test engineer interview questions.

Here are some strategies:

- Goal Setting: Establish clear, achievable goals and objectives for the team to ensure everyone understands their targets and the overall vision.

- Task Assignment: Distribute tasks based on each team member’s skills and expertise, ensuring that the right people are working on the right tasks for optimal results.

- Support: Provide necessary resources, training, and support to enable team members to perform their tasks efficiently and stay updated with the latest testing tools and practices.

- Communication: Encourage open communication through regular meetings and updates, allowing the team to discuss progress, challenges, and any issues that arise.

- Motivation: Recognize and reward the team’s achievements to keep motivation and morale high, creating a positive and productive work environment.

36. What Strategies Do You Use to Ensure Thorough Test Coverage?

Ensuring thorough test coverage involves several strategic approaches. Every test engineer should be ready for this question, and it appears in most sets of test engineer interview questions.

Here are the strategies:

- Test Planning: Create a detailed test plan that outlines the scope, objectives, and specific areas to be tested, ensuring all parts of the application are addressed.

- Test Design Techniques: Utilize various test design techniques such as boundary value analysis, equivalence partitioning, and exploratory testing to cover different scenarios and edge cases effectively.

- Requirement Traceability: Link test cases directly to requirements and user stories to ensure that all specified functionality and business needs are thoroughly tested.

- Test Case Reviews: Regularly review and update test cases to incorporate new features, changes, or enhancements, ensuring that the testing remains relevant and comprehensive.

37. Describe Your Approach to Writing and Executing Test Plans

When writing and executing test plans, a structured approach ensures thorough and effective testing. This is one of the most commonly asked test engineer interview questions.

Here’s the approach:

- Planning: Establish the scope, objectives, resources, and schedule for testing. This initial step outlines what needs to be tested, the goals of testing, and how it will be executed.

- Test Case Development: Develop detailed test cases derived from requirements and user stories. This involves specifying the steps, expected results, and conditions for each test to ensure comprehensive coverage.

- Execution: Run the test cases and document the results. Ensure that all planned tests are executed as per the test plan, and carefully record outcomes to track progress and identify any issues.

- Reporting: Summarize test results, report any defects found, and communicate the findings to stakeholders. This includes providing insights into the quality of the product and any necessary actions for improvement.

38. How Do You Measure the Success of Your Testing Efforts?

To evaluate the effectiveness and success of your testing efforts, consider these key metrics:

- Defect Density: Track the number of defects found per unit of application size. This metric helps assess the quality of the software and the effectiveness of the testing process.

- Test Coverage: Determine the percentage of the application’s functionality covered by test cases. This ensures that all critical areas are tested and helps identify any gaps.

- Test Execution Rate: Monitor the ratio of test cases executed versus those planned. A high execution rate indicates that the testing process is on track.

- Defect Resolution Time: Measure the average time taken to resolve and close defects. Shorter resolution times reflect a more efficient bug-fixing process.

- Stakeholder Satisfaction: Gather feedback from stakeholders regarding their satisfaction with the quality and effectiveness of the testing. This provides insight into how well the testing efforts meet business needs and expectations.

39. Explain the Concept of Service Virtualization in Testing

Service Virtualization is a technique used to simulate the behavior of services or components that are either not available or difficult to access during testing.

By creating virtual services that mimic the behavior of real services, testers can perform testing in isolation from external dependencies.

This approach helps improve test coverage and enables the testing of scenarios that involve interactions with services that may be under development, unstable, or costly to access.

Key Points:

- Simulate Behavior: Virtual services replicate the responses and behaviors of real services, allowing tests to proceed as if they were interacting with the actual components.

- Testing in Isolation: Enables testing of application components without relying on the availability or stability of external services.

- Enhanced Coverage: Facilitates testing of edge cases and scenarios involving third-party services, which might otherwise be challenging to test.

- Improved Efficiency: Reduces the need for complex setups and dependencies, speeding up the testing process and making it more efficient.

40. What Are the Key Considerations for Testing APIs?

API Testing focuses on evaluating the performance, functionality, and security of APIs to ensure they meet the desired requirements.

Here are the key considerations:

- Functionality: Verify that the API performs the intended functions correctly and returns the expected responses. This includes checking endpoints, request methods (GET, POST, PUT, DELETE), and response formats (JSON, XML).

- Performance: Assess the API's performance under different conditions, including load and stress testing. Ensure it can handle the expected number of requests and responses within acceptable time limits.

- Security: Test for security vulnerabilities and verify that proper authentication and authorization mechanisms are implemented. Ensure that sensitive data is protected and that the API is resistant to common attacks.

- Error Handling: Check how the API handles errors, ensuring it returns meaningful error messages and appropriate HTTP status codes. Validate that the API can gracefully manage unexpected inputs and conditions.

- Documentation: Confirm that the API documentation is accurate, complete, and easy to understand. It should provide clear information on how to use the API, including endpoints, request parameters, and response formats.
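The following minimal sketch makes the functionality and error-handling considerations for API testing concrete. It uses plain Java (11 or later, no external libraries) and starts a hypothetical stub user API on a local port. It then checks two things:

- A valid request returns 200 with a JSON body containing the requested record.
- An unknown ID returns 404 with an error message.

The /users endpoint, its payloads, and the string-based body checks are assumptions for illustration. A real suite would target your own test environment and would typically use a JSON parser and a library such as REST Assured.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class ApiChecks {

    // Hypothetical stub API: GET /users/1 returns a user, any other ID returns 404.
    static HttpServer startStubApi() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/users/", exchange -> {
            if ("/users/1".equals(exchange.getRequestURI().getPath())) {
                sendJson(exchange, 200, "{\"id\":1,\"name\":\"Ada\"}");
            } else {
                sendJson(exchange, 404, "{\"error\":\"user not found\"}");
            }
        });
        server.start();
        return server;
    }

    // Writes a JSON body with the given HTTP status code.
    static void sendJson(HttpExchange exchange, int status, String json) throws IOException {
        byte[] body = json.getBytes(StandardCharsets.UTF_8);
        exchange.getResponseHeaders().set("Content-Type", "application/json");
        exchange.sendResponseHeaders(status, body.length);
        try (OutputStream os = exchange.getResponseBody()) {
            os.write(body);
        }
    }

    static HttpResponse<String> get(HttpClient client, URI uri) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString());
    }

    // Runs the checks against the stub and returns a description of every failed check.
    static List<String> runChecks() throws IOException, InterruptedException {
        HttpServer server = startStubApi();
        List<String> failures = new ArrayList<>();
        try {
            HttpClient client = HttpClient.newHttpClient();
            String base = "http://127.0.0.1:" + server.getAddress().getPort();

            // Functionality: a valid request returns 200, a JSON content type, and the requested record.
            HttpResponse<String> found = get(client, URI.create(base + "/users/1"));
            if (found.statusCode() != 200) {
                failures.add("GET /users/1: expected 200, got " + found.statusCode());
            }
            String type = found.headers().firstValue("Content-Type").orElse("");
            if (!type.startsWith("application/json")) {
                failures.add("GET /users/1: expected JSON, got '" + type + "'");
            }
            if (!found.body().contains("\"id\":1")) {
                failures.add("GET /users/1: response does not contain id 1");
            }

            // Error handling: an unknown ID returns 404 with a meaningful error message.
            HttpResponse<String> missing = get(client, URI.create(base + "/users/999"));
            if (missing.statusCode() != 404) {
                failures.add("GET /users/999: expected 404, got " + missing.statusCode());
            }
            if (!missing.body().contains("error")) {
                failures.add("GET /users/999: response has no error message");
            }
        } finally {
            server.stop(0);
        }
        return failures;
    }

    public static void main(String[] args) throws Exception {
        List<String> failures = runChecks();
        System.out.println(failures.isEmpty() ? "All API checks passed" : "Failures: " + failures);
    }
}
```

Running main should print All API checks passed. Collecting failures in a list, instead of stopping at the first one, reports every broken expectation in a single run. Performance, security, and documentation checks are outside the scope of this sketch.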

Conclusion

Preparing for a test engineer interview requires a solid understanding of core testing concepts and hands-on experience. Freshers should focus on basics like test plans, test cases, and essential testing tools, while also understanding the distinction between QA and QC.

For experienced professionals, it's crucial to highlight advanced topics like automation in CI/CD pipelines, continuous testing in DevOps, and risk-based testing.

Be prepared to answer common test engineer interview questions, especially those related to tools like Selenium WebDriver, JUnit/TestNG, and Appium, as well as questions about your ability to manage test data and maintain scripts.

Clear, concise answers will help you stand out and demonstrate your readiness for the role. Best of luck with your interviews and career growth!
