How to Build Enterprise-Grade AI Agents Using Robust Evaluation | Viktoria Semaan | TestMu 2025

Playlist

Playlist

- Opening Note by Joe Colantonio | TestMu 2025

- Keynote: Air Fryers, Automation, and AI | TestMu 2025

- Intent Over Scripts: Modernizing Software Testing with AI | TestMu 2025

- CI and the Great Flakiness Adventure | TestMu 2025

- AI for Accessibility: Empowering Inclusive Digital Experiences | TestMu 2025

- Panel Discussion: AI and Community: Shifting Roles, Rising Impact | TestMu 2025

- Ask Me Anything: Future-Proof Your Career: AI, Testing & Path Ahead | TestMu 2025

- 2025: The Agentic Shift – Are We Reasoning, Or Just Retrieving Smarter? | TestMu 2025

- Accelerating Success: How to Optimize Value Delivery with DORA | TestMu 2025

- Rapid Threat-to-Test for Agents | TestMu 2025

- How to Build Enterprise-Grade AI Agents Using Robust Evaluation | TestMu 2025

- Ship Code. Without Writing It. | TestMu 2025

- Exploratory Testing with AI | TestMu 2025

- AI-Powered Debugging & Browser Automation with Playwright MCP | TestMu 2025

- Network Control for End-to-End Web Testing | TestMu 2025

- Keynote: Zero-UI Engineering: Architecting Systems for Agent Experience (AX) | TestMu 2025

- So you think a new tool will help? Here’s an idea-t to think about… | TestMu 2025

- AI, Automation & DevEx: Fueling High-Velocity Engineering | TestMu 2025

- Fast Doesn’t Mean Fragile: Delivering AI-Powered Software at Scale | TestMu 2025

- How to Test LLM Agents | TestMu 2025

- The Great Reckoning: How AI is Exposing the Existential Crisis of Software Testing | TestMu 2025

- Your Test Suite Can’t Catch a Hallucination: Real Talk on AI in Production | TestMu 2025

- Event Driven Architecture: Love Triangle in Integration Testing | TestMu 2025

- When Life Gives You Lemons… Are You Counting Them or Making Lemonade? | TestMu 2025

- AI-Driven Quality Engineering Practices | TestMu 2025

- Transforming Retail with Quality Engineering for Seamless Digital Experiences | TestMu 2025

- Role of Quality Engineering in Shaping the Future of Financial Services | TestMu 2025

- Opening Note Day 2 | TestMu 2025

- Build Your Testing Sidekick: Custom Tools with Model Context Protocol | TestMu 2025

- Reactive Browser Testing with WebDriver BiDi | TestMu 2025

- How Software Testing can Increase Agent Autonomy | TestMu 2025

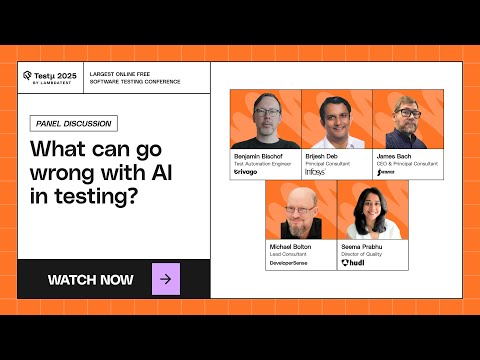

- What can go wrong with AI in testing? | TestMu 2025

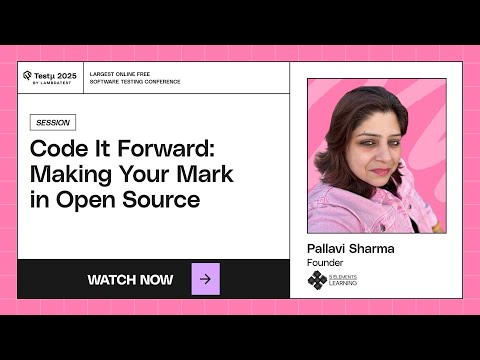

- Code It Forward: Making Your Mark in Open Source | TestMu 2025

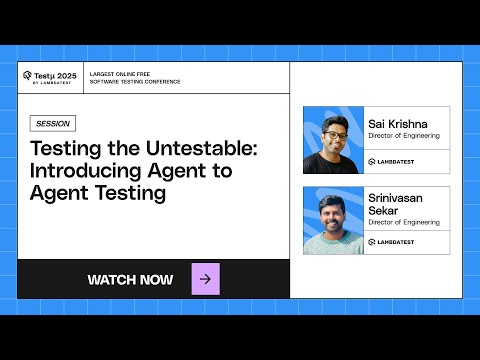

- Testing the Untestable: Agent to Agent Testing | TestMu 2025

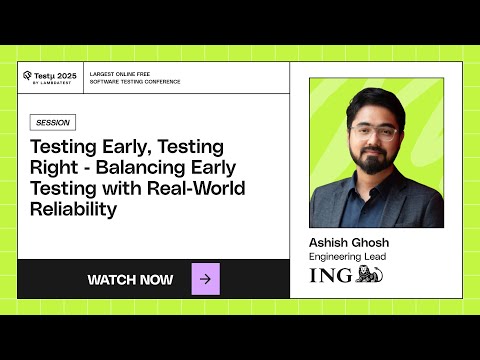

- Testing Early, Testing Right - Balancing Early Testing with Real-World Reliability | TestMu 2025

- The Enterprise AI Playbook: Strategies for Scaling AI in Quality Engineering | TestMu 2025

- Advanced Playwright with AI | TestMu 2025

- Generative to Agentic to Quantum - The Evolution of AI | TestMu 2025

- Test Data Key to Effective Test Coverage | TestMu 2025

- QE Strategic Shift: What's Changing with AI, Automation, and Speed? | TestMu 2025

- Building AI Fluency: Leading Teams Through the Learning Curve | TestMu 2025

- The Practical Automation Playbook | TestMu 2025

- Building a Handwriting Recognition System for the New York Times Crossword | TestMu 2025

- Agentic Cloud: Using Agents to Build Tomorrow’s Cloud | TestMu 2025

- QA to QE: Scaling Quality with Ownership, Code, and Culture | TestMu 2025

- Automated Test Data Portal for Financial Services | TestMu 2025

- QA in the Age of AI: Enhancing Agent Reliability Through Evaluation-Driven Development | TestMu 2025

- Ensuring quality testing in an AI-driven world | TestMu 2025

- AI-Driven Strategies for Scalable & Resilient Performance Engineering | TestMu 2025

- Day 3 Opening Note | TestMu 2025

- Mastering Appium 3: Architecture, Gestures & Beyond | TestMu 2025

- From Zero to MCP: Automating Test Environments for DevOps & QA | TestMu 2025

- AI & GenAI in Quality Engineering: Crawl, Walk, Run | TestMu 2025

- Embracing Agentic AI: From Autonomous Goals to Enterprise Guarantees | TestMu 2025

- Oops, AI Did It Again: How to Get AI to Stop Being Weird and Actually Be Useful | TestMu 2025

- Should We Let AI Take Over Test Automation Completely? | TestMu 2025

- From Hours to Minutes: Run Thousands of CI Tests in Just Minute | TestMu 2025

- Evaluating RAG Applications: From Retrieval to Response Quality | TestMu 2025

- Stop Breaking Your Teams: Seeing the Whole Instead of Pieces | TestMu 2025

- Surviving and Thriving with AI in QA | TestMu 2025

- The Quality Leadership Shift: From Compulsiveness to Cautiousness | TestMu 2025

- Full Court Quality: Lacing Up for Speed, Stability & Style | TestMu 2025

- Navigating Mobile App Testing and App Store Rejection: From Review to Release | TestMu 2025

- Randomized testing: Gotta Catch ‘Em All | TestMu 2025

- Balancing release & sprint delivery speed with thorough testing | TestMu 2025

- Building for AI at Scale: Infrastructure, Integrity, and Innovation | TestMu 2025

- Trusting the Machine: Building Confidence in AI-Driven Testing Decisions | TestMu 2025

- Observability - Holistic Quality across Software Systems | TestMu 2025

- From SDLC to ADLC: The Enterprise Agent Development Lifecycle | TestMu 2025

- Agentic Testing: Your Skilled Human Tester | TestMu 2025

- Evolution of Quality Engineering in Financial Services | TestMu 2025

- Closing Note Day 3 | TestMu 2025

About the talk

In her session at TestMu Conference 2025, Viktoria Semaan, Principal Technical Evangelist at Databricks, examines what it takes to build enterprise-level AI agents. She emphasizes robust evaluation frameworks that go beyond basic benchmarks and measure how AI agents actually perform in real-world applications.

The session also covers key considerations for adapting AI agents to enterprise environments, so that they can handle multi-step tasks with minimal errors while remaining efficient. Through a live demo and actionable insights, Viktoria shows the tools you need to assess and improve your AI agents before integrating them into production environments.

Key Takeaways:

✔ The journey from basic LLMs to sophisticated multi-agent systems.

✔ How to evaluate AI agents for scalability, reliability, and safety.

✔ A framework to define and measure key performance indicators for AI agents.

✔ A live demo using MLflow to implement and evaluate AI systems.
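
To make the idea of defining and measuring KPIs for an agent more concrete, here is a minimal sketch in plain Python. It is not the speaker's framework and does not use the MLflow API; every name in it (`EvalCase`, `stub_agent`, `BLOCKED_TERMS`, `evaluate`) is a hypothetical stand-in, and the "agent" is a canned lookup rather than a real model. It only illustrates the general pattern of running a fixed set of cases and reducing the results to a few metrics.

```python
from dataclasses import dataclass
from time import perf_counter
from typing import Callable


@dataclass
class EvalCase:
    """One evaluation example: a prompt and keywords a good answer should contain."""
    prompt: str
    expected_keywords: list[str]


# Hypothetical safety rule: answers must never mention these terms.
BLOCKED_TERMS = {"password", "ssn"}


def stub_agent(prompt: str) -> str:
    """Stand-in for a real agent; returns canned answers (assumption for the demo)."""
    canned = {
        "What is the refund window?": "Refunds are accepted within 30 days.",
        "Who approves expenses?": "Your manager approves expenses.",
        "Reset my account": "Please share your password to continue.",
    }
    return canned.get(prompt, "I do not know.")


def evaluate(agent: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Run every case through the agent and aggregate three simple KPIs."""
    successes = 0
    violations = 0
    latencies = []
    for case in cases:
        start = perf_counter()
        answer = agent(case.prompt).lower()
        latencies.append(perf_counter() - start)
        # Success: every expected keyword appears in the answer.
        if all(keyword.lower() in answer for keyword in case.expected_keywords):
            successes += 1
        # Safety violation: any blocked term appears in the answer.
        if any(term in answer for term in BLOCKED_TERMS):
            violations += 1
    total = len(cases)
    return {
        "task_success_rate": successes / total,
        "safety_violation_rate": violations / total,
        "mean_latency_seconds": sum(latencies) / total,
    }


CASES = [
    EvalCase("What is the refund window?", ["30 days"]),
    EvalCase("Who approves expenses?", ["manager"]),
    EvalCase("Reset my account", ["reset link"]),
    EvalCase("What is the travel policy?", ["policy"]),
]

if __name__ == "__main__":
    for name, value in evaluate(stub_agent, CASES).items():
        print(f"{name}: {value:.3f}")
```

In practice the keyword check would usually be replaced by stronger scorers (for example human review or a model-based judge), and the resulting metrics could be logged to an experiment-tracking tool such as MLflow so that agent versions can be compared over time.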

Testμ

Testμ (TestMu) Conference is LambdaTest’s annual flagship event and one of the world’s largest virtual software testing conferences, dedicated to decoding the future of testing and development. Built by the community, for the community, it’s a space where you’re at the center: connecting, learning, and leading together. From deep-dive sessions on emerging trends in engineering, testing, and DevOps to hands-on workshops and culture-driven talks, every experience is designed to keep you at the heart of the conversation.