What Is Agentic AI Testing and Why Does It Matter?

Ninad Pathak

Posted On: November 7, 2025


Agentic AI refers to autonomous artificial intelligence systems that can perceive their environment, make decisions, and take actions to achieve specific goals without constant human intervention. These AI agents use large language models, machine learning, and contextual understanding to operate independently while adapting to changing conditions.

In current software development workflows, product managers use AI for planning and developers use AI to autocomplete code. Testers, however, have been left behind because no agentic AI testing framework existed until recently.

Agentic AI testing changes that. AI agents write tests, execute them automatically, and heal them when the UI changes. QA teams move from babysitting test scripts to giving natural language direction to intelligent assistants.

Overview

Agentic AI testing brings intelligence and autonomy to quality assurance. It helps software teams move beyond static automation by using AI agents that understand context, make independent decisions, and adapt to changes.

How Agentic AI Testing Works

Agentic AI testing functions by enabling autonomous actions, intelligent understanding, and continuous learning throughout the testing lifecycle.

- Autonomous Operation: Agentic AI generates and runs tests without manual scripting. It understands requirements and user flows, then creates the right tests automatically.

- Intelligent Decision-Making: AI agents observe changes, interpret their impact, and adjust their testing strategy to maintain accuracy.

- Continuous Improvement: With every test cycle, the system learns from outcomes and refines its approach for better future performance.

- Beyond Execution: Agents can manage the full testing process from test creation to execution, analysis, and reporting.

Key Benefits of Agentic AI Testing

Agentic AI enhances testing efficiency, reliability, adaptability, and scalability across evolving software environments.

- Speed and Efficiency: Cuts testing time by automating repetitive work and accelerating feedback cycles.

- Improved Coverage: Explores critical paths and hidden scenarios to detect issues early.

- Adaptability: Keeps tests running reliably by automatically updating them when the software evolves.

- Scalability: Supports large-scale, continuous testing across teams and environments.

TABLE OF CONTENTS

- What is Agentic AI Testing in Quality Assurance?

- How Does Agentic AI Testing Actually Work?

- What Are The Benefits of Agentic AI for Software Testing?

- What Are the Challenges of Agentic Software Testing?

- What Are the Key Use Cases for Agentic AI Testing?

- How to Start Using Agentic AI Testing in Your Organization?

- Why Does LambdaTest Lead the Agentic AI Testing Market?

- What Does the Future Hold for Agentic AI Testing?

- How to Prepare Your Organization for Agentic AI Software Testing?

- Frequently Asked Questions (FAQs)

What is Agentic AI Testing in Quality Assurance?

Agentic AI testing uses autonomous AI agents to manage software quality assurance from start to finish. These agents can generate test cases, run them, and adapt to changes without manual scripting. They use large language models and generative AI to simulate real-world scenarios quickly, testing applications with more intelligence and flexibility than standard automation methods.

Agentic testing differs from test automation in several ways.

AI agents generate, execute, and adapt tests without manual scripting. Test logic updates in real time when user interfaces, APIs, or workflows change. The result is less maintenance and fewer test failures. Agents understand application behavior, so tests reflect user intent rather than just following predefined steps.

The approach works well for continuous, large-scale testing across complex systems like ERP platforms or AI-powered applications. Testers can move from creating tests to overseeing, analyzing, and guiding agents when human input is needed.

How Does Agentic AI Testing Actually Work?

Agentic AI in software testing uses autonomous AI agents to handle the entire quality assurance process. These agents write tests, run them, and fix them when things change without manual scripting.

Standard automation follows rigid scripts. When development teams change a button or rename a field, the test breaks and testers must manually update the script.

Agentic testing works differently. The AI uses machine learning algorithms and large language models to understand applications. It recognizes what each element does based on context, not just hard-coded selectors or coordinates.

Here’s a real example. Developers change the “Submit” button to “Continue” and move it to the bottom of the page. A script-based automated test that looks for the old label or position fails immediately. An agentic system recognizes the button’s purpose through vision models that interpret the screen in context, so the test adapts and keeps running.
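To make the “Submit” to “Continue” example concrete, here is a minimal sketch of intent-based element matching. It is an illustration only, not how any particular platform implements self-healing: the `Element` type, the `SUBMIT_INTENT` synonym set, the scoring weights, and the `2.5` threshold are all assumptions chosen for this example, and a real agent would rely on vision or language models rather than a fixed word list.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional


@dataclass
class Element:
    """Simplified UI element (hypothetical; a real agent reads the DOM or a screenshot)."""
    element_id: str
    role: str      # e.g. "button" or "link"
    label: str     # visible text
    in_form: bool  # True if the element sits inside the form under test


# Hypothetical synonym set standing in for the intent "advance the form".
SUBMIT_INTENT = {"submit", "continue", "next", "send", "confirm"}


def find_by_intent(elements: List[Element], original_id: str,
                   original_label: str) -> Optional[Element]:
    """Return the element that best matches the original target's purpose."""
    # Fast path: the original locator still works.
    for el in elements:
        if el.element_id == original_id:
            return el

    # Fallback: score each candidate on contextual signals.
    def score(el: Element) -> float:
        s = 0.0
        if el.role == "button":
            s += 1.0
        if el.in_form:
            s += 1.0
        if el.label.lower() in SUBMIT_INTENT:
            s += 1.0
        # Small bonus for textual similarity to the old label.
        s += SequenceMatcher(None, el.label.lower(), original_label.lower()).ratio()
        return s

    best = max(elements, key=score, default=None)
    # Assumed threshold: refuse to "heal" when no candidate is convincing.
    if best is not None and score(best) >= 2.5:
        return best
    return None


page_after_change = [
    Element("nav-home", "link", "Home", False),
    Element("btn-continue", "button", "Continue", True),
    Element("btn-cancel", "button", "Cancel", True),
]
match = find_by_intent(page_after_change, "btn-submit", "Submit")
print(match.element_id if match else "no match")
```

Returning `None` when nothing scores well is a deliberate choice in this sketch: a healed test that clicks the wrong element is worse than a test that fails and asks for review.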

The technology works through three main capabilities:

- Perception: Agents analyze UI elements, APIs, and data flows in real time

- Decision-making: They determine what needs testing based on risk and past failures

- Action: They generate tests, run them, and heal broken scripts automatically
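The three capabilities above can be sketched as a small perceive, decide, act loop. This is a simplified illustration under stated assumptions: the `Area` type, the risk formula (a fixed bonus for changed areas plus past failures plus a business weight), and the `run_test` callback are hypothetical and stand in for whatever signals and models a real platform uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Area:
    name: str
    changed: bool         # perception: did this area change in the latest build?
    past_failures: int    # history: failures recorded in earlier cycles
    business_weight: int  # hypothetical 1-5 criticality score


def perceive(build_diff: Set[str], history: Dict[str, int],
             weights: Dict[str, int]) -> List[Area]:
    """Combine the current diff with failure history into one view per area."""
    return [
        Area(name, name in build_diff, history.get(name, 0), weight)
        for name, weight in weights.items()
    ]


def decide(areas: List[Area], budget: int) -> List[str]:
    """Rank areas by an assumed risk score and keep the top `budget` of them."""
    def risk(a: Area) -> int:
        return (3 if a.changed else 0) + a.past_failures + a.business_weight

    ranked = sorted(areas, key=risk, reverse=True)
    return [a.name for a in ranked[:budget]]


def act(selected: List[str], run_test: Callable[[str], bool]) -> Dict[str, bool]:
    """Run the chosen tests and collect pass/fail results."""
    return {name: run_test(name) for name in selected}


areas = perceive(
    build_diff={"search"},
    history={"checkout": 4},
    weights={"checkout": 5, "search": 3, "profile": 1},
)
plan = decide(areas, budget=2)
results = act(plan, run_test=lambda name: name != "checkout")
print(plan, results)
```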

What Are The Benefits of Agentic AI for Software Testing?

- Reduced Test Maintenance: Autonomous self-healing eliminates the endless cycle of fixing broken scripts after UI updates. Organizations see a 60-80% drop in maintenance work. QA engineers focus on exploratory testing and strategy instead of script repair.

- Faster Release Cycles: Teams running pilot programs report an 85% reduction in manual effort for creating initial test cases. Some cut testing time in half for certain requirement types, with junior engineers working at senior engineer speeds thanks to AI assistance.

- Increased Test Coverage: Agents expand coverage without expanding headcount by catching edge cases that manual testers miss. They scan codebases, analyze user workflows, and study past failures to create test scenarios covering paths previously unconsidered.

- Better Integration and Scale: The technology connects smoothly with Jenkins, GitHub Actions, and GitLab CI through standard APIs and webhooks. Teams run hundreds of tests simultaneously with no extra staff, scaling horizontally without linear cost increases.

- Enhanced QA Roles: Testers move from script maintainers to quality strategists, spending time on activities requiring human judgment like analyzing complex user flows, thinking through business logic, and identifying high-risk areas.

What Are the Challenges of Agentic Software Testing?

- Inconsistent Results: Agentic systems are non-deterministic and can produce different results from run to run, even with identical inputs. This unpredictability complicates debugging and requires comprehensive logging of all agent actions, prompts, and outputs for auditing and improvement.

- Accuracy Degradation: Performance degrades when data patterns change. For software where new trends are constant, agents can produce false positives or miss real bugs due to outdated training. Regular retraining, recalibration, and close monitoring of performance metrics are essential.

- Legacy System Compatibility: Older systems usually lack the standardization required for implementing agentic testing. You need upfront investment in proper test automation foundations, clean data sources, and solid reporting before implementing agentic testing.

- Sensitive Data Exposure: AI agents need access to databases and systems containing sensitive information. Rigorous controls, including encryption, access management, and regular security monitoring, are essential, with privacy by design principles built into agent implementation from day one.

- Trust in AI Decisions: AI operates as a black box even to its creators. Unpredictability in decision-making and potential for hallucinations create trust issues. The human-in-the-loop (HITL) concept becomes essential, using agentic platforms to accelerate human testers rather than replace them.

- Skill Gaps: Testers struggle with AI-driven systems even though AI uses natural language. How instructions are phrased significantly affects AI reactions, making understanding of AI basics extremely important. Investment in training and foundational AI literacy helps build practical skills for working with agent systems.

- High Infrastructure Investment: Agentic AI testing demands significant computational resources. High-performance GPUs, TPUs, and scalable cloud services are expensive. Modern platforms are increasingly optimized to run efficiently on standard CPUs, but teams still need to plan for compute costs and confirm that their infrastructure can support the load.
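One practical way to get a handle on the first challenge in this list, inconsistent results, is to run the same check several times and measure how often the agent agrees with itself. The sketch below assumes a hypothetical `run_agent` callable that returns a verdict string; the stubbed agent and the five-run default are illustrative choices, not a recommendation from any vendor.

```python
from collections import Counter
from typing import Callable, List, Tuple


def verdict_agreement(run_agent: Callable[[str], str], test_input: str,
                      runs: int = 5) -> Tuple[str, float, List[str]]:
    """Run the same input repeatedly; return the majority verdict and its share."""
    verdicts = [run_agent(test_input) for _ in range(runs)]
    top_verdict, top_count = Counter(verdicts).most_common(1)[0]
    return top_verdict, top_count / runs, verdicts


# Stub agent that replays a fixed sequence, standing in for a real non-deterministic one.
_replay = iter(["pass", "pass", "fail", "pass", "pass"])
majority, agreement, raw = verdict_agreement(lambda _: next(_replay), "login flow")
print(majority, agreement)
```

A low agreement score is a signal to inspect the logged prompts and outputs for that test before trusting either verdict.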

What Are the Key Use Cases for Agentic AI Testing?

- Eliminating Flaky Tests: Flaky tests (tests that inconsistently pass or fail without code changes) plague even tech giants. Agentic testing systems eliminate this problem through reliable, sanitized infrastructure. For instance, Dashlane, a password management platform, achieved a 99.9% reduction in flaky tests after adopting agentic testing infrastructure.

- Accelerating Test Execution Speed: Slow test execution creates bottlenecks in CI/CD pipelines. Agentic testing platforms dramatically reduce execution time through intelligent test orchestration and parallel execution. Boomi, an integration platform provider, reduced test execution time by 78%, cutting its full test suite from 9.5 hours down to just 2 hours.

- Scaling Test Coverage Across Teams: Organizations with multiple development teams struggle to maintain independent testing without interference. Agentic testing enables massive scale while preserving team autonomy. Raiffeisen Bank International now runs over 8,000 daily tests across 40+ teams. The bank’s custom enterprise architecture with sub-organizations provides each team complete independence while sharing underlying infrastructure, including secure access to private environments and coverage across 30+ devices and multiple browsers.

- Handling High-Volume Testing at Scale: Organizations need infrastructure that can handle millions of tests without creating bottlenecks or requiring proportional increases in engineering staff. Best Egg, a fintech platform, executes 2.7 million automation tests with zero bottlenecks. Their testing infrastructure handles everything from personal loans to financial health platforms across diverse devices, including unusual platforms like smart fridges and gaming consoles, where customers access financial services.

- Supporting Rapid Release Cycles: Organizations pushing frequent releases need testing infrastructure that keeps pace without creating bottlenecks. Agentic platforms eliminate device lab management overhead and enable instant scaling. KAYAK, for instance, streamlined its release cycles by replacing multiple device labs in various locations that caused connectivity issues, node failures, and unpredictable downtimes.

- Ensuring Accessibility Compliance: Digital accessibility requirements are becoming mandatory across regions. Agentic testing platforms automate accessibility validation against standards like WCAG, ADA, and the European Accessibility Act. Transavia, a European airline, adopted accessibility automation to comply with multiple accessibility standards, ensuring their digital content is inclusive and accessible to all users while maintaining their testing velocity.

- Reducing Infrastructure Costs: Maintaining in-house testing infrastructure creates significant ongoing expenses. Agentic cloud-based testing platforms eliminate these costs while improving performance. Emburse, a spend management company, reduced infrastructure costs by 50% while simultaneously achieving 20% faster test execution, eliminating the burden of managing multiple Selenium grids and refocusing their efforts on higher-value use-cases.

How to Start Using Agentic AI Testing in Your Organization?

Implementation success depends on following a structured approach.

Step 1: Understand the Current System

Document what slows the team down. Maybe it’s the hours spent weekly fixing broken tests after UI changes, or regression suites taking days to run. Application complexity matters because frequent UI updates or tangled integration points indicate where agentic testing delivers the biggest wins.

Document current platforms for test creation, execution, and reporting. Find bottlenecks where manual work slows things down. Pay attention to areas where test maintenance consumes significant engineering time.

Step 2: Set Measurable Goals

“Better testing” is too vague to act on. Set specific, measurable goals instead: shipping features twice as fast, cutting the bug escape rate in half, or freeing up 10 hours per week of manual work.

Connect metrics to business outcomes. Faster regression cycles enable weekly instead of monthly releases. Better defect detection means fewer support tickets and happier customers.
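Goals like these are easier to track when the arithmetic is written down. The helpers below are a minimal sketch: `bug_escape_rate` uses one common definition (bugs found after release divided by all bugs found), and the sample numbers are made up for illustration rather than taken from any real team.

```python
def bug_escape_rate(escaped: int, caught_before_release: int) -> float:
    """Share of all known bugs that reached production."""
    total = escaped + caught_before_release
    return escaped / total if total else 0.0


def goal_met(baseline: float, current: float, target_reduction: float) -> bool:
    """True if `current` is at least `target_reduction` (0 to 1) below `baseline`."""
    if baseline == 0:
        return current == 0
    return (baseline - current) / baseline >= target_reduction


# Illustrative numbers only.
before = bug_escape_rate(escaped=10, caught_before_release=40)
after = bug_escape_rate(escaped=4, caught_before_release=36)
print(before, after)

# "Cut weekly maintenance in half": 20 hours down to 10 meets a 50% target.
print(goal_met(baseline=20, current=10, target_reduction=0.5))
```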

Step 3: Pick The Right Agentic AI Testing Platform

Not all agentic testing platforms are equal. Some vendors add “AI-powered” labels to existing automation platforms without substantive changes.

Look for platforms like LambdaTest that are built specifically for autonomous testing. Such a platform generates tests, runs them, and self-heals when things break, while testers explain requirements in simple, natural language.

Ensure the platform integrates with existing tools. Without CI/CD pipeline and bug tracker integration, teams can spend months fighting infrastructure instead of improving quality.

Step 4: Phased Rollout

Successful teams start small. Pick one application or workflow where manual testing creates pain. Run a pilot for a month to learn how to write better prompts, what data agents need, and how to spot mistakes.

After proving success in one area, expand to two or three more. By the time of full deployment, the team will have real experience and proof that the approach works.

What Data Do Agents Need?

Agents need three things to work well:

- Access to real user journeys so they understand how people actually use the application

- Historical defect data so they know what tends to break

- Clear requirements so they can tell when something works correctly

Better data quality creates smarter agents. Keep logs of everything AI does for auditing decisions and improving performance over time.
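Keeping a log of agent activity can be as simple as appending one JSON record per action. The following is a sketch under assumptions: the field names (`action`, `prompt`, `output`) and the JSON Lines layout are choices made for this example, not a format required by any platform. An in-memory `io.StringIO` stream is used here so the example runs anywhere; a file opened in append mode would work the same way.

```python
import io
import json
from datetime import datetime, timezone
from typing import Dict, List, TextIO


def log_agent_action(stream: TextIO, action: str, prompt: str, output: str) -> Dict[str, str]:
    """Append one audit record as a single JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "prompt": prompt,
        "output": output,
    }
    stream.write(json.dumps(record) + "\n")
    return record


def read_log(stream: TextIO) -> List[Dict[str, str]]:
    """Read every record back for auditing."""
    stream.seek(0)
    return [json.loads(line) for line in stream if line.strip()]


audit_log = io.StringIO()
log_agent_action(audit_log, "generate_test", "Test the login flow", "3 test cases created")
log_agent_action(audit_log, "heal_locator", "Submit button not found", "Matched 'Continue'")
entries = read_log(audit_log)
print(len(entries), entries[1]["action"])
```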

How to Maintain Control

Autonomous doesn’t mean unsupervised. Someone needs to watch what agents do, especially initially. Set up dashboards showing which tests are running, failing, and why.

Create feedback loops so that when agents make mistakes, corrections help the system learn. Think of AI agents like junior engineers requiring onboarding, training, and regular check-ins. The difference is that they learn faster and never get tired.
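One way to keep a human in the loop is to let agents apply only the changes they are confident about and queue the rest for review. The sketch below assumes the agent reports a `confidence` score between 0 and 1 for each proposed fix; that score, the `ProposedFix` type, and the `0.9` threshold are hypothetical and would need tuning against real review outcomes.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProposedFix:
    test_name: str
    description: str
    confidence: float  # hypothetical 0-1 score reported by the agent


def triage(fixes: List[ProposedFix],
           auto_apply_threshold: float = 0.9) -> Tuple[List[ProposedFix], List[ProposedFix]]:
    """Split fixes into those applied automatically and those sent to a human."""
    auto_apply, needs_review = [], []
    for fix in fixes:
        if fix.confidence >= auto_apply_threshold:
            auto_apply.append(fix)
        else:
            needs_review.append(fix)
    return auto_apply, needs_review


proposals = [
    ProposedFix("login", "Button label changed to 'Continue'", 0.97),
    ProposedFix("checkout", "Price assertion updated", 0.55),
]
auto_apply, needs_review = triage(proposals)
print([f.test_name for f in auto_apply], [f.test_name for f in needs_review])
```

Starting with a high threshold and lowering it as reviewers confirm the agent's decisions mirrors the onboarding analogy above.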

Why Does LambdaTest Lead the Agentic AI Testing Market?

The agentic testing market has several strong players. Some platforms were built specifically for autonomous testing, while others are open source frameworks adaptable for agentic workflows.

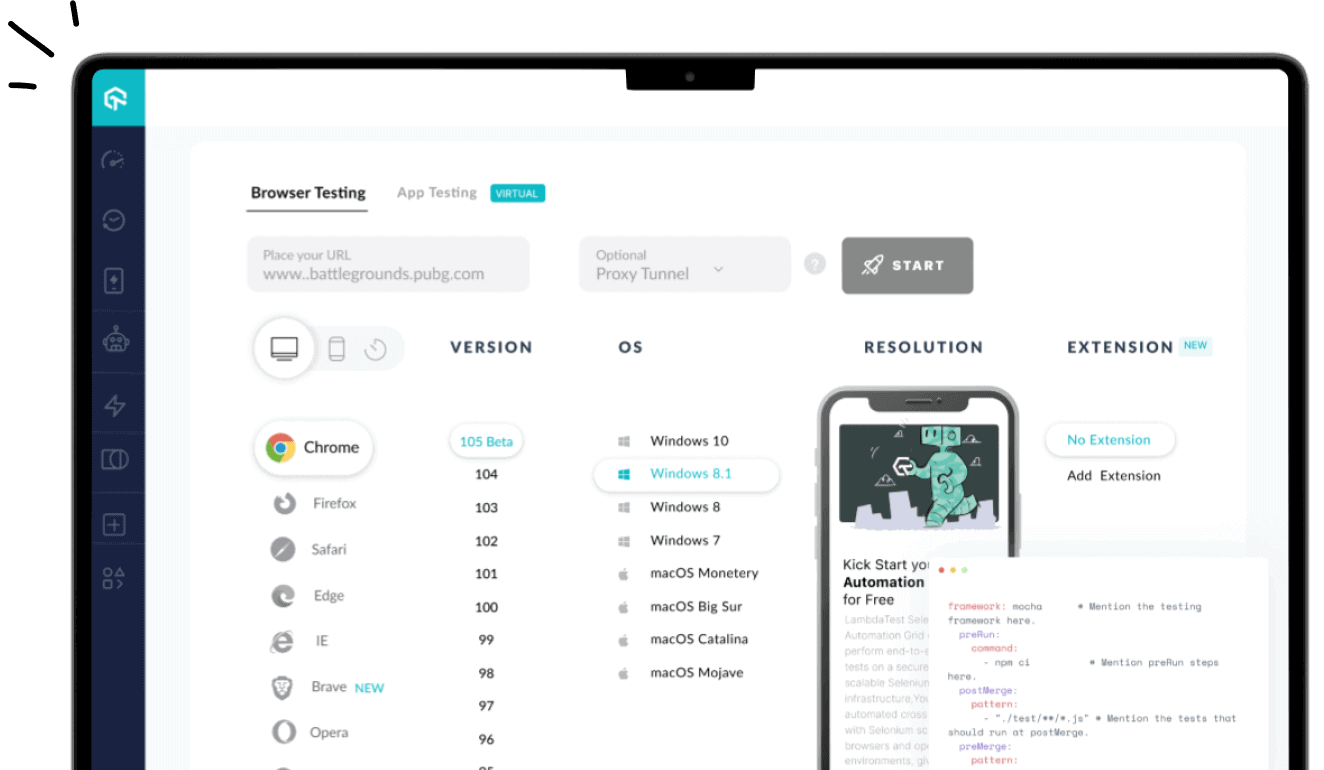

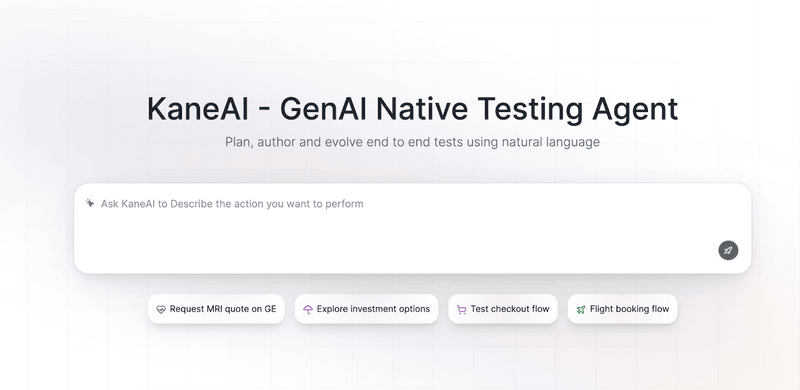

1. LambdaTest KaneAI

LambdaTest KaneAI is the world’s first end-to-end GenAI testing agent. Test instructions written in plain English generate, execute, and maintain tests automatically. When developers change a button label or move an element, KaneAI’s auto-healing recognizes the intent behind the original instruction and updates the test without breaking.

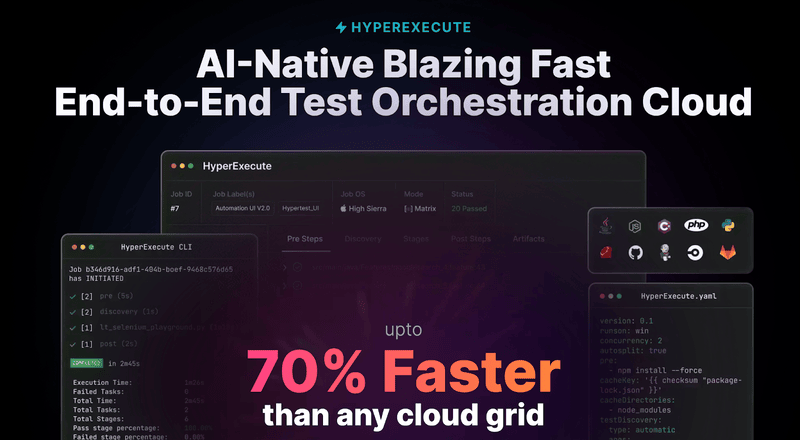

2. LambdaTest HyperExecute

HyperExecute is an intelligent test orchestration engine built for speed. It replaces the hub-and-node model with an architecture that minimizes network latency and optimizes test distribution. Teams report 50% to 70% faster test execution compared to conventional cloud grids.

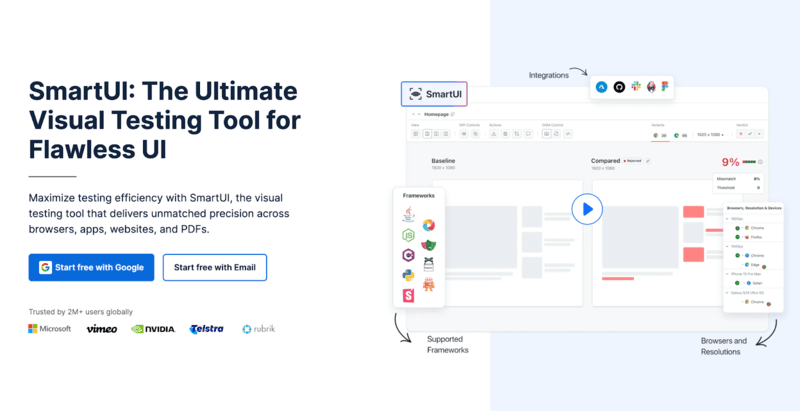

3. LambdaTest Smart UI

Smart UI focuses specifically on visual regression testing. It performs pixel-to-pixel comparisons to catch visual bugs that functional tests miss. The platform supports webhook integration and works with Selenium, Cypress, and Playwright.
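For readers unfamiliar with pixel-to-pixel comparison, the idea can be sketched in a few lines. This is a generic illustration and not Smart UI's implementation: images are represented as nested lists of RGB tuples, and the `tolerance` parameter is an assumption added to show how small rendering differences might be ignored.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def pixel_diff_ratio(baseline: Image, candidate: Image, tolerance: int = 0) -> float:
    """Return the fraction of pixels whose RGB channels differ by more than `tolerance`."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0

    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for pa, pb in zip(row_a, row_b)
        if any(abs(x - y) > tolerance for x, y in zip(pa, pb))
    )
    return changed / total


white, red = (255, 255, 255), (255, 0, 0)
baseline_img = [[white, white], [white, white]]
candidate_img = [[white, white], [white, red]]
print(pixel_diff_ratio(baseline_img, candidate_img))
```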

What Does the Future Hold for Agentic AI Testing?

- Deeper AI Integration: Agentic testing platforms will get better at understanding context and generating smarter test scenarios. Bias detection will become standard as AI systems make more decisions affecting real people. Testing frameworks will catch when models produce unfair outcomes across different demographic groups.

- Moving Toward Full Autonomy: Teams currently use agentic testing with human oversight. Fully autonomous systems will handle test case creation, execution, and reporting without anyone watching. The AI will decide what needs testing based on code changes and past failures. Predictive quality is the next frontier. Instead of catching bugs after they happen, AI systems will forecast issues before code even ships. They’ll analyze patterns in codebases, user behavior, and system performance to spot problems early.

- Better DevOps Integration: Testing will blend seamlessly into DevOps workflows. Real-time feedback will happen automatically as developers commit code. The gap between writing code and knowing if it works will shrink from hours to minutes. Development cycles will speed up as testing truly becomes continuous instead of a separate phase.

- Smarter Context Understanding: AI agents will understand application purpose and user intent more deeply. They’ll know why users click certain buttons and what they’re trying to accomplish. The result will be test scenarios that reflect actual usage patterns across different platforms and browsers. Advanced analytics will spot anomalies before they become incidents. Tests will be generated preventively based on what the AI predicts might break.

How to Prepare Your Organization for Agentic AI Software Testing?

- Quality professionals need different skills now. You need to know how to collaborate with AI systems effectively. This means understanding what they’re good at, where they fail, and how to get better results through clearer instructions.

- Business thinking matters more than ever. Every testing decision should connect to business outcomes. Ask yourself how faster testing helps your company compete or how better quality improves customer retention.

- Cross-functional collaboration becomes essential. You’ll work more closely with product managers on what features matter most, with developers on architecture decisions that affect testability, and with business stakeholders on balancing speed versus thoroughness.

- Organizations should invest in AI-native platforms now rather than waiting. The gap between teams using modern tools and teams stuck with legacy automation is growing.

Frequently Asked Questions (FAQs)

How is agentic AI testing different from traditional automation?

Traditional automation depends on rigid scripts that often break whenever the app changes. Agentic AI testing, on the other hand, adapts automatically. It understands context, adjusts to UI or workflow updates, and keeps tests running smoothly without manual fixes.

Does agentic AI replace human testers?

Not at all. It takes over repetitive tasks so testers can focus on creative and strategic work, like improving test design, uncovering complex bugs, and analyzing quality trends across the product.

How reliable is agentic AI compared to manual testing?

Agentic AI can deliver dependable results when trained with the right data and goals, although its output can vary between runs, so logging and human review still matter. Unlike manual testing, it doesn’t lose focus or skip steps, which means fewer errors and faster feedback during development cycles.

Can agentic AI testing work with existing DevOps workflows?

Yes. Most agentic platforms integrate easily with CI/CD pipelines through Jenkins, GitHub Actions, GitLab, and other tools. This enables continuous, automated testing within standard development processes.

Which types of projects benefit most from agentic AI testing?

It’s ideal for large, fast-changing applications such as enterprise platforms, fintech systems, and AI-driven products. Projects with frequent UI updates or complex integrations see the biggest time savings. Legacy systems may need some modernization before agentic testing can run effectively.

How does agentic AI handle sensitive or private data?

The top platforms are built with security in mind. They use encryption, role-based access, and privacy controls to ensure that sensitive data stays protected while testing at scale.
