22 Best ETL Tools For Managing Data Pipelines [2025]

Tahneet Kanwal

Posted On: August 8, 2025


ETL tools help businesses collect data from multiple places, convert it into a consistent format, and store it where it can be used for reporting and decision-making. They automate these tasks to save time and reduce errors.

Overview

ETL tools automate the process of extracting data, transforming it, and loading it into storage systems so it’s ready for analysis.

Some of the best ETL tools

Explore some of the ETL tools for fast, accurate, and easy data integration.

- SSIS: ETL tool for SQL Server.

- Portable.io: Cloud-based data integration.

- Matillion: Cloud warehouse ETL.

- Integrate.io: Easy cloud data pipelines.

- DLT: Python data loading library.

- ODI: Enterprise data integration.

- AWS Glue: Managed cloud ETL service.

- Singer: Open-source modular ETL.

- Debezium: Real-time database syncing.

- Azure Data Factory: Cloud ETL orchestrator.

- Google Cloud Dataflow: Stream & batch ETL.

- Stitch: Quick data replication.

- Hevo: No-code data pipelines.

- Rivery: Cloud ETL & orchestration.

- Qlik Compose: Data warehouse automation.

- Astera Centerprise: Enterprise ETL platform.

- Informatica: Scalable data integration.

- Fivetran: Automated data replication.

- Airbyte: Open-source ETL tool.

- Meltano: ELT & analytics pipelines.

- Keboola: Cloud ETL & transformation.

- SnapLogic: AI-driven ETL automation.

Types of ETL tools

These ETL tools vary by processing method and deployment model (open-source, on-premises, cloud-native, or hybrid), and each suits different workloads, security requirements, and scalability needs.

How to Choose the Best ETL Tools?

Choosing the right ETL tool means evaluating how well it manages data processing, ensures consistent transformations, performs error-free loading, and includes quality checks for reliable data across systems.

Table of Contents

- What Are ETL Tools?

- Some of the Best ETL Tools

- Microsoft SQL Server Integration Services (SSIS)

- Portable.io

- Matillion

- Integrate.io

- Data Load Tool (DLT)

- Oracle Data Integrator (ODI)

- AWS Glue

- Singer

- Debezium

- Azure Data Factory

- Google Cloud Dataflow

- Stitch

- Hevo

- Rivery

- Qlik Compose

- Astera Centerprise

- Informatica

- Fivetran

- Airbyte

- Meltano

- Keboola

- SnapLogic

- Types of ETL Tools

- Factors to Consider for Choosing the Best ETL Tool

- Frequently Asked Questions (FAQs)

What Are ETL Tools?

ETL tools are software that pull data from different sources, apply rules to clean and organize it, and then load it into a system like a data warehouse for easy access and analysis.

- Extract: Raw data is collected from sources like transactional databases, flat files, and APIs. Rather than being sent directly to the warehouse, it is usually placed in a staging area first so the original source data stays protected.

- Transform: Extracted data is cleaned, formatted, and standardized based on predefined rules. Typical tasks include deduplication, type conversion, auditing, compliance checks, and restructuring the data to fit the warehouse schema so it can support decision-making.

- Load: Transformed data is stored in a central repository such as a data warehouse or data lake. Loading usually starts with an initial full load, followed by incremental updates or periodic refreshes. Automation keeps loads consistent, using batch processing or continuous updates depending on business needs.
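To make the three steps concrete, here is a minimal sketch in Python using only the standard library. It assumes a small, made-up CSV export as the source and uses an in-memory SQLite database as a stand-in for the warehouse; the table and column names are hypothetical, and real ETL tools add scheduling, logging, and error handling on top of this.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent formatting.
RAW_CSV = """order_id,customer,amount,country
1, Alice ,19.99,us
2,Bob,5.00,US
2,Bob,5.00,US
3,Carol,not-a-number,de
"""


def extract(text):
    """Extract: read raw rows from the source without modifying it."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, normalize country codes, cast types,
    and drop duplicates and rows that fail validation."""
    seen = set()
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # reject rows whose amount is not numeric
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # deduplicate on the business key
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip(), amount, row["country"].upper()))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# → [(1, 'Alice', 19.99, 'US'), (2, 'Bob', 5.0, 'US')]
```

Keeping each step in its own function mirrors how ETL tools separate connectors, transformation logic, and loaders, so each part can be tested or swapped independently.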

Some of the Best ETL Tools

Here’s a curated list of the best ETL tools for businesses to efficiently process data. These tools streamline extracting, transforming, and loading data to deliver better insights.

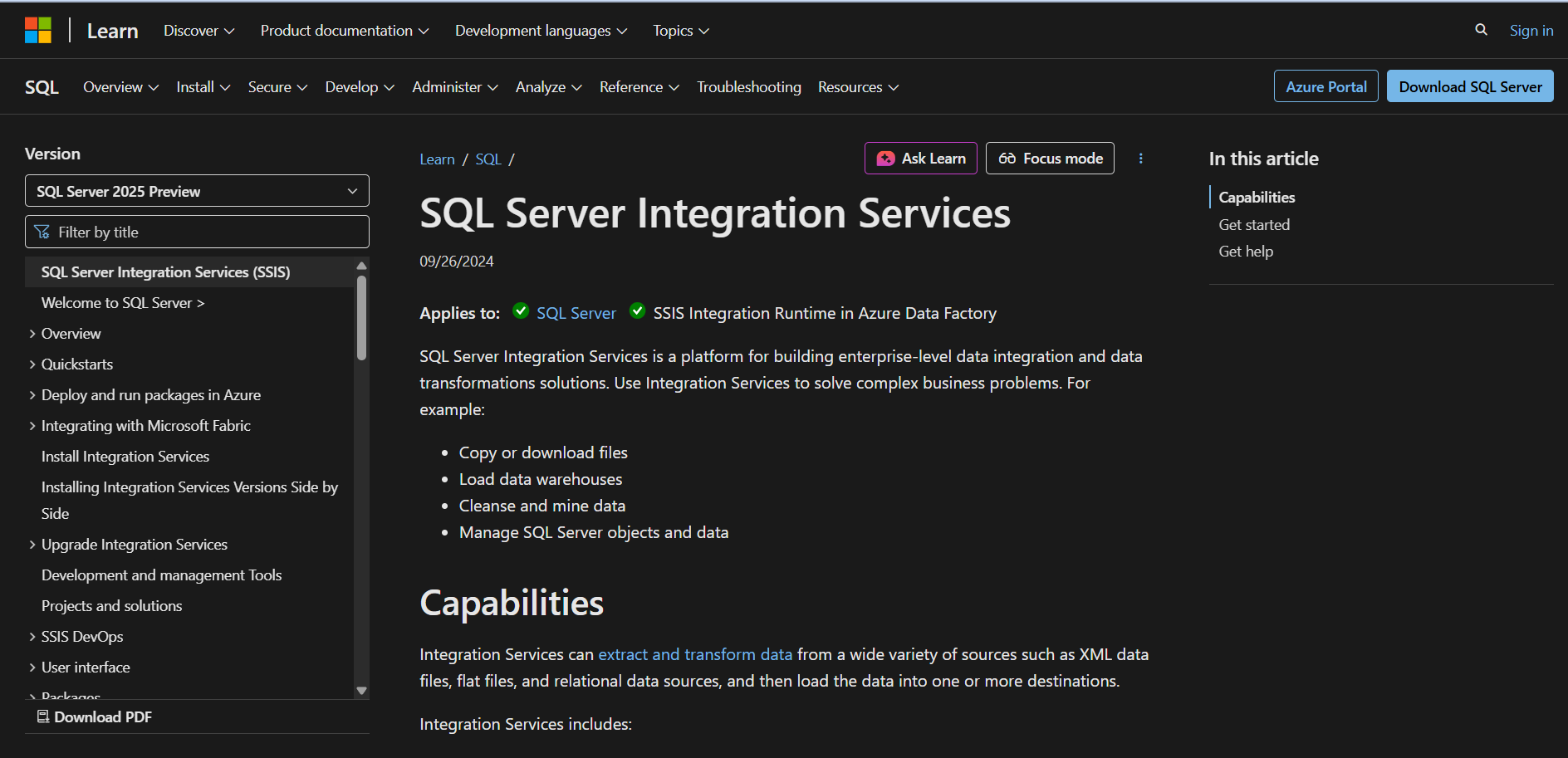

1. Microsoft SQL Server Integration Services (SSIS)

Microsoft SQL Server Integration Services is designed for data engineers and enterprises. It offers powerful ETL capabilities with a visual designer for building complex pipelines.

It connects to SQL Server, Oracle, cloud storage, and flat files, so data can be cleaned, merged, and processed before it is loaded into target systems. SSIS does not natively support real-time streaming or modern event-driven architectures; real-time scenarios typically require pairing it with other services such as Azure Stream Analytics.

Key features:

- Built-in transformations: Allows cleaning, merging, and processing of data before loading it into target systems.

- ETL task automation: Automates data extraction, transformation, and loading processes to streamline workflows.

- Parallel execution: Supports parallel processing and performance tuning for handling large-scale data.

- Error handling: Includes logging, error detection, and event-driven workflows to manage data integrity.

- Tool integration: Works seamlessly with SQL Server Management Studio, Azure Data Factory, and other Microsoft tools.

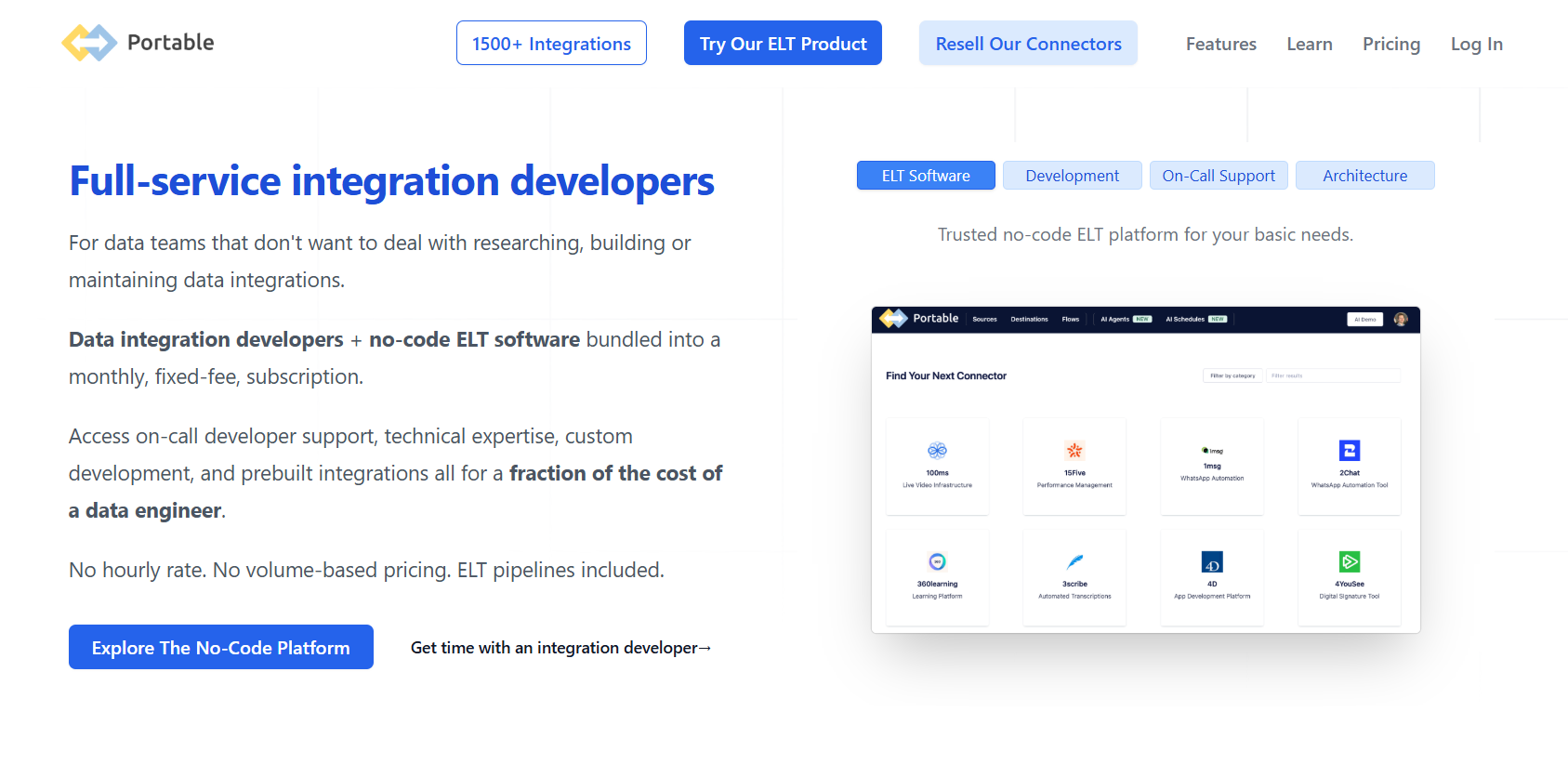

2. Portable.io

Portable.io is a data integration tool that helps data teams bring in data from SaaS applications and other sources often overlooked by traditional ETL tools. Although it is often categorized as ETL, Portable.io follows an ELT pattern in most use cases, loading data into the destination before it is transformed.

Unlike traditional ETL tools, it provides free custom connector development and maintenance, ensuring flexibility without hidden costs.

Key features:

- No-code setup: Provides a no-code interface for configuring and managing connectors.

- Data scheduling: Automates and schedules data transfers to maintain up-to-date pipelines.

- Pipeline monitoring: Detects issues in data workflows and triggers alerts for resolution.

- Destination flexibility: Sends data to multiple warehouses and BI tools without extra setup.

- Real-time integration: Connects with real-time data sources and custom applications.

- Connector support: Offers 500+ built-in connectors for data extraction and loading, with support for custom sources.

3. Matillion

Matillion is a cloud-native ETL tool that processes and transforms large datasets within cloud data warehouses (e.g., Snowflake, BigQuery, Redshift). It does not support on-premises deployments. It supports both low-code and high-code pipeline building and integrates with major cloud warehouses to run advanced transformations using native SQL pushdown. Its automation and AI-driven features simplify cloud data management.

Key features:

- Pre-built connectors: Includes a large set of pre-built connectors for databases, APIs, and cloud storage solutions.

- Transformation tools: Provides drag-and-drop options and custom code editors for flexible data processing.

- High-volume processing: Handles workloads from gigabytes to petabytes by pushing processing down to the cloud warehouse.

- Workflow orchestration: Supports workflow orchestration and scheduling so users can automate ETL processes based on business needs.

- Centralized management: Offers centralized management through the Matillion Hub with tools for scheduling and monitoring ETL workflows.

4. Integrate.io

Integrate.io is a cloud-based ETL platform with an intuitive interface for managing data workflows. It connects to databases, CRMs, and cloud storage, and supports ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), reverse ETL, and change data capture (CDC). It automates workflows, accelerates processing, and supports GDPR compliance.

Key features:

- API generation: Allows users to generate REST APIs on any data source for easier integration.

- Connector library: Supports 150+ data sources, including databases, cloud storage, and SaaS apps.

- Workflow support: Handles both scheduled workflows and real-time data streaming.

- Bidirectional integration: Offers strong support for Salesforce and other two-way data syncs.

- Data transformation: While transformation tools are available, highly complex logic often requires external scripting or APIs.

5. Data Load Tool (DLT)

Data Load Tool (DLT) is an open-source Python-based ELT library designed for simplicity and flexibility. It enables data teams to build robust pipelines with minimal setup, supporting schema detection, incremental loads, and data contract enforcement.

Key features:

- Python-native interface: Build pipelines using familiar Python syntax and script logic.

- Schema management: Infers schemas from incoming data and evolves the destination schema as the data changes.

- Incremental loads: Loads only new or changed records, for example by tracking a cursor field, for efficient syncs.

- Prebuilt connectors: 60+ connectors for databases, APIs, and cloud storage platforms.

- Workflow integration: Works with Airflow, dbt, and serverless environments for seamless orchestration.
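Incremental loading, which dlt and several other tools on this list support, usually relies on a cursor (or high-water mark) such as an `updated_at` column. The sketch below shows that generic pattern with Python's standard `sqlite3` module; it is not dlt's API, and the `users` and `sync_state` tables are hypothetical. It needs SQLite 3.24 or newer for the `ON CONFLICT ... DO UPDATE` upsert syntax.

```python
import sqlite3

# Hypothetical source rows; "updated_at" serves as the cursor (change-tracking) field.
SOURCE = [
    {"id": 1, "name": "Alice", "updated_at": "2025-01-01"},
    {"id": 2, "name": "Bob", "updated_at": "2025-01-02"},
]


def incremental_load(conn, source_rows, stream="users"):
    """Load only rows newer than the stored high-water mark, then advance it."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sync_state (stream TEXT PRIMARY KEY, high_water_mark TEXT)"
    )
    row = conn.execute(
        "SELECT high_water_mark FROM sync_state WHERE stream = ?", (stream,)
    ).fetchone()
    last_mark = row[0] if row else ""  # no state yet: the first run is a full load

    # ISO-8601 date strings compare correctly as plain strings.
    new_rows = [r for r in source_rows if r["updated_at"] > last_mark]

    # Upsert so a changed record replaces its previous version instead of duplicating it.
    conn.executemany(
        "INSERT INTO users (id, name, updated_at) VALUES (:id, :name, :updated_at) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name, updated_at = excluded.updated_at",
        new_rows,
    )
    if new_rows:
        conn.execute(
            "INSERT INTO sync_state (stream, high_water_mark) VALUES (?, ?) "
            "ON CONFLICT(stream) DO UPDATE SET high_water_mark = excluded.high_water_mark",
            (stream, max(r["updated_at"] for r in new_rows)),
        )
    conn.commit()
    return len(new_rows)


conn = sqlite3.connect(":memory:")
print(incremental_load(conn, SOURCE))  # → 2 (first run: both rows are new)
SOURCE.append({"id": 2, "name": "Robert", "updated_at": "2025-01-05"})
print(incremental_load(conn, SOURCE))  # → 1 (second run: only the changed row)
```

Comparing with `>` skips rows that share the last cursor value but arrive later; production tools often compare with `>=` and rely on the upsert to absorb the resulting duplicates.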

6. Oracle Data Integrator (ODI)

ODI is an ETL tool that manages data workflows across databases, cloud platforms, and big data systems. It uses an E-LT architecture to load and process data within the target system. It supports parallel processing, built-in transformations, data validation, and profiling with a modular, user-friendly interface.

Key features:

- E-LT architecture: Processes data within the target system, reducing source load.

- Parallel execution: Supports push-down transformations for large-scale processing.

- Change Data Capture: Enables real-time, event-based data updates. CDC and real-time sync features rely on Oracle GoldenGate, which requires separate licensing.

- Validation and profiling: Validates and profiles data to check its quality during integration.

- Integration support: Works with Oracle GoldenGate, Apache Spark, and more.

7. AWS Glue

AWS Glue is a cloud-native, serverless ELT/ETL platform for automating data discovery, transformation, and loading. It supports visual development with Glue Studio and code-based authoring in Python or Scala using Apache Spark. It integrates with AWS tools like S3 and Redshift, offers schema inference, and supports Apache Iceberg (Glue 4.0+). Cold starts and streaming via Kafka/Kinesis may need tuning.

Key features:

- Centralized metadata: Uses AWS Glue Data Catalog for dataset management.

- Schema management: Automates schema discovery and versioning.

- Visual ETL: Includes AWS Glue Studio with drag-and-drop job creation.

- Real-time ETL: Runs streaming ETL jobs that consume data from Amazon Kinesis and Apache Kafka.

- Job automation: Uses triggers to start ETL jobs on new data availability.

- Workflow orchestration: Chains jobs, crawlers, and triggers.

- Large dataset support: Supports Apache Iceberg for optimized storage.

- Storage integration: Connects with Amazon S3, RDS, DynamoDB, MongoDB, and more.

8. Singer

Singer is an open-source ELT framework that standardizes data pipeline development using a modular architecture. It separates the extract and load phases through “taps” and “targets,” making it easier to build and manage portable data connectors across various sources and destinations.

Key features:

- Modular Architecture: Uses taps and targets to build reusable pipelines.

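- Standardized Format: Taps and targets exchange JSON messages (SCHEMA, RECORD, and STATE), with schemas described in JSON Schema.

- Language Flexibility: Most taps and targets are written in Python, but because they communicate over standard input and output, any language can be used.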

- Community-Driven: Offers a growing library of prebuilt connectors.

- Integration Support: Works with Meltano and other orchestration tools.
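The tap/target split is easiest to see in the messages themselves. Here is a toy sketch of the Singer message format in Python: a tap writes `SCHEMA`, `RECORD`, and `STATE` messages as one JSON object per line, and a target reads them back. The `users` stream and the `last_id` bookmark are made up for illustration, and both sides run in one process here, whereas real taps and targets are separate programs connected with a shell pipe.

```python
import io
import json
import sys


def run_tap(out=sys.stdout):
    """A toy tap: writes Singer-style JSON messages, one per line."""
    messages = [
        {
            "type": "SCHEMA",
            "stream": "users",
            "schema": {
                "type": "object",
                "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
            },
            "key_properties": ["id"],
        },
        {"type": "RECORD", "stream": "users", "record": {"id": 1, "name": "Alice"}},
        {"type": "RECORD", "stream": "users", "record": {"id": 2, "name": "Bob"}},
        # STATE lets the next run resume where this one stopped (hypothetical bookmark).
        {"type": "STATE", "value": {"bookmarks": {"users": {"last_id": 2}}}},
    ]
    for message in messages:
        out.write(json.dumps(message) + "\n")


def run_target(lines):
    """A toy target: collects RECORD messages per stream and keeps the latest STATE."""
    tables, state = {}, None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "SCHEMA":
            tables.setdefault(message["stream"], [])
        elif message["type"] == "RECORD":
            tables[message["stream"]].append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return tables, state


# In practice the two run as separate processes: `tap | target`.
buffer = io.StringIO()
run_tap(buffer)
tables, state = run_target(buffer.getvalue().splitlines())
print(tables)  # → {'users': [{'id': 1, 'name': 'Alice'}, {'id': 2, 'name': 'Bob'}]}
print(state)   # → {'bookmarks': {'users': {'last_id': 2}}}
```

Because the contract is just JSON lines on standard streams, any tap can feed any target, which is what makes Singer connectors reusable across tools like Meltano.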

9. Debezium

Debezium is an open-source Change Data Capture (CDC) tool that streams real-time data changes from databases. Built on Apache Kafka and Kafka Connect, it reads database transaction logs and propagates inserts, updates, and deletes to downstream systems with minimal impact on source database performance.

Key features:

- Real-Time CDC: Captures and streams data changes as events using Kafka topics.

- Broad DB Support: Works with MySQL, PostgreSQL, MongoDB, SQL Server, Oracle, and more.

- Low Overhead: Reads transaction logs instead of querying databases, ensuring minimal impact.

- Schema Evolution: Automatically detects and publishes schema changes.

- Integration-Friendly: Seamlessly integrates with Kafka Connect, Kafka Streams, Flink, and other streaming systems.
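Debezium publishes each row change as an event whose payload carries the row's `before` and `after` images and an `op` code (`c` for create, `u` for update, `d` for delete, `r` for a snapshot read). The following sketch applies a few simplified events to an in-memory replica. It assumes JSON-decoded payloads with the `source` metadata, timestamps, and schema wrapper omitted, and it does not read from Kafka.

```python
import json

# Simplified Debezium-style change event payloads (real events also carry
# "source", "ts_ms" and, depending on converter settings, a "schema" wrapper).
EVENTS = [
    '{"op": "r", "before": null, "after": {"id": 1, "email": "a@example.com"}}',
    '{"op": "c", "before": null, "after": {"id": 2, "email": "b@example.com"}}',
    '{"op": "u", "before": {"id": 1, "email": "a@example.com"},'
    ' "after": {"id": 1, "email": "alice@example.com"}}',
    '{"op": "d", "before": {"id": 2, "email": "b@example.com"}, "after": null}',
]


def apply_change(replica, event):
    """Apply one change event to a replica keyed by primary key."""
    op = event["op"]
    if op in ("r", "c", "u"):  # snapshot read, insert, update: store the new row image
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":  # delete: remove the row identified by the old image
        replica.pop(event["before"]["id"], None)
    return replica


replica = {}
for raw in EVENTS:
    apply_change(replica, json.loads(raw))
print(replica)  # → {1: {'id': 1, 'email': 'alice@example.com'}}
```

By default, Debezium also emits a tombstone (null-value) message after each delete so Kafka log compaction can drop the key; a real consumer would need to skip or handle those.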

10. Azure Data Factory

Azure Data Factory is a cloud-based ETL tool that orchestrates data movement and transformation across on-premises and cloud environments. It enables the creation of ETL pipelines with built-in scheduling, automation, and support for multiple data sources and storage platforms.

Key features:

- Data Compression: Reduces file sizes to optimize bandwidth.

- Custom Data Flows: Supports complex transformation logic.

- Validation Tools: Allow preview and verification of data during transfer.

- Scalable Transformations: Handles large-scale data with built-in activities.

- Pipeline Grouping: Organizes activities for easier monitoring and management.

- Event Triggers: Automates workflows based on predefined conditions.

11. Google Cloud Dataflow

Google Cloud Dataflow is a managed service for batch and streaming data processing, running on a serverless architecture for performance and cost efficiency. Built on Apache Beam, it supports parallel processing and allows engineers to define pipelines using the Apache Beam SDK.

Because Beam pipelines are portable, the same pipeline can also run on other execution engines such as Apache Flink and Apache Spark; on Dataflow, the service executes it as a managed, distributed job.

Key features:

- Serverless Architecture: Eliminates infrastructure management.

- Batch and Streaming: Supports both batch and real-time data processing.

- Auto-scaling: Automatically adjusts based on data volume and workload.

- Apache Beam Support: Enables pipeline development with the Beam SDKs for Java, Python, and Go.

- Cloud Integration: Works with BigQuery, Cloud Storage, Pub/Sub, and more.

12. Stitch

Stitch is an ETL tool that automates data extraction and loading from databases, SaaS apps, and cloud platforms into data lakes and warehouses. It supports ETL and ELT workflows, offers basic transformations, selective replication, and features an intuitive interface for quick pipeline setup.

Key features:

- Cloud Integration: Loads data into warehouses or databases for analysis.

- Automated Updates: Continuously runs data syncs to keep data current.

- ETL Process: Uses a three-phase Extract, Prepare, and Load method for structured data movement.

- Source Support: Extracts data from 140+ sources with no coding required.

13. Hevo

Hevo Data is a cloud-based ETL platform with a low-code interface for building data pipelines. It supports real-time streaming, automated schema management, and SQL or Python-based transformations. Hevo follows compliance standards like HIPAA, SOC 2, and GDPR, offering scalable, low-latency data processing.

Key features:

- Preload Transformations: Formats data before it reaches the destination and supports custom schema mapping.

- Pipeline Monitoring: Real-time dashboards for visibility and failure detection.

- Fault Tolerance: Maintains data integrity with low latency and no data loss.

- Security Compliance: End-to-end encryption and adherence to major security standards.

- Extensive Integrations: 150+ sources, including SaaS, databases with CDC, and REST API support.

- Quick Setup: Pipelines are quick to configure and are maintained automatically as sources change.

14. Rivery

Rivery is a cloud-based, multi-tenant ELT tool that supports inline Python transformations and reverse ETL. It orchestrates workflows and loads data into multiple destinations. It offers real-time source capabilities through Change Data Capture (CDC) but processes the captured data in micro-batches rather than as a continuous stream.

Key features:

- Transformations: Supports SQL and Python-based processing with built-in functions.

- Workflow Orchestration: Includes conditional logic, loops, and scheduling automation.

- Development Support: Offers version control and multi-environment setups.

- Monitoring: Provides full visibility into pipelines and resource usage.

- AI Assistance: Features Rivery Copilot for faster pipeline creation.

- Reverse ETL: Pushes processed data back into operational systems.

- Source Connectivity: Connects to 200+ data sources, including custom APIs.
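The difference between micro-batching and continuous streaming can be sketched in a few lines of Python. The function below is a generic illustration, not Rivery's implementation: it assumes change events arrive in timestamp order and groups them into batches that are loaded together, whereas a streaming pipeline would write each event as soon as it arrived. The `window_seconds` parameter and the sample events are hypothetical.

```python
def micro_batches(events, window_seconds):
    """Group time-ordered (timestamp, change) events into micro-batches.

    A batch closes once an event arrives at or after window_seconds past the
    first event in that batch; each closed batch is then loaded as one unit.
    """
    batch, window_end = [], None
    for timestamp, change in events:
        if window_end is not None and timestamp >= window_end:
            yield batch  # hand the finished batch to the loader
            batch, window_end = [], None
        if window_end is None:
            window_end = timestamp + window_seconds  # window starts at this event
        batch.append(change)
    if batch:
        yield batch  # flush whatever is left at the end


# Hypothetical CDC changes captured at these second offsets.
changes = [(0, "insert 1"), (1, "update 1"), (4, "insert 2"), (5, "delete 2"), (12, "insert 3")]
for batch in micro_batches(changes, window_seconds=5):
    print(batch)
# → ['insert 1', 'update 1', 'insert 2']
# → ['delete 2']
# → ['insert 3']
```

Larger windows mean fewer, more efficient loads but higher latency; that trade-off is why micro-batch tools describe themselves as near real-time rather than streaming.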

15. Qlik Compose

Qlik Compose automates data warehouse design and generates ETL code, reducing manual development. It extracts data from multiple sources, moves it into data warehouses, and offers a Workflow Designer and Scheduler for pipeline management. Built-in data validation helps ensure accuracy. For real-time needs, it integrates with Qlik Replicate.

Key features:

- Automatic ETL Code Generation: Creates and updates ETL code using best practices and proven patterns.

- Data Warehouse Automation: Manages processes from data ingestion to preparation for analytics.

- Push-down Processing: Uses the target warehouse for set-based SQL execution to enhance performance.

16. Astera Centerprise

Astera Centerprise is a no-code ETL tool with a drag-and-drop interface, featuring an ETL/ELT engine, 200+ transformations, and scheduling for automation. It supports data extraction, transformation, cleansing, and validation, integrating with databases, cloud services, and applications.

Key features:

- Code-free Environment: User-friendly drag-and-drop interface for all skill levels.

- Multi-threaded Processing: Speeds up data integration and transformation.

- Data Support: Handles structured and unstructured data, including XML, JSON, EDI, flat files, and streams.

- Wide Connectivity: Connects to web services, databases, CRM, ERP, mainframes, and more.

- Advanced Transformations: Supports parsing and processing complex data formats.

- Process Automation: Orchestrates tasks like FTP transfers, SQL execution, and email alerts.

- Pushdown Optimization: Processes data at source or target with automatic SQL generation.

- Cloud Connectivity: Integrates with Azure, Amazon S3, Google Drive, Dropbox, Redshift, etc.

- BI Integration: Works with Power BI, Tableau, and other visualization tools.

17. Informatica

Informatica is an enterprise ETL tool designed for processing large volumes of structured and unstructured data. Its PowerCenter architecture includes client tools, a server, a metadata repository, and integration services. Jobs are designed as mappings in the Mapping Designer, while workflows are built in the Workflow Manager, executed by the server, and tracked in the Workflow Monitor.

Key features:

- Drag-and-drop Interface: Simplifies creation of complex data transformations.

- Batch and Real-time Processing: Supports both scheduled batch jobs and real-time, event-driven workflows.

- Security: Provides field-level encryption for sensitive data.

- Automation: Handles schema management, deduplication, and validation.

- Scalability: Adapts to growing workloads with a scalable architecture.


18. Fivetran

Fivetran is a cloud-native ELT tool that focuses on raw data extraction and loading before transformation. It supports Change Data Capture (CDC) and integrates with dbt Core for SQL-based transformations. Fully managed and scalable, Fivetran handles schema changes automatically and provides reliable, low-latency data replication.

Key features:

- SaaS ELT Platform: Easy-to-use interface for no-code pipeline setup.

- Connector Library: Offers 400+ native and 300+ API-based lite connectors.

- Scalability: Efficiently handles large data volumes.

- dbt Integration: Built-in support for managing data transformations.

- Extraction and Loading: Uses batch processing and Change Data Capture (CDC).
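Automatic schema-change handling, which Fivetran and several other tools here advertise, often comes down to comparing incoming fields with the destination's columns and adding the missing ones. This Python sketch shows that idea with SQLite. It is a simplified illustration rather than how Fivetran works internally, the `customers` table is hypothetical, and it only handles added columns (not renamed, dropped, or retyped ones).

```python
import sqlite3


def sync_schema(conn, table, record):
    """Add columns that appear in the incoming record but not yet in the table."""
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    existing = {col[1] for col in conn.execute(f"PRAGMA table_info({table})")}
    added = []
    for column in record:
        if column not in existing:
            # Identifiers are interpolated directly, so only use trusted names here.
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}"')
            added.append(column)
    return added


def load_record(conn, table, record):
    """Evolve the destination schema if needed, then insert the record."""
    added = sync_schema(conn, table, record)
    columns = ", ".join(f'"{c}"' for c in record)
    placeholders = ", ".join("?" for _ in record)
    conn.execute(f"INSERT INTO {table} ({columns}) VALUES ({placeholders})", list(record.values()))
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER)")
print(load_record(conn, "customers", {"id": 1, "name": "Alice"}))  # → ['name']
print(load_record(conn, "customers", {"id": 2, "name": "Bob", "plan": "pro"}))  # → ['plan']
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
# → [(1, 'Alice', None), (2, 'Bob', 'pro')]
```

Older rows simply get `NULL` in the new columns, which is also how many managed tools backfill columns that appear mid-stream.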

19. Airbyte

Airbyte is an open-source data movement platform with 400+ connectors for transferring data between sources and destinations. It simplifies integration with a Connector Builder for custom connectors and a marketplace of additional connectors, and it is designed to scale as data volumes grow, making it a solid choice for syncing and managing growing datasets.

Key features:

- No-code Extraction: Extracts data from sources without coding or complex setup.

- Flexible Loading: Loads data into warehouses/databases with type handling and deduplication; integrates with dbt and Airflow.

- Custom Connectors: Supports building custom connectors quickly with the Connector Development Kit (CDK).

- Sync Scheduling: Supports sync intervals from 5 minutes to daily, adapting to schema changes.

- Extensive Source Support: Connects to 400+ sources with high-speed replication and incremental updates.

20. Meltano

Meltano is an open-source ETL tool that helps data teams build flexible pipelines. It integrates with Singer, dbt, and Airflow and supports ELT, incremental replication, and stream mapping. Configured through a command-line interface and YAML files, Meltano offers scheduling, secure secrets management, and centralized plugin discovery via MeltanoHub.

Key features:

- Modular System: Builds and customizes pipelines with plugins.

- Integration Support: Uses Singer taps/targets, dbt, Airflow, and Great Expectations.

- ELT Focus: Extracts and loads data before transforming it.

- Incremental Replication: Tracks updates to optimize data transfers.

- CLI Interface: Manages pipelines efficiently via the command line.

- YAML Configuration: Ensures reproducibility and easier management.

- Scheduling: Automates data workflows.

- Security: Handles secrets and credentials securely.

21. Keboola

Keboola is a cloud-based ETL tool that moves and processes data from various sources. It supports combining structured and unstructured data, with transformation tools for cleansing, enrichment, and aggregation. Its simple interface and automation features make workflow creation easy for all users.

Key features:

- User-friendly interface: Simplifies building and managing data pipelines.

- Prebuilt connectors: Integrates with various data sources and applications.

- Transformation tools: Support data cleansing, enrichment, and aggregation.

- Custom workflows: Enables automation and application of business rules.

22. SnapLogic

SnapLogic is a cloud-based ETL and iPaaS (integration platform as a service) solution that simplifies data integration across applications, databases, and cloud platforms. It features a low-code, AI-powered interface with a visual pipeline builder for designing and managing data workflows. It supports real-time, batch, and event-driven integration, with AI-driven automation to optimize pipelines.

Key features:

- Drag-and-drop interface: Builds data pipelines without coding.

- Flexible integration: Supports real-time, batch, and event-driven workflows across cloud and on-premises.

- Connector library: Offers 600+ prebuilt connectors (Snaps) for databases, apps, and SaaS.

- AI automation: Optimizes performance and reduces manual work.

- Security: Includes governance, monitoring, and compliance controls.

Factors to Consider for Choosing the Best ETL Tool

Selecting the right ETL tool requires evaluating its ability to process, store, and transform data efficiently.

Below are key factors to consider:

- Data Management: The tool should organize and retrieve data efficiently while handling large volumes securely. It must support multiple data sources and ensure high performance.

- Data Transformation Capabilities: A good ETL tool should allow simple and complex transformations, such as cleaning, modifying, and merging data, to meet various business needs.

- Data Ingestion: The tool should collect data from different sources in real-time or at scheduled intervals, supporting multiple formats and scaling as data sources grow.

- Data Quality: Accurate and complete data is critical for reliable analysis. The ETL tool should keep data consistent across systems and catch invalid records before they reach downstream reports.

- Quality Assurance Management: Built-in quality checks, error tracking, and version control help maintain data integrity and troubleshoot issues efficiently.
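Built-in quality checks and error tracking can be as simple as running each row through a set of named rules and recording why failing rows failed. The following is a minimal Python sketch under that assumption; the rules, field names, and sample rows are hypothetical, and dedicated tools (for example, Great Expectations, which Meltano integrates with) provide much richer checks.

```python
from dataclasses import dataclass, field

# Hypothetical validation rules: each maps a readable name to a predicate
# that a valid row must satisfy.
RULES = {
    "id is present": lambda row: row.get("id") is not None,
    "email contains @": lambda row: "@" in (row.get("email") or ""),
    "amount is a non-negative number": lambda row: (
        isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0
    ),
}


@dataclass
class QualityReport:
    passed: list = field(default_factory=list)
    failed: list = field(default_factory=list)  # (row, reasons) pairs, kept for error tracking


def check_quality(rows, rules=RULES):
    """Split rows into those that pass every rule and those that fail, with reasons."""
    report = QualityReport()
    seen_ids = set()
    for row in rows:
        reasons = [name for name, rule in rules.items() if not rule(row)]
        if row.get("id") in seen_ids:
            reasons.append("duplicate id")
        seen_ids.add(row.get("id"))
        if reasons:
            report.failed.append((row, reasons))
        else:
            report.passed.append(row)
    return report


rows = [
    {"id": 1, "email": "a@example.com", "amount": 10},
    {"id": 2, "email": "not-an-email", "amount": 5},
    {"id": 1, "email": "c@example.com", "amount": -3},
]
report = check_quality(rows)
print(len(report.passed))  # → 1
for row, reasons in report.failed:
    print(row["id"], reasons)
# → 2 ['email contains @']
# → 1 ['amount is a non-negative number', 'duplicate id']
```

Keeping the failed rows together with their reasons, instead of silently dropping them, is what makes troubleshooting and reprocessing possible later.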

Conclusion

These ETL tools provide diverse options for managing data pipelines and reducing the effort spent on manual data processing. You can choose the one that best fits your business needs and start optimizing your data workflows.

Selecting the right ETL tool is essential for businesses that rely on accurate and properly integrated data. ETL processes build the foundation for data analytics and machine learning by preparing raw data for storage and analysis. The stored data can then be used to generate reports, dashboards, or predictive models. Different ETL tools address different challenges, so it is important to evaluate the specific needs of teams and the organization as a whole.

A well-chosen ETL tool simplifies data operations and supports business growth by improving analytics and decision-making.

Frequently Asked Questions (FAQs)

What are the key steps in the ETL process?

The ETL process involves three key steps: Extracting data from multiple sources, transforming it to meet business or technical needs (such as cleaning, formatting, or joining), and loading it into a target database or data warehouse for analysis.

What is the best ETL tool?

There isn’t a single best ETL tool – it depends on your needs. Microsoft SQL Server Integration Services (SSIS) works well with Microsoft-based environments, and Portable.io provides flexible and scalable data integration with custom connectors. The best option depends on how well it performs, how easy it is to use, and how it fits with existing systems.

Is SQL an ETL tool?

No, SQL isn’t an ETL tool – it’s a language for working with databases. However, you can use SQL as part of the ETL process, especially for transforming data.

What are SAP ETL tools?

SAP offers several ETL tools, with SAP Data Services being the main one. It helps move and transform data within SAP systems and between SAP and other systems. Other tools include SAP HANA smart data integration and SAP Cloud Integration (part of SAP Integration Suite, formerly SAP Cloud Platform Integration). These are specialized tools designed to work particularly well with SAP's business software ecosystem.

What are some popular ELT tools in the market?

ELT pipelines typically pair a loading tool such as Fivetran, Airbyte, or Stitch with a cloud data warehouse such as Snowflake, Google BigQuery, Amazon Redshift, or Azure Synapse, where the transformations run, often managed with dbt. These warehouses are optimized for transforming large volumes of data after it has been loaded.

What is ELT, and how is it different from ETL?

ELT (Extract, Load, Transform) is a modern data integration process where data is first loaded into the destination system and then transformed using the destination system’s computing power. Unlike ETL, where transformation happens before loading, ELT is ideal for cloud-based and big data platforms.
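Here is a minimal sketch of the ELT pattern in Python, with an in-memory SQLite database standing in for a cloud warehouse: the raw rows are loaded untouched, and the cleaning and aggregation happen afterwards in SQL inside the destination. Table and column names are hypothetical; in practice, this transformation step is often managed with a tool such as dbt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: copy source rows as-is (everything as text) into a raw table.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, country TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", " us", "19.99"), ("2", "US", "5.00"), ("3", "de ", "12.50")],
)

# Transform: run SQL inside the destination to build a cleaned, aggregated table.
conn.execute(
    """
    CREATE TABLE revenue_by_country AS
    SELECT UPPER(TRIM(country)) AS country,
           ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue
    FROM raw_orders
    GROUP BY UPPER(TRIM(country))
    """
)
print(conn.execute("SELECT * FROM revenue_by_country ORDER BY country").fetchall())
```

Because the raw table is kept, the transformation can be changed and rerun later without re-extracting anything from the source, which is one of the main practical advantages of ELT.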

When should you choose ELT over ETL?

ELT is best suited for modern cloud-based data warehouses that can efficiently handle large-scale data transformations. It’s ideal when you need to offload transformation logic to a powerful destination system, reducing the need for intermediate servers.

Can ETL tools also support ELT processes?

Yes, many modern ETL tools like Talend, Informatica, and Matillion support ELT as well. They offer flexibility to adapt based on your infrastructure, whether transformation happens before or after loading the data.

Is ELT suitable for real-time data processing?

ELT is generally used for batch processing, but with advancements in cloud platforms and tools like Kafka and Snowflake's streaming capabilities, some ELT pipelines can support near real-time processing. For strict real-time use cases, however, streaming pipelines that transform data in flight are usually preferred.

How does dbt fit into the ELT landscape?

dbt (data build tool) handles the transformation step of ELT by running SQL-based transformations directly in your data warehouse. It's widely used with modern data stacks to build, test, and document analytics pipelines in a scalable and version-controlled way.
