Leveraging Machine Learning in Test Automation

Saif Sadiq

Posted On: August 5, 2017

The goals of using machine learning in test automation are, first, to write new test cases dynamically by data-mining users' logs and their behavior on the application or service under test and, second, to validate tests live, so that when an object is modified or removed, or undergoes some other change such as a spelling modification, the test adapts instead of breaking, much as IntelliSense-style features in IDEs such as Visual Studio or Eclipse catch such changes as you type.

Machine learning in test automation can improve efficiency by enabling predictive maintenance, better test generation, and broader test coverage, leading to faster and more accurate testing outcomes.

Machine learning in test automation can help address problems including, but not limited to, the following:

- The manual labor of writing test cases.

- Brittle test cases: when something goes wrong, a framework is likely either to abort the test at that point or to skip some steps, which can produce an incorrect or failed result.

- Late validation: a test is not validated until it is run. If a script is written to check for an "OK" button, we will not know whether that button exists until the test runs.

The machine should help tests recover on the fly by applying fuzzy matching: if an object is modified or removed, the script should find the closest match to the object it was looking for and continue the test. For example, if a web service initially offers the options "small, medium, large" and the script was written against those, and a new choice, "extra-large", is added later, the script should adapt to that change so the test run can continue without failing.
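A minimal sketch of this kind of fuzzy matching, using Python's standard-library `difflib`. It assumes UI elements and options can be reduced to their text labels; the function names `find_closest_element` and `map_options` and the 0.6 similarity cutoff are illustrative choices, not part of any testing framework.

```python
import difflib
from typing import Dict, List, Optional, Sequence, Tuple


def find_closest_element(expected: str, available: Sequence[str],
                         cutoff: float = 0.6) -> Optional[str]:
    """Return `expected` if present, else the most similar label scoring >= cutoff, else None."""
    if expected in available:
        return expected
    # get_close_matches scores candidates with SequenceMatcher.ratio() and keeps the best n
    matches = difflib.get_close_matches(expected, list(available), n=1, cutoff=cutoff)
    return matches[0] if matches else None


def map_options(expected: Sequence[str], actual: Sequence[str],
                cutoff: float = 0.6) -> Tuple[Dict[str, Optional[str]], List[str]]:
    """Map each expected option to its closest actual option and list options that are new."""
    mapping = {opt: find_closest_element(opt, actual, cutoff) for opt in expected}
    matched = {label for label in mapping.values() if label is not None}
    new_options = [opt for opt in actual if opt not in matched]
    return mapping, new_options


if __name__ == "__main__":
    # A renamed button is still found, so the test can continue
    print(find_closest_element("Submit", ["Submitt", "Cancel"]))
    # The script adapts when "extra-large" is added to the original three sizes
    print(map_options(["small", "medium", "large"],
                      ["small", "medium", "large", "extra-large"]))
```

In a real suite the `available` labels would be read from the application under test. A lower cutoff tolerates bigger changes but raises the risk of matching the wrong element.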

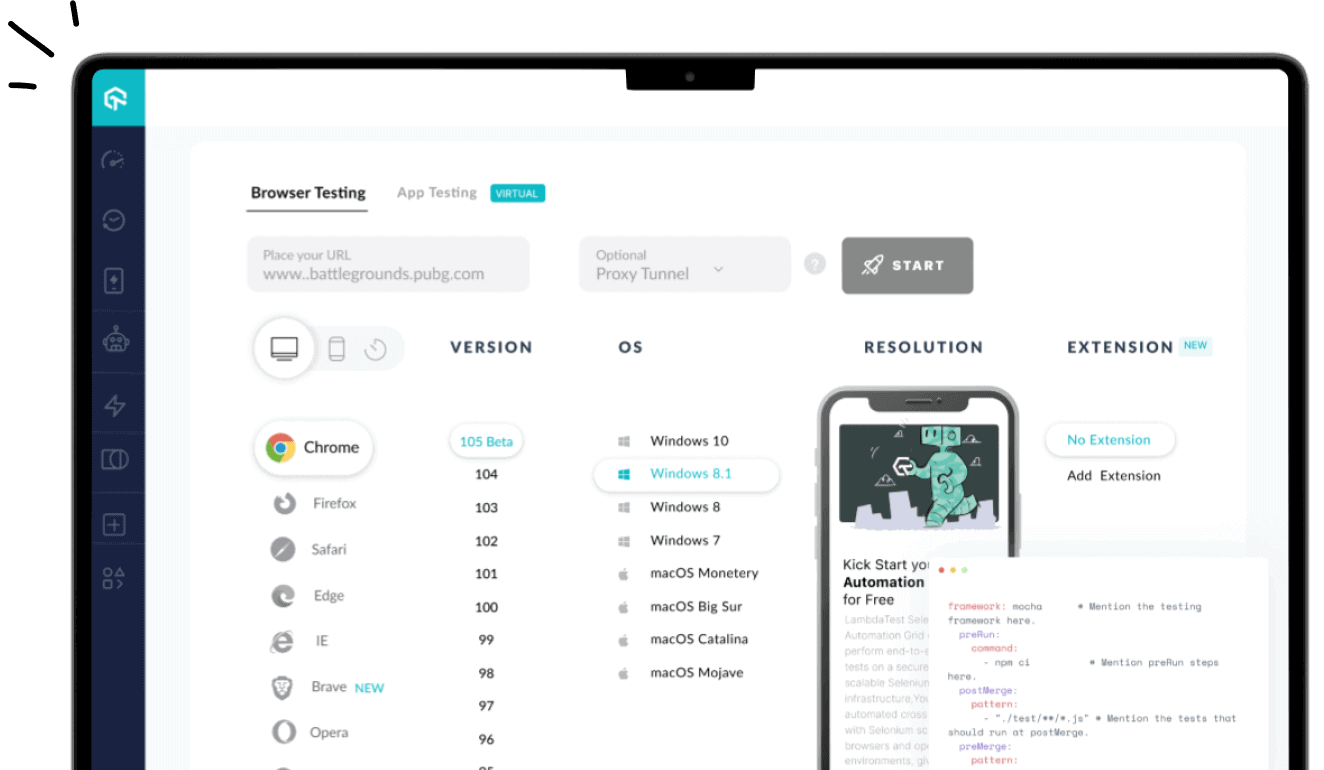

HP Unified Functional Testing (UFT) is one of the best-known tools on the market for automated testing, and it provides a GUI for building tests. Other tools include the Selenium libraries (available for several languages, such as Java, C#, and Python) and Cucumber. Tools like these let you write scripts and then leave the job of testing to those scripts, which run through many cases.

Ideas for Implementation

Before testing begins, the system needs to learn the cases, so we have to give it something to start with. Before training, we set up the system so that certain ads were shown to ordinary users during a customized time window. During that window, logs were collected and recorded, which let us compute the gender ratio and age groups of the people who viewed specific ads. The goal was to find out which age groups and genders were prompted to purchase something from the website after viewing certain ads. The results were saved as training data on which tests could be run.
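The log aggregation step might look like the following sketch. The `AdView` record, its fields, and the age buckets are hypothetical, since the article does not describe the log format; the code simply counts views and purchases per ad, gender, and age group, and computes each ad's gender ratio.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class AdView:
    """Hypothetical log record: one user viewing one ad."""
    ad_id: str
    gender: str
    age: int
    purchased: bool


def age_group(age: int) -> str:
    """Hypothetical age buckets; the article does not say how ages were grouped."""
    if age < 25:
        return "<25"
    if age < 35:
        return "25-34"
    if age < 50:
        return "35-49"
    return "50+"


Key = Tuple[str, str, str]  # (ad_id, gender, age_group)


def conversion_table(views: Sequence[AdView]) -> Dict[Key, Tuple[int, int, float]]:
    """Map each (ad, gender, age group) to (views, purchases, conversion rate)."""
    counts: Dict[Key, List[int]] = defaultdict(lambda: [0, 0])
    for v in views:
        key = (v.ad_id, v.gender, age_group(v.age))
        counts[key][0] += 1
        counts[key][1] += int(v.purchased)
    return {k: (n, p, p / n) for k, (n, p) in counts.items()}


def gender_ratio(views: Sequence[AdView], ad_id: str) -> Dict[str, float]:
    """Share of each gender among the viewers of one ad."""
    by_gender = Counter(v.gender for v in views if v.ad_id == ad_id)
    total = sum(by_gender.values())
    return {g: c / total for g, c in by_gender.items()} if total else {}
```

The resulting table is the kind of snapshot that could be saved as training data and refreshed whenever new logs arrive.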

The machine was trained to write test cases based on the collected information. The training data was updated periodically so that tests ran against the latest data for different demographics and relevant ads could be delivered to potential customers. Had this been done manually, someone would have had to modify the script or add test cases by hand every time trends or the website changed.
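One way to picture the machine writing test cases is to derive expected outcomes directly from the latest training data, so the suite is regenerated instead of hand-edited whenever trends change. The sketch below is a simple rule-based stand-in for the learned component: it assumes a hypothetical `select_ad(gender, age_group)` function in the system under test and expects it to pick the ad with the highest observed conversion rate for each segment.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical input: conversion rate per (ad_id, gender, age_group)
Rates = Dict[Tuple[str, str, str], float]
Case = Tuple[str, str, str]  # (gender, age_group, expected_ad)


def generate_test_cases(rates: Rates) -> List[Case]:
    """For each demographic segment, expect the ad with the highest observed conversion rate."""
    best: Dict[Tuple[str, str], Tuple[str, float]] = {}
    for (ad_id, gender, group), rate in rates.items():
        segment = (gender, group)
        if segment not in best or rate > best[segment][1]:
            best[segment] = (ad_id, rate)
    return sorted((g, grp, ad) for (g, grp), (ad, _rate) in best.items())


def run_cases(cases: List[Case],
              select_ad: Callable[[str, str], str]) -> List[Tuple[str, str, str, str]]:
    """Run generated cases against the (hypothetical) ad selector; return the failures."""
    failures = []
    for gender, group, expected in cases:
        actual = select_ad(gender, group)
        if actual != expected:
            failures.append((gender, group, expected, actual))
    return failures
```

Because the cases are computed from the data, refreshing the training data refreshes the suite with no script edits.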

ML algorithms for automated testing

SVM: SVMs belong to the "linear classifier" family of ML algorithms, which try to find a (linear) hyperplane that separates examples of different classes. In the learning phase, an SVM treats each training example as a vector in a k-dimensional feature space and searches for a (k-1)-dimensional hyperplane that separates the classes with the maximum margin, i.e., the largest distance between the hyperplane and the nearest examples of each class. SVMs are mostly used for binary classification.

MartiRank: Another algorithm is MartiRank, a ranking algorithm. Its learning phase runs for a number of rounds; in each round the data is split into N sub-lists, each containing 1/N of the total number of device/app failures.
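The round structure described for MartiRank can be sketched as below. This is a simplified illustration, not a reference implementation: it assumes each row is a tuple of numeric attributes plus a failure flag, that round i splits the current ordering into i sub-lists, and that within each sub-list the algorithm picks the attribute and sort direction whose ordering puts failures highest, measured here with a pairwise AUC (our assumption for the quality measure).

```python
from typing import List, Sequence, Tuple

# Hypothetical row format: (attribute values, failed?)
Row = Tuple[Tuple[float, ...], bool]


def auc(rows: Sequence[Row]) -> float:
    """Pairwise AUC: share of (failure, non-failure) pairs where the failure is ranked first."""
    n_fail = sum(1 for _, failed in rows if failed)
    n_ok = len(rows) - n_fail
    if n_fail == 0 or n_ok == 0:
        return 0.5  # quality undefined for a one-class list; treat as neutral
    failures_above, good_pairs = 0, 0
    for _, failed in rows:
        if failed:
            failures_above += 1
        else:
            good_pairs += failures_above  # each failure above this row is a correctly ordered pair
    return good_pairs / (n_fail * n_ok)


def split_by_failures(rows: Sequence[Row], n: int) -> List[List[Row]]:
    """Split rows into at most n contiguous sub-lists, each holding about 1/n of the failures."""
    total = sum(1 for _, failed in rows if failed)
    per_chunk = max(1, -(-total // n))  # ceiling division
    chunks: List[List[Row]] = []
    current: List[Row] = []
    seen = 0
    for row in rows:
        current.append(row)
        if row[1]:
            seen += 1
            if seen == per_chunk and len(chunks) < n - 1:
                chunks.append(current)
                current, seen = [], 0
    chunks.append(current)  # remaining rows, including trailing non-failures
    return chunks


def best_sort(rows: Sequence[Row]) -> Tuple[int, bool, List[Row]]:
    """Try every attribute in both directions and keep the sort with the highest AUC."""
    best = None
    for attr in range(len(rows[0][0])):
        for descending in (True, False):
            ordered = sorted(rows, key=lambda r: r[0][attr], reverse=descending)
            score = auc(ordered)
            if best is None or score > best[0]:
                best = (score, attr, descending, ordered)
    _, attr, descending, ordered = best
    return attr, descending, ordered


def martirank(rows: Sequence[Row], rounds: int):
    """Round i (1-based) splits the current ordering into i sub-lists and re-sorts each one."""
    model: List[List[Tuple[int, bool]]] = []
    ordering = list(rows)
    for n in range(1, rounds + 1):
        round_model: List[Tuple[int, bool]] = []
        next_ordering: List[Row] = []
        for chunk in split_by_failures(ordering, n):
            if not chunk:
                continue
            attr, descending, chunk_sorted = best_sort(chunk)
            round_model.append((attr, descending))
            next_ordering.extend(chunk_sorted)
        model.append(round_model)
        ordering = next_ordering
    return model, ordering
```

The learned "model" here is just the sequence of (attribute, direction) choices per round, which makes it easy to compare between two versions of the code.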

For regression testing, a suite of test cases had to be developed. MartiRank is under ongoing development, and this suite has been used to detect new bugs. For example, a developer might refactor some code in the application by moving it into a new function; regression testing then showed that the resulting models differed from the previous ones.
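A regression check of this kind can be as simple as training the previous and the refactored implementations on the same fixed dataset and comparing the resulting models parameter by parameter. In this sketch the "model" is a hypothetical dictionary of named numbers, and `train_v1`/`train_v2` are toy trainers; the refactored one deliberately contains an off-by-one bug to show what a detected regression looks like.

```python
import math
from typing import Callable, Dict, List, Sequence

# Hypothetical model representation: named numeric parameters
Model = Dict[str, float]
Trainer = Callable[[Sequence[float]], Model]


def model_regressions(baseline: Trainer, candidate: Trainer,
                      dataset: Sequence[float], tol: float = 1e-9) -> List[str]:
    """Train both versions on the same fixed dataset and list parameters that differ."""
    old, new = baseline(dataset), candidate(dataset)
    diffs = []
    for name in sorted(set(old) | set(new)):
        if name not in old or name not in new:
            diffs.append(name)  # parameter appeared or disappeared
        elif not math.isclose(old[name], new[name], rel_tol=tol, abs_tol=tol):
            diffs.append(name)  # value drifted beyond tolerance
    return diffs


def train_v1(data: Sequence[float]) -> Model:
    """Baseline implementation."""
    return {"mean": sum(data) / len(data), "max": max(data)}


def _mean(data: Sequence[float]) -> float:
    """Refactored helper with a deliberate off-by-one bug."""
    return sum(data) / (len(data) + 1)


def train_v2(data: Sequence[float]) -> Model:
    """Refactored implementation that moved the mean into a new function."""
    return {"mean": _mean(data), "max": max(data)}


if __name__ == "__main__":
    fixed_data = [1.0, 2.0, 3.0]
    print(model_regressions(train_v1, train_v1, fixed_data))  # []
    print(model_regressions(train_v1, train_v2, fixed_data))  # ['mean']
```

Keeping the dataset fixed is what makes the comparison meaningful: any difference in the models must come from the code change.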

When we write test cases by hand, we test how the software is supposed to behave in theory, without any real usage data. Some of those test cases may never be exercised in real life, while some scenarios that were missed may be the most important ones. That is why data-mining the logs and letting the machine write test cases from them automatically saves many person-hours and keeps testing grounded in practice. Services like HockeyApp and TestFlight provide mobile app beta testing as a service, distributing builds to testers and collecting crash reports.
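Mining logs for test cases can start by grouping user actions by session and keeping the most frequent end-to-end flows, which then become replayable test cases. The `session_id,action` log format and the `test_flow_N` naming are assumptions made for this sketch.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

Flow = Tuple[str, ...]


def mine_user_flows(log_lines: Iterable[str], top_k: int = 3) -> List[Tuple[Flow, int]]:
    """Group actions by session and return the most common end-to-end flows."""
    sessions: Dict[str, List[str]] = defaultdict(list)
    for line in log_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        session_id, action = line.split(",", 1)
        sessions[session_id].append(action)
    counts = Counter(tuple(actions) for actions in sessions.values())
    return counts.most_common(top_k)


def flows_to_test_cases(flows: List[Tuple[Flow, int]]) -> Dict[str, List[str]]:
    """Turn each mined flow into a named test case: an ordered list of steps to replay."""
    return {f"test_flow_{i}": list(flow) for i, (flow, _count) in enumerate(flows, start=1)}
```

Ranking flows by frequency puts the paths real users take most often at the top of the suite, which is the practical advantage over cases written purely from the specification.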

For GUI testing, several research papers discuss using deep learning and reinforcement learning to automate tests. The systems under test were first data-mined for meaningful clicks, text entries, and button presses on the GUI, which produced a good amount of training data. That data was then used to test the software for a few hours. The best part was that no models or test cases had to be written, and bugs kept being found as time went on; however, some cases were never tested, possibly due to a lack of training data. The reinforcement learning approach improved the testing over multiple iterations.
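To make the reinforcement-learning idea concrete, here is a minimal tabular Q-learning sketch that explores a toy GUI modeled as a state machine. The screens, actions, reward values (1 for reaching a new screen, 10 for triggering a crash), and hyperparameters are all hypothetical; real GUI testers deal with far larger state spaces.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Dict, Set, Tuple

# Hypothetical toy GUI: screen -> {action: next screen}; "crash" marks a bug
GUI: Dict[str, Dict[str, str]] = {
    "home": {"open_settings": "settings", "open_help": "help"},
    "settings": {"back": "home", "reset_all": "crash"},
    "help": {"back": "home"},
}


def explore_gui(episodes: int = 1000, steps: int = 6, alpha: float = 0.5,
                gamma: float = 0.9, epsilon: float = 0.5, seed: int = 0):
    """Epsilon-greedy Q-learning that rewards new screens and crashes."""
    rng = random.Random(seed)
    q: DefaultDict[Tuple[str, str], float] = defaultdict(float)  # (screen, action) -> value
    seen: Set[str] = {"home"}
    bugs: Set[Tuple[str, str]] = set()
    for _ in range(episodes):
        screen = "home"
        for _ in range(steps):
            actions = list(GUI[screen])
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                action = max(actions, key=lambda a: q[(screen, a)])  # exploit
            nxt = GUI[screen][action]
            if nxt == "crash":
                reward, future = 10.0, 0.0  # crash ends the episode: no future value
                bugs.add((screen, action))
            else:
                reward = 1.0 if nxt not in seen else 0.0  # novelty bonus for a new screen
                seen.add(nxt)
                future = max(q[(nxt, a)] for a in GUI[nxt])
            q[(screen, action)] += alpha * (reward + gamma * future - q[(screen, action)])
            if nxt == "crash":
                break
            screen = nxt
    return q, seen, bugs
```

With a fixed seed the run is reproducible. After training, the greedy action on the home screen leads toward the crashing `reset_all` action, which is the sense in which repeated iterations improve the testing.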

Intel and Nvidia have been investing heavily in hardware that helps deep learning and related algorithms produce results faster, as the industry moves from a mobile-first world to an AI-first world. For any product, even a simple calculator, there can never be enough of the right test cases, which is why developers and testers are encouraged to keep writing more of them to make their products more stable. Paul Graham once suggested using a Bayesian filter to catch spam email: thousands of emails were fed to the system so that it could learn, and tests were then run on that training data to check how reliable the filter was. Web crawlers, meanwhile, constantly move through websites looking for 404 and other errors, updating their indexes and their test cases in real time.
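For a feel of how a Bayesian filter learns from examples, here is a small sketch. Graham's essay "A Plan for Spam" combines per-word probabilities with its own formula; this sketch instead uses a standard multinomial naive Bayes classifier with add-one (Laplace) smoothing, a closely related Bayesian technique. The tokenizer and the training messages are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, Iterable, List, Tuple

LABELS = ("spam", "ham")


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9$']+", text.lower())


class NaiveBayesSpamFilter:
    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = {label: Counter() for label in LABELS}
        self.doc_counts: Counter = Counter()

    def train(self, messages: Iterable[Tuple[str, str]]) -> None:
        """Count documents per label and word occurrences per label."""
        for text, label in messages:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def _log_score(self, tokens: List[str], label: str) -> float:
        """log P(label) + sum of log P(token | label), with add-one smoothing."""
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        total_words = sum(self.word_counts[label].values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for token in tokens:
            score += math.log((self.word_counts[label][token] + 1) / (total_words + vocab_size))
        return score

    def classify(self, text: str) -> str:
        """Return the label with the highest posterior log-score."""
        if any(self.doc_counts[label] == 0 for label in LABELS):
            raise ValueError("train on at least one spam and one ham message first")
        tokens = tokenize(text)
        return max(LABELS, key=lambda label: self._log_score(tokens, label))
```

Working in log space avoids underflow when many small word probabilities are multiplied, and smoothing keeps an unseen word from driving a class probability to zero.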
