AI Testing vs Traditional Testing: What’s The Difference?

Ninad Pathak

Posted On: July 16, 2025

AI testing vs. traditional testing. Which one is right for you?

Traditional automation testing has seen decades of upgrades.

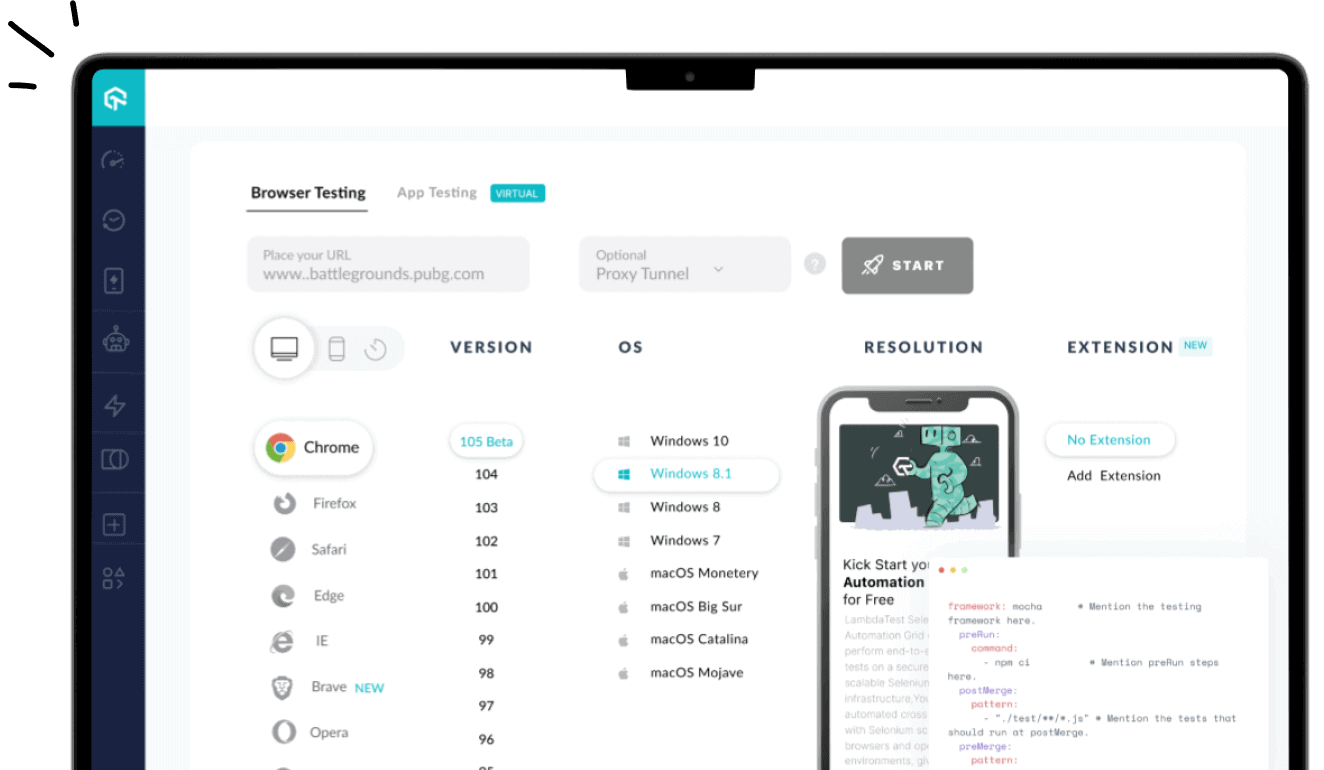

First, there was Selenium, where developers manually wrote every single test step in code, requiring deep programming knowledge. Then, Selenium IDE introduced record-and-playback functionality, allowing testers to create basic scripts without extensive coding. Recently, we saw codeless automation platforms that promised to test without programming through visual interfaces and drag-and-drop functionality.

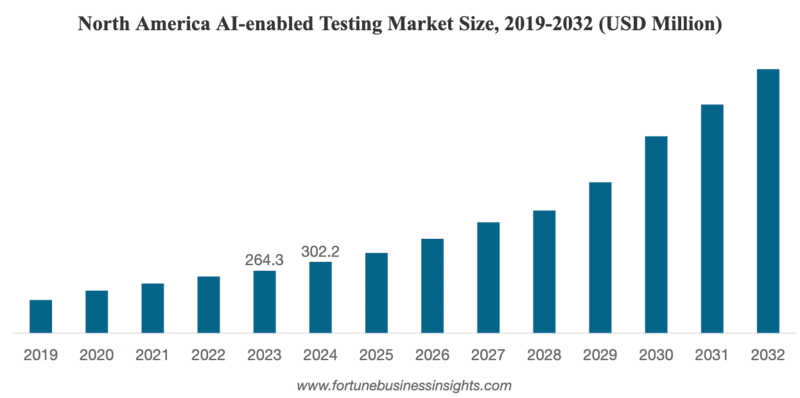

Now AI testing is emerging as the next step in that evolution, and it is already gaining momentum.

The AI testing market is projected to reach $3.8 billion by 2032, roughly 3.5 times its $1.1 billion size in 2025 (an increase of about 245%). This growth signals a fundamental shift in how organizations approach software testing.

So, with a more future-proof option available, does traditional test automation still stand its ground today? Or should you take the AI-native test automation route for your organization?

Overview

As testing needs grow more complex, traditional automation is reaching its limits. AI-native testing is emerging as a smarter, scalable alternative that addresses the key challenges of conventional methods.

Key benefits of AI-native testing:

- Self-healing tests: Automatically adjust to UI or DOM changes.

- Natural language testing: Write tests without code.

- Smart test orchestration: Optimize execution paths and reduce runtime.

- Real-time insights: Get instant feedback and root cause analysis.

- Higher productivity: Free up teams from maintenance tasks.

- Faster ROI: Lower setup and upkeep costs.

Let’s break down both approaches to understand them better.

TABLE OF CONTENTS

- What is Traditional Automation Testing?

- What is AI-Native Testing?

- AI Testing vs Traditional Testing: Business Impact at a Glance

- Challenges with Traditional Automation Testing

- How AI Testing Solves Traditional Automation Challenges

- Why Are Enterprises Moving Towards AI Testing over Traditional Testing?

- The Test Maintenance Problem Solved Itself with AI

What is Traditional Automation Testing?

Traditional automation testing is a method where testers use pre-written scripts with fixed instructions to validate software functionality. You write specific commands for every user action: click this button, enter text, and check if elements appear.

Each step requires exact locators like CSS selectors, XPath expressions, and element IDs that must match perfectly. The pattern never changes: identify test cases, write automation scripts, run them in CI/CD pipelines, monitor results.

Even though frameworks like Selenium, Cypress, and Playwright provide the tools, the rigid logic remains unchanged, treating applications as unchanging systems with predictable behaviors.
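To make that rigidity concrete, here is a minimal sketch of a locator-driven test. It does not call Selenium, Cypress, or Playwright; the page is a plain dictionary standing in for the DOM, and the names `PAGE_V1`, `PAGE_V2`, `find_by_id`, and `login_test` are all made up for illustration.

```python
# Toy stand-in for a rendered page: element id -> element text.
# A real suite would query a browser through a framework instead.
PAGE_V1 = {"login-btn": "Log in", "username": "", "password": ""}
# The same page after a release that renamed one button id.
PAGE_V2 = {"signin-btn": "Log in", "username": "", "password": ""}


class ElementNotFound(Exception):
    """Raised when a locator matches nothing on the page."""


def find_by_id(page: dict, element_id: str) -> str:
    # Exact-match lookup: the locator either matches perfectly or fails.
    if element_id not in page:
        raise ElementNotFound(element_id)
    return element_id


def login_test(page: dict) -> str:
    # Fixed sequence of steps, each tied to a hard-coded locator.
    find_by_id(page, "username")
    find_by_id(page, "password")
    find_by_id(page, "login-btn")
    return "passed"


def run(page: dict) -> str:
    try:
        return login_test(page)
    except ElementNotFound as missing:
        return f"failed: no element with id '{missing}'"


print(run(PAGE_V1))
print(run(PAGE_V2))
```

The first run passes. The second fails on the renamed button even though the page still behaves the same way for a user, which is the kind of breakage that turns into maintenance work.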

What is AI-Native Testing?

AI testing represents the next evolution of automation testing, where AI agents adapt, learn, and make intelligent decisions, moving from instruction-based to intelligence-based validation.

Instead of rigid scripts, AI testing platforms understand context through Agentic QA, where AI agents act as autonomous testing assistants that use natural language processing to interpret test requirements.

These agents automatically find alternative element selectors using computer vision and pattern recognition, analyzing relationships, visual features, and positioning patterns to maintain tests without human intervention.

AI-native testing doesn’t fail when applications change; it automatically adapts by learning from each test run, identifying patterns in application behavior and user workflows to improve future test performance.

AI Testing vs Traditional Testing: Business Impact at a Glance

| Business Impact Area | Traditional Automation Testing | AI Testing |
|---|---|---|
| Resource Allocation | High upfront scripting costs, ongoing maintenance overhead | Lower setup costs, automated maintenance |
| Team Productivity | More time fixing tests than creating them | Focus on strategy and exploration |
| Time to Market | Delays from brittle tests and manual updates | Faster releases through self-healing |
| Maintenance Burden | Exponential growth with complexity | Minimal maintenance through adaptation |
| Technical Debt | Creates mounting overhead | Reduces debt through adaptive capabilities |
| Test Creation | Only developers and testers can write scripts | Anyone can create tests in natural language |

Challenges with Traditional Automation Testing

Despite decades of evolution, traditional automation testing continues to face fundamental challenges that limit its effectiveness and increase costs.

These challenges help explain why many organizations are exploring AI-native alternatives:

1. Constant Maintenance Requirements

Since automation scripts are hard-coded, every update to the Application Under Test (AUT) requires an update to the script. When the AUT changes, the identifiers in existing scripts break immediately.

Even a simple button ID update can break multiple tests.

According to LambdaTest’s Future of Quality Assurance Survey, over 40% of participants spend more than 20% of their time fixing unreliable tests and maintaining test environments.

2. High Technical Barrier to Entry

Traditional automation requires programming knowledge and an understanding of complex frameworks, even when you use no-code tools.

In practice, only developers and experienced testers can create and maintain automation scripts, which limits team participation and creates bottlenecks in the testing process.

3. Brittle Test Architecture

Most traditional tests fail when applications change due to rigid element locators and fixed test paths.

With multiple tests across multiple devices, this brittleness becomes overwhelming, forcing teams to maintain two complex systems: the application they’re building and the test automation that checks it.

4. Limited Scalability

As application complexity grows, traditional automation becomes the bottleneck for scalability.

What once required a couple of tests to release new features now needs dedicated resources to write and maintain scripts. And the requirements continue to grow exponentially as you add more features.

5. Slow Test Creation Process

Creating comprehensive test coverage requires extensive manual scripting for each user journey, making test creation time-consuming and resource-intensive. Traditional tests simply cannot keep up with the pace at which features are expected to move.

How AI Testing Solves Traditional Automation Challenges

AI-native testing addresses each of these pain points directly. Here’s how AI testing changes the picture:

1. Self-Healing Tests Eliminate Maintenance

AI-native testing automatically recognizes changed locators on the DOM and updates the test to avoid failures. If timing issues cause failures, it also adjusts wait times automatically.

When an element changes, the AI matches it against the updated page and carries on with the run instead of failing. This self-healing mechanism is a major driver of AI testing adoption.
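The idea can be sketched in a few lines. This is a simplified illustration, not how any particular platform implements self-healing: real tools draw on signals such as visual features and element position, while this version only compares a couple of stored attributes. The `Locator` class, the `fingerprint` field, and the two-attribute threshold are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Locator:
    element_id: str  # primary locator
    # Attributes recorded on the last passing run (assumed to be available).
    fingerprint: dict = field(default_factory=dict)


def find(page: List[dict], locator: Locator) -> Optional[dict]:
    # 1. Try the primary locator first.
    for element in page:
        if element.get("id") == locator.element_id:
            return element

    # 2. Fall back to the element sharing the most fingerprint attributes.
    def score(element: dict) -> int:
        return sum(element.get(k) == v for k, v in locator.fingerprint.items())

    best = max(page, key=score, default=None)
    if best is None or score(best) < 2:  # require at least two matching attributes
        return None

    # 3. "Heal": remember the new id so later runs take the fast path.
    locator.element_id = best.get("id", locator.element_id)
    return best


page_after_release = [
    {"id": "signin-btn", "tag": "button", "text": "Log in"},
    {"id": "help-link", "tag": "a", "text": "Help"},
]
login = Locator("login-btn", {"tag": "button", "text": "Log in"})
print(find(page_after_release, login), login.element_id)
```

Here the old `login-btn` id no longer exists, so the lookup falls back to the button whose tag and text still match and updates the stored id. The threshold matters: set it too low and the test may "heal" onto the wrong element and pass for the wrong reason.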

2. Natural Language Test Creation

Beyond eliminating maintenance, AI testing democratizes test creation itself.

LambdaTest’s KaneAI demonstrates how testers can write steps in a natural language like “Click the Login button” or “If a popup appears, then click the submit button,” and the AI agent understands and executes them.

This allows anyone to create tests without programming knowledge.
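As a rough illustration of what "understanding" a step involves, the toy parser below turns the two example sentences into structured actions. It is deliberately simplistic: an AI agent interprets free-form language with a language model, whereas this sketch only matches a small, hypothetical grammar with regular expressions. The action names and dictionary shape are assumptions for the example.

```python
import re
from typing import Optional

# Hypothetical step grammar: (pattern, action name).
PATTERNS = [
    (re.compile(r"^click the (?P<target>.+?) button$", re.I), "click"),
    (re.compile(r'^type "(?P<value>.+)" into the (?P<target>.+?) field$', re.I), "type"),
]
CONDITIONAL = re.compile(r"^if (?P<condition>.+?),? then (?P<step>.+)$", re.I)


def parse_step(text: str) -> Optional[dict]:
    """Turn one plain-English step into a structured action, or None if unknown."""
    text = text.strip().rstrip(".")
    conditional = CONDITIONAL.match(text)
    if conditional:
        # Parse the inner step recursively and wrap it in a condition.
        inner = parse_step(conditional["step"])
        if inner is None:
            return None
        return {"action": "if", "condition": conditional["condition"], "then": inner}
    for pattern, action in PATTERNS:
        match = pattern.match(text)
        if match:
            return {"action": action, **match.groupdict()}
    return None


print(parse_step("Click the Login button"))
print(parse_step("If a popup appears, then click the submit button"))
```

Anything outside the grammar returns `None`, which is exactly the limitation that language-model-based agents are meant to remove.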

3. Advanced Test Orchestration

Platforms like LambdaTest’s HyperExecute, an AI-native test orchestration platform, actively optimize tests based on previous user interactions and test executions.

HyperExecute users like Transavia have seen their tests run up to 70% faster than on traditional grids. The platform offers smart test distribution, automatic retries, real-time logs, and seamless CI/CD integration.

The system learns from each test run, identifying patterns in application behavior and user workflows to improve future test performance.
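One way run history can feed into distribution is sketched below. The article does not describe HyperExecute's actual scheduling logic, so this uses a standard greedy heuristic as a stand-in: sort tests by their historical duration, longest first, and always hand the next test to the least-loaded worker. The test names and timings are invented.

```python
import heapq


def distribute(durations: dict, workers: int) -> list:
    """Assign tests to workers, longest first, always to the least-loaded worker."""
    # Heap entries: (total seconds assigned so far, worker index).
    heap = [(0.0, i) for i in range(workers)]
    plan = [[] for _ in range(workers)]
    ordered = sorted(durations.items(), key=lambda kv: kv[1], reverse=True)
    for name, seconds in ordered:
        load, index = heapq.heappop(heap)
        plan[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return plan


# Hypothetical average durations (seconds) from earlier runs.
history = {"checkout": 90, "search": 60, "login": 30, "profile": 30}
print(distribute(history, workers=2))
```

With these numbers, one worker gets `checkout` and `profile` and the other gets `search` and `login`, so the whole suite finishes in about 120 seconds instead of the 210 it would take on a single worker.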

4. Model Context Protocol (MCP) Integration

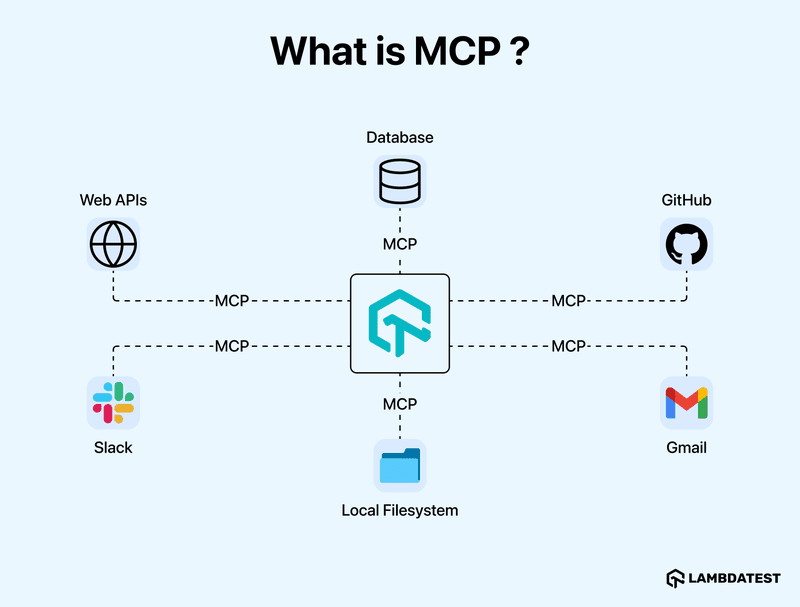

You can’t test in isolation. To achieve seamless integration across testing ecosystems, AI testing platforms need standardized communication protocols.

AI testing platforms use the Model Context Protocol (MCP) to communicate with testing tools through standardized interfaces, eliminating custom integrations. Think of MCP as a “USB-C for AI integrations,” allowing AI assistants to connect with diverse software tools through a standardized interface.

For instance, HyperExecute MCP Server dramatically simplifies and accelerates automated testing workflows by using the MCP. It enables seamless integration between AI assistants and testing environments, reducing setup time from hours to minutes.
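Under the hood, MCP messages are JSON-RPC 2.0 requests, with methods such as `tools/list` to discover what a server offers and `tools/call` to invoke a tool. The sketch below only builds those messages; it does not open a connection, and the tool name `get_job_status` and its `job_id` argument are hypothetical placeholders rather than tools the HyperExecute MCP Server is known to expose.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids must be unique per session


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def list_tools() -> str:
    # Ask the server which tools it offers.
    return mcp_request("tools/list")


def call_tool(name: str, arguments: dict) -> str:
    # Invoke one tool by name with structured arguments.
    return mcp_request("tools/call", {"name": name, "arguments": arguments})


print(list_tools())
# Hypothetical tool name and argument, for illustration only.
print(call_tool("get_job_status", {"job_id": "example-job"}))
```

Because every server speaks the same message format, an AI assistant needs one client implementation rather than a custom integration per tool.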

5. Real-Time Intelligence and Monitoring

To complete the AI testing ecosystem, platforms provide comprehensive visibility and insights throughout the testing process.

HyperExecute’s integrated Job Info Tool delivers immediate updates on test executions, which simplifies progress tracking. Integrated AI agents built on Agentic RAG technology also surface relevant answers from HyperExecute’s documentation, providing immediate assistance when needed.

Beyond test automation, AI in data integration also plays a vital role in preparing and aligning test environments with real-world data, enabling smarter, context-aware testing.

Why Are Enterprises Moving Towards AI Testing over Traditional Testing?

Given these comprehensive solutions to traditional automation challenges, it’s clear why enterprises are rapidly shifting toward AI-native testing approaches. The business benefits extend far beyond technical improvements:

Immediate Cost Reduction and ROI

AI testing flips the cost equation of traditional automation.

While traditional automation demands massive upfront investment in writing scripts and creates ongoing maintenance overhead, AI testing focuses on configuration rather than extensive scripting, with maintenance becoming largely automated.

For instance, Bajaj Finserv Health achieved a 5-hour testing time reduction using AI-native orchestration.

Similarly, Noibu gained a 100% efficiency increase, 4x faster deployment, and a 400% improvement in developer feedback time.

Competitive Advantage Through Speed

Organizations sticking to traditional automation generally see rising costs and decreasing agility compared to organizations that have moved to AI-native testing.

Early adopters of AI testing can quickly capture compounding advantages while competitors remain stuck in evaluation phases.

The competitive gap widens daily as AI testing becomes more sophisticated while traditional approaches become more expensive to maintain.

Future-Proofing Testing Infrastructure

Smart enterprises also recognize AI testing as a strategic investment in their long-term testing capabilities.

Current AI testing platforms already serve as foundations for fully autonomous testing systems requiring minimal human input beyond strategic guidance.

Future systems will understand application architecture, business logic, and user intentions well enough to generate complete test strategies autonomously.

AI testing platforms increasingly integrate with development tools, providing real-time feedback about code quality and potential issues before deployment.

Strategic Business Intelligence

AI testing generates valuable insights about application quality, user behavior, and system performance that directly inform product decisions, development priorities, and business strategy. Smart organizations position themselves to leverage these broader strategic benefits as capabilities mature, moving beyond quality assurance to comprehensive business intelligence.

Skills Development and Market Value

According to PwC, AI skills command a 56% wage premium in the market.

Upskilling existing employees in AI testing is more cost-effective than hiring external talent, while internal employees are eager to learn skills that could help them get better salaries down the line.

This creates a win-win scenario for both organizations and their testing teams.

The Test Maintenance Problem Solved Itself with AI

Testing teams used to spend most of their time fixing broken scripts. Now, AI agents auto-fix broken scripts and communicate with your entire testing stack through MCP.

The teams using this approach are no longer maintaining tests. Instead, they’re building features while their tests work automatically in the background.

You could continue debugging Selenium tests or join the future of testing with LambdaTest’s AI-native testing suite.
