Context Engineering Part 1: Why AI Agents Forget

Srinivasan Sekar

Posted On: October 17, 2025

26 Min

AI agents often struggle with memory limitations, causing errors, inconsistent outputs, and overlooked details. Context Engineering addresses this challenge by defining what AI should remember, how it retrieves information, and how it manages context efficiently.

Part 1 of this Context Engineering series explains why AI agents forget, walks through the four common failure modes, and introduces two practical strategies, WRITE and SELECT, for structuring, storing, and retrieving information. Together, these approaches support reliable reasoning, accurate outputs, and scalable performance across complex, multi-step AI tasks.

Overview

Context Engineering is the practice of structuring, selecting, and managing the information that an AI model “sees” at any given time, so it can make accurate, relevant, and reliable decisions.

Why Does Context Engineering Matter for AI Agents?

AI models often fail because they cannot reliably recall relevant information. Context Engineering ensures continuity, accuracy, and dependable behavior across dynamic tasks.

- Memory Protection: Context Engineering prevents memory corruption by filtering irrelevant or outdated inputs, ensuring models maintain accurate and reliable information consistently across tasks.

- Consistency: Structured context retrieval aligns AI outputs with user intent, reducing errors and producing consistent, predictable responses across multiple sessions or interactions.

- Accuracy: By prioritizing high-value data within limited context windows, Context Engineering improves output precision and reduces mistakes caused by irrelevant or noisy information.

- Scalability: Well-designed context management allows AI to integrate short-term and long-term memory seamlessly, supporting reliable operation across complex, multi-step tasks or projects.

- Reliability: Proper Context Engineering transforms unpredictable AI behavior into consistent, context-aware performance, enabling models to respond accurately to evolving inputs and user requests.

What Are the Four Pillars of Context Engineering?

The Four Pillars provide a framework for managing information flow, ensuring AI agents remain accurate, relevant, and reliable at every stage of reasoning.

- WRITE: The AI stores only verified, structured information, ensuring memory consistency, preventing errors, and enabling reliable reuse across multiple tasks or sessions.

- SELECT: Relevant context is identified and retrieved efficiently, limiting distractions, optimizing decision-making, and ensuring the model focuses only on critical information.

- COMPRESS: Information is condensed without losing essential details, reducing redundancy, improving storage efficiency, and enabling faster access while preserving context accuracy.

- ISOLATE: Critical data and context are separated from irrelevant or volatile information, preventing interference, protecting integrity, and ensuring reliable reasoning across tasks and sessions.

The AI Memory Problem: An Introduction

Imagine you’re at a restaurant, and the waiter has a remarkable memory. They recall:

- Every dish on the 50-page menu.

- Every conversation with customers from the past week.

- Every special request anyone has made.

- The ingredients in each dish.

- Every order and table number.

- Every available payment method.

Sounds wonderful, doesn’t it? The problem is that when you ask, “What’s good for lunch?” the waiter freezes. They have so much information that they can’t focus on your simple question. Without proper Context Engineering, this is exactly what happens to AI agents.

AI agents are getting smarter and taking on more complicated tasks, but they have a big problem: their “working memory” is limited. Like the overwhelmed waiter, AI models can hold only so much information in their active context at any one time. Overload them, and they slow down, make mistakes, or stop working altogether.

Context Engineering is the field that deals with controlling what AI agents remember, forget, and find at each step of their work.

What Is Context Engineering?

AI researchers define Context Engineering as the delicate art and science of filling the context window with just the right information for the next step.

To understand this better, let’s look at what “context” means in AI:

Understanding the Context Window

A context window is the maximum amount of text, measured in tokens, that a Large Language Model (LLM) can “pay attention to” when generating a response.

It works like your computer’s memory setup:

- The CPU is the LLM itself, which provides the reasoning power.

- The RAM is the working memory (the context window).

- The hard drive is long-term storage (documents and external databases).

As Andrej Karpathy explained, “LLMs are like a new kind of operating system. The LLM is like the CPU and its context window is like the RAM.”

What Goes Into Context?

AI context usually has three parts:

- Instructions
  - System prompts
  - Few-shot examples
  - Tool descriptions
  - Response format requirements
  - Behavioral guidelines

- Knowledge
  - Domain facts
  - Company policies
  - Historical data
  - User preferences
  - Retrieved documents

- Tools
  - Available function descriptions
  - API specifications
  - Previous tool call results
  - Error messages and feedback
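To make the three parts concrete, here is a minimal Python sketch of how an agent might assemble them into a single context before each model call. The `ContextParts` structure, the section headings, the hypothetical `search_flights` tool, and the four-characters-per-token estimate are all illustrative assumptions, not a real tokenizer or any particular framework’s API.

```python
from dataclasses import dataclass, field


@dataclass
class ContextParts:
    """The three parts of an agent's context (illustrative structure)."""
    instructions: list[str] = field(default_factory=list)  # system prompt, examples, format rules
    knowledge: list[str] = field(default_factory=list)     # facts, policies, retrieved documents
    tools: list[str] = field(default_factory=list)         # tool descriptions, prior tool results


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    # A real system should count tokens with the model's own tokenizer.
    return max(1, len(text) // 4)


def assemble_context(parts: ContextParts) -> str:
    """Join the three parts into one prompt string, one labeled section each."""
    sections = [
        ("INSTRUCTIONS", parts.instructions),
        ("KNOWLEDGE", parts.knowledge),
        ("TOOLS", parts.tools),
    ]
    blocks = []
    for title, items in sections:
        if items:  # skip empty sections so they don't waste tokens
            blocks.append(f"## {title}\n" + "\n".join(f"- {item}" for item in items))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    parts = ContextParts(
        instructions=["You are a travel assistant.", "Answer in JSON."],
        knowledge=["User's favourite airline: Delta.", "Budget: $500 maximum."],
        tools=["search_flights(origin, destination, month): returns fares  # hypothetical tool"],
    )
    context = assemble_context(parts)
    print(context)
    print("Estimated tokens:", estimate_tokens(context))
```

Everything the model “knows” for a given step is whatever ends up in that string, which is why the rest of this post focuses on deciding what goes into it.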

- 🟨 Yellow sticky: “Don’t forget: Delta is the user’s favourite airline.”

- 🟦 Blue sticky: “Important: The budget is $500 at most.”

- 🟩 Green sticky: “To Do: Look up the prices of flights for October”

- When they learn something new, they should write it down on a sticky note.

- When you need to remember something, read old sticky notes.

- To understand what the sticky notes say, read them all. The AI doesn’t just remember everything; it writes things down and looks them up when it needs to.

- “Sarah likes her coffee with no sugar and no cream.”

- “Sarah can’t eat peanuts because she’s allergic to them.”

- “Blue is Sarah’s favorite colour.”

- “We fixed the login problem last Tuesday by changing the password.”

- “We set up the new database two weeks ago.”

- “We tried approach A last month, but it didn’t work.”

- Step 1: Combine the sugar and flour.

- Step 2: Put in the milk and eggs.

- Step 3: Put in the oven at 350°F for 30 minutes.

- Schema-Driven: Use structured formats (JSON, databases), not free text.

- Time-Stamped: Track when information was added.

- Tagged: Enable filtering by category, importance, freshness.

- Versioned: Track changes to memories over time.

- Queryable: Support efficient lookup and retrieval.

- Take all 10,000 books to your desk.

- Try to read them all at once.

- Feel overwhelmed and lost.

- Take a long time to find the answer.

- Put a label on each book that says “Cooking”, “History”, “Science”, etc.

- Make a card catalogue that works like a magic search index.

- Every card points to books that are related.

- Question: “Chocolate chip cookie recipe?”

- The magic catalogue says, “Check the ‘Baking Cookbook’ on shelf 5!”

- Grab ONLY that one book (not all 10,000!).

- Read only the cookie section (not the whole book).

- Find the recipe.

- Give the answer.

- Friend 1 (Semantic Search): “Did you lose something round and red? I It might be in the toy box with other balls!”

- Understands meaning and finds similar things

- Friend 2 (Keyword Search): “You said ‘red ball’? I’ll look for anything labelled ‘red’ or ‘ball’!”

- Looks for exact words and labels

- Together: They put their results together and show you the best ones!

- Friend 1 found three types of balls: a red ball, a red bouncy ball, and a rubber ball.

- Friend 2 found: a red ball, a ball pit, and a red balloon.

- Combined: red ball (both found it!), bouncy ball, rubber ball < Best results!

- You only read that one sentence.

- “…and then she opened the door.”

- Wait… who is “she”? What door? Why?

- Read the page before (page 41): “Sarah walked nervously toward the old house…”

- Read the target page (page 42): “…and then she opened the door.”

- Read the page after (page 43): “Inside, she found the mysterious box…”

- Pepperoni ✓ (good!)

- Chocolate chips ✗ (weird on pizza…)

- Mushrooms ✓ (good!)

- Gummy bears ✗ (definitely not!)

- Cheese ✓ (perfect!)

- Pepperoni: 9/10 ⭐⭐⭐⭐⭐

- Cheese: 10/10 ⭐⭐⭐⭐⭐

- Mushrooms: 8/10 ⭐⭐⭐⭐

- Chocolate chips: 1/10 ⭐

- Gummy bears: 0/10

- Water bottle (2 pounds) → Always pack this!

- Your phone (1 pound) → Must have!

- Current weight: 3 pounds

- Lunch box (2 pounds) → Got room? Yes! Pack it.

- Sunscreen (0.5 pounds) → Got room? Yes! Pack it.

- Current weight: 5.5 pounds.

- Comic book (1 pound) → Still have room? Yes!

- Frisbee (0.5 pounds) → Still have room? Yes!

- Skateboard (4 pounds) → Will this fit? No! (would make it 11 pounds).

- Current weight: 7 pounds.

- Search through ALL toys (dolls, blocks, cars, puzzles, stuffed animals…).

- Takes forever!

- Find 100 toys, most not even cars.

- Filter 1: “Only show me cars” (not dolls, not blocks).

- Now looking at 20 cars instead of 1000 toys.

- Filter 2: “Only red ones” (not blue, not yellow).

- Now looking at 5 red cars.

- Filter 3: “Only LEGOs” (not Hot Wheels, not wooden cars).

- Found it! 1 red LEGO car.

- Faster: Searched 5 items instead of 1000.

- More accurate: Every result is relevant.

- Respects rules: Won’t show you toys that belong to your sibling.

- Fresh: Can filter to “only toys bought this month”.

- Try to load all 10,000 pages → Context overflow.

- Load random pages → Wrong answers.

- Load recent pages → Misses critical info.

- Core functionality rules.

- Platform capabilities.

- Security requirements.

- Feature specifications.

- Integration guidelines.

- API references.

- Current workflow state.

- User preferences.

- Active task details.

- Recent actions.

- Generated outputs.

- Feedback received.

- Short-term (Session Memory): Tracks the current workflow, decisions made, and progress.

- Long-term (Cross-Session Memory): Learns user patterns, common workflows, and domain-specific knowledge.

- Analyze the task → Determine what information categories are needed.

- Fetch relevant subset → Use semantic search and metadata filtering.

- Load minimal context → Only what’s necessary for current step.

- Cache for reuse → If the same information is needed again.

- Dramatically improved accuracy across workflows.

- Significant reduction in processing time.

- Better cost efficiency per operation.

- More consistent, reliable outputs.

- Enhanced user satisfaction.

- Context Poisoning → Mistakes get saved and reused.

- Context Distraction → Too much info, AI loses focus.

- Context Confusion → Irrelevant info influences decisions.

- Context Clash → Contradictory info causes inconsistency.

- Use scratchpads for temporary notes.

- Build structured long-term memory (semantic, episodic, procedural).

- Organize information hierarchically.

- Tag and timestamp everything.

- Use RAG for large knowledge bases.

- Apply hybrid search (semantic + keyword).

- Grab context around relevant chunks.

- Rerank for precision.

- Load dynamically by priority.

- Filter by metadata first.

- WRITE it down in organized notes.

- SELECT only what you need right now.

- ❌ Carrying all your textbooks to every class.

- ✅ Bringing only today’s math book to math class.

- Pillar 3: COMPRESS – Making information smaller without losing meaning.

- Hierarchical summarization.

- Sliding windows for conversations.

- Tool output compression.

- When to use lossy vs lossless compression.

- Pillar 4: ISOLATE – Separating concerns for clarity

- Multi-agent architectures (90.2% performance improvement!).

- Sandboxed execution.

- State-based isolation.

- Production Challenges – Real engineering lessons

- Handling errors that compound.

- Debugging non-deterministic systems.

- Deployment strategies.

- Anthropic’s hard-won lessons from production.

- Advanced Patterns – Taking it to the next level

- Context tiering strategies.

- Long-horizon conversation management.

- Smart caching techniques.

- How Long Contexts Fail (and How to Fix Them): Drew Breunig’s essential guide covering the four context failure modes with extensive research citations.

- Context Engineering for AI Agents: A comprehensive guide covering the four pillars (WRITE, SELECT, COMPRESS, ISOLATE).

- Anthropic’s Multi-Agent Research System: Deep dive into building production multi-agent systems.

- DeepMind Gemini 2.5 Technical Report: Context poisoning in game-playing agents.

- Microsoft/Salesforce Sharded Prompts Study: 39% performance drop from context clash.

- Berkeley Function-Calling Leaderboard: Every model performs worse with more tools.

- Daffodil Software Best Practices: Hierarchical organization patterns.

Note

NoteTest your AI agents across real-world scenarios. Try Agent to Agent Testing Today!

Why Context Engineering Matters: The Four Failure Modes?

When context isn’t handled correctly, AI agents break down in certain, predictable ways. Drew Breunig identified four critical failure modes that impact AI agents:

1. Context Poisoning

What happens: AI remembers a hallucination or mistake and keeps it in its memory between interactions.

The DeepMind team documented this problem statement in their Gemini 2.5 technical report while the AI was playing Pokémon:

“An especially egregious form of this issue can take place with ‘context poisoning’ – where many parts of the context (goals, summary) are ‘poisoned’ with misinformation about the game state, which can often take a very long time to undo.”

Real-world scenario:

|

1 2 3 4 5 6 7 8 |

Turn 1: User: "My account number is 12345" AI: "Got it, account 54321" (misheard) AI saves to memory: account_number = 54321 Turn 2 (next day): User: "Check my account" AI: "Looking up account 54321..." (wrong every time) |

Impact: The AI will always work with wrong information until someone fixes its memory. If the poisoned context changes the AI’s goals or strategy, it can “become fixated on achieving impossible or irrelevant goals.”

2. Context Distraction

What happens: AI gets too much information and starts to focus on things that aren’t important instead of the task at hand.

As Drew Breunig explains, “Context Distraction is when the context overwhelms the training.” The Gemini 2.5 team observed this while playing Pokémon:

“As the context grew significantly beyond 100k tokens, the agent showed a tendency toward favoring repeating actions from its vast history rather than synthesizing novel plans.”

Instead of using what it learnt to come up with new plans, the agent became obsessed with doing things it had done before in its long history.

Real-world scenario:

|

1 2 3 4 5 6 7 8 9 10 |

Context includes: - 500 lines of user's purchase history - 200 product descriptions - 50 previous conversations User asks: "What's my current cart total?" AI responds: "You previously bought a blue sweater on March 3rd for $29.99, and before that you looked at red shoes on February 28th..." (Never actually answers the cart total!) |

Impact: The AI gets distracted by accumulated context and either repeats past actions or focuses on irrelevant details instead of addressing the actual query.

3. Context Confusion

What happens: Superfluous context influences responses in unexpected ways.

Drew Breunig defines this as “when superfluous context influences the response”. The problem: “If you put something in the context, the model has to pay attention to it.”

Even more dramatic: when researchers gave a Llama 3.1 8B model a query with all 46 tools in the GeoEngine benchmark, it failed. However, when the team reduced the selection to just 19 relevant tools, the project succeeded – even though both options were well within the 16k context window.

Real-world scenario:

|

1 2 3 4 5 6 7 |

Context includes: "User is from Texas" User asks: "What's a good barbecue sauce recipe?" AI assumes: Texas-style BBQ AI responds: "Here's a great Texas BBQ sauce recipe with..." But user wanted: >Korean BBQ sauce (never specified because seemed obvious to them) |

Impact: The AI gets sidetracked by all the context it has and either does the same thing again or focuses on things that aren’t relevant instead of answering the question.

4. Context Clash

What happens is that too much context changes how people respond in ways that are hard to predict.

Drew Breunig describes this as “when parts of the context disagree.”

Microsoft and Salesforce research documented this brilliantly. They took benchmark prompts and “sharded” their information across multiple chat turns, simulating how agents gather data incrementally. The results were dramatic: an average 39% drop in performance.

Why? Because “when LLMs take a wrong turn in a conversation, they get lost and do not recover.” The assembled context contains the AI’s early (incorrect) attempts at answering before it had all the information. These wrong answers remain in the context and poison the final response.

Real-world scenario:

|

1 2 3 4 5 6 7 |

Context piece 1: "Free shipping on orders over $50" (general policy) Context piece 2: "No free shipping on furniture items" (category exception) Context piece 3: "Holiday sale: Free shipping on everything!" (temporary promo) User: "Do I get free shipping on this $75 chair?" AI: "Uh... yes? No? Maybe? It depends on... wait..." (confused) |

Impact: AI makes wrong guesses based on information that isn’t directly related. The model has to pay attention to even information that isn’t relevant in the context window, which hurts performance.

Anthropic’s research emphasises that “context is a critical but finite resource” that must be managed carefully to avoid these failures.

These four failure modes don’t just appear in research papers – they also show up in production environments every day, especially when multiple agents collaborate or pass work between each other. In these environments, even a small mismatch in shared memory or a context handoff can ripple through the chain, producing inconsistent behavior that’s hard to trace.

Testing each agent in isolation only catches part of the issue. You also need to see how they behave when integrated, when outputs from one become inputs for another, when reasoning chains overlap, or when context updates get out of sync. This is where agent testing becomes essential. It helps surface subtle issues like message drift, state misalignment, and reasoning divergence long before they reach production.

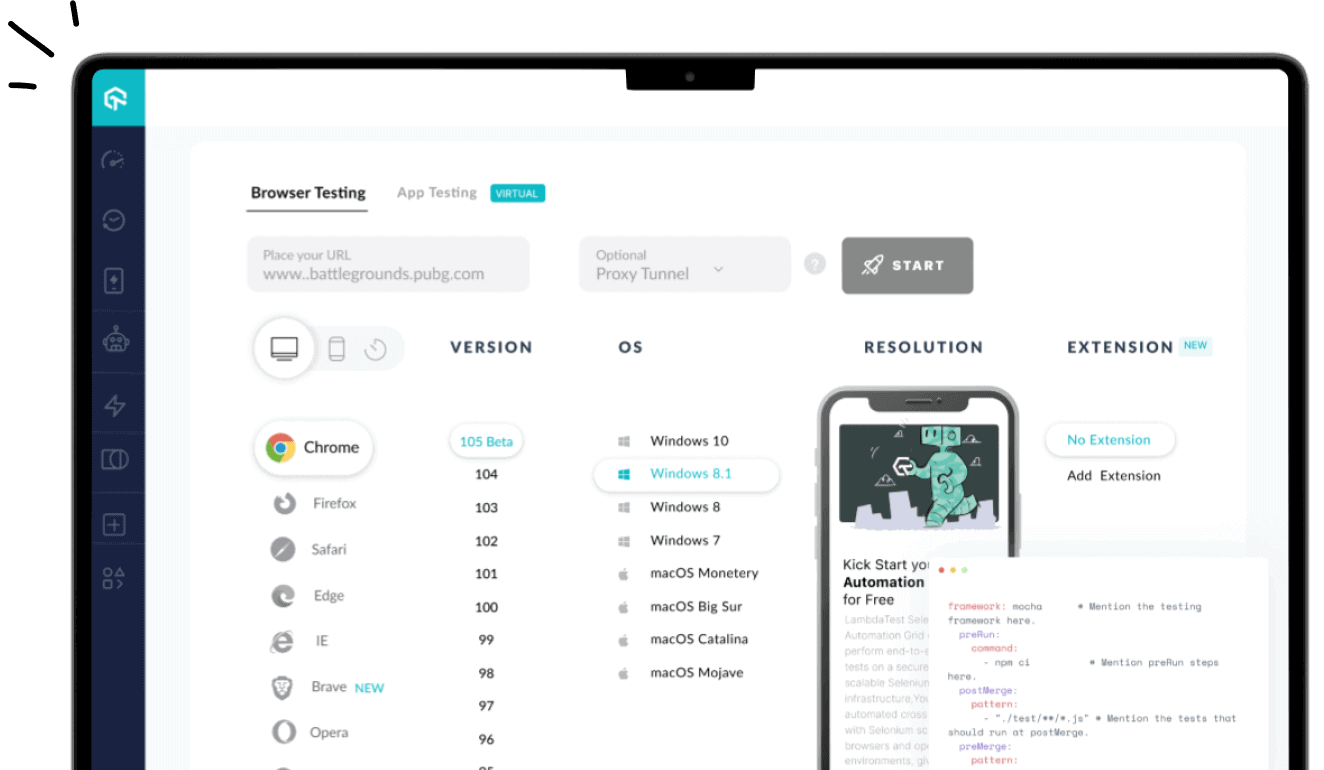

To test AI agents, consider using AI-native agentic cloud platforms like LambdaTest. It offers Agent to Agent Testing where you define multiple synthetic personas and simulate chat, voice, and multimodal interactions. They help you measure the same metrics (bias, hallucination, tone consistency) at scale, under varied input types and in handoffs between agents.

To get started, check out this LambdaTest Agent to Agent Testing guide.

The Four Pillars of Context Engineering

Top AI researchers and companies have agreed upon four basic ways to manage context well. This post will talk about the first two pillars. The last two will be covered in Part 2.

Pillar 1: WRITE (Structured Context Storage)

Core Principle: Don’t make AI remember everything that’s in active memory. Store data in formats that are easy to find and organized outside the context window.

Technique 1.1: Scratchpads

A scratchpad is a place where the AI can write down temporary notes while it works on a task.

How it works:

Think of it like sticky notes on a desk:

The AI can:

Example Usage:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

AI Task: "Analyze 5 research papers and synthesize findings" Turn 1: Read Paper 1 Scratchpad: "paper_1_key_finding: Machine learning improves accuracy by 15%" Turn 2: Read Paper 2 Scratchpad: "paper_2_key_finding: Neural networks require large datasets" Turn 3: Read Paper 3 Scratchpad: "paper_3_key_finding: Ensemble methods reduce overfitting" Turn 4: Synthesize AI reads all scratchpad notes Context: >Only the key findings (not the full papers!) Generates: Comprehensive synthesis |

Anthropic’s multi-agent research system uses this approach extensively: “The Lead Researcher begins by evaluating the method and saving the plan to Memory to maintain context, as exceeding 200,000 tokens in the context window will result in truncation, making it crucial to preserve the plan.”

Technique 1.2: Structured Memory Systems

AI agents need more than just temporary scratchpads; they need long-term memory that lasts between sessions.

There are three types of memory:

Semantic Memory (Facts & Knowledge)

Like knowing things about someone:

The AI keeps track of things about you and what you like. It doesn’t remember when it learnt these things; it only knows that they are true.

Episodic Memory (Past Events)

Like remembering certain times:

The AI can remember certain events and what happened. Like your photo album, each memory has a date and a story.

Procedural Memory (How-To Knowledge)

Like knowing how to make a cake:

The AI knows how to do things. You follow the steps in a recipe book to get something done.

Key Design Principles:

Pillar 2: SELECT (Intelligent Context Retrieval)

The main idea is not to load everything. Get only what you need for the task at hand.

Technique 2.1: Retrieval-Augmented Generation (RAG)

RAG is probably the most important method in modern Context Engineering. It lets AI work with knowledge bases that are much bigger than their context windows.

How RAG Works:

Think about being in a big library with 10,000 books. “What’s the recipe for chocolate chip cookies?” someone asks.

Without RAG (The Dumb Way):

With RAG (The Smart Way):

Step 1: Organize the Library (Done Once)

Step 2: Smart Search (Every Question)

Step 3: Quick Answer

You only had to work with one book instead of ten thousand! That’s RAG: finding the needle without having to carry the whole haystack.

The RAG Pipeline in More Detail

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 |

┌─────────────────────────────────────────────────┐ │ >INDEXING PHASE(Done Once) │ │ │ │ 1. Split documents into chunks │ │ "Large Document" → [Chunk1, Chunk2, ...] │ │ │ │ 2. Create embeddings for each chunk │ │ Chunk1 → [0.23, 0.45, ...] (vector) │ │ │ │ 3. Store in vector database │ │ VectorDB.insert(chunk_id, embedding, text) │ └─────────────────────────────────────────────────┘ ┌─────────────────────────────────────────────────┐ │ RETRIEVAL PHASE (Every Query) │ │ │ │ 1. User asks question │ │ "How do I reset my password?" │ │ │ │ 2. Create question embedding │ │ Question → [0.21, 0.47, ...] (vector) │ │ │ │ 3. Find similar chunks │ │ VectorDB.similarity_search(question_vec) │ │ │ │ 4. Retrieve top K most similar chunks │ │ Returns: [Chunk45, Chunk12, Chunk89] │ │ │ │ 5. Load ONLY those chunks into context │ │ │ │ 6. Generate response │ │ AI uses just 3 chunks (not all 1000!) │ └─────────────────────────────────────────────────┘ |

Advanced RAG Techniques:

Hybrid Search (Keyword + Semantic)

Think of two friends helping you look for a toy you lost:

Using both methods together finds better answers than using just one!

Contextual Chunk Retrieval

Imagine reading a storybook, and you find the perfect sentence on page 42:

Without Context:

With Context (The Smart Way):

Now you understand! Sarah is the character, it’s an old house door, and she’s searching for something.

The lesson is to not just take the exact piece; take a little bit before and after to get the whole picture!

Reranking for Precision

I think about what the best pizza toppings are:

First Search: Someone quickly grabs 10 random toppings from the pantry:

Reranking (The Smart Judge): Now a pizza expert looks at these 10 items and rates them:

Final Selection: Pick the top 5 rated items!

The reranker is like a specialist who double-checks the first search and puts the best items at the top!

RAG Impact:

If done right, RAG can make a big difference in performance. Cornell University research indicates that employing RAG for tool descriptions can enhance tool selection accuracy by threefold in comparison to loading all tools into context.

The Berkeley Function-Calling Leaderboard demonstrates that every model does worse with more tools, even when they are within their context window limits.

Technique 2.2: Dynamic Context Loading

Imagine you’re packing for a day trip, and your backpack can hold 10 pounds:

Priority 1 – Critical (MUST pack)

Priority 2 – Important (Pack if room)

Priority 3 – Nice-to-Have (Fill remaining space)

Final backpack: Water, phone, lunch, sunscreen, comic book, frisbee = 7 pounds (under 10 limit!).

The AI does the same thing! It packs the most important information first, then adds more until the backpack (context window) is nearly full, but never overflowing.

Technique 2.3: Metadata Filtering

The Smart Pre-Filter Strategy

Imagine you’re looking for your red LEGO car in a huge toy room:

Dumb Way

Smart Way (Metadata Filtering)

Why this is brilliant:

The AI does this with information – it filters first, then searches!

Real-World Example: Enterprise Documentation Assistant

Let’s see how WRITE and SELECT work together in practice:

Challenge: The company has 10,000 pages of documentation. The AI assistant should answer employee questions.

Without Context Engineering

With Context Engineering (WRITE + SELECT)

WRITE Phase

|

1 2 3 4 5 6 7 8 9 |

Step 1: Organize all documentation - Create labels and categories - >Add metadata (department, date, topic) - Build search index Step 2: Store in structured format - HR policies → HR database - IT procedures → IT database - Product docs → Product database |

SELECT Phase

|

1 2 3 4 5 6 7 |

Employee asks: "What's our PTO policy?" Step 1: Identify intent → HR question Step 2: Filter metadata → HR database only Step 3: RAG search → "PTO policy" sections Step 4: Retrieve → 3 >relevant pages (not 10,000!) Step 5: Load into context → Just those 3 pages Step 6: Generate answer → Fast and accurate! |

Result: Fast, accurate answers without context overload!

How We’re Applying WRITE and SELECT at LambdaTest?

At LambdaTest, we’re deeply invested in building intelligent AI agents. Here’s how we’re applying these first two pillars (without revealing proprietary details):

Our WRITE Strategy

Our WRITE strategy begins with a Structured Information Architecture, ensuring that every piece of data is organized for clarity and accessibility.

Structured Information Architecture

We organize information hierarchically, following best practices, as outlined in Daffodil Software Engineering Insights.

Level 1: System Policies (rarely change)

Level 2: Feature Documentation (monthly updates)

Level 3: User Context (session-based)

Level 4: Interaction History (turn-based)

Why this works: AI quickly knows where to look. Need a core rule? Check Level 1. Need user preference? Check Level 3.

Memory Management

We implement both short-term and long-term memory:

The AI gets smarter with each interaction, like an experienced colleague!

Our SELECT Strategy

Our SELECT strategy focuses on Intelligent Context Retrieval, carefully identifying and extracting the most relevant information for each task.

Intelligent Context Retrieval

We don’t load everything at once. Instead:

Example Workflow:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

User Task: "Process this technical document" Step 1: Identify document type Context loaded: >Document type classifiers (lightweight) Step 2: Extract key information Context loaded: Extraction patterns for this type Step 3: Generate structured output Context loaded: Output schemas and examples Each step: Different focused context! |

Results We’re Seeing:

While we can’t share exact numbers, we’re experiencing:

The investment in Context Engineering has fundamentally transformed our AI agents from “useful” to “production-grade””.

Key Takeaways From Part 1

Here are some key takeaways summarized from this blog:

The Four Failure Modes (What Goes Wrong)

Understanding the common failure modes in context management is crucial for reliable AI performance. Each type highlights how improperly handled information can undermine results:

The First Two Solutions (What to Do)

The first two solutions focus on capturing and retrieving information effectively. WRITE ensures that knowledge is recorded systematically and organized for easy access, while SELECT emphasizes retrieving the most relevant context to guide AI decision-making.

WRITE

SELECT

The Core Insight

Don’t try to load everything into the AI’s “backpack” at once. Instead:

This is like the difference between:

What’s Coming in Part 2

In Part 2 of Context Engineering series on advanced techniques for production AI, we’ll explore:

Don’t miss Part 2!

Further Reading

These resources expand on the foundations introduced in Part 1, offering practical insights and technical depth for anyone building reliable, context-aware AI agents. Each piece adds a unique perspective on solving memory and context challenges in real-world deployments.

Essential for Understanding Part 1

These foundational resources clarify why context failures happen and how structured engineering principles resolve them. They offer practical insights into maintaining reliable, scalable AI reasoning.

Research Referenced

These studies highlight real-world evidence of context degradation in advanced AI agents. They underscore the need for disciplined context management to sustain accuracy, stability, and consistent performance.

Frequently Asked Questions (FAQs)

Why do AI agents forget information during a conversation?

AI agents forget because their context window has limits. Once that window fills with tokens, older information gets pushed out. The model can only process what remains visible inside that window, so earlier details effectively disappear from its working memory during generation.

Is this forgetting a design flaw in AI models?

No, it is a structural limitation rather than a defect. Language models are built to process a fixed number of tokens. When the limit is reached, older content falls off. Forgetting reflects the model’s operational boundaries, not a functional failure in design.

How do tokens influence what the AI remembers?

Tokens are the units a model reads and writes: whole words, pieces of words, punctuation, and symbols. A model can process only a limited number of tokens in a single request. When a conversation exceeds that limit, older content has to be dropped or condensed to make room for newer text. Managing prompt length and clarity helps preserve vital context and delays the onset of forgetting.
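The truncation behaviour described above can be sketched as a sliding window: keep the newest messages that fit a token budget and drop the oldest. This is a minimal Python sketch; counting whitespace-separated words as tokens is a simplifying assumption (real tokenizers split text into sub-word pieces), and `fit_to_window` is a hypothetical helper, not a library function.

```python
def count_tokens(message: str) -> int:
    # Simplifying assumption: one token per whitespace-separated word.
    return len(message.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits max_tokens.

    Walks backwards from the newest message and stops at the first one that
    would overflow the budget, so the oldest messages are the ones dropped.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My account number is 12345",       # 5 "tokens" under the word-count rule
    "I prefer email over phone calls",  # 6
    "Check my latest invoice please",   # 5
]
print(fit_to_window(history, max_tokens=11))
# → ['I prefer email over phone calls', 'Check my latest invoice please']
```

With an 11-token budget, the earliest message, the one holding the account number, is the one that falls out of the window: exactly the kind of forgetting this answer describes.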

Do larger AI models remember more information?

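Only if they have larger context windows. Parameter count and context window size are separate properties, although many newer models ship with wider windows that let them retain more tokens before older content must be dropped. However, no model remembers indefinitely. Once the window’s maximum token capacity is reached, earlier context is still lost regardless of size, and a bigger window does not guarantee the model will use all of it well.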

Can fine-tuning help prevent forgetting?

Fine-tuning strengthens pattern recognition and improves contextual understanding, but it does not expand memory capacity. The model still forgets once token limits are reached. Persistent memory requires an external storage mechanism capable of recalling information beyond the built-in context window.

Why do AI agents sometimes remember only fragments?

Partial recall occurs when bits of earlier information remain within the visible token window. The model can reference these fragments but loses everything that has scrolled beyond the limit. The result is selective memory, which creates uneven continuity in longer interactions.

How does poor prompt design increase forgetting?

Inefficient prompts waste tokens on unnecessary wording or repetition. This causes critical details to drop from the context window sooner. Concise, structured prompts preserve valuable space and ensure the model focuses on essential information needed to maintain logical conversation continuity.

Why can’t AI agents hold long-term memory like humans?

AI lacks persistent biological memory. Each session is stateless unless an external system records and reintroduces information. Without such integration, the model resets after every interaction. It recalls only what fits inside its active window, losing all prior conversational context.

How does summarization reduce memory loss?

Summarization compresses earlier text into concise, meaningful form. By reintroducing these summaries instead of full transcripts, the AI retains context without overloading tokens. This technique maintains coherence over extended conversations while keeping the total input safely within processing limits.
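A minimal sketch of this idea in Python: once the history grows past a threshold, the older turns are replaced by a single summary message, and only the most recent turns are kept verbatim. The `summarize` argument is a placeholder (assumption) for a call to an LLM or another summarizer; the default `naive_summary` below just keeps the first sentence of each turn so the example runs on its own.

```python
from typing import Callable


def naive_summary(turns: list[str]) -> str:
    # Placeholder summarizer (assumption): keep only the first sentence of each turn.
    # A real system would ask an LLM to write the summary instead.
    first_sentences = [turn.split(". ")[0].rstrip(".") for turn in turns]
    return "Summary of earlier conversation: " + "; ".join(first_sentences) + "."


def compact_history(
    turns: list[str],
    keep_recent: int = 2,
    summarize: Callable[[list[str]], str] = naive_summary,
) -> list[str]:
    """Replace all but the last `keep_recent` turns with a single summary message."""
    if len(turns) <= keep_recent:
        return list(turns)  # nothing old enough to compress yet
    split = len(turns) - keep_recent
    older, recent = turns[:split], turns[split:]
    return [summarize(older)] + recent


turns = [
    "I'm planning a trip to Tokyo. It's for a conference.",
    "My budget is $500. That includes flights.",
    "I prefer Delta.",
    "What flights are available in October?",
]
for message in compact_history(turns):
    print(message)
```

Four turns become three messages: one summary line covering the first two turns, followed by the last two turns unchanged. Keeping recent turns verbatim is a design choice; the latest exchange is usually the one the model most needs word for word.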

What is the most practical way to fix AI forgetting?

Use a combination of summarization and external memory. Summaries maintain short-term coherence, while an external database stores key facts for recall. Feeding this structured context back to the model allows consistent continuity across long interactions without exceeding the token window.
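Here is one way those pieces could fit together, as a hedged Python sketch: key facts live in an external store (a plain dictionary stands in for a database here), and each model call receives those facts, the running summary, and only the latest user message. `FactStore` and `build_prompt` are hypothetical names for illustration; a real system would persist the store and retrieve facts selectively rather than including all of them.

```python
from datetime import datetime, timezone
from typing import Optional


class FactStore:
    """Toy external memory: a dict standing in for a real database (assumption)."""

    def __init__(self) -> None:
        # key -> (value, time the value was stored)
        self._facts: dict[str, tuple[str, datetime]] = {}

    def remember(self, key: str, value: str) -> None:
        # Overwrite any older value so a corrected fact replaces a wrong one,
        # and timestamp it so stale facts can be detected later.
        self._facts[key] = (value, datetime.now(timezone.utc))

    def recall(self, key: str) -> Optional[str]:
        entry = self._facts.get(key)
        return entry[0] if entry is not None else None

    def all_facts(self) -> dict[str, str]:
        return {key: value for key, (value, _stored_at) in self._facts.items()}


def build_prompt(store: FactStore, summary: str, user_message: str) -> str:
    """Combine stored facts, the running summary, and the newest message."""
    fact_lines = "\n".join(
        f"- {key}: {value}" for key, value in sorted(store.all_facts().items())
    )
    return (
        f"Known facts:\n{fact_lines}\n\n"
        f"Conversation so far: {summary}\n\n"
        f"User: {user_message}"
    )


store = FactStore()
store.remember("account_number", "54321")  # misheard value...
store.remember("account_number", "12345")  # ...corrected by the user
store.remember("preferred_airline", "Delta")
print(build_prompt(store, "User is planning an October trip.", "Check my account"))
```

Because the fact store is keyed and overwritable, correcting a misheard value replaces it everywhere it is fed back, which also limits the Context Poisoning problem described earlier.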

Co-Author: Sai Krishna

Sai Krishna is a Director of Engineering at LambdaTest. As an active contributor to Appium and a member of the Appium organization, he is deeply involved in the open-source community. He is passionate about innovative thinking and loves contributing to open-source technologies. Additionally, he is a blogger, community builder, mentor, international speaker, and conference organizer.
