Unit Testing vs Integration Testing: Which One to Choose?

Kavita Joshi

Posted On: September 22, 2025

22 Min

When deciding between unit testing vs integration testing, it’s important to understand how each plays a role in building reliable software. Unit testing focuses on validating individual components to ensure they work correctly in isolation, while integration testing ensures those components interact seamlessly within the software.

AI Overview

Unit testing and integration testing are different but work together to ensure software is reliable and functions correctly.

What is Unit Testing?

Unit testing is the process of verifying individual components or functions of a software application independently. It ensures that each part performs its intended functionality correctly.

What is Integration Testing?

Integration testing focuses on evaluating how different components or modules of a software system interact with each other, ensuring that combined functionality works seamlessly and reliably.

What are the differences between Unit Testing and Integration Testing?

Understanding the differences between unit and integration testing is essential for building reliable software. Each type serves a distinct purpose, focusing on isolated code versus module interactions.

- Scope: Unit Testing verifies one module independently, while Integration Testing checks combined modules, ensuring they collaborate correctly and produce expected results when working together.

- Focus: Unit Testing validates the correctness of internal logic in isolation, while Integration Testing confirms smooth data exchange, interfaces, and software-wide workflows between different components.

- Goal: Unit Testing ensures small code units behave correctly, while Integration Testing ensures collaboration among modules works without failures, covering data flow, APIs, and dependencies.

- Speed: Unit Testing is fast, running in milliseconds with simple logic, while Integration Testing is slower since multiple components, services, or dependencies interact.

Integration tests are generally more complex and are executed after unit tests to validate the overall functionality of the application.

TABLE OF CONTENTS

- What is Unit Testing?

- What is Integration Testing?

- How to Perform Unit Testing?

- How to Perform Integration Testing?

- Unit Testing vs Integration Testing: Key Differences

- How to Optimize Unit and Integration Tests for CI/CD?

- Common Anti-Patterns in Unit and Integration Testing

- Frequently Asked Questions

What is Unit Testing?

Unit testing is a testing technique that validates the functionality of a single unit of code, typically a function or method. Its purpose is to ensure that individual components of the software work as expected.

These tests are typically isolated from external systems such as databases or APIs, which makes them faster and more focused.
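To make the idea of isolation concrete, here is a minimal sketch in plain Java (no test framework, so it runs as-is). The names `TaxRateProvider`, `TaxCalculator`, and the fixed 20% rate are hypothetical and exist only for this illustration; the stub stands in for what might otherwise be a database or API lookup.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical dependency: in production this might query a database or remote API.
interface TaxRateProvider {
    BigDecimal rateFor(String region);
}

// Unit under test: it depends only on the interface, not on a concrete data source.
class TaxCalculator {
    private final TaxRateProvider rates;

    TaxCalculator(TaxRateProvider rates) {
        this.rates = rates;
    }

    BigDecimal calculateTax(BigDecimal amount, String region) {
        return amount.multiply(rates.rateFor(region)).setScale(2, RoundingMode.HALF_UP);
    }
}

class TaxCalculatorCheck {
    public static void main(String[] args) {
        // Stub: always returns a fixed rate, so no external system is touched.
        TaxRateProvider stub = region -> new BigDecimal("0.20");
        TaxCalculator calculator = new TaxCalculator(stub);

        BigDecimal tax = calculator.calculateTax(new BigDecimal("50.00"), "ANY");
        if (!tax.equals(new BigDecimal("10.00"))) {
            throw new AssertionError("expected 10.00 but got " + tax);
        }
        System.out.println("calculateTax check passed");
    }
}
```

Because the calculator receives its dependency through the constructor, the same class can later be wired to a real rate source in an integration test without changing its code.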

When to Perform Unit Testing?

Unit testing should be performed during the development phase, after writing individual functions or methods, to ensure each unit of code works as intended before integration.

Unit testing plays a critical role in ensuring code reliability from the very beginning of development. Knowing when to perform these tests helps developers catch issues early, save time, and maintain high-quality standards throughout the software lifecycle.

- During Development: Write unit tests while developing new features or components, ensuring correctness early, reducing rework later, and building confidence that each module performs exactly as intended.

- Before Code Integration: Perform unit tests before merging into shared repositories, catching errors early, preventing integration issues, and ensuring that collaborative work doesn’t break existing functionality.

- When Refactoring Code: After refactoring, run unit tests to verify that optimizations or structural changes haven’t introduced hidden bugs or unintentionally broken previously working features.

- For New Functionality: Whenever new functions, classes, or modules are introduced, write unit tests to confirm behavior in isolation and ensure additions don’t negatively impact existing logic.

- To Test Small, Isolated Units of Code: Unit testing works best for individual functions or methods, excluding external dependencies, to ensure correctness at the smallest, most manageable level.

- To Check Edge Cases: Apply unit tests for edge cases and boundary conditions, such as null values, empty inputs, or maximum limits, to validate consistent, predictable software behavior.

- When Adding Bug Fixes: After applying bug fixes, create unit tests to confirm the resolution is permanent and guard against recurrence of the same issue in the future.

- For TDD: As part of Test-Driven Development, write unit tests before actual implementation to ensure all new code begins with clear requirements and automated verification.

- For Continuous Integration Pipelines: Automate execution of unit tests in CI/CD pipelines, ensuring every code change is instantly validated, minimizing integration failures, and supporting reliable, stable software delivery.
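The edge-case point in the list above can be sketched as follows. This is a minimal example in plain Java, assuming a hypothetical `Discount.apply` function invented for illustration; in a real project the same checks would normally be written as JUnit test methods.

```java
// Hypothetical unit under test: applies a percentage discount to a price in cents.
class Discount {
    static long apply(long priceInCents, int percent) {
        if (priceInCents < 0) {
            throw new IllegalArgumentException("price must not be negative");
        }
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        return priceInCents - (priceInCents * percent) / 100;
    }
}

class DiscountEdgeCaseChecks {
    // Returns true only if the call is rejected with IllegalArgumentException.
    static boolean rejects(long price, int percent) {
        try {
            Discount.apply(price, percent);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError("failed: " + description);
        }
    }

    public static void main(String[] args) {
        // Boundary values of the valid range
        check(Discount.apply(1000, 0) == 1000, "0% leaves the price unchanged");
        check(Discount.apply(1000, 100) == 0, "100% reduces the price to zero");
        check(Discount.apply(0, 50) == 0, "zero price stays zero");
        // Values just outside the valid range
        check(rejects(1000, -1), "negative percent is rejected");
        check(rejects(1000, 101), "percent above 100 is rejected");
        check(rejects(-1, 10), "negative price is rejected");
        System.out.println("all edge-case checks passed");
    }
}
```

Each check targets a boundary (0, 100) or a value just outside it, which is where off-by-one and validation bugs tend to hide.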

What is Integration Testing?

Integration testing is a testing technique that verifies the interaction between multiple components of a software application. Unlike unit tests, which exercise small, isolated units, integration tests ensure that the different pieces of the application, together with the databases, APIs, or external services they depend on, work together correctly.

When to Perform Integration Testing?

Integration testing should be performed after unit testing, once individual components are developed. It ensures that different units work together as expected when integrated.

Perform integration testing in the following situations:

- After Unit Testing: Once unit tests are completed and individual components are verified, perform integration tests to ensure that these components work together as expected.

- When Connecting Multiple Components: When multiple components or services (e.g., database, API, external software) need to communicate, integration tests are essential to verify their interactions.

- After Refactoring Code with Interdependencies: When refactoring code that involves changes across multiple modules or services, run integration tests to ensure that all affected parts function correctly together.

- For End-to-End Workflows: When workflows span multiple modules, systems, or services, run integration tests to ensure that the complete functionality works as intended.

- When Adding New Features with External Dependencies: If you introduce new features that depend on existing services, databases, or APIs, integration tests help ensure that these dependencies integrate smoothly.

- When Integrating Third-Party Services: When integrating external services (like payment gateways, email services, etc.), perform integration tests to ensure that these services work correctly within your application.

- Before Deployment to Staging or Production: Run integration tests to validate that all software components work together properly before deploying to staging or production environments.

- To Check Data Flow Between Systems: When testing the flow of data between multiple systems (e.g., from a user interface to a database and then back to the UI), integration tests ensure that data is properly transmitted and processed.

- During Continuous Integration (CI) Pipelines: Include integration tests in your CI pipeline to verify that all software components work together after every code change.

- When Encountering Complex Interactions: If your application involves complex interactions, such as multiple microservices or APIs, integration testing is crucial to ensure that they work together seamlessly.

How to Perform Unit Testing?

Unit tests are simple to write, run, and debug, but setting them up can be time-consuming if not automated. There are two primary ways to perform unit testing:

1. Manual Testing: Manual unit testing involves executing each test case step by step without automation tools. While feasible for small projects, this method is time-consuming, repetitive, and error-prone, making it less efficient for modern development practices.

2. Automated Testing: Automated unit testing leverages frameworks and tools to run tests quickly and consistently. You can record, save, and replay tests without manual effort, making it ideal for repetitive test cases and continuous integration.

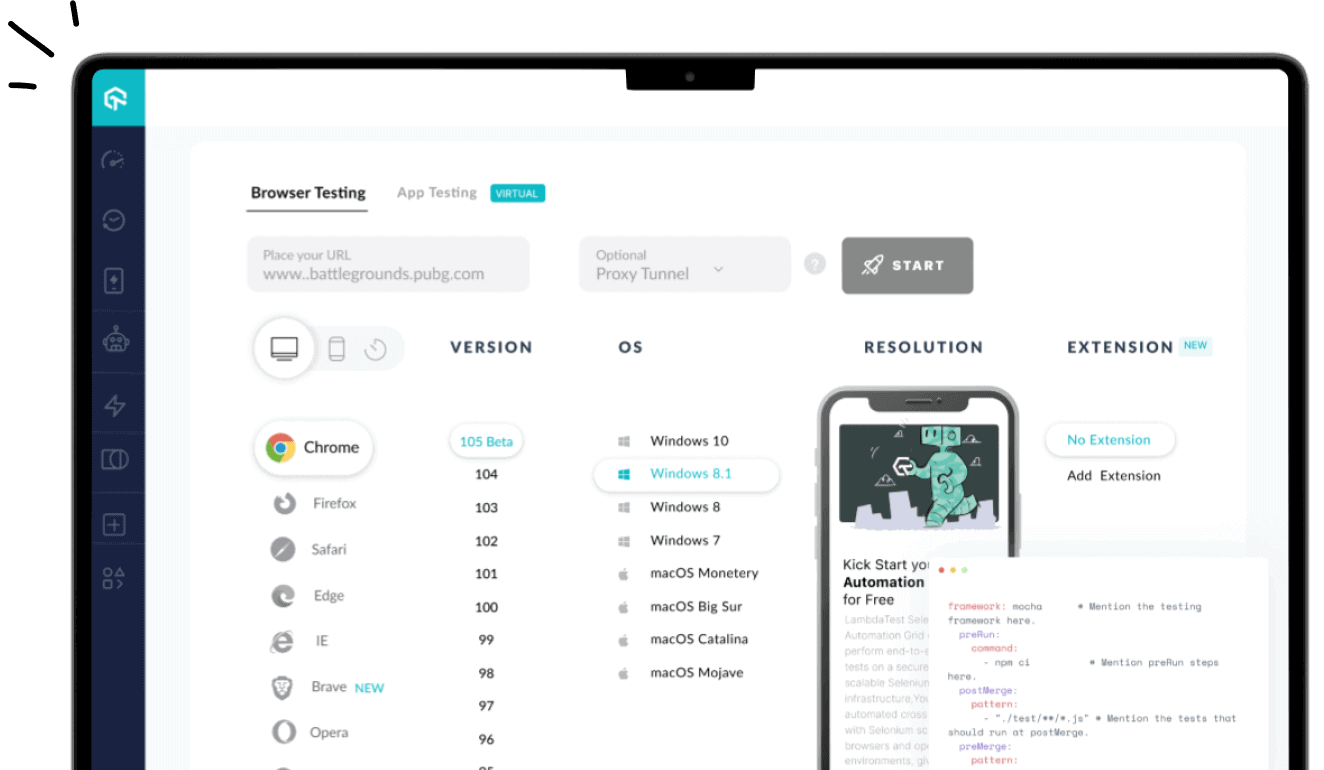

To scale your automated tests efficiently, consider using a test automation cloud platform like LambdaTest. LambdaTest allows you to run your tests across multiple browsers, devices, and operating systems simultaneously, reducing execution time and improving test coverage.

With features like parallel testing, real-time reporting, and seamless integration with CI/CD pipelines, you can accelerate delivery while maintaining high software quality. This approach also eliminates the overhead of managing infrastructure, letting your team focus on building and optimizing tests rather than maintaining environments.

How to Perform Integration Testing?

Integration testing ensures that different modules, services, or components work together as expected. It validates data flow, APIs, and system interactions.

You can perform integration testing in two ways:

- Big Bang Approach: All modules are integrated and tested together. It quickly reveals system-level issues but makes debugging harder.

- Incremental Approach: Modules are integrated and tested step by step (top-down, bottom-up, or sandwich). This helps detect issues earlier and isolate root causes easily.
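The incremental, top-down variant can be sketched in a few lines. This is only an illustration under assumed names (`OrderService`, `InventoryService`, and `InMemoryInventoryService` are hypothetical): the higher-level module is first exercised against a stub, then the stub is swapped for the real lower-level module.

```java
import java.util.HashMap;
import java.util.Map;

// Lower-level module, expressed as an interface so it can be stubbed.
interface InventoryService {
    boolean reserve(String sku, int quantity);
}

// Higher-level module, tested first in a top-down approach.
class OrderService {
    private final InventoryService inventory;

    OrderService(InventoryService inventory) {
        this.inventory = inventory;
    }

    String placeOrder(String sku, int quantity) {
        return inventory.reserve(sku, quantity) ? "CONFIRMED" : "REJECTED";
    }
}

// Real lower-level module, integrated in a later step.
class InMemoryInventoryService implements InventoryService {
    private final Map<String, Integer> stock = new HashMap<>();

    void addStock(String sku, int quantity) {
        stock.merge(sku, quantity, Integer::sum);
    }

    @Override
    public boolean reserve(String sku, int quantity) {
        int available = stock.getOrDefault(sku, 0);
        if (quantity <= 0 || quantity > available) {
            return false;
        }
        stock.put(sku, available - quantity);
        return true;
    }
}

class TopDownIntegrationDemo {
    public static void main(String[] args) {
        // Step 1: exercise OrderService against a stub that always succeeds.
        OrderService withStub = new OrderService((sku, qty) -> true);
        System.out.println(withStub.placeOrder("SKU-1", 5));

        // Step 2: replace the stub with the real module and test them together.
        InMemoryInventoryService inventory = new InMemoryInventoryService();
        inventory.addStock("SKU-1", 3);
        OrderService integrated = new OrderService(inventory);
        System.out.println(integrated.placeOrder("SKU-1", 2)); // enough stock
        System.out.println(integrated.placeOrder("SKU-1", 2)); // only 1 unit left
    }
}
```

If step 2 fails while step 1 passes, the defect is likely in the newly added module or in its interface with `OrderService`, which is the root-cause isolation that the incremental approach is meant to provide.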

The best practices below help keep integration testing systematic, reliable, and effective at identifying issues between modules before they reach production:

- Define Clear Test Scenarios

Create test cases that cover critical workflows spanning multiple modules or services. This ensures you’re not just validating isolated functions but also verifying the complete data flow and interactions that reflect real user journeys.

- Use Realistic Data

Testing with production-like data exposes edge cases and hidden issues that dummy or overly simple data might miss. Realistic datasets help identify format mismatches, null values, and integration failures under actual business conditions.

- Automate Where Possible

Automation tools like Selenium (UI), Postman (APIs), or TestNG (framework-level tests) make integration testing more reliable and repeatable. Automated test suites reduce human effort, minimize errors, and allow continuous testing at scale.

- Leverage CI/CD

Embedding integration tests into CI/CD pipelines ensures every code change is validated before merging or deploying. This early detection of issues reduces integration failures in staging or production, speeding up feedback cycles.

Unit Testing vs Integration Testing: Key Differences

Understanding the key differences between unit testing vs integration testing helps in choosing the right approach based on the testing needs of your application. Below is a comparison that highlights these differences clearly:

| Aspect | Unit Testing | Integration Testing |
| --- | --- | --- |
| Definition | Testing individual functions, methods, or classes in isolation to verify the correctness of internal logic and expected outputs. | Testing interactions between multiple modules, services, or software to ensure they collaborate correctly and that data flows as intended. |
| Scope | Narrow – focuses only on a single unit of code. | Broader – covers multiple components working together. |
| Focus | Validates internal logic, algorithms, and correctness of small isolated code pieces. | Validates data flow, integration points, and software behavior across modules. |
| Dependencies | Uses mocks, stubs, or fakes to isolate the unit from external dependencies. | Uses real or combined dependencies, APIs, or databases for more realistic interaction testing. |
| Granularity | Fine-grained, very detailed tests targeting single methods or classes. | Coarse-grained, higher-level tests covering workflows, modules, or services. |
| Goal | Ensure the correctness of small code units, making debugging easier and faster. | Ensure components interact seamlessly, identifying issues in communication, interfaces, or workflows. |
| Timing | Performed early during development (shift-left approach) alongside coding new features. | Performed after unit testing, once multiple modules are built and ready to work together. |
| Responsibility | Usually handled by developers while writing code. | Handled by developers and QA engineers collaboratively. |
| Tools | JUnit, NUnit, PyTest, Jasmine, Mocha, xUnit. | TestNG, Postman, REST Assured, Selenium, Cypress, SoapUI. |
| Speed | Very fast, executes in milliseconds or seconds since only small modules are tested. | Slower than unit tests due to multiple dependencies, larger data, and external services involved. |
| Cost of Maintenance | Low – changes only affect specific unit tests. | Higher – changes in modules or APIs may break multiple integration tests. |
| Reliability | High reliability for verifying the correctness of small isolated code segments. | High reliability for ensuring real-world workflows function correctly end-to-end. |
| Examples | Testing a calculateTax() function with different input values to verify correct outputs. | Testing an e-commerce checkout flow where cart service, payment service, and order service must work together correctly. |
| When to Perform | During development, refactoring, adding bug fixes, or creating new methods/functions. | After unit testing, when modules are integrated, before deployment, or when validating interactions in CI/CD pipelines. |
| Defect Detection | Detects logical errors, incorrect calculations, or mistakes inside a single function or class. | Detects interface mismatches, incorrect data formats, broken communication between services, or improper database/API handling. |
| CI/CD Role | Provides quick feedback in pipelines by running on every code commit or pull request. | Provides assurance during staging or pre-release phases by validating software-level interactions across environments. |
| Test Data | Often uses small, mock, or dummy datasets to validate isolated logic. | Requires larger, more realistic datasets to simulate actual data exchange across integrated modules. |
| Risk Coverage | Covers risk of internal logic bugs. | Covers risk of integration issues, interface mismatches, and workflow failures. |

Practical Example of Unit Testing

Let’s explore a simple example of unit testing to understand how individual units are tested.

Scenario: Testing a Calculator Function

Consider a simple calculator method that adds two numbers:

```java
public class Calculator {
    public int add(int a, int b) {
        return a + b;
    }
}
```

A unit test for this function would be as follows:

```java
import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.assertEquals;

public class CalculatorTest {

    @Test
    public void testAdd() {
        Calculator calculator = new Calculator();

        // Test addition of two positive numbers
        assertEquals(5, calculator.add(2, 3));

        // Test addition of a positive and a negative number
        assertEquals(0, calculator.add(-1, 1));

        // Test addition of two zeros
        assertEquals(0, calculator.add(0, 0));
    }
}
```

Code Walkthrough:

- This unit test verifies that the add method in the Calculator class performs correctly for different inputs: positive numbers, negative numbers, and zero.

- The test isolates the add method and verifies its correctness independently of other components.

Practical Example of Integration Testing

Now, let’s look at a practical example of integration testing to see how different modules interact, exchange data, and function together.

Scenario: Testing a User Registration Software

You have a registration portal that involves multiple components: an API, a database, and an email service. Here’s how you might write an integration test to verify the registration process.

Let’s assume you have a User class and a UserRepository that stores registered users:

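```java
// User.java
public class User {
    private String name;
    private String email;
    private String password;

    public User(String name, String email, String password) {
        this.name = name;
        this.email = email;
        this.password = password;
    }

    public String getEmail() {
        return email;
    }

    // Remaining getters and setters omitted for brevity
}

// UserRepository.java
import java.util.HashMap;
import java.util.Map;

public class UserRepository {
    // In-memory stand-in for a real database (an assumption made for this example)
    private final Map<String, User> usersByEmail = new HashMap<>();

    public void save(User user) {
        // Save user to the database
        usersByEmail.put(user.getEmail(), user);
    }

    // Lookup used by the integration test to confirm the user was stored
    public User findByEmail(String email) {
        return usersByEmail.get(email);
    }
}
```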

An integration test for this could be written like this:

```java
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.assertNotNull;

public class UserRegistrationTest {

    private UserService userService;
    private UserRepository userRepository;

    @BeforeEach
    public void setup() {
        userRepository = new UserRepository(); // In-memory or mock database setup
        userService = new UserService(userRepository);
    }

    @Test
    public void testUserRegistration() {
        User user = new User("John Doe", "john@example.com", "password123");

        // Simulate the user registration process
        userService.registerUser(user);

        // Verify that the user is saved in the database (e.g., UserRepository)
        assertNotNull(userRepository.findByEmail("john@example.com"));

        // Verify an email is sent (mocking email service)
        // Assuming emailService is mocked, and you verify its behavior
        // emailService.verifySentEmail("john@example.com");

        // In a real scenario, you would check the email and HTTP response as well
    }
}
```

Code Walkthrough:

- This test simulates the user registration process, integrating components such as the UserService and UserRepository.

- It verifies that the user is saved to the database. The email check is only outlined in comments, on the assumption that the email service is mocked.

- This integration test ensures that the registration flow works correctly across components, covering database interaction and other software components.
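The article does not show UserService or an email component, so the following is a self-contained sketch of how the pieces might fit together, written in plain Java so it runs without a test framework. Everything here is an assumption made for illustration: the simplified `User` and `UserRepository`, the `EmailSender` interface, the `RecordingEmailSender` test double, and a `UserService` constructor that takes the email sender as a second argument.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified stand-in for the article's User class.
class User {
    final String name;
    final String email;
    final String password;

    User(String name, String email, String password) {
        this.name = name;
        this.email = email;
        this.password = password;
    }
}

// In-memory stand-in for a database-backed repository (assumption).
class UserRepository {
    private final Map<String, User> usersByEmail = new HashMap<>();

    void save(User user) {
        usersByEmail.put(user.email, user);
    }

    User findByEmail(String email) {
        return usersByEmail.get(email);
    }
}

// Hypothetical abstraction over the email service.
interface EmailSender {
    void sendWelcome(String email);
}

// Test double that records recipients instead of sending real email.
class RecordingEmailSender implements EmailSender {
    final List<String> sent = new ArrayList<>();

    @Override
    public void sendWelcome(String email) {
        sent.add(email);
    }
}

// Hypothetical service that ties the repository and the email sender together.
class UserService {
    private final UserRepository repository;
    private final EmailSender emailSender;

    UserService(UserRepository repository, EmailSender emailSender) {
        this.repository = repository;
        this.emailSender = emailSender;
    }

    void registerUser(User user) {
        if (repository.findByEmail(user.email) != null) {
            throw new IllegalStateException("email already registered");
        }
        repository.save(user);
        emailSender.sendWelcome(user.email);
    }
}

class RegistrationFlowCheck {
    public static void main(String[] args) {
        UserRepository repository = new UserRepository();
        RecordingEmailSender emails = new RecordingEmailSender();
        UserService service = new UserService(repository, emails);

        service.registerUser(new User("John Doe", "john@example.com", "password123"));

        // The two components must agree: the user is stored and exactly one email is recorded.
        if (repository.findByEmail("john@example.com") == null) {
            throw new AssertionError("user was not saved");
        }
        if (!emails.sent.equals(List.of("john@example.com"))) {
            throw new AssertionError("welcome email was not recorded");
        }
        System.out.println("registration flow check passed");
    }
}
```

Using a recording test double for email keeps the check deterministic while still exercising the real interaction between the service and the repository.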

How to Optimize Unit and Integration Tests for CI/CD?

Running unit and integration tests efficiently is key to a fast and reliable CI/CD pipeline. By optimizing test execution and minimizing delays, you can ensure quicker feedback and maintain high-quality standards.

Here’s how to optimize tests for your pipeline.

- Parallel Execution: Run unit and integration tests in parallel across environments, reducing pipeline execution time while ensuring faster feedback loops and quicker detection of potential failures.

- Test Prioritization: Identify critical unit and integration tests, running them earlier in the pipeline to catch major issues quickly, optimizing overall execution efficiency within CI/CD.

- Mocking Services: Use mocks or stubs during unit tests to simulate dependencies, ensuring isolated validation, while reserving real services for integration tests in pipeline environments.

- Containerization: Employ Docker or similar tools to create consistent, reproducible environments where unit and integration tests execute reliably across different CI/CD stages and infrastructure layers.

- Continuous Feedback: Configure pipelines to provide instant feedback on test results, enabling developers to quickly address failing unit or integration tests and maintain workflow momentum.

- Scalable Infrastructure: Leverage cloud-based or distributed test runners to handle large integration suites efficiently, preventing bottlenecks and keeping pipeline execution times within acceptable thresholds.

- Test Data Management: Maintain clean, reusable, and version-controlled test datasets for integration testing, ensuring consistency, repeatability, and reducing failures caused by inconsistent or corrupted data.

- Code Coverage Metrics: Track code coverage across unit and integration tests, focusing on critical modules, and use thresholds in pipelines to maintain high-quality, reliable code standards.
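As a rough illustration of the parallel execution idea from the list above, the sketch below runs independent checks concurrently with a thread pool from the Java standard library. `ParallelCheckRunner` is a hypothetical helper written for this example; real pipelines would normally rely on the parallel features of the test framework or CI system, and parallelism is only safe when tests do not share mutable state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelCheckRunner {
    // Runs independent checks concurrently and returns how many of them failed.
    static int runAll(List<Callable<Boolean>> checks, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            int failures = 0;
            // invokeAll blocks until every check has completed.
            for (Future<Boolean> result : pool.invokeAll(checks)) {
                try {
                    if (!result.get()) {
                        failures++;
                    }
                } catch (ExecutionException e) {
                    failures++; // a check that throws counts as a failure
                }
            }
            return failures;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while running checks", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<Callable<Boolean>> checks = new ArrayList<>();
        checks.add(() -> 2 + 3 == 5);
        checks.add(() -> "abc".toUpperCase().equals("ABC"));
        checks.add(() -> Math.max(1, 2) == 2);
        System.out.println("failures: " + runAll(checks, 3));
    }
}
```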

Common Anti-Patterns in Unit and Integration Testing

Optimizing your unit and integration tests is crucial for maintaining an efficient CI/CD pipeline. Below are common anti-patterns to avoid and how to address them.

- Over-Mocking Dependencies: Excessive mocking in unit tests hides real behavior, creating false confidence and fragile tests that break whenever implementation details change unexpectedly.

Solution: Mock only external services, while validating core logic through real dependencies to ensure stronger, more reliable tests overall.

- Ignoring Edge Cases: Writing tests only for happy paths neglects edge cases, causing undetected defects in both unit and integration tests, especially under unusual data conditions.

Solution: Identify edge scenarios early, create test cases for boundaries, null values, and extreme inputs to strengthen coverage across both unit and integration layers.

- Slow Test Suites: Allowing test suites to grow excessively slow reduces developer productivity, delays feedback in CI/CD pipelines, and discourages frequent execution of vital test checks.

Solution: Continuously refactor tests, run unit tests first, and parallelize integration testing to optimize execution times while preserving comprehensive coverage in CI/CD pipelines.

- Testing Implementation Details: Coupling tests too closely with internal code logic makes them brittle, requiring frequent rewrites whenever code structure changes, without necessarily impacting behavior.

Solution: Write tests based on expected behaviors and outputs instead of implementation specifics, ensuring test resilience despite code refactors or internal structural modifications.

- Inconsistent Test Data: Using unstructured or inconsistent data during integration testing leads to unreliable results, flaky tests, and failures that cannot be easily reproduced or debugged.

Solution: Maintain standardized, version-controlled datasets, employ seed data for reproducibility, and design automated cleanup routines to eliminate environmental inconsistencies across test runs.

- Skipping Cleanup: Neglecting environment or database cleanup after integration tests leaves residual state, causing unpredictable results and failures in subsequent tests sharing the same environment.

Solution: Implement teardown scripts or hooks to reset databases, environments, and services after every run, ensuring clean, reliable states for subsequent testing executions.

- Duplicated Test Logic: Repeating similar test cases across suites increases maintenance effort, introduces redundancy, and makes the testing framework harder to extend or evolve effectively.

Solution: Refactor shared logic into reusable helper functions or libraries, reducing duplication, simplifying maintenance, and promoting a cleaner, more scalable testing framework overall.

- Unclear Test Naming: Poorly named unit or integration tests reduce readability, making it difficult for teams to quickly understand intent, diagnose failures, or maintain test suites.

Solution: Adopt naming conventions that describe input, expected output, and behavior clearly, improving readability, team collaboration, and long-term maintainability of test suites.
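The cleanup and seed-data solutions above can be sketched with a small fixture. This is a minimal example, assuming a hypothetical `FakeDatabase` in place of a real datastore; the fixture seeds known data on setup and clears everything on close, so teardown happens even if the test body throws.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical shared resource that integration tests write to.
class FakeDatabase {
    private final Map<String, String> rows = new HashMap<>();

    void insert(String key, String value) {
        rows.put(key, value);
    }

    int rowCount() {
        return rows.size();
    }

    void clear() {
        rows.clear();
    }
}

// Fixture that seeds known data and guarantees cleanup through try-with-resources.
class DatabaseFixture implements AutoCloseable {
    private final FakeDatabase database;

    DatabaseFixture(FakeDatabase database) {
        this.database = database;
        database.insert("seed-user", "alice@example.com"); // known seed data
    }

    FakeDatabase database() {
        return database;
    }

    @Override
    public void close() {
        database.clear(); // teardown: leave no residual state for the next test
    }
}

class CleanupDemo {
    public static void main(String[] args) {
        FakeDatabase shared = new FakeDatabase();

        try (DatabaseFixture fixture = new DatabaseFixture(shared)) {
            fixture.database().insert("order-1", "pending");
            System.out.println("rows during test: " + shared.rowCount());
        }

        System.out.println("rows after teardown: " + shared.rowCount());
    }
}
```

In JUnit, the same idea is usually expressed with setup and teardown lifecycle methods rather than a hand-written fixture.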

Conclusion

Unit testing and integration testing are two crucial approaches in modern software development. While unit tests ensure that individual components of your application function as expected, integration tests confirm that those components work together seamlessly.

By understanding when and how to use each of these tests, you can create a robust and efficient testing strategy that improves both the development process and the quality of your application.

Frequently Asked Questions (FAQs)

What is the difference between Unit Testing and Integration Testing?

Unit testing focuses on testing individual components or functions of a software application in isolation. The goal is to ensure that each small piece of code (like a method or class) performs as expected. Integration testing, on the other hand, checks how multiple modules or services interact when combined. It ensures that the flow of data and functionality across different parts of the software works correctly.

Which comes first, Unit Testing or Integration Testing?

Unit testing usually comes first. Developers write and run unit tests during development to verify the correctness of small building blocks of the application. Once those units are stable, integration testing is performed to validate how these blocks work together. Running integration tests without solid unit tests can produce failures caused by trivial code errors, which are harder to trace at the integration level.

Why is Unit Testing important if we also do Integration Testing?

Unit tests act as the first line of defense. They catch bugs at the earliest stage, making debugging faster and cheaper. Without unit tests, integration tests may frequently fail due to small, local issues, making it harder to diagnose the root cause. Together, unit tests ensure quality at the micro level, while integration tests validate quality at the software level.

Can Unit Testing replace Integration Testing?

No. Unit tests and integration tests complement each other. While unit tests confirm that each function works in isolation, they cannot guarantee that different modules will work together properly. Integration testing is crucial for verifying workflows such as database connections, API requests, and cross-service communication. Skipping integration tests could result in software that works in parts but fails as a whole.
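A small sketch shows how software can work in parts but fail as a whole. The two modules below are hypothetical and each behaves exactly as its own contract says, yet combining them naively produces a wrong result because one returns cents and the other expects dollars.

```java
import java.util.Locale;

// Hypothetical module A: returns a price in cents.
class PriceService {
    static long priceOf(String sku) {
        return 1999; // 19.99 expressed in cents
    }
}

// Hypothetical module B: formats an amount given in dollars.
class InvoiceFormatter {
    static String format(double dollars) {
        return String.format(Locale.ROOT, "$%.2f", dollars);
    }
}

class MismatchDemo {
    // Naive wiring: passes cents where dollars are expected.
    static String naiveInvoice(String sku) {
        return InvoiceFormatter.format(PriceService.priceOf(sku));
    }

    // Corrected wiring: converts units at the integration point.
    static String correctedInvoice(String sku) {
        return InvoiceFormatter.format(PriceService.priceOf(sku) / 100.0);
    }

    public static void main(String[] args) {
        // Each unit passes its own check in isolation.
        if (PriceService.priceOf("SKU-1") != 1999) {
            throw new AssertionError("PriceService unit check failed");
        }
        if (!InvoiceFormatter.format(19.99).equals("$19.99")) {
            throw new AssertionError("InvoiceFormatter unit check failed");
        }

        // Only a check across both modules exposes the unit mismatch.
        System.out.println("naive:     " + naiveInvoice("SKU-1"));
        System.out.println("corrected: " + correctedInvoice("SKU-1"));
    }
}
```

No unit test on either class would catch the naive wiring; an integration test asserting on the final invoice string would.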

Which type of testing finds more critical bugs: Unit or Integration Testing?

Unit tests usually detect small, localized bugs like incorrect calculations, broken conditions, or logic errors. Integration tests uncover bigger issues such as mismatched data formats, broken communication between modules, and workflow failures. While unit testing prevents bugs from spreading, integration testing is more likely to identify defects that could impact end users.

Who is responsible for Unit vs Integration Testing?

Typically, developers are responsible for unit testing since it directly relates to the code they write. Integration testing, however, may involve both developers and QA engineers. Developers often write automated integration tests to check module interactions, while QA teams may focus on end-to-end workflows that include integration checks.

What tools are used for Unit Testing vs Integration Testing?

Unit Testing Tools: JUnit (Java), NUnit (C#), pytest (Python), Mocha/Jest (JavaScript), Jasmine (Angular/TypeScript).

Integration Testing Tools: TestNG, Postman (for APIs), Selenium (for UI flows), REST Assured (API testing), Cucumber (BDD testing), JUnit (extended for integration).

The choice depends on the programming language, framework, and whether the tests are API-level or UI-level.

How much code coverage should Unit and Integration Tests have?

Unit tests usually target 70–90% coverage, since they are lightweight and easy to execute frequently. Integration tests typicall
