Generative AI: A Catalyst for Transformative Automation in Organizations

Bharath Hemachandran

Posted On: June 15, 2023


Organizations are continually looking to leverage emerging technologies to optimize their operations, particularly in the area of testing automation. One such groundbreaking technology is Generative AI. Promising to revolutionize testing automation, it introduces a new paradigm that can reshape organizational operations and boost efficiency. However, its adoption requires strategic planning and a certain level of technological maturity. This article will delve into how Generative AI can transform testing automation in organizations and explore opportunities across the software development life cycle (SDLC).

Key Candidates for Generative AI in Automation

The most promising areas for applying Generative AI to automation are those that demand considerable manual effort, involve repetitive tasks, and require learning from patterns. For instance, test case generation, automated scripting, test data creation, and automated defect prediction are prime candidates (Parasuraman et al., 2018).

Maturity for Generative AI Adoption

Before an organization can effectively use Generative AI as a solution, it must first possess a requisite level of technological maturity. The foundation of this maturity lies in data readiness, computational power, digital transformation, and a strong understanding of AI models.

Organizations must be capable of processing and analyzing large volumes of data to train their AI models. Furthermore, they need a robust infrastructure that supports AI workloads and a skilled workforce capable of understanding and implementing AI technologies (Davenport, 2020).

Reaching maturity for Generative AI adoption is a process that involves multiple key stages and elements:

Data Readiness

The first prerequisite is the readiness of data. AI systems learn from data, so organizations must have access to high-quality, clean data. This can involve setting up processes for data collection, storage, management, and cleaning. This stage also includes setting up data governance practices to ensure data privacy and security.

For test automation, data readiness primarily involves the creation and management of test data. High-quality test data should cover all possible edge and corner cases, be diverse enough to mimic real-world scenarios, and be free of inconsistencies and errors.

This requires processes for not just data collection, but also for data generation and manipulation. For instance, Generative AI systems can be trained to generate synthetic data that covers a broad spectrum of test cases. Also, data masking and pseudonymization techniques should be in place to handle sensitive data during testing, ensuring data privacy and security.
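As a rough illustration of the pseudonymization step mentioned above, the sketch below replaces sensitive fields with keyed hashes before a record is used as test data. The field names, the `SECRET_KEY` value, and the helper functions `pseudonymize` and `mask_record` are all hypothetical and chosen for this example only; a real setup would load the key from a secrets manager and follow the organization's own data governance rules.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; never hard-code a real key.
SECRET_KEY = b"example-key-not-for-production"

# Assumed set of fields that count as sensitive in this example.
SENSITIVE_FIELDS = {"name", "email"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed pseudonym for a sensitive value."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncate the hex digest to keep test data readable.
    return digest[:16]


def mask_record(record: dict) -> dict:
    """Pseudonymize sensitive fields and leave all other fields unchanged."""
    return {
        field: pseudonymize(str(value)) if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }


record = {"id": 42, "name": "Jane Doe", "email": "jane@example.com", "plan": "pro"}
masked = mask_record(record)
print(masked)
```

Because the hash is keyed and deterministic, the same input always maps to the same pseudonym, so relationships between records (for example, two orders from the same customer) survive masking while the original values do not appear in the test data.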

Infrastructure Readiness

The next stage is infrastructure readiness. Generative AI requires significant computational power, so organizations need to have the necessary hardware and software infrastructure. This could involve investing in powerful servers, adopting cloud computing, or leveraging edge computing.

In terms of test automation, infrastructure readiness could mean having sufficient resources for parallel test execution, or investing in cloud-based test environments to easily scale up or down based on the needs of the testing process. It could also involve investing in tools and platforms that support continuous testing as part of CI/CD pipelines.
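To make the idea of parallel test execution concrete, here is a minimal sketch that runs a few independent checks concurrently using Python's standard `concurrent.futures` module. The check functions and the `run_in_parallel` helper are made up for this example; in practice a test runner or a cloud test grid would handle scheduling, and this only shows the underlying pattern.

```python
from concurrent.futures import ThreadPoolExecutor


# Three toy checks standing in for independent test cases.
def check_addition():
    assert 1 + 1 == 2


def check_upper():
    assert "abc".upper() == "ABC"


def check_failing():
    # Deliberately wrong expectation to show how a failure is reported.
    assert sum([1, 2, 3]) == 7


def run_check(check):
    """Run one check and report its name together with the outcome."""
    try:
        check()
        return check.__name__, "passed"
    except AssertionError:
        return check.__name__, "failed"


def run_in_parallel(checks, max_workers=4):
    """Run checks on a thread pool and collect the results by name."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_check, checks))


results = run_in_parallel([check_addition, check_upper, check_failing])
print(results)
```

The pattern only pays off when the checks are independent of each other; tests that share mutable state or a single environment would need isolation before they can safely run side by side.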


Technological Readiness

Technological readiness refers to having the necessary technological knowledge and expertise within the organization. This can involve training existing staff, hiring new experts, or partnering with external AI providers. The organization also needs to have a clear understanding of AI and its potential benefits and risks.

For an organization to embrace AI for test automation, it needs to cultivate expertise in both AI and test automation. This could involve training existing testers on AI and machine learning principles and techniques, hiring AI specialists, or collaborating with external AI vendors. In addition, the organization needs to understand how AI can be leveraged in testing, what benefits it can bring, and what potential challenges to expect.

Digital Transformation

This stage involves the wider adoption of digital technologies across the organization, such as automating manual processes, leveraging digital technologies to improve customer experience, and using data and analytics to inform decision making. For test automation, this could mean automating regression testing with AI, using AI to improve test coverage, or leveraging AI to automate the generation of test cases or test data.

Organizational Readiness

The final stage is organizational readiness. This involves preparing the organization for the changes that AI will bring. This can involve changing organizational structures, processes, and culture. It also involves managing the ethical, legal, and societal implications of AI.

These stages aren’t strictly linear and can often overlap. For example, an organization may start preparing its infrastructure while also beginning to transform its processes. It’s also a continuous journey – even after an organization has reached a high level of AI maturity, it needs to continue learning, experimenting, and evolving as the field of AI continues to progress.

In terms of culture, there should be a willingness to experiment and learn. AI in testing is still a relatively new field, and there will be a learning curve and inevitable mistakes along the way. An experimental mindset, a willingness to learn from mistakes, and a focus on continuous improvement are essential for success.

Managing the ethical, legal, and societal implications of AI is also crucial. This includes ensuring that AI testing systems are transparent, fair, and responsible, and do not inadvertently introduce bias into the testing process.

Opportunities in the SDLC

| SDLC Stage | Opportunities with Generative AI |
|---|---|
| Requirements Gathering and Validation | |
| Design Phase | |
| Coding Phase | |
| Testing Phase | |
| Deployment Phase | |
| Maintenance and Iteration Phase | |

Challenges and Conclusion

It is essential to note that while Generative AI provides transformative opportunities for testing automation, its implementation is not without challenges. Security, privacy, and ethical issues related to AI need to be addressed. Moreover, organizations must invest in re-skilling their workforce to leverage this technology fully.

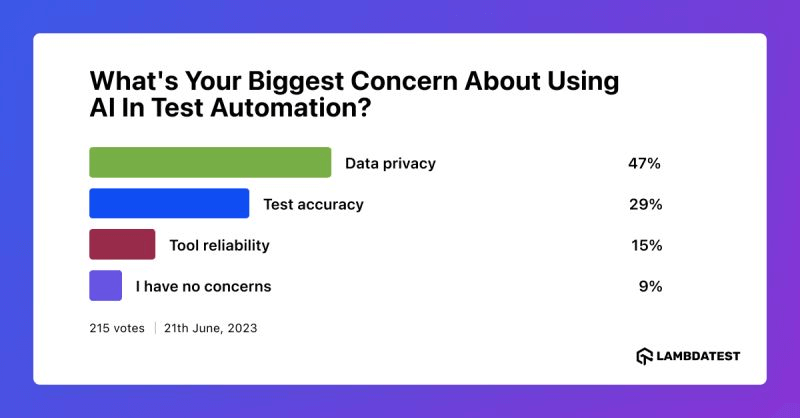

As we explore the advanced capabilities and challenges of using generative AI as a catalyst for transformative automation in organizations, it’s important to also understand what professionals in the field think about it. To get a wider perspective, we carried out a poll on social media, asking, ‘What’s your biggest concern about using AI in test automation?’ The findings from this survey offer an essential viewpoint on the practical challenges and possibilities presented by AI in testing scenarios.

In conclusion, Generative AI presents a powerful tool for organizations to transform testing automation across the SDLC. It can significantly reduce manual effort, increase efficiency, and enhance the ability to detect issues early in the process. By carefully considering their technological maturity and investing in the right infrastructure and skills, organizations can harness the full potential of Generative AI.

References

- Parasuraman, A., Mani, S., & Liu, Y. (2018). Generative AI in Testing. IEEE Software, 35

- Davenport, T. (2020). The AI Maturity Model: Four Steps to AI Success. Forbes.

- Microsoft. (2020). AI at Scale: Transforming the way we work at Microsoft. Microsoft AI.

- Basiri, A., Behnam, N., de Rooij, R., Hochstein, L., Kosewski, L., Reynolds, J., & Rosenthal, C. (2016). Chaos Monkey: Increasing SDLC Velocity at Netflix by Reducing Failures. Netflix Technology Blog.

- Wang, Y., Wang, S., Tang, J., & Liu, H. (2019). Using AI to predict system failures. IEEE Access, 7, 148512-148523.
