How to Use AI in QA Testing: A Complete Guide

Salman Khan

Posted On: October 28, 2025


Traditional QA faces challenges like test maintenance, flaky tests, high overhead, and regression delays, all of which slow down releases and impact software quality. Integrating AI in QA helps overcome these challenges by stabilizing automation, prioritizing test coverage, and reducing manual intervention.

Overview

AI in QA uses machine learning and smart algorithms to improve software testing. It reviews past test results to find high-risk areas, prioritize regressions, and ensure better coverage.

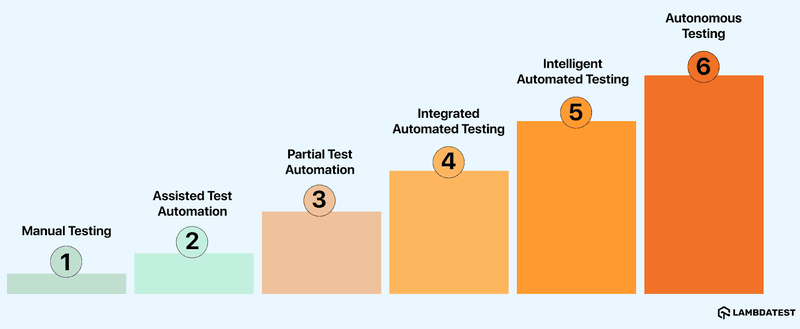

What Are the Levels of AI in QA?

AI in QA progresses from fully manual testing to fully autonomous testing. It gradually reduces human intervention while improving efficiency, accuracy, and test coverage across all testing phases. Different levels include:

- Manual Testing: Human testers perform all tasks, including writing, executing, analyzing test cases, identifying defects, and reporting issues, entirely without automation support.

- Assisted Test Automation: Automated tools assist test execution while humans continue creating scripts, managing workflows, and handling analysis and validation manually throughout the process.

- Partial Test Automation: Testing is shared between humans and automation. Automation handles repetitive tasks while humans manage execution, data handling, and result analysis under supervision.

- Integrated Automated Testing: AI provides recommendations within tools. Testers review and apply these suggestions to refine test cases and adjust automated test suites.

- Intelligent Automated Testing: AI generates test cases, executes tests, and reports results. Human involvement is optional, limited to specific scenarios or exception handling.

- Autonomous Testing: AI manages test creation, execution, and evaluation without human intervention, monitoring code changes, running tests, and detecting defects autonomously.

How to Use AI in the QA Process?

Implementing AI in QA requires careful planning, from identifying opportunities to selecting, training, and validating models. Here is the structured approach:

- Identify Test Scope: Determine where AI can add value in QA. Define objectives such as improving coverage, automating repetitive tasks, or prioritizing high-risk tests.

- Select AI Models: Choose models that fit project needs. For test generation, NLP-based AI tools or agents can convert prompts into automated test scripts.

- Train AI Models: Gather, curate, and label high-quality data. Proper annotation ensures models recognize patterns, execute tests accurately, and predict potential defects reliably.

- Validate AI Models: Test and evaluate models using subsets of data. Platforms like LambdaTest Agent to Agent Testing simulate real interactions of AI agents using these models to verify performance and reliability.

- Integrate AI Models: Deploy trained models into the QA workflow to automate test creation, execution, and analysis, ensuring improved coverage, defect detection, and efficiency.


What Is AI in QA?

AI in QA leverages machine learning and intelligent algorithms to enhance the software testing process. It analyzes historical test results to identify high-risk areas, prioritize regression tests, and optimize coverage.

Automatic test case generation from natural language accelerates test creation, while self-healing scripts adapt to software changes, reducing maintenance effort. Predictive analytics forecast potential failures, enabling teams to address issues before they occur.

AI can also flag brittle tests, detect hidden defects, and identify visual inconsistencies in the user interface, resulting in more reliable and comprehensive testing.

Why Use AI in Quality Assurance?

Using AI in QA automates repetitive tests, detects flaky tests, predicts defects, and adapts test scripts automatically. It also optimizes regression coverage, ensures UI consistency, prioritizes high-risk tests, and accelerates releases.

- AI-Enhanced Test Execution: Improves execution efficiency by identifying stable tests, recommending parallel runs, and automating repetitive validations. This reduces execution time and frees testers to focus on exploratory and critical functional testing.

- Intelligent Test Selection: Evaluates code changes, historical defects, and execution data to select the most relevant test cases. This avoids redundant runs, saves time, and ensures focus on high-risk areas.

- Predictive Defect Analysis: Analyzes past test results and change histories to highlight modules most likely to fail. This software defect prediction helps teams improve coverage and prevent critical production defects.

- Flaky Test Identification: Tracks test stability, detects intermittent failures, and highlights unreliable scripts. It helps reduce false failures, lower debugging effort, and stabilize automation suites over time.

- Enhanced Defect Accuracy: Correlates multiple data sources, logs, test results, and past defect trends to detect issues and reduce false positives. This increases confidence in test results and defect reporting.

- Optimized Regression Coverage: Prioritizes regression runs based on recent code commits, risk level, and defect history. It ensures critical functionality is validated first within limited test execution windows.

- Faster Release Cycles: Reduces maintenance effort, eliminates redundant runs, and improves overall test stability. AI helps QA teams shorten release cycles and maintain higher quality standards.
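To make intelligent test selection and regression prioritization more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular tool works: the `CaseHistory` record, the file-to-test mapping, and the hand-picked weights (0.7 for overlap with changed files, 0.3 for recent failure rate) are all hypothetical. An AI-based tool would typically learn such weights from execution data instead of hard-coding them.

```python
from dataclasses import dataclass


@dataclass
class CaseHistory:
    """Hypothetical per-test record built from past runs."""
    name: str
    covered_files: set    # source files the test exercises (assumed to be known)
    recent_failures: int  # failures within the observation window
    runs: int             # total runs within the same window


def risk_score(test, changed_files, change_weight=0.7, history_weight=0.3):
    """Combine change overlap and failure history into a single score in [0, 1]."""
    if test.covered_files:
        overlap = len(test.covered_files & changed_files) / len(test.covered_files)
    else:
        overlap = 0.0
    failure_rate = test.recent_failures / test.runs if test.runs else 0.0
    return change_weight * overlap + history_weight * failure_rate


def prioritize(tests, changed_files, budget):
    """Return the names of the `budget` highest-risk tests, highest first."""
    ranked = sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)
    return [t.name for t in ranked[:budget]]


suite = [
    CaseHistory("test_login", {"auth.py", "session.py"}, recent_failures=1, runs=10),
    CaseHistory("test_checkout", {"cart.py", "payment.py"}, recent_failures=0, runs=10),
    CaseHistory("test_search", {"search.py"}, recent_failures=5, runs=10),
]
selected = prioritize(suite, changed_files={"payment.py"}, budget=2)
```

With a commit that touches only `payment.py`, the checkout test ranks first because it covers the changed file, and the historically unstable search test ranks second. The `budget` parameter models a limited execution window.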


What Are Examples of AI in Quality Assurance?

Examples of AI in QA include generating test data, creating test scripts, and prioritizing high-risk tests. AI can also manage test scheduling, self-heal broken scripts, and provide actionable analytics.

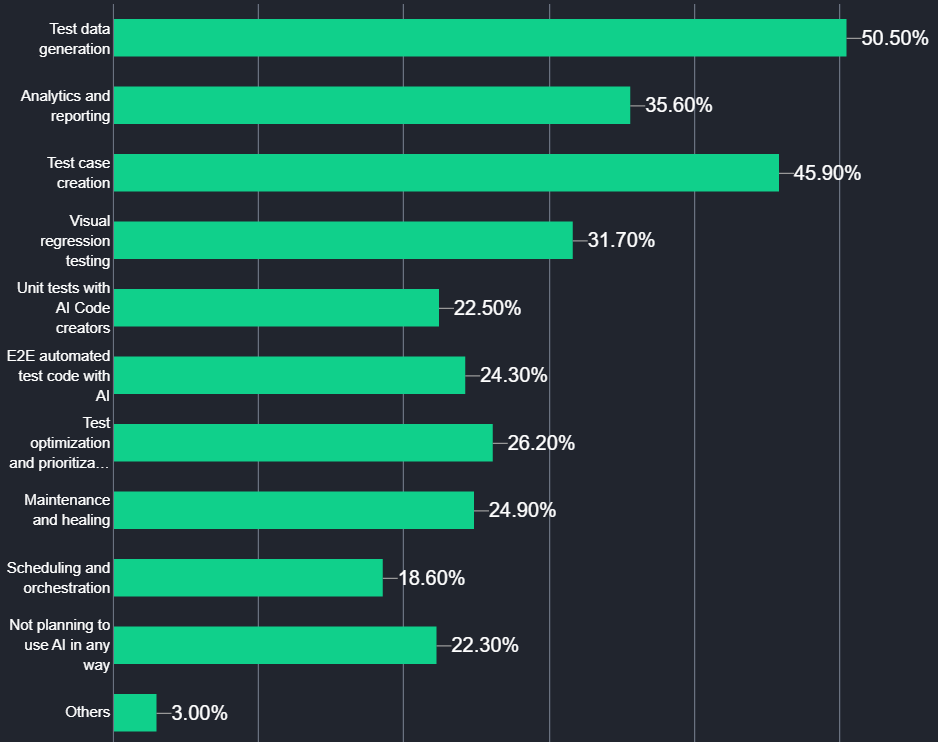

According to the Future of Quality Assurance Report, several key examples highlight how AI is used in testing processes.

- Test Data Generation: Generates diverse and realistic test datasets that simulate user behavior and edge cases. It reduces the need for manual data preparation and ensures comprehensive coverage for functional, integration, and performance tests.

- AI-Driven End-to-End Testing: Creates E2E test scripts that simulate real user interactions across workflows. These scripts are executed within automation frameworks to validate integrations, APIs, and UI components under varying conditions.

- Unit Test Generation: Analyzes source code to automatically generate unit tests, covering standard scenarios and edge cases. AI unit test generation can increase coverage and ensure individual components behave as expected.

- Test Optimization and Prioritization: Evaluates historical test results, code changes, and defect patterns to prioritize test execution. High-risk areas are tested first, redundant runs are minimized, and regression cycles become more efficient without compromising coverage.

- Scheduling and Orchestration: Manages test execution across environments, allocating resources dynamically and scheduling tests to avoid conflicts. It ensures efficient utilization of test infrastructure and timely completion of automated test suites.

- Visual Regression Testing: Compares UI snapshots across builds to detect layout shifts, misaligned elements, or missing components. Platforms like LambdaTest SmartUI offer smart visual testing that automatically highlights visual deviations, enabling rapid correction before end users are impacted.

- Self-Healing Test Scripts: Detects changes in UI elements or workflows and automatically updates affected test scripts. Self-healing test automation minimizes manual maintenance, keeps regression tests functional, and allows QA teams to focus on validation and analysis.

- Analytics and Reporting: Analyzes test execution data, defect trends, and coverage gaps to predict potential failures. Predictive analytics can generate actionable insights and reports that help QA teams optimize testing, reduce risk, and improve release confidence.
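The self-healing idea from the list above can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm used by any specific product: the page is modeled as a list of attribute dictionaries, and the `fingerprint`, `similarity`, and `find_element` names, as well as the 0.5 threshold, are assumptions made for the example.

```python
def similarity(fingerprint, element):
    """Fraction of the recorded attributes that the element still matches."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items() if element.get(key) == value)
    return matches / len(fingerprint)


def find_element(dom, fingerprint, threshold=0.5):
    """Locate an element by id; fall back to the most similar one if the id changed.

    Returns (element, healed), where `healed` is True when the fallback was used.
    """
    target_id = fingerprint.get("id")
    if target_id is not None:
        for element in dom:
            if element.get("id") == target_id:
                return element, False

    # The primary locator failed: pick the closest remaining candidate.
    best = max(dom, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best, True
    return None, False


# Attributes recorded when the test was authored (hypothetical values).
recorded = {"id": "submit-btn", "tag": "button", "text": "Sign in", "class": "primary"}

# The page after a release in which the button id was renamed.
page = [
    {"id": "login-submit", "tag": "button", "text": "Sign in", "class": "primary"},
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
]
element, healed = find_element(page, recorded)
```

Here the original id no longer exists, but three of the four recorded attributes still match the renamed button, so the lookup succeeds and reports that healing took place. A real tool would also log the healed locator so that a tester can review it.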

Learn how to generate tests with AI.

Building on analytics, platforms like LambdaTest provide AI Test Insights that help teams understand execution patterns and coverage gaps. LambdaTest's Test Intelligence platform also uses AI to detect unstable tests, adapt scripts, and highlight UI issues, guiding smarter prioritization and execution decisions.

What Are the Six Levels of AI in QA Testing?

The six levels of AI in QA testing represent a spectrum of automation, from manual testing to fully AI-based testing. As AI capabilities evolve, they gradually reduce the dependency on manual intervention while improving testing efficiency and accuracy.

Let’s take a look at each level:

- Manual Testing: Human testers perform all tasks, including writing, executing, and analyzing test cases, identifying defects, and reporting issues. Testing is conducted entirely without automation support.

- Assisted Test Automation: Automated tools assist testers with test execution. Humans continue to create and maintain scripts, manage workflows, and handle analysis and validation manually.

- Partial Test Automation: Testing is shared between humans and automation. Testers manage execution, data handling, and result analysis, while automation handles repetitive tasks under human supervision.

- Integrated Automated Testing: AI provides recommendations within automated tools. Testers review and apply these suggestions to refine test cases and adjust test suites as needed.

- Intelligent Automated Testing: AI can generate test cases, execute tests, and report results. In intelligent test automation, human involvement is optional and limited to handling specific scenarios or exceptions.

- Autonomous Testing: In autonomous testing, AI manages test creation, execution, and evaluation without human involvement. It monitors code changes, runs tests, and identifies defects autonomously.

How to Integrate AI in QA Testing?

Start by identifying where AI can help in your QA process. Choose and train the right models, validate their accuracy, and integrate them to automate tests, catch defects faster, and improve coverage.

Here are the five essential steps that outline how to integrate AI in the QA process:

- Identify Test Scope: Start by defining the scope and objectives of using AI in QA. Pin down the specific areas where AI should help, such as improving test coverage or automating repetitive tasks.

- Select AI Models: Select the AI models that best fit your project requirements. For example, if you want to automate test generation, you can choose an NLP-based AI model or tool to generate tests.

- Train AI Models: High-quality data is essential for training AI models. Collect, curate, and label the data you need, and use a suitable data annotation method so that the model can recognize patterns, execute tests accurately, and predict defects.

- Validate AI Models: Once the AI model is trained, test and validate it. Develop test algorithms and evaluate models using subsets of the annotated data. The goal is to verify that the model performs as expected in real-world scenarios by producing accurate and consistent results.

- Integrate AI Models Into Your Workflow: Once the AI model is tested and validated, integrate it into your testing infrastructure. This can involve automating aspects of the testing process, like generating test cases or analyzing test results.

For example, GenAI-native test agents like LambdaTest KaneAI help you generate tests using natural language prompts, so you can create tests quickly without writing test scripts by hand. This speeds up test creation and helps improve coverage.

To validate the behavior of AI agents that operate using these models, consider using platforms like LambdaTest Agent to Agent Testing. It helps you validate AI agents by simulating real-world interactions to evaluate how these agents perform, respond, and adapt in dynamic scenarios.

You can also measure accuracy, reliability, bias, and safety, helping teams identify weak behaviors and improve overall agent performance.

To get started, check out the LambdaTest Agent to Agent Testing guide.
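The validation step described in this section, evaluating a model on a subset of the annotated data, can be sketched as follows. This is a minimal Python illustration under assumptions: the labels are binary (1 means a defect), the `split_holdout` and `precision_recall` helpers are hypothetical names, and the predictions are hard-coded in place of a real model's output.

```python
import random


def split_holdout(samples, holdout_fraction=0.2, seed=42):
    """Shuffle the annotated samples and reserve a fraction for validation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    holdout_size = round(len(shuffled) * holdout_fraction)
    cut = len(shuffled) - holdout_size
    return shuffled[:cut], shuffled[cut:]


def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = defect, 0 = no defect)."""
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(1 for t, p in pairs if t and p)
    false_pos = sum(1 for t, p in pairs if not t and p)
    false_neg = sum(1 for t, p in pairs if t and not p)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Ten labeled samples, split 80/20 into training and validation subsets.
train, holdout = split_holdout(list(range(10)))

# Ground-truth labels for held-out samples versus a model's predictions.
actual = [1, 1, 0, 0, 1]
predicted = [1, 0, 0, 1, 1]
precision, recall = precision_recall(actual, predicted)
```

Precision indicates how many flagged defects were real, while recall indicates how many real defects were caught. Tracking both on held-out data gives a rough check that the model behaves consistently before it is wired into the testing workflow.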

Which AI Tool Is Best for QA Testing?

The choice of an AI tool for QA testing depends on the project requirements. However, you can leverage Generative AI testing tools like LambdaTest KaneAI to plan, organize, and author tests using natural language prompts.

The following are the popular AI testing tools in quality assurance:

LambdaTest KaneAI

LambdaTest KaneAI is a GenAI testing agent designed to support fast-moving AI QA teams. It lets you create, debug, and enhance tests using natural language, making test automation quicker and easier without needing deep technical expertise.

Features:

- Intelligent Test Generation: Automates the creation and evolution of test cases through NLP-driven instructions.

- Smart Test Planning: Converts high-level objectives into detailed, automated test plans.

- Multi-Language Code Export: Generates tests compatible with various programming languages and frameworks.

- Show-Me Mode: Simplifies debugging by converting user actions into natural language instructions for improved reliability.

- API Testing Support: Easily include backend tests to improve overall coverage.

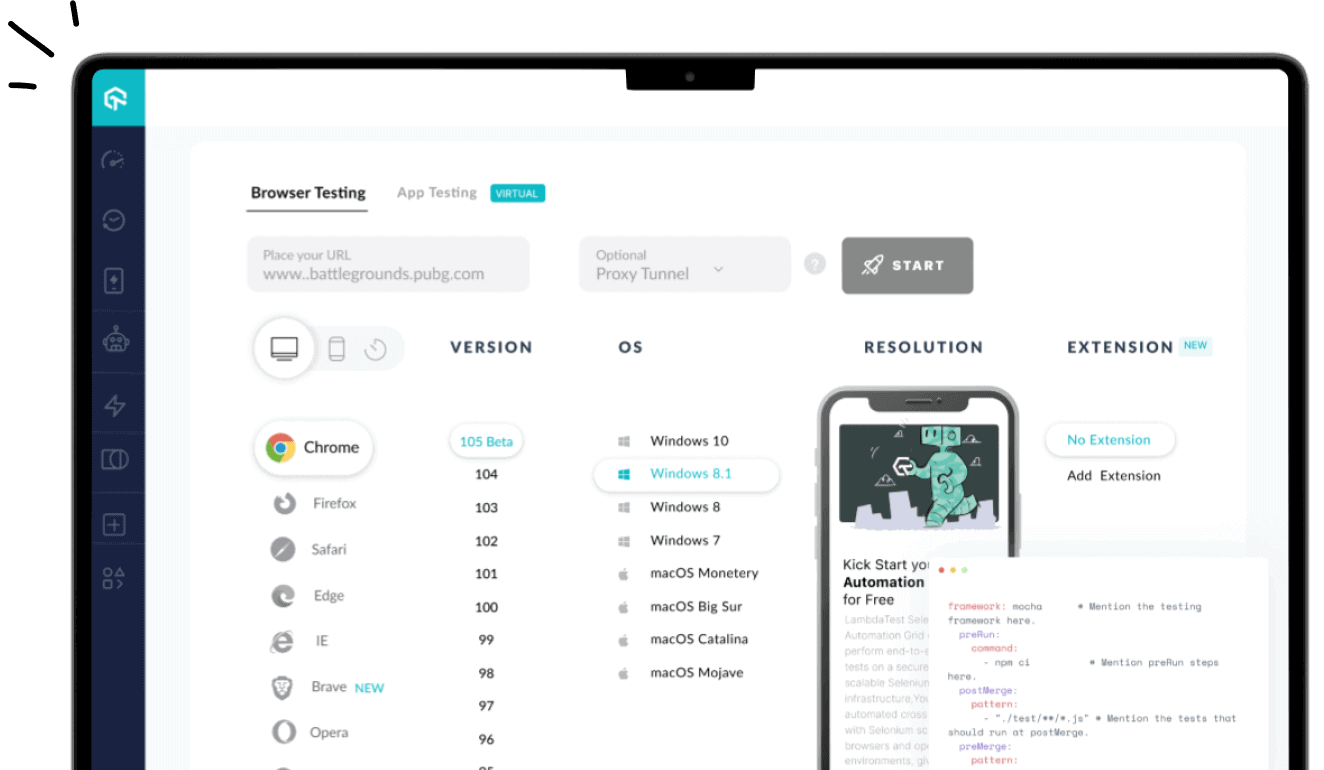

- Wide Device Coverage: Run your tests across 3000+ browsers, devices and operating systems.

With the rise of AI in testing, it’s crucial to stay competitive by upskilling or polishing your skill sets. The KaneAI Certification proves your hands-on AI testing skills and positions you as a future-ready, high-value QA professional.

Aqua Cloud

Aqua Cloud provides intelligent test management solutions, leveraging AI for test planning and test optimization. It centralizes testing workflows and offers predictive analytics to enhance decision-making.

Features:

- Test Management Automation: Reduces manual overhead with AI-driven workflows.

- Collaboration Tools: Supports cross-functional QA and development team collaboration.

- Scalability: Handles extensive testing needs across large software ecosystems.

- Analytics and Reporting: Provides actionable insights through predictive data analysis.

Virtuoso

Virtuoso is an AI-powered test automation platform that helps you create and maintain functional tests. It uses natural language processing and self-healing capabilities to increase testing speed without requiring deep coding knowledge.

Features:

- Live Authoring With AI Suggestions: It suggests test steps in real time while you’re writing code, making it quicker to build reliable test cases without starting from scratch.

- Cross Browser Testing in the Cloud: It executes your tests on different browsers and OS in the cloud, so you don’t need to configure anything manually.

- Self-Healing With Real-Time Updates: It identifies changes in the app’s UI and automatically updates your test scripts, so you don’t have to rewrite them each time something changes.

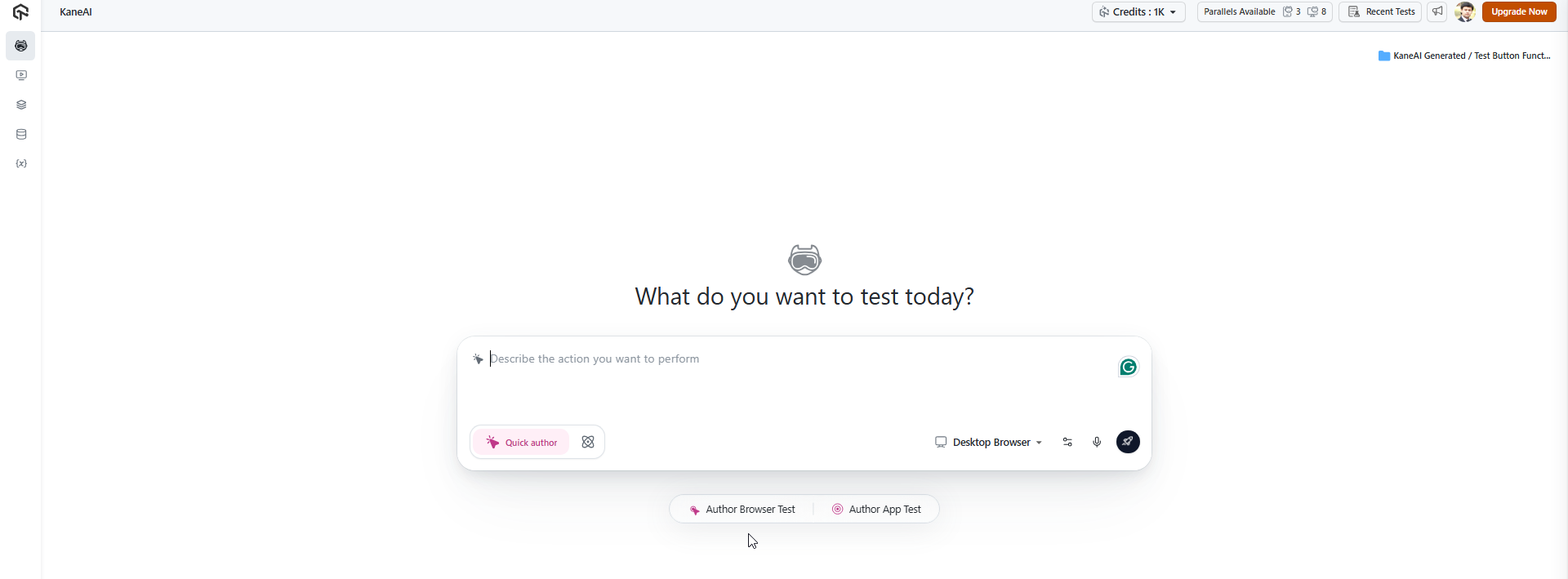

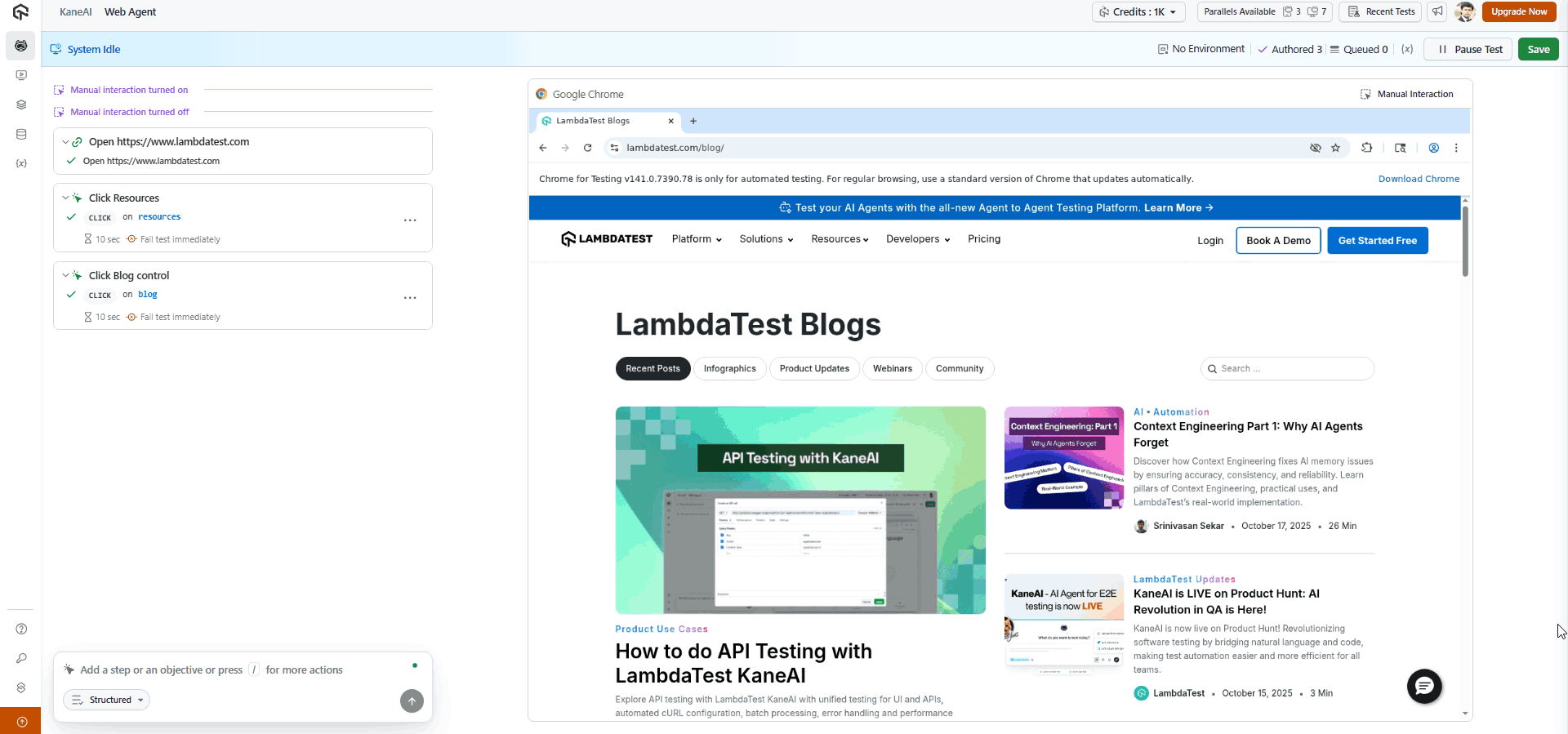

Demo: Using LambdaTest KaneAI for AI QA Testing

To see the real impact of AI in quality assurance, let’s explore how Generative AI testing tools like LambdaTest KaneAI can automate test creation, detect issues faster, and improve overall test coverage.

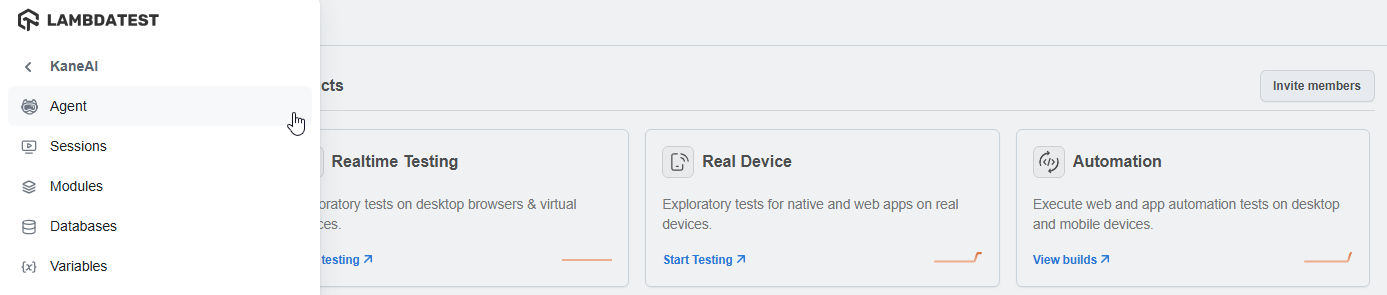

Step 1: From the LambdaTest dashboard, navigate to KaneAI > Agent.

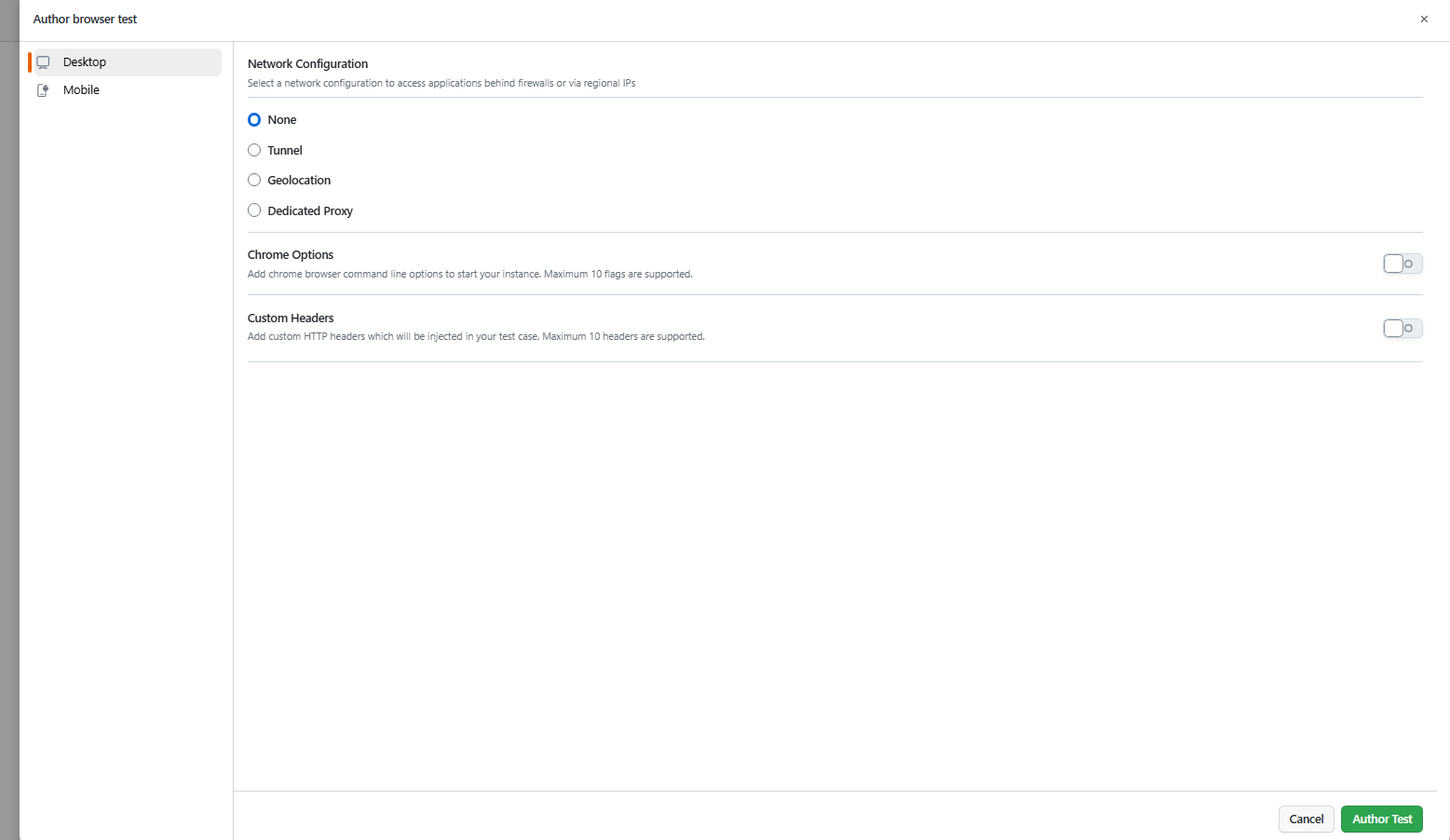

Step 2: Click on Author Browser Test.

Step 3: Select Desktop or Mobile and your preferred network configuration. Then, click Author Test.

Step 4: In the text area, enter your test steps one by one, pressing Enter after each. For example, let’s provide these test steps:

- Visit the URL www.lambdatest.com

- Click on Resources

- Click on Blog

KaneAI will generate the test cases based on your test steps:

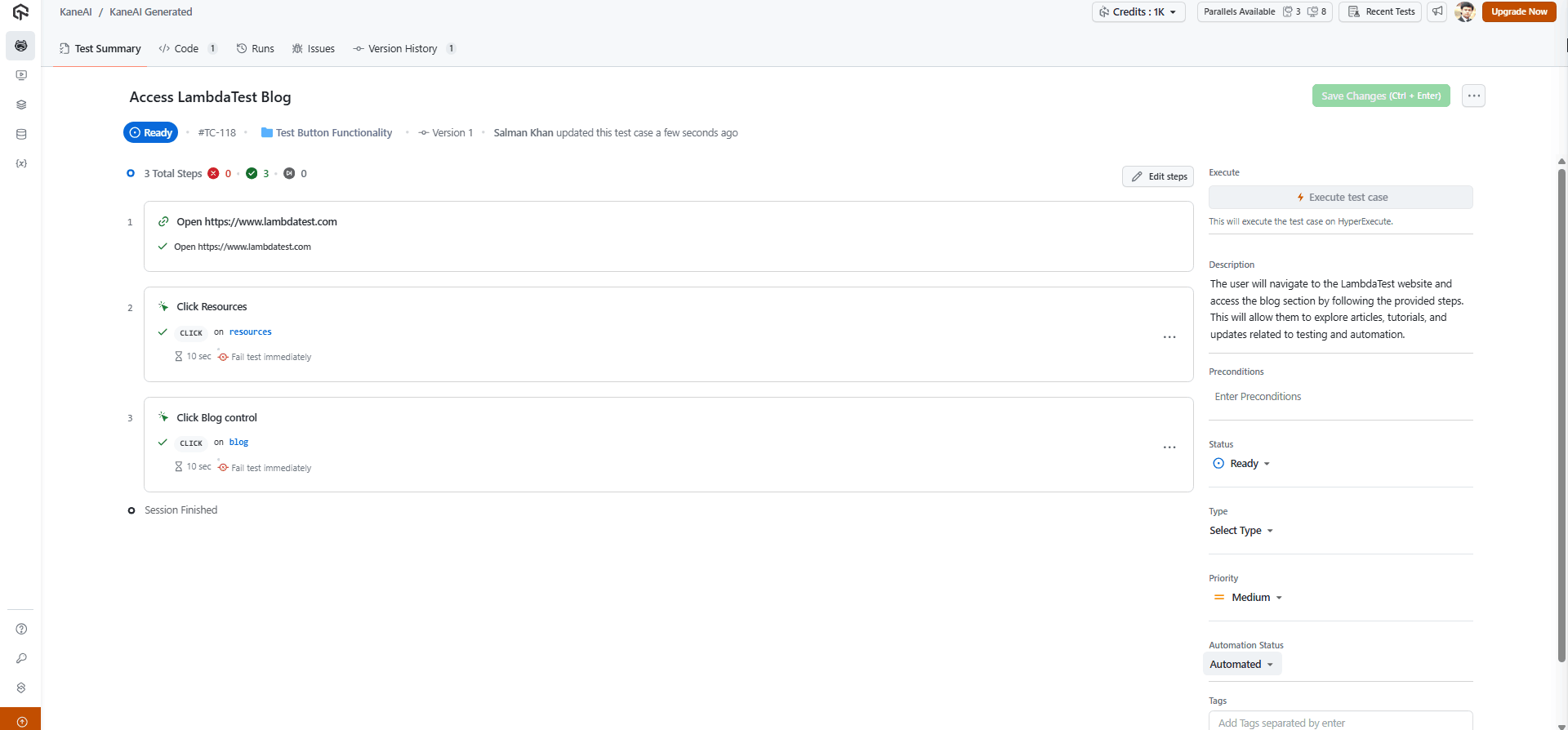

Now click on Save.

You are then redirected to a screen where you can select and use different options according to your requirements.

To get started, refer to this guide on LambdaTest KaneAI.

What Is the Role of an AI Agent in the QA Life Cycle?

An AI QA agent designs tests from requirements, automates scripts, and prioritizes and runs high-risk tests. It can also detect defects and generate actionable insights, guiding coverage, execution, and analysis efficiently.

Here’s how an AI QA agent contributes to each phase:

- Test Design: Reads requirements, user stories, or design documents and generates test cases automatically. It can focus on key workflows, edge cases, or validation points. In practice, this eliminates repetitive manual scripting and ensures critical scenarios are included in every test cycle.

- Test Automation: Converts designed test cases into executable test scripts across multiple languages and frameworks. It identifies UI elements and adjusts scripts when application changes occur. Test data can be generated automatically, and adaptive validations keep tests stable without constant human intervention.

- Test Execution: AI agents analyze factors like code changes, historical results, and user behavior to prioritize tests, ensuring that high-risk areas are addressed first. They can also seamlessly integrate with existing Continuous Integration/Continuous Deployment (CI/CD) tools, enhancing the overall automation workflow.

- Reporting and Insights: The agent processes all execution data into actionable insights. Patterns, recurring failures, and high-risk areas are highlighted in clear reports, allowing testers to make decisions faster.

- Defect Detection and Analysis: During runs, the agent tracks failures, unstable tests, and patterns of repeated errors. It highlights the most likely root causes, helping testers quickly pinpoint and fix issues rather than spending hours combing through logs.
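As a rough illustration of the defect analysis phase, the Python sketch below groups failure messages that differ only in incidental details, so that the most common failure pattern stands out. It is a rule-based stand-in, not an AI model, and the `signature` normalization rules and sample messages are assumptions made for the example.

```python
import re
from collections import Counter


def signature(message):
    """Normalize a failure message so that similar failures share one key."""
    text = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses, ids
    text = re.sub(r"\d+", "<n>", text)                  # timings, counts, codes
    text = re.sub(r"'[^']*'", "<str>", text)            # quoted element names
    return text.strip().lower()


def top_failure_groups(failures, limit=3):
    """Return the most frequent failure signatures with their counts."""
    counts = Counter(signature(message) for message in failures)
    return counts.most_common(limit)


# Hypothetical failure messages collected from one test run.
failures = [
    "Timeout after 30s waiting for element 'cart'",
    "Timeout after 45s waiting for element 'login'",
    "AssertionError: expected 200 got 500",
    "Timeout after 30s waiting for element 'menu'",
]
groups = top_failure_groups(failures, limit=1)
```

The three timeout messages collapse into a single signature, which points testers at a probable shared root cause, such as slow page loads, instead of three unrelated log entries.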

Where Does AI Fit in the Future of QA?

AI in QA is evolving to plan, execute, and adapt tests autonomously while collaborating with humans. It identifies high-risk areas, integrates with DevOps pipelines, improves visual and accessibility testing, and leverages cloud platforms for scalable testing.

Key future trends include:

- Agentic AI in Test Automation: Emerging AI agents are designed to autonomously plan, execute, and adapt test strategies in real-time. These agents collaborate with human testers, providing insights and recommendations, thereby enhancing decision-making and efficiency in complex testing scenarios.

- Predictive Risk-Based Testing: AI models are increasingly used to analyze historical data and identify potential risk areas within applications. By focusing testing efforts on high-risk components, teams can optimize resource allocation and improve the effectiveness of their testing efforts.

- Integration With DevOps and Continuous Delivery: AI is becoming integral to DevOps pipelines, facilitating continuous testing and integration. This integration ensures that testing is embedded into the development process, leading to faster delivery cycles and more reliable software releases.

- Enhanced Visual and Accessibility Testing: AI-powered tools are advancing in detecting visual anomalies and accessibility issues across various platforms. These tools ensure that user interfaces are consistent and accessible, improving the overall user experience.

- Cloud-Based and Scalable Testing Solutions: The adoption of cloud technologies allows for scalable and flexible testing environments. AI-driven cloud testing platforms enable teams to perform GenAI testing across multiple configurations without the constraints of on-premises infrastructure.

Learn more about visual AI in software testing and the role of AI and accessibility.

Conclusion

AI is reshaping QA with solutions that improve speed, accuracy, and efficiency in software testing. From automating test case creation to predicting defects and generating test data, AI helps streamline the testing process and ensures higher-quality outcomes. With AI tools such as those covered above, AI-driven testing is becoming a critical component of modern QA strategies.

Frequently Asked Questions (FAQs)

How does AI in QA reduce flaky tests?

AI in QA analyzes historical test runs and execution patterns to detect unstable tests. It flags scripts prone to intermittent failures, recommends stabilization strategies, and adapts tests dynamically, improving reliability across different builds and reducing manual debugging effort.
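One simple signal behind this kind of analysis is how often a test flips between passing and failing. The Python sketch below computes that flip rate from run history. It is a heuristic illustration: the `flip_rate` and `find_flaky` names and the 0.3 threshold are assumptions, and the history is assumed to come from runs against the same code revision, so a flip cannot be explained by a code change.

```python
def flip_rate(history):
    """Share of consecutive runs whose outcome changed (True = pass)."""
    if len(history) < 2:
        return 0.0
    flips = sum(1 for previous, current in zip(history, history[1:]) if previous != current)
    return flips / (len(history) - 1)


def find_flaky(histories, threshold=0.3):
    """Return the names of tests whose flip rate meets the threshold."""
    return sorted(name for name, runs in histories.items() if flip_rate(runs) >= threshold)


# Hypothetical pass/fail history per test, oldest run first.
histories = {
    "test_profile": [True, True, True, True, True],     # stable pass
    "test_upload": [True, False, True, False, True],    # alternates every run
    "test_export": [True, True, False, False, False],   # broke once, stayed broken
}
flaky = find_flaky(histories)
```

Only `test_upload` is flagged. `test_export` changes outcome once and then fails consistently, which looks more like a genuine regression than an intermittent failure.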

Can AI in QA predict defects before deployment?

Yes. AI in QA examines past defects, code changes, and execution trends to identify high-risk modules. This allows teams to prioritize testing, catch potential issues early, and minimize production defects, improving software reliability and release confidence.

How does AI in QA optimize regression testing?

AI in QA evaluates code changes, previous results, and risk factors to select relevant tests. It prioritizes critical scenarios, avoids redundant runs, and ensures efficient validation of essential functionality, reducing overall regression cycle time while maintaining coverage quality.

Can AI in QA generate test data automatically?

AI in QA can create realistic, diverse datasets by analyzing application logic, user behavior, and edge cases. This reduces manual effort, increases coverage, and ensures important scenarios are tested without missing edge conditions or unusual inputs.
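For a sense of what such a dataset might contain, the Python sketch below builds test rows for a single integer input field. It is a rule-based approximation that uses classic boundary value analysis plus seeded random sampling, not a generative model, and the field range of 18 to 65 and all function names are hypothetical.

```python
import random


def boundary_values(minimum, maximum):
    """Values just outside, on, and just inside each end of an integer range."""
    return sorted({minimum - 1, minimum, minimum + 1,
                   maximum - 1, maximum, maximum + 1})


def sample_valid_values(minimum, maximum, count, seed=0):
    """Reproducible random values from inside the valid range."""
    rng = random.Random(seed)
    return [rng.randint(minimum, maximum) for _ in range(count)]


def build_dataset(minimum, maximum, count=5, seed=0):
    """Combine edge cases and typical values, each with its expected validity."""
    rows = []
    for value in boundary_values(minimum, maximum):
        rows.append({"value": value, "expect_valid": minimum <= value <= maximum})
    for value in sample_valid_values(minimum, maximum, count, seed):
        rows.append({"value": value, "expect_valid": True})
    return rows


# Hypothetical field: an age input that accepts 18 through 65.
rows = build_dataset(18, 65, count=4)
invalid = [row["value"] for row in rows if not row["expect_valid"]]
```

Each row pairs an input with the outcome the application is expected to produce, so the same dataset can drive both positive and negative tests.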

How do AI agents in QA adapt to UI changes?

AI agents in QA detect modifications in UI elements or workflows and automatically update scripts. This self-healing capability reduces maintenance effort, keeps regression tests functional, and allows teams to focus on validation instead of constantly rewriting automation.

Can AI in QA integrate with CI/CD pipelines?

Yes. AI in QA connects with CI/CD pipelines to track code changes, execute prioritized tests, and provide insights in real-time. This ensures continuous testing aligns with development cycles and supports faster, more reliable release delivery.

How does AI in QA support visual and accessibility testing?

AI in QA compares UI snapshots across builds to detect layout shifts, misaligned elements, or missing components. It also highlights accessibility issues, helping teams maintain consistent, usable, and compliant interfaces across devices without extensive manual effort.
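At its simplest, snapshot comparison means finding where two images differ. The Python sketch below does this for grayscale snapshots represented as nested lists and returns the bounding box of the changed area. It is a plain pixel diff with a tolerance, offered as an assumption-based illustration. AI-based visual testing tools go further, for example by ignoring rendering noise that a raw diff would flag.

```python
def diff_region(baseline, candidate, tolerance=10):
    """Return (top, left, bottom, right) of the changed pixels, or None if none changed.

    Both snapshots are lists of rows holding grayscale values from 0 to 255.
    """
    same_shape = len(baseline) == len(candidate) and all(
        len(base_row) == len(cand_row) for base_row, cand_row in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("snapshots must have the same dimensions")

    changed = [
        (row_index, col_index)
        for row_index, row in enumerate(baseline)
        for col_index, pixel in enumerate(row)
        if abs(pixel - candidate[row_index][col_index]) > tolerance
    ]
    if not changed:
        return None

    rows = [row_index for row_index, _ in changed]
    cols = [col_index for _, col_index in changed]
    return (min(rows), min(cols), max(rows), max(cols))


# A blank 4x4 baseline and a new build in which two pixels changed.
baseline = [[0] * 4 for _ in range(4)]
candidate = [row[:] for row in baseline]
candidate[1][2] = 255
candidate[2][3] = 200
region = diff_region(baseline, candidate)
```

The returned box covers rows 1 to 2 and columns 2 to 3, which is the area a reviewer would need to inspect. The `tolerance` parameter keeps minor differences, such as anti-aliasing, from being reported.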

Can AI in QA suggest improvements in test coverage?

AI in QA analyzes execution data, defect patterns, and coverage gaps to recommend additional tests or highlight areas needing attention. This ensures comprehensive validation, reduces missed scenarios, and strengthens overall software quality.

How do AI agents in QA assist human testers?

AI agents in QA handle repetitive tasks such as test case generation, data creation, and monitoring execution. They provide actionable insights and highlight risk areas, allowing human testers to focus on exploratory testing and critical functional validation.

Is AI in QA suitable for complex, multi-platform applications?

Yes. AI in QA can manage tests across multiple devices, browsers, and environments. It adapts scripts, prioritizes high-risk areas, and integrates with cloud platforms, ensuring consistent validation across complex applications without excessive manual intervention.
