
Top 50+ Quality Control Interview Questions and Answers [2026]

Explore 50+ Quality Control interview questions and answers to help you understand QC concepts, testing practices, and prepare confidently for interviews.

Last Modified on: November 19, 2025


Quality control plays a vital role in ensuring that every product meets performance, safety, and reliability standards. It's about building trust through consistency and precision at every stage of production.

For professionals looking to advance in this field, exploring quality control interview questions offers valuable insight into core practices such as process optimization, defect prevention, and compliance, while helping strengthen analytical thinking and confidence during interviews.


Freshers-Level Quality Control Interview Questions

Starting a career in quality control involves understanding key principles, standard methods, and foundational industry practices. These fresher-level quality control interview questions are designed to help candidates strengthen their basics, grasp core QC techniques, and approach their first interviews with confidence.

1. What Is Quality Control (QC)?

Quality Control (QC) is a product-oriented approach that focuses on identifying and correcting defects in finished products or services before they reach the customer. It involves inspection, testing, and measurement activities to ensure that products meet specified requirements and standards.

QC is reactive in nature: it examines outputs to detect problems and takes corrective action when deviations are found. This concept is foundational and commonly appears in quality control interview questions to assess your understanding of product-focused quality practices.

2. What Is the Difference Between Quality Assurance (QA) and Quality Control (QC)?

Quality Assurance (QA) builds quality into the process, while QC checks quality in the final product.

Here are the key differences between the two:

| Aspect | Quality Assurance | Quality Control |
|---|---|---|
| Objective | Focuses on providing assurance that the requested quality will be achieved. | Focuses on fulfilling the requested quality. |
| Technique | A technique for managing quality. | A technique for verifying quality. |
| Phase | Involved during the development phase. | Not included in the development phase. |
| Program execution | Does not include execution of the program. | Always includes execution of the program. |
| Type of tool | A managerial tool. | A corrective tool. |
| Orientation | Process-oriented. | Product-oriented. |
| Order of execution | Performed before Quality Control. | Performed after Quality Assurance activities are done. |
| SDLC/STLC | Responsible for the entire software development life cycle. | Responsible for the software testing life cycle. |

Understanding how quality assurance and quality control activities complement each other supports effective process management, defect detection, and overall product quality.

3. What Is the Role of a QC Specialist?

A QC specialist plays a crucial role in software development by ensuring that software products meet specified requirements and quality standards before release.

Their responsibilities include:

- Test Planning and Design: Create comprehensive test plans, test cases, and testing strategies.

- Test Execution: Perform manual and automated testing across different software components.

- Defect Identification: Find, document, and track bugs throughout the testing process.

- Quality Verification: Validate that software meets functional and non-functional requirements.

- Documentation: Maintain detailed test reports, defect logs, and quality metrics.

- Collaboration: Work closely with developers, business analysts, and project managers.

Additional tasks include reviewing requirements for testability, creating test data, participating in code reviews, and supporting UAT. Understanding this role is essential and often covered in quality control interview questions to test your grasp of real-world testing responsibilities.

4. What Are the Different Types of Testing in QC?

QC involves different types of software testing to ensure comprehensive coverage:

Manual Testing and Automated Testing:

- Manual Testing: Human testers execute test cases manually.

- Automated Testing: Tools and scripts execute tests automatically.

Based on Knowledge of Code:

- Black Box Testing: Testing without knowledge of internal code structure.

- White Box Testing: Testing with complete knowledge of code implementation.

- Gray Box Testing: A combination of black box and white box approaches.

Based on Testing Levels:

- Unit Testing: Testing individual components or modules.

- Integration Testing: Testing interactions between integrated components.

- System Testing: Testing the complete integrated system.

- Acceptance Testing: Validating software meets business requirements.

Based on Functionality:

- Functional Testing: Verifies software functions according to specifications.

- Non-Functional Testing: Tests performance, security, usability, and other quality attributes.

Specific Testing Types:

- Smoke Testing: Basic functionality verification after build deployment.

- Sanity Testing: Narrow regression testing focused on specific functionality.

- Regression Testing: Ensuring existing functionality works after changes.

- Performance Testing: Evaluating speed, scalability, and resource usage.

- Security Testing: Identifying vulnerabilities and security weaknesses.

- Usability Testing: Assessing user experience and interface design.

- Compatibility Testing: Ensuring software works across different environments.

- API Testing: Testing application programming interfaces.

- Database Testing: Validating data integrity and database operations.

Knowing these types is fundamental, and they come up in many quality control interview questions.

5. What Is a Test Case? What Makes It Good?

A test case is a detailed document that specifies the conditions, steps, input data, and expected results needed to verify a particular software feature or requirement. It serves as a blueprint for testing activities.

Components of a Test Case:

- Test Case ID: Unique identifier for tracking.

- Test Description: Brief summary of what is being tested.

- Preconditions: Setup requirements that must be met before test execution.

- Test Steps: Detailed actions to be performed.

- Input Data: Specific data values to be used.

- Expected Results: Anticipated outcome of the test.

- Actual Results: What actually happened during execution.

- Pass/Fail Status: Test execution result.

A good test case should be written in simple, unambiguous language with clear, easy-to-follow steps and specific, measurable expected results. It must cover all aspects of the functionality being tested, including positive and negative scenarios, boundary conditions, and edge cases. Knowing how to write such test cases is key for anyone preparing for quality control interview questions focused on real-world testing workflows.
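To make the components above concrete, here is a minimal Python sketch that models a test case as a dataclass. The class name `TestCaseRecord`, its field names, the `record_result` helper, and the login example are all hypothetical; the Pass/Fail rule (exact match between actual and expected result) is a simplification, since many real checks compare results more loosely.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TestCaseRecord:
    """A test case whose fields mirror the components listed above (hypothetical names)."""
    test_case_id: str
    description: str
    preconditions: List[str]
    steps: List[str]
    input_data: dict
    expected_result: str
    actual_result: Optional[str] = None  # filled in during execution
    status: str = "Not Run"              # becomes "Pass" or "Fail" after execution

    def record_result(self, actual: str) -> None:
        """Store the observed outcome and derive Pass/Fail by exact comparison."""
        self.actual_result = actual
        self.status = "Pass" if actual == self.expected_result else "Fail"


# Hypothetical negative scenario for a login page.
tc = TestCaseRecord(
    test_case_id="TC-LOGIN-002",
    description="Login is rejected for a wrong password",
    preconditions=["User 'alice' exists", "Login page is open"],
    steps=["Enter username", "Enter password", "Click 'Sign in'"],
    input_data={"username": "alice", "password": "wrong-pass"},
    expected_result="Error: invalid credentials",
)
tc.record_result("Error: invalid credentials")
print(tc.status)  # Pass
```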

6. What Are the Phases of Standard QC Procedures?

Standard QC procedures in software development follow a systematic approach through multiple phases. Each phase has specific objectives and deliverables that together ensure thorough validation and controlled release of software.

Phase 1: Test Planning

- Analyze requirements and define testing scope

- Identify test objectives, approach, and strategy

- Determine resource requirements and timelines

- Create a master test plan document

- Define entry and exit criteria for testing

Phase 2: Test Design

- Design test cases based on requirements

- Create test scenarios and test data

- Develop test scripts for automated testing

- Design test environment setup requirements

- Create a traceability matrix linking tests to requirements

Phase 3: Test Environment Setup

- Configure hardware and software environments

- Install and configure testing tools

- Set up test data and databases

- Verify environment readiness for testing

- Conduct environment smoke testing

Phase 4: Test Execution

- Execute test cases according to the test plan

- Log defects found during testing

- Perform retesting of fixed defects

- Conduct regression testing for impacted areas

- Document test results and maintain test logs

Phase 5: Defect Tracking and Management

- Report and prioritize identified defects

- Track defect lifecycle from discovery to closure

- Collaborate with the development team for defect resolution

- Verify defect fixes through retesting

- Maintain defect metrics and reports

Phase 6: Test Reporting and Closure

- Generate comprehensive test reports

- Analyze test coverage and effectiveness metrics

- Document lessons learned and best practices

- Obtain sign-off from stakeholders

- Archive test artifacts for future reference

Knowing these phases and their deliverables often comes up in quality control interview questions, especially for roles involving test environment coordination.

7. What Is the Difference Between a Build and a Release?

Builds are work-in-progress versions, while releases are finished products.

Here are the key differences between the two:

| Aspect | Build | Release |
|---|---|---|
| Definition | Compiled version of the software code, ready for testing | Officially delivered software version for end users |
| Purpose | Internal testing and validation | Customer/user deployment |
| Audience | Development and QA teams | End users, customers, production environment |
| Frequency | Multiple builds per day or week | Periodic releases (weeks or months) |
| Quality Level | May contain known bugs for testing | Thoroughly tested and approved |
| Environment | Development/test environments | Production/customer environments |

8. Explain the Bug (Defect) Life Cycle

The bug life cycle represents the journey of a defect from discovery to resolution.

Typical phases include:

- New / Open: When a tester finds a bug, they report it with basic details like description, severity, and priority. The bug gets an initial status of "New" and is assigned to the development team for review.

- Assigned: The development team assigns the bug to a specific developer who takes ownership. The developer analyzes the issue to understand what's causing it and estimates how long it will take to fix.

- In Progress / Active: The developer starts working on fixing the bug. They identify the root cause, make the necessary code changes, and perform basic testing to ensure their fix works.

- Fixed / Resolved: Once the developer completes the fix, they commit their changes to the code repository and deploy them to the test environment. The bug status is updated to "Fixed" or "Resolved."

- Retest / Pending Retest: The bug goes back to the tester for verification. The tester runs tests to confirm the bug is actually fixed and checks that the fix hasn't broken anything else.

- Verified / Closed: If the tester confirms the bug is fixed and no new issues are found, the defect is marked as "Closed" or "Verified," indicating successful resolution.

A clear understanding of this cycle is essential for a QA professional; it appears in most quality control interview questions because it tests your grasp of defect management processes.
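The life cycle above behaves like a small state machine. The Python sketch below encodes the listed statuses and the allowed moves between them, rejecting anything else. The `Bug` class and `TRANSITIONS` table are illustrative, and the Retest → Assigned move for a failed retest is an assumption not described above; real trackers such as Jira let each team configure its own workflow.

```python
from enum import Enum


class BugStatus(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    IN_PROGRESS = "In Progress"
    FIXED = "Fixed"
    RETEST = "Retest"
    CLOSED = "Closed"


# Allowed moves between statuses. RETEST -> ASSIGNED (a failed retest) is an
# assumption added for illustration; workflows vary by team and tool.
TRANSITIONS = {
    BugStatus.NEW: {BugStatus.ASSIGNED},
    BugStatus.ASSIGNED: {BugStatus.IN_PROGRESS},
    BugStatus.IN_PROGRESS: {BugStatus.FIXED},
    BugStatus.FIXED: {BugStatus.RETEST},
    BugStatus.RETEST: {BugStatus.CLOSED, BugStatus.ASSIGNED},
    BugStatus.CLOSED: set(),
}


class Bug:
    def __init__(self, title: str) -> None:
        self.title = title
        self.status = BugStatus.NEW
        self.history = [BugStatus.NEW]

    def move_to(self, new_status: BugStatus) -> None:
        """Change status, rejecting moves the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"Cannot move from {self.status.value} to {new_status.value}")
        self.status = new_status
        self.history.append(new_status)


bug = Bug("Checkout button unresponsive")
for step in (BugStatus.ASSIGNED, BugStatus.IN_PROGRESS, BugStatus.FIXED,
             BugStatus.RETEST, BugStatus.CLOSED):
    bug.move_to(step)
print(bug.status.value)  # Closed
```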

9. What Is a Traceability Matrix, and Why Is It Important?

A traceability matrix is a document that maps and traces the relationships between requirements, test cases, and defects throughout the Software Development Life Cycle (SDLC).

It provides a structured way to ensure complete test coverage and requirement validation.

A traceability matrix is important for the following reasons:

- Ensures Complete Coverage: Confirms all requirements are tested and mapped to relevant test cases.

- Identifies Gaps: Highlights untested areas to maintain full validation.

- Tracks Changes: Helps assess how requirement updates impact related test cases.

- Improves Visibility: Monitors testing progress and quality status at every stage.

- Supports Prioritization: Focuses testing on critical business requirements.

- Reduces Risk: Prevents missing functionality and defects before release.

A well-maintained traceability matrix improves test coverage and control, a key topic in many quality control interview questions.

10. What Are the Entry and Exit Criteria for Testing?

Entry and exit criteria define when testing should start and when it can be considered complete. These criteria act as checkpoints to ensure that testing begins only when all prerequisites are met and ends once quality objectives are achieved.

Entry Criteria:

- Approved Requirements: All functional and technical specifications are reviewed and finalized.

- Stable Test Environment: Hardware, software, and tools are properly configured.

- Deployable Build: A testable version of the application is available.

- Reviewed Test Cases: Test cases are documented, reviewed, and ready for execution.

- Prepared Test Data: Input data is validated and available for test runs.

Exit Criteria:

- All Tests Executed: Planned test cases have been run with acceptable pass rates.

- Critical Defects Fixed: Major and high-priority issues are resolved and verified.

- Adequate Test Coverage: Requirements are fully validated against test cases.

- Performance Targets Met: The software meets stability and performance benchmarks.

- Final Test Reports: Detailed results and metrics are documented for stakeholder review.

Entry and exit criteria serve as essential checkpoints in quality control processes, a frequently discussed topic in quality control interview questions.
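Exit criteria are easiest to enforce when each one is checked explicitly. The Python sketch below assumes a hypothetical `RunSummary` record and a 95% minimum pass rate; both are illustrative rather than standard values, and the performance criterion is left out because it depends on project-specific benchmarks.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical snapshot of a test cycle's results."""
    planned: int
    executed: int
    passed: int
    open_critical_defects: int
    requirements_total: int
    requirements_covered: int


def exit_criteria_met(s: RunSummary, min_pass_rate: float = 0.95) -> dict:
    """Evaluate each exit criterion separately so a report can show which one failed.

    The 0.95 pass-rate default is an assumption; real projects set their own
    thresholds in the test plan.
    """
    return {
        "all_tests_executed": s.executed == s.planned,
        "pass_rate_ok": s.executed > 0 and s.passed / s.executed >= min_pass_rate,
        "no_open_critical_defects": s.open_critical_defects == 0,
        "full_requirement_coverage": s.requirements_covered == s.requirements_total,
    }


summary = RunSummary(planned=200, executed=200, passed=192,
                     open_critical_defects=1,
                     requirements_total=40, requirements_covered=40)
results = exit_criteria_met(summary)
print(results)
print("Ready to exit:", all(results.values()))
```

Here one open critical defect blocks exit even though the pass rate (96%) clears the threshold, which mirrors how a single unmet criterion holds back sign-off.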

11. Describe Verification and Validation

Verification and Validation are two key aspects of software quality assurance that ensure the product is both correctly built and meets user needs.

- Verification: Verification checks whether the software is developed according to specified requirements and design documents.

  - Static Process: Involves reviewing documents without executing code.

  - Process-Oriented: Ensures the product is built right.

  - Prevention-Based: Detects defects early in the lifecycle.

  - Document Review: Includes reviews, walkthroughs, and inspections.

- Validation: Validation ensures that the final product meets user expectations and performs as intended.

  - Dynamic Process: Involves executing the code.

  - Product-Oriented: Ensures the right product is built.

  - Detection-Based: Identifies defects through execution.

  - User-Focused: Confirms the software fulfills customer needs.

Though they may sound similar, verification and validation are performed differently in the STLC process: verification asks "Are we building the product right?", while validation asks "Are we building the right product?"

12. What Are the Common Test Documents in QC?

The key test documents used in quality control include:

- Test Plan: High-level document outlining testing approach, scope, resources, and timeline for a project or release.

- Test Strategy: Defines the overall testing methodology, types of testing to be performed, and guidelines for the testing process.

- Test Cases: Detailed step-by-step instructions for testing specific functionalities, including expected results.

- Test Scenarios: High-level test conditions derived from use cases that describe what to test.

- Requirement Traceability Matrix (RTM): Maps requirements to test cases, ensuring complete coverage.

- Test Data: Sample data sets used during test execution to validate different scenarios.

- Defect Reports: Documents that capture bugs found during testing with details like severity, priority, and steps to reproduce.

- Test Execution Reports: Results of test runs showing pass/fail status and execution metrics.

- Test Summary Report: Comprehensive report summarizing testing activities, coverage, and quality metrics.

13. Define Test Plan and Test Strategy

A test plan and test strategy are two essential documents in software testing that define the scope, approach, and objectives of the testing process. While both focus on ensuring quality, they differ in purpose and level of detail.

- Test Plan: A project-specific document that details what and how to test, including scope, resources, environment setup, risks, and timelines. It is created by the Test Lead or Manager for each release.

- Test Strategy: A high-level organizational document that defines the overall testing approach, types of testing, tools, and methodologies. It guides multiple projects and remains largely unchanged across releases.

14. What Is the Requirement Traceability Matrix in QC?

The requirement traceability matrix ensures complete test coverage by mapping each requirement to its corresponding test cases.

- Forward Traceability: Links requirements to test cases, ensuring every requirement has corresponding test coverage.

- Backward Traceability: Links test cases back to requirements, ensuring no unnecessary test cases exist.
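Forward and backward traceability can both be computed from a single mapping of test cases to requirements. In this Python sketch the requirement and test-case IDs are hypothetical; a requirement with no test cases is a coverage gap, and a test case with no requirement is a candidate for review or removal.

```python
# Hypothetical requirement and test-case IDs, for illustration only.
requirements = {"REQ-1", "REQ-2", "REQ-3"}

# Each test case lists the requirements it verifies.
test_cases = {
    "TC-101": {"REQ-1"},
    "TC-102": {"REQ-1", "REQ-2"},
    "TC-103": set(),  # not linked to any requirement
}


def forward_trace(reqs, tests):
    """Requirement -> test cases that cover it (forward traceability)."""
    return {r: sorted(tc for tc, linked in tests.items() if r in linked)
            for r in sorted(reqs)}


def backward_trace(reqs, tests):
    """Test case -> requirements it traces back to (backward traceability)."""
    return {tc: sorted(linked & reqs) for tc, linked in sorted(tests.items())}


fwd = forward_trace(requirements, test_cases)
bwd = backward_trace(requirements, test_cases)

untested_requirements = [r for r, tcs in fwd.items() if not tcs]
orphan_test_cases = [tc for tc, rs in bwd.items() if not rs]

print(fwd)                    # {'REQ-1': ['TC-101', 'TC-102'], 'REQ-2': ['TC-102'], 'REQ-3': []}
print(untested_requirements)  # ['REQ-3']
print(orphan_test_cases)      # ['TC-103']
```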

15. What Is Regression Testing?

Regression testing re-runs previously executed test cases to confirm that new changes, bug fixes, or enhancements haven’t affected existing functionality.

Purpose:

- Verify Stability: Ensures that new code changes do not affect or break existing functionality.

- Maintain Reliability: Confirms system stability after implementing bug fixes, enhancements, or adding new features.
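A regression suite is a set of checks that passed before and are re-run after every change. The sketch below uses Python's standard `unittest` module; the `apply_discount` function and the past bug it guards against are hypothetical.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountRegressionTests(unittest.TestCase):
    """Previously passing checks, re-run after every change to catch regressions."""

    def test_standard_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_rejected(self):
        # Guards a hypothetical past bug where discounts over 100% gave negative prices.
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Running the module through a test runner such as `python -m unittest <module>` after each code change re-executes all three checks; any failure signals that existing behavior has regressed.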

16. Differentiate Functional and Non-Functional Testing

Functional testing validates what the system does by verifying that software features work according to specified business requirements.

Non-functional testing evaluates how well the system performs by testing quality attributes like performance, security, and usability under various conditions.

| Aspect | Functional Testing | Non-Functional Testing |
|---|---|---|
| Definition | Tests what the system does; validates business requirements and functionality | Tests how the system performs; validates quality attributes and characteristics |
| Primary Focus | System behavior and features | System performance and quality attributes |
| Objective | Verify that the software functions according to the specified requirements | Verify system performance, reliability, scalability, and other quality factors |
| Question Asked | "Does the system do what it's supposed to do?" | "How well does the system perform its functions?" |

Understanding the difference between functional and non-functional testing helps you decide which type of testing is needed at each stage of software development.

17. What Is Black Box Testing? Give an Example

Black box testing is a software testing technique where the tester has no knowledge of the internal code, logic, or structure. Instead, the focus is on verifying input-output behavior based on requirements.

Example: Testing a login page by entering valid and invalid username-password combinations to check whether access is granted or denied appropriately, without knowing how authentication is implemented. This concept appears in many quality control interview questions.
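The login example can be written as a small table of inputs and expected outcomes. In this Python sketch, `login` and its credentials are a hypothetical stand-in for the real system under test; the test cases are derived only from the requirement ("valid credentials grant access, anything else is denied"), never from reading the implementation.

```python
# Hypothetical stand-in for the system under test. In real black box testing
# this would be the deployed login page or API; its internals are unknown to the tester.
def login(username: str, password: str) -> bool:
    return username == "alice" and password == "S3cure!pass"


# Cases derived from the requirement alone: input pair plus expected access decision.
cases = [
    ("alice", "S3cure!pass", True),   # valid username and password
    ("alice", "wrong",       False),  # valid username, invalid password
    ("bob",   "S3cure!pass", False),  # unknown username
    ("",      "",            False),  # empty fields
]

for username, password, expected in cases:
    actual = login(username, password)
    outcome = "PASS" if actual == expected else "FAIL"
    print(f"{outcome}: login({username!r}, {password!r}) -> {actual}")
```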

18. What Is White Box Testing? Give an Example

White box testing is a testing technique where the tester has full visibility of the internal code, logic, and structure. It focuses on verifying code paths, conditions, and loops to ensure complete coverage and detect logic errors early.

Example: Testing a loan interest calculation function by reviewing all code paths, validating if-else conditions, and ensuring each line executes at least once. This question comes up frequently in quality control interviews.
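The loan-interest example can be sketched in Python. The `loan_interest` function, its rate tiers, and the figures are hypothetical; the point is that the inputs are chosen by reading the code so that every branch, including the guard clause, runs at least once.

```python
def loan_interest(principal: float, years: int) -> float:
    """Hypothetical simple-interest rule with tiered rates."""
    if principal <= 0:
        raise ValueError("principal must be positive")
    if years <= 5:
        rate = 0.08
    elif years <= 15:
        rate = 0.07
    else:
        rate = 0.065
    return round(principal * rate * years, 2)


# White box test inputs chosen by reading the code: one per rate branch.
branch_cases = {
    "years <= 5":      (10_000, 3, 2400.0),
    "5 < years <= 15": (10_000, 10, 7000.0),
    "years > 15":      (10_000, 20, 13000.0),
}
for branch, (principal, years, expected) in branch_cases.items():
    assert loan_interest(principal, years) == expected, branch

# The guard clause is a branch too: a non-positive principal must be rejected.
try:
    loan_interest(0, 5)
except ValueError:
    print("guard branch covered")
```

To measure branch coverage rather than reason about it, a tool such as coverage.py can be run with `coverage run --branch` followed by `coverage report`.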

19. What Is Static Testing?

Static testing is a software testing method that examines code, documentation, and design without executing the program or application. It involves manual examination through reviews, walkthroughs, inspections, and automated analysis using static analysis tools to find defects early in development.

Static testing is a key concept that often appears in quality control interview questions. It focuses on finding issues like coding standard violations, syntax errors, unused variables, and security vulnerabilities before the code is run.
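As an illustration of automated static analysis, the Python sketch below uses the standard-library `ast` module to flag local variables that are assigned but never read, without executing the code under review. It is deliberately simplified (it ignores `global`/`nonlocal` declarations and nested scopes), and the `SOURCE` snippet is hypothetical; linters such as Pylint or flake8 perform this kind of check far more thoroughly.

```python
import ast

# Hypothetical code under review; it is parsed, never executed.
SOURCE = '''
def total(prices):
    tax = 0.2
    result = 0
    for p in prices:
        result += p
    return result
'''


def unused_locals(source: str) -> list:
    """Report names assigned inside a function but never read."""
    tree = ast.parse(source)  # raises SyntaxError for code that does not parse
    findings = []
    for func in ast.walk(tree):
        if not isinstance(func, ast.FunctionDef):
            continue
        stored, loaded = {}, set()
        for node in ast.walk(func):
            if isinstance(node, ast.Name):
                if isinstance(node.ctx, ast.Store):
                    stored.setdefault(node.id, node.lineno)  # remember first assignment line
                elif isinstance(node.ctx, ast.Load):
                    loaded.add(node.id)
        for name, line in stored.items():
            if name not in loaded:
                findings.append(f"line {line}: '{name}' assigned in {func.name}() but never used")
    return findings


print(unused_locals(SOURCE))
```

Because `ast.parse` only parses the source, a syntax error in the input is also reported (as a `SyntaxError`) without the program ever running.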

20. What Is Configuration Testing?

Configuration testing verifies that software applications work correctly across different hardware, software, network, and operating system configurations.

It ensures the application performs optimally on various combinations of browsers, operating systems, devices, and network conditions that end users might have.

This testing helps identify compatibility issues and ensures the software works consistently across different environments and system configurations.
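Configuration testing usually starts from a matrix of target environments. This Python sketch builds one with `itertools.product` and filters out combinations that cannot occur; the browser, OS, and network lists are hypothetical, and a real project would derive them from what its users actually run.

```python
from itertools import product

# Hypothetical target environments.
browsers = ["Chrome", "Firefox", "Safari"]
operating_systems = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
networks = ["wifi", "3g"]


def is_valid(browser: str, os_name: str) -> bool:
    """Safari currently ships only on Apple platforms, so skip it elsewhere."""
    return browser != "Safari" or os_name.startswith("macOS")


configurations = [
    (b, o, n)
    for b, o, n in product(browsers, operating_systems, networks)
    if is_valid(b, o)
]

print(len(configurations))  # 14
for config in configurations[:3]:
    print(config)
```

Of the 18 raw combinations, the four Safari-on-Windows/Linux pairs are dropped; larger matrices are often trimmed further with pairwise selection.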

21. Name Some Common QC Tools Used for Defect Tracking

Defect tracking is a key part of quality control, helping teams manage and resolve bugs efficiently throughout the software life cycle.

Here are some widely used quality control tools for defect tracking:

- JIRA: A leading commercial tool offering customizable workflows, reporting, and third-party integrations.

- Bugzilla: Open-source web-based system with strong defect management features.

- Azure DevOps: Microsoft’s platform combining defect tracking, project management, and CI/CD tools.

- MantisBT: Free, open-source issue tracker with a simple interface for basic defect handling.

- Redmine: Web-based project management tool with built-in issue tracking.

- HP Quality Center (ALM): Enterprise-grade tool providing comprehensive defect tracking and life cycle management.

22. How Is Test Coverage Calculated?

Test coverage measures the extent to which software has been tested. It helps identify untested areas and improves overall Quality Assurance (QA).

Common Test Coverage Metrics:

- Statement Coverage: (Executed Statements / Total Statements) × 100

- Branch Coverage: (Executed Branches / Total Branches) × 100

- Function Coverage: (Called Functions / Total Functions) × 100

- Requirement Coverage: (Requirements Tested / Total Requirements) × 100

- Test Case Coverage: (Executed Test Cases / Total Test Cases) × 100

Example: If 80 out of 100 statements are executed, the statement coverage is 80%.
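All of the metrics above share one ratio. A minimal Python helper, assuming the covered and total counts are already known (in practice a coverage tool or test management system supplies them); apart from the 80-of-100 example, the figures are hypothetical:

```python
def coverage_percent(covered: int, total: int) -> float:
    """Generic coverage ratio used by every metric above: covered / total x 100."""
    if total <= 0:
        raise ValueError("total must be positive")
    return covered * 100 / total


print(coverage_percent(80, 100))  # statement coverage -> 80.0
print(coverage_percent(45, 60))   # branch coverage -> 75.0
print(coverage_percent(18, 20))   # requirement coverage -> 90.0
```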

23. What Is Exploratory Testing?

Exploratory testing is an unscripted, hands-on approach where testers learn, design, and execute tests simultaneously. It’s often highlighted in quality control interview questions for its emphasis on creativity, adaptability, and real-time learning.

24. What Is Bug Severity vs Priority?

Bug severity and priority are two essential concepts in quality control that help teams assess and manage bugs efficiently. While both describe defects, they focus on different aspects: severity measures the technical impact, while priority defines the urgency of fixing it.

Bug Severity: Severity determines how much a defect affects the software’s functionality or stability. It reflects the technical seriousness of an issue.

- Critical / Blocker: System crash, data loss, or security breach.

- High: Major functionality or key feature not working.

- Medium: Minor issue with an available workaround.

- Low: Cosmetic or user interface-level defect.

Bug Priority: Priority defines how quickly a defect should be resolved based on business needs or project deadlines.

- P1 (Urgent): Requires immediate fix; blocks release.

- P2 (High): Should be fixed in the current release cycle.

- P3 (Medium): Can be addressed when resources permit.

- P4 (Low): May be deferred to a future release.

Understanding severity and priority is a common quality control interview question, as it demonstrates a tester’s ability to balance technical impact with business urgency.
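Because severity and priority are independent, a triage queue needs both. The Python sketch below uses hypothetical defects and sorts by priority first, using severity only to break ties; that ordering rule is an assumption, and some teams weigh the two differently.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


class Priority(IntEnum):
    P4 = 1
    P3 = 2
    P2 = 3
    P1 = 4


# Hypothetical defects showing that severity and priority are set independently.
defects = [
    ("Typo in company name on home page", Severity.LOW, Priority.P1),
    ("Crash when exporting a 10-year report", Severity.CRITICAL, Priority.P3),
    ("Payment fails for saved cards", Severity.HIGH, Priority.P1),
    ("Tooltip misaligned in settings", Severity.LOW, Priority.P4),
]

# Fix order: priority (business urgency) first, severity breaks ties.
queue = sorted(defects, key=lambda d: (d[2], d[1]), reverse=True)
for title, sev, pri in queue:
    print(f"{pri.name} / {sev.name}: {title}")
```

The typo is low severity but P1 because it is highly visible, while the rarely used export crash is critical but can wait for a later cycle.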

25. What Are Smoke and Sanity Testing?

Both smoke testing and sanity testing are quick validation techniques used to ensure software stability, but they differ in scope and purpose.

- Smoke Testing: Smoke testing checks whether the critical functionalities of an application work after a new build is deployed. It acts as a build verification test to ensure the system is stable enough for deeper testing.

- Sanity Testing: Sanity testing focuses on verifying specific functionalities or bug fixes after small code changes. It ensures that recent updates haven’t broken existing behavior.

26. What Is Defect Leakage?

Defect leakage occurs when bugs that should have been detected in a testing phase are discovered in a later phase or after the software is released to users. It indicates a gap in the quality control process and is often discussed in quality control interview questions.

Phase-wise Defect Leakage:

- Unit Testing Leakage: Defects found in integration testing that should have been caught during unit testing.

- System Testing Leakage: Defects found in user acceptance testing (UAT) that should have been detected in system testing.

Defect leakage highlights weaknesses in earlier testing phases and helps improve test coverage and process effectiveness.
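The text does not give a formula, but one commonly used convention expresses leakage for a phase as the defects found later divided by the defects that phase caught, times 100; some teams instead divide by the total found in both phases. A minimal Python sketch of the first convention, with hypothetical counts:

```python
def defect_leakage(found_later: int, found_in_phase: int) -> float:
    """Leakage % for a phase: defects that escaped it relative to defects it caught.

    Assumed convention: leakage = found_later / found_in_phase * 100.
    """
    if found_in_phase <= 0:
        raise ValueError("found_in_phase must be positive")
    return found_later * 100 / found_in_phase


# Hypothetical counts: system testing caught 120 defects, and UAT found
# 6 more that system testing should have caught.
print(defect_leakage(found_later=6, found_in_phase=120))  # 5.0
```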

Intermediate-Level Quality Control Interview Questions

Here are some intermediate-level quality control interview questions designed for professionals who have hands-on experience and are growing toward more advanced responsibilities. These questions focus on practical challenges such as risk-based testing, root cause analysis, defect management, continuous integration, CAPA processes, and building a strong foundation in automation.

They are designed to evaluate not just your technical knowledge but also your ability to apply QC concepts, improve testing workflows, and support product quality across teams: key skills for mid-level QA, QC, and software quality professionals.

27. How Do You Prioritize Test Cases for Execution?

Prioritizing test cases helps ensure maximum coverage within limited time and resources, a common quality control interview question topic.

Priority Factors to Consider:

- Business Criticality: Focus on login, payment, and core business functions.

- Risk Assessment: Prioritize high-risk or frequently changed modules.

- Customer Impact: Test user-facing and revenue-critical features first.

- Technical Factors: Consider dependencies, execution time, and test data availability.

Practical Steps:

- Execute critical user journeys first.

- Test new or modified features before stable ones.

- Run automated regression tests early.

- Defer cosmetic or low-risk tests if time is short.
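The factors above can be combined into a simple weighted score. The weights, the 1 to 5 rating scale, the bonus for recently changed features, and the test names in this Python sketch are hypothetical and would need tuning for a real project.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    business_criticality: int  # 1 (low) .. 5 (high)
    risk: int                  # 1 .. 5, higher for complex or frequently changed modules
    customer_impact: int       # 1 .. 5
    changed_recently: bool     # new or modified feature in this build


# Hypothetical weights; tune them to the project's own risk profile.
WEIGHTS = {"business_criticality": 0.4, "risk": 0.3, "customer_impact": 0.3}
CHANGE_BONUS = 1.0


def priority_score(tc: Candidate) -> float:
    score = (WEIGHTS["business_criticality"] * tc.business_criticality
             + WEIGHTS["risk"] * tc.risk
             + WEIGHTS["customer_impact"] * tc.customer_impact)
    # Test new or modified features before stable ones.
    return score + (CHANGE_BONUS if tc.changed_recently else 0.0)


suite = [
    Candidate("Checkout payment", 5, 4, 5, False),
    Candidate("Profile avatar upload", 2, 2, 2, True),
    Candidate("Footer link colors", 1, 1, 1, False),
    Candidate("Login with MFA", 5, 3, 5, True),
]

ordered = sorted(suite, key=priority_score, reverse=True)
for tc in ordered:
    print(f"{priority_score(tc):.1f}  {tc.name}")
```

With these weights the recently changed login flow (5.4) edges out checkout (4.7), and the cosmetic footer check (1.0) is the first to defer when time runs short.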

28. How Do You Handle Incomplete Software Requirements?

Incomplete requirements are a common challenge in software testing and a frequent topic in quality control interview questions.

Here's a systematic approach to handling this situation:

- Immediate Actions: Document all gaps by creating a list of missing, unclear, or ambiguous requirements. Categorize gaps by severity and impact on testing. Schedule meetings with business analysts, product owners, and developers to get clarification. Ask specific questions about unclear functionality and escalate critical missing requirements to project management.

- Proactive Strategies: Use exploratory testing to understand how the system actually behaves and document discovered functionality that wasn't in the original requirements. Write down all assumptions you make about system behavior and get them validated by stakeholders before proceeding. Create assumption-based test cases that can be modified later as more information becomes available.

- Collaboration Techniques: Participate in requirement review sessions and work closely with developers to understand implementation details. Use prototypes or mockups to clarify expected behavior. Engage with end users when possible to understand real-world usage patterns.

- Risk Management: Identify areas where incomplete requirements pose the highest risk and focus testing efforts on well-defined requirements first. Create contingency plans for different requirement interpretations and document potential impacts of requirement gaps on the project timeline.

29. What Are the Contents of a Typical Test Plan?

A typical software test plan includes several core components. It is a frequently asked topic in quality control interviews, since creating a test plan is a fundamental step in structured testing.

Key Contents of a Test Plan:

- Introduction & Objectives: Purpose of testing, objectives, and scope of the testing activity.

- Scope (In-scope/Out-of-scope): Features, modules, or functionalities included and excluded from testing.

- Test Items: Specific components, systems, or builds to be tested.

- Test Approach/Strategy: Testing levels, types (functional, non-functional), methods (manual/automation), and rationale.

- Test Schedule/Timeline: Start and end dates, testing phases, milestones, and deliverables.

- Resource Allocation/Roles: Assignment of tasks, roles, and responsibilities for test team members.

- Test Environment: Configuration details, hardware, software, network setups, and access requirements.

- Test Deliverables: Expected outputs, such as test cases, scripts, data, defect reports, and summary reports.

- Test Cases & Scenarios: Listing of all test conditions to be executed, stepwise procedures, and pass/fail criteria.

- Tools: Test management, automation, bug tracking, and support tools used throughout testing.

- Defect Management: Procedures and standards for reporting, tracking, and resolving bugs.

- Risk Management: Potential test risks and mitigation or contingency plans.

- Exit/Acceptance Criteria: Definition of when testing will stop and benchmarks for acceptance.

- Reporting & Communication: How progress, coverage, and results will be documented and shared with stakeholders.

30. Explain the Review and Inspection Process in QC

The review and inspection process is a key aspect of quality control and is often featured in quality control interview questions, as it demonstrates how early defect detection improves product reliability.

This approach involves peer evaluation of software artifacts like requirements, code, and test cases before progressing further in development, enhancing quality and reducing costs.

Key Steps:

- Planning: A moderator organizes the review, selects artifacts, and assigns participants.

- Preparation: Reviewers individually examine artifacts using defined checklists.

- Overview Meeting: The author explains the product’s purpose, scope, and context.

- Inspection Meeting: Reviewers discuss findings and identify potential defects.

- Defect Logging: All discovered issues are documented formally for tracking.

- Rework: The author corrects the identified defects and applies recommendations.

- Follow-up: The moderator verifies that all critical issues are resolved.

31. How Do You Ensure Requirements Coverage Through Testing?

Ensuring complete requirements coverage is a critical part of quality control and is frequently highlighted in quality control interview questions, as it shows how testers verify that every business and technical requirement is properly addressed.

This approach ensures that all aspects of the system are tested and quality is maintained throughout the software development process.

Key Practices:

- Mapping: Map each requirement to test cases using a Requirements Traceability Matrix (RTM).

- Measuring: Measure coverage metrics like requirement coverage and test case coverage.

- Updating: Continuously update the RTM as requirements evolve.

- Tracking: Use test management tools to track progress and identify coverage gaps.

- Collaborating: Collaborate with testers and stakeholders to clarify and validate requirements.

This structured approach helps ensure that all business and technical requirements are accounted for and verified during the software testing process.
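At its simplest, an RTM maps each requirement to the test cases that verify it, and requirement coverage is the share of requirements with at least one linked test. The following is a minimal sketch with hypothetical requirement and test-case IDs. A fuller version would also check that the linked tests have actually passed.

```python
def requirement_coverage(rtm: dict[str, list[str]]) -> tuple[float, list[str]]:
    """Return (coverage percentage, requirements with no linked test cases)."""
    if not rtm:
        return 0.0, []
    gaps = [req for req, tests in rtm.items() if not tests]
    covered = len(rtm) - len(gaps)
    return 100.0 * covered / len(rtm), gaps


# Hypothetical RTM: requirement ID -> linked test case IDs.
rtm = {
    "REQ-001": ["TC-101", "TC-102"],
    "REQ-002": ["TC-103"],
    "REQ-003": [],            # no test case yet: a coverage gap
    "REQ-004": ["TC-104"],
}

coverage, gaps = requirement_coverage(rtm)
print(f"Requirement coverage: {coverage:.1f}%")  # Requirement coverage: 75.0%
print("Uncovered:", gaps)                         # Uncovered: ['REQ-003']
```

Re-running this check whenever the RTM changes is one way to surface coverage gaps as requirements evolve.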

32. What Are the Steps of Risk-Based Testing?

Risk-based testing is a key approach in quality control and a frequent topic in quality control interviews. It shows how testing effort is prioritized by potential risk so that resources are used well and critical defects are found early.

Key Steps:

- Risk Identification: Identify technical risks like complex algorithms, integration points, and new technologies. Consider business risks, including critical functions, high-visibility features, and regulatory compliance. Evaluate project risks such as tight deadlines, requirement changes, and resource constraints.

- Risk Analysis and Assessment: Assess Risk Probability as High (70-90%), Medium (30-70%), or Low (0-30%). Analyze Risk Impact as High (severe consequences like system failure), Medium (moderate consequences like performance issues), or Low (minor consequences like cosmetic issues). Create a Risk Priority Matrix combining probability and impact to determine High, Medium, and Low priority risks.

- Risk Prioritization: Rank all identified risks in order of priority, considering both quantitative scores and business context. Allocate more testing resources to high-risk areas and assign experienced testers to critical risk areas.

- Test Strategy Development: Design comprehensive test coverage for high-risk areas with multiple test scenarios. Select rigorous testing techniques for high-risk areas and apply exploratory testing to uncover unknown risks.

- Test Execution and Monitoring: Execute high-risk area tests first, allocating more time for thorough testing of critical functions. Monitor test results continuously and adjust the testing approach based on findings.

- Risk Review and Adjustment: Regularly review and update risk assessments, monitor actual defect patterns versus predicted risks, and adjust priorities based on new information. Analyze the effectiveness of the risk-based approach and update processes based on lessons learned.

Following these steps helps teams concentrate testing where it matters most, reduce the risk of critical escapes, and make transparent trade-offs between coverage and schedule.
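The risk priority matrix from the analysis step takes only a few lines of code. In this minimal sketch, the probability bands follow the text. The 3/2/1 level scores, the score cut-offs, and the example risks are assumptions chosen for illustration.

```python
# Level scores are an assumption: High=3, Medium=2, Low=1.
LEVEL_SCORE = {"High": 3, "Medium": 2, "Low": 1}


def probability_level(p: float) -> str:
    """Map a probability (0.0-1.0) onto the bands given in the text."""
    if p >= 0.7:
        return "High"
    if p >= 0.3:
        return "Medium"
    return "Low"


def risk_priority(probability: float, impact: str) -> str:
    """Combine probability and impact into a priority (illustrative cut-offs)."""
    score = LEVEL_SCORE[probability_level(probability)] * LEVEL_SCORE[impact]
    if score >= 6:   # High x High, High x Medium, Medium x High
        return "High"
    if score >= 3:   # Medium x Medium, High x Low, Low x High
        return "Medium"
    return "Low"


# Hypothetical risks: (area, probability, impact)
risks = [
    ("Reporting UI layout", 0.5, "Low"),
    ("Payment gateway integration", 0.8, "High"),
    ("Legacy export module", 0.4, "Medium"),
]

# Execute tests for the highest-priority areas first.
rank = {"High": 0, "Medium": 1, "Low": 2}
for area, p, impact in sorted(risks, key=lambda r: rank[risk_priority(r[1], r[2])]):
    print(f"{risk_priority(p, impact):<6} {area}")
# High   Payment gateway integration
# Medium Legacy export module
# Low    Reporting UI layout
```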

33. How Do You Conduct Root Cause Analysis?

Root Cause Analysis (RCA) is a crucial technique in quality control and is often discussed in quality control interview questions, as it shows how testers identify the fundamental causes of defects to prevent recurrence and improve software reliability.

Key Steps:

- Collect and Analyze Data: Gather defect logs, test results, and related documentation to understand the issue.

- Identify Root Causes: Apply techniques like the “5 Whys” or Fishbone (Ishikawa) diagrams to trace symptoms back to underlying causes.

- Validate Causes: Confirm the identified root causes through re-testing, observations, or additional analysis.

- Document Findings: Record the root causes, corrective actions, and lessons learned for future reference.

- Implement Corrective Actions: Apply fixes or process improvements to prevent similar defects.

- Monitor Effectiveness: Continuously track outcomes to ensure corrective measures address the root causes effectively.

These steps help ensure that defects are not only fixed but prevented from recurring, ultimately improving product quality.

34. What Is Risk in Software Projects and How Do You Manage It?

In software projects, a risk is any uncertain event that could negatively impact objectives such as scope, timeline, quality, or cost. Managing risks is an important part of quality control and is often highlighted in most quality control interviews, as it shows how potential issues are identified, assessed, and mitigated to ensure reliable software delivery.

Risk Management Steps:

- Risk Identification: Brainstorm and document potential risks across project domains.

- Risk Analysis: Evaluate the likelihood and impact of each risk.

- Risk Prioritization: Rank risks based on severity.

- Risk Mitigation Planning: Develop strategies to avoid, transfer, mitigate, or accept risks.

- Monitoring: Continuously track risks and update mitigation plans as the project progresses.

35. Describe the Steps for Preparing a Quality Audit

Quality audits are a crucial part of the quality control process. Questions about preparing for them are common in quality control interviews because they show a systematic approach to evaluating processes.

- Define Audit Scope & Objectives: Decide processes, projects, or areas to audit.

- Develop an Audit Plan: Include a schedule, resources, criteria, and checklists.

- Notify Stakeholders: Inform teams about audit timing and expectations.

- Review Documentation: Study quality manuals, process documents, and records.

- Conduct Pre-Audit Meeting: Clarify objectives and procedures with participants.

- Execute the Audit: Collect evidence via interviews, observations, and document reviews.

- Document Findings: Log non-conformances, observations, and best practices.

- Prepare the Audit Report: Summarize results, highlight issues, and suggest improvements.

- Follow-Up: Track corrective actions to closure.

36. How Do You Select Which Tests to Automate in QC?

Deciding which tests to automate is a critical quality control decision and a frequent interview topic. Common selection criteria include:

- Repetitiveness: Automate tests that run frequently or across multiple builds.

- Stability: Choose mature features unlikely to change rapidly.

- Complexity: Prefer automating tedious or error-prone manual tests.

- High ROI: Focus on tests that save significant manual effort.

- Data-Driven: Tests requiring multiple inputs benefit from automation.

- Critical Functionality: Automate tests covering essential business workflows.

- Compatibility: Ensure the environment supports automation tools effectively.
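A break-even estimate makes the "High ROI" criterion concrete. This is a minimal sketch. It assumes effort is measured in hours and that the automated cost per run includes script maintenance and failure triage. The figures are hypothetical.

```python
import math


def break_even_runs(build_hours: float, manual_hours_per_run: float,
                    automated_hours_per_run: float) -> float:
    """Runs needed before automating a test saves more effort than it cost.

    `automated_hours_per_run` should include upkeep and failure triage,
    not just machine time."""
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return math.inf  # automation never pays back on effort alone
    return math.ceil(build_hours / saving_per_run)


# Hypothetical figures: 16 h to automate, 2 h per manual run, 0.25 h per automated run.
print(break_even_runs(16, 2, 0.25))  # 10
```

A regression check that runs on every nightly build reaches that point within two weeks. A test executed once per release may never reach it, which is why repetitiveness and ROI go hand in hand.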

37. Explain Peer Review and Walkthrough

Peer reviews and walkthroughs are essential quality control practices that catch defects in work products before they reach testing or end users. They are frequently discussed in quality control interviews.

- Peer Review: Colleagues examine artifacts (code, requirements, test cases) to detect defects and suggest improvements before execution. Involves preparation, individual review, and group discussion.

- Walkthrough: The author guides team members step-by-step to explain content and gather feedback. Focuses on knowledge sharing and early defect detection.

Both practices reduce downstream defects and improve team communication.

38. How Do You Track and Prevent Recurring Defects?

Tracking recurring defects is a key quality control activity that stops the same issues from repeatedly slipping past quality gates, and it comes up often in quality control interviews.

- Defect Management: Use defect management systems to tag and analyze recurring issues.

- Root Cause Analysis: Identify underlying problems causing recurring defects.

- Corrective Actions: Implement process improvements and fixes based on analysis.

- Knowledge Base: Maintain a repository of known issues and resolutions.

- Trend Analysis: Detect spikes in recurring defects to prevent future occurrences.

- Test Coverage: Enhance coverage in defect-prone areas and increase regression testing.
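Consistent tagging is what makes recurring defects visible. This minimal sketch assumes each defect record carries hypothetical `module`, `root_cause`, and `sprint` fields set during triage. It counts repeated module/root-cause pairs and builds a per-sprint series for trend analysis.

```python
from collections import Counter

# Hypothetical defect records, e.g. exported from a defect tracker after triage.
defects = [
    {"id": "BUG-101", "module": "checkout", "root_cause": "null-handling", "sprint": 1},
    {"id": "BUG-107", "module": "checkout", "root_cause": "null-handling", "sprint": 2},
    {"id": "BUG-112", "module": "search", "root_cause": "timeout", "sprint": 2},
    {"id": "BUG-118", "module": "checkout", "root_cause": "null-handling", "sprint": 3},
    {"id": "BUG-120", "module": "checkout", "root_cause": "null-handling", "sprint": 3},
]


def recurring(defects, min_count=2):
    """(module, root_cause) pairs seen at least min_count times, most frequent first."""
    counts = Counter((d["module"], d["root_cause"]) for d in defects)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


def per_sprint(defects, module, root_cause):
    """Count of one defect type per sprint, for spotting rising trends."""
    counts = Counter(d["sprint"] for d in defects
                     if d["module"] == module and d["root_cause"] == root_cause)
    last = max(d["sprint"] for d in defects)
    return [counts.get(s, 0) for s in range(1, last + 1)]


print(recurring(defects))
# [(('checkout', 'null-handling'), 4)]
print(per_sprint(defects, "checkout", "null-handling"))
# [1, 1, 2]  -> rising: a candidate for root cause analysis and extra regression tests
```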

39. Explain Defect Cascading and How to Avoid It

Defect cascading occurs when a single defect triggers multiple downstream failures throughout the system. For example, a database connection issue might cause login failures, which then affect user session management, payment processing, and reporting features.

Prevention Strategies:

- Impact Analysis: Map dependencies and conduct thorough impact assessments before releases.

- Isolation Design: Implement circuit breakers, bulkheads, and fail-safe mechanisms.

- Early Detection: Use comprehensive unit and integration testing to catch issues before they propagate.

- Staged Rollouts: Deploy incrementally with monitoring at each stage.

- Root Cause Analysis: Focus on fixing underlying causes rather than just symptoms.
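Circuit breakers are one of the isolation mechanisms named above. A breaker stops a failing dependency, such as the database in the example, from dragging every caller down with it. The following is a minimal, single-threaded sketch. The class name, thresholds, and injectable clock are illustrative assumptions, not any particular library's API.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Minimal, single-threaded circuit breaker.

    After `max_failures` consecutive failures the circuit opens and calls fail
    fast for `reset_after` seconds; once that period has passed, the next call
    is let through as a trial (half-open). Success closes the circuit, failure
    re-opens it."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock            # injectable so the behaviour is testable
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise RuntimeError("circuit open: failing fast instead of cascading")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0
        self.opened_at = None                  # success closes the circuit
        return result


now = [0.0]  # fake clock for the demo
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])

def query_sessions():
    raise ConnectionError("database unavailable")  # hypothetical failing dependency

for _ in range(2):
    try:
        breaker.call(query_sessions)
    except ConnectionError:
        pass

print(breaker.opened_at is not None)        # True: callers now fail fast
now[0] = 11.0                               # reset period has passed
print(breaker.call(lambda: "sessions ok"))  # trial call succeeds: sessions ok
```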

40. What Metrics Do You Use for Assessing QC Effectiveness?

QC effectiveness is commonly assessed using defect rates, test coverage, and defect leakage to ensure product quality and process reliability.

The following metrics help measure how well quality control processes perform:

- Defect Detection Rate: Percentage of defects found before production.

- Escape Rate: Percentage of defects, especially critical ones, that reach production despite QC.

- Test Coverage: Code and requirement coverage percentages.

- Mean Time to Detection (MTTD): Speed of identifying defects.

- Cost of Quality: Comparison of prevention vs. correction costs.

- Customer Satisfaction: End-user experience metrics.

- Test Execution Efficiency: Pass/fail rates, automation coverage.

- Cycle Time: Time from defect identification to resolution.
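Several of these metrics reduce to simple arithmetic. This minimal sketch uses definitions that are assumptions, since teams vary:

- Detection rate: pre-release defects divided by all defects (pre-release plus production).
- Escape rate: the complement of the detection rate. Filter the inputs by severity to track critical escapes only.
- MTTD: time from when a defect was introduced to when it was detected.
- Cycle time: time from detection to resolution.

```python
from datetime import datetime
from statistics import mean


def detection_and_escape(pre_release: int, production: int) -> tuple[float, float]:
    """Defect detection rate and escape rate, both as percentages of all defects."""
    total = pre_release + production
    if total == 0:
        return 100.0, 0.0
    detection = 100.0 * pre_release / total
    return detection, 100.0 - detection


def mean_hours(intervals: list[tuple[datetime, datetime]]) -> float:
    """Average duration in hours of (start, end) timestamp pairs."""
    return mean((end - start).total_seconds() / 3600 for start, end in intervals)


print(detection_and_escape(pre_release=95, production=5))  # (95.0, 5.0)

# MTTD inputs (hypothetical): defect introduced (e.g. commit time) -> detected.
introduced_to_detected = [
    (datetime(2025, 3, 3, 9), datetime(2025, 3, 3, 15)),   # 6 h
    (datetime(2025, 3, 4, 10), datetime(2025, 3, 5, 10)),  # 24 h
]
# Cycle time inputs (hypothetical): detected -> resolved.
detected_to_resolved = [
    (datetime(2025, 3, 3, 15), datetime(2025, 3, 4, 15)),  # 24 h
    (datetime(2025, 3, 5, 10), datetime(2025, 3, 5, 22)),  # 12 h
]
print(mean_hours(introduced_to_detected))  # 15.0
print(mean_hours(detected_to_resolved))    # 18.0
```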

41. What Role Do KPIs Play in QC?

Key Performance Indicators (KPIs) are strategic metrics that align QC activities with business objectives.

- Performance Tracking: Monitor QC process effectiveness over time.

- Resource Allocation: Identify areas needing additional attention or investment.

- Continuous Improvement: Establish baselines and track improvement trends.

- Stakeholder Communication: Provide executives with measurable quality insights.

- Risk Management: Early warning indicators for potential quality issues.

- Team Accountability: Clear targets that drive focused improvement efforts.

42. How Do You Create and Maintain Automation Scripts for QC?

Automation scripts are created using standardized frameworks, clear coding practices, and reusable components to ensure reliability and scalability.

A structured approach ensures efficient and maintainable automation:

- Framework Selection: Choose suitable tools, such as Selenium or Cypress for browser automation and a test framework like TestNG.

- Page Object Model: Implement reusable, maintainable code structures.

- Data-Driven Testing: Separate test data from test logic for flexibility.

- Environment Configuration: Parameterize scripts for different environments.

- Version Control: Track all changes with proper branching strategies.

- Regular Reviews: Conduct scheduled code reviews and refactoring.

- Failure Analysis: Investigate and fix flaky tests promptly.

- Documentation: Maintain clear coding standards and guidance.

- Modular Design: Create reusable components to reduce maintenance overhead.
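The Page Object Model and data-driven testing fit together naturally. In this minimal sketch, the `Driver` interface, the locators, and the login page are hypothetical. In a real suite, a thin adapter over Selenium WebDriver or another tool would implement the three driver methods. Here an in-memory fake stands in so the example runs without a browser.

```python
from typing import Protocol


class Driver(Protocol):
    """The small slice of browser-driver behaviour the page object relies on
    (hypothetical interface; a real adapter would wrap an automation tool)."""

    def type_into(self, locator: str, text: str) -> None: ...
    def click(self, locator: str) -> None: ...
    def text_of(self, locator: str) -> str: ...


class LoginPage:
    """Page object for a hypothetical login page: locators and actions live
    here, so tests never hard-code selectors."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"
    MESSAGE = ".flash-message"

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def login(self, username: str, password: str) -> str:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return self.driver.text_of(self.MESSAGE)


class FakeDriver:
    """In-memory stand-in so the example runs without a browser."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.message = ""

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        ok = self.fields.get(LoginPage.PASSWORD) == "s3cret"
        self.message = "Welcome!" if ok else "Invalid credentials"

    def text_of(self, locator: str) -> str:
        return self.message


# Data-driven: test data lives apart from test logic, so new cases need no new code.
LOGIN_CASES = [
    ("alice", "s3cret", "Welcome!"),
    ("alice", "wrong-password", "Invalid credentials"),
]


def run_login_cases() -> list[bool]:
    return [
        LoginPage(FakeDriver()).login(user, pwd) == expected
        for user, pwd, expected in LOGIN_CASES
    ]


print(run_login_cases())  # [True, True]
```

If the login page's markup changes, only the locators in `LoginPage` need updating, which is where the maintenance savings come from.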

43. What Role Do Cloud-Based Testing Platforms Play in Enhancing Scalability and Cross-Environment Coverage?

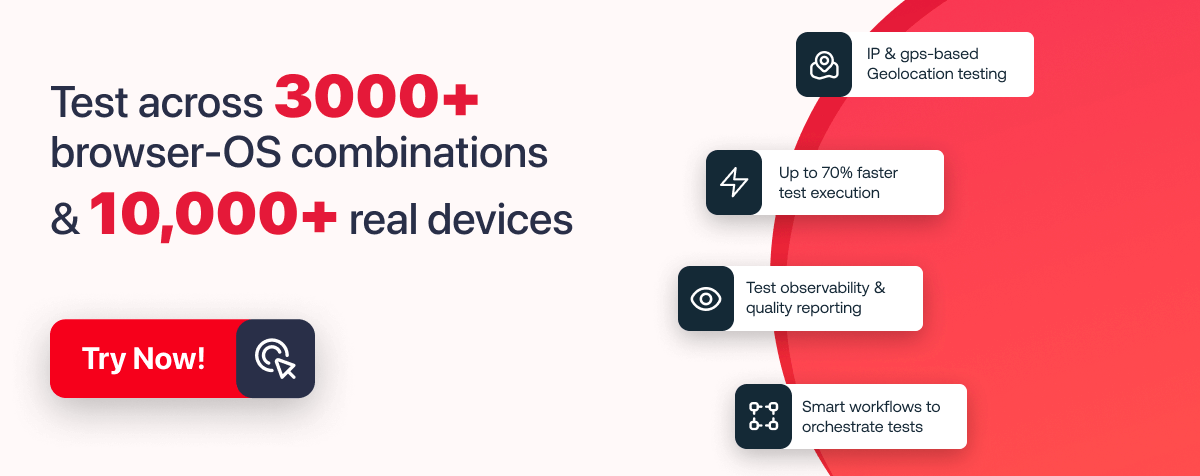

Cloud-based testing platforms play a crucial role in enhancing quality control by enabling scalable, cross-environment test execution. Platforms like LambdaTest, a quality engineering platform, empower teams to test smarter, ship faster, and maintain high standards. Built for scale, it provides a full-stack testing cloud with 10K+ real devices and 3,000+ browsers.

This approach allows quality control processes to remain robust without being constrained by local infrastructure, accelerating test execution and improving reliability.

Cloud-based platforms also streamline CI/CD integration, support parallel testing, and reduce environment-related maintenance overhead, giving QA teams greater control over test coverage, execution efficiency, and overall product quality.

44. What Is Continuous Integration and Why Is It Needed for QC?

Continuous Integration (CI) automatically integrates code changes and runs quality checks throughout development.

Why it's essential for QC:

- Early Feedback: Immediate notification of integration issues.

- Consistent Testing: Automated test suites run with each code change.

- Risk Reduction: Smaller, frequent integrations reduce complexity.

- Quality Gates: Prevent deployment of non-compliant code.

- Regression Prevention: Automated regression testing per build.

- Team Coordination: Shared visibility of build and test status.
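A quality gate is, at its core, a set of threshold checks whose result decides whether the pipeline continues. This minimal sketch uses hypothetical field names and threshold values. Real values come from the team's quality policy, and the inputs would come from the test runner, coverage tool, and security scanner.

```python
from dataclasses import dataclass


@dataclass
class BuildResults:
    tests_passed: int
    tests_failed: int
    line_coverage_pct: float
    critical_vulns: int


# Hypothetical thresholds; real values come from the team's quality policy.
MIN_PASS_RATE_PCT = 100.0
MIN_COVERAGE_PCT = 80.0
MAX_CRITICAL_VULNS = 0


def gate_violations(r: BuildResults) -> list[str]:
    """Return human-readable reasons the build fails the gate (empty list = pass)."""
    total = r.tests_passed + r.tests_failed
    pass_rate = 100.0 * r.tests_passed / total if total else 0.0
    violations = []
    if pass_rate < MIN_PASS_RATE_PCT:
        violations.append(f"pass rate {pass_rate:.1f}% is below {MIN_PASS_RATE_PCT}%")
    if r.line_coverage_pct < MIN_COVERAGE_PCT:
        violations.append(f"coverage {r.line_coverage_pct:.1f}% is below {MIN_COVERAGE_PCT}%")
    if r.critical_vulns > MAX_CRITICAL_VULNS:
        violations.append(f"{r.critical_vulns} critical vulnerabilities found")
    return violations


def gate_exit_code(r: BuildResults) -> int:
    """Print each violation and return a process exit code for the CI step."""
    problems = gate_violations(r)
    for p in problems:
        print("QUALITY GATE FAILED:", p)
    return 1 if problems else 0


print(gate_exit_code(BuildResults(480, 2, 83.5, 0)))
# QUALITY GATE FAILED: pass rate 99.6% is below 100.0%
# 1
```

In a pipeline step, the script would end with `sys.exit(gate_exit_code(results))`. CI systems generally treat a non-zero exit status as a failed step, and that failure is what blocks the merge or deployment.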

45. What Is the CAPA (Corrective and Preventive Action) Process?

CAPA is a systematic approach to identify root causes of quality issues and implement actions to correct existing problems and prevent their recurrence.

The process typically follows these steps:

- Problem Identification: Document the non-conformance.

- Investigation: Conduct root cause analysis using 5-Whys or Fishbone diagrams.

- Corrective Action: Fix the current issue immediately.

- Preventive Action: Implement measures to prevent recurrence.

- Implementation: Execute planned actions with defined timelines.

- Verification: Validate that actions effectively resolve the problem.

- Documentation: Record all findings and actions for audits.

- Monitoring: Continuously track to ensure sustained effectiveness.

Experienced-Level Quality Control Interview Questions

Here are some advanced, experience-level quality control interview questions crafted for senior professionals who lead testing initiatives and influence product quality at scale.

These questions explore deeper areas such as optimizing end-to-end QC processes, managing quality risks, implementing mature automation frameworks, driving defect-prevention strategies, and aligning quality practices with organizational goals.

46. How Do You Align QC Processes With Business Goals?

Aligning QC processes with business goals means ensuring that quality activities directly contribute to customer satisfaction, product reliability, and overall organizational success. You do this by connecting QC metrics to business priorities and making quality an integral part of everyday decision-making.

- Understand Business Objectives and Customer Expectations: Start by reviewing your organization’s strategic goals and what customers value most. This forms the foundation for aligning QC with business outcomes.

- Collaborate With Stakeholders to Define KPIs: Work closely with business teams to identify KPIs that reflect both quality and success, like customer satisfaction, defect rates, and time-to-market. This is a common focus in quality control interview questions because it shows how you tie QC to measurable results.

- Integrate QC Checkpoints into Key Workflows: Embed quality checks within critical processes such as product development, manufacturing, or service delivery. This ensures visibility, accountability, and alignment with business priorities.

- Use Data-Driven Insights to Track QC Effectiveness: Monitor KPIs, analyze trends, and use data to adjust QC strategies. Regularly share insights with leadership to highlight how quality impacts business performance.

- Create a Continuous Feedback Loop: Use QC data to refine processes as customer needs or market conditions change. This ensures QC stays relevant and contributes to long-term success.

- Build a Culture of Quality Across Teams: Encourage cross-functional ownership of quality. When every team contributes to QC, it becomes a strategic enabler for business growth and customer trust.

47. Describe Your Approach to Process Improvement in QC

Improving QC processes starts with understanding where inefficiencies occur and using data to target meaningful changes. The goal is to enhance accuracy, reduce defects, and strengthen overall workflow reliability. A structured, iterative approach ensures improvements are measurable, sustainable, and aligned with quality goals.

- Data-Driven Analysis: Collect quality metrics and defect trends to identify bottlenecks and areas needing improvement.

- Root Cause Analysis: Use tools like Fishbone diagrams or the 5 Whys to understand underlying issues rather than just symptoms.

- Benchmarking: Compare current processes against industry best practices such as Six Sigma, Lean, or Total Quality Management (TQM).

- Iterative Improvement: Apply PDCA (Plan-Do-Check-Act) or Agile approaches to continuously test and implement improvements.

- Cross-Functional Collaboration: Engage diverse teams for brainstorming and problem-solving.

- Measurable Goals: Set KPIs to track progress and ensure effectiveness.

- CAPA Implementation: Apply corrective and preventive actions to reduce the recurrence of defects.

- Documentation & Communication: Share improvements to sustain long-term gains.

- Culture: Encourage continuous improvement across all levels.

48. How Do You Ensure Audit-Readiness for Distributed Teams?

Ensuring audit-readiness for distributed teams requires standardization, transparency, and continuous oversight. The focus is on creating consistent processes, maintaining reliable documentation, and enabling teams across locations to meet the same compliance expectations without gaps.

- Centralized Governance: Define standardized QC processes, templates, and compliance workflows. Use automated monitoring tools to track adherence across all teams in real time.

- Unified Digital Audit Trails: Ensure every activity (tests, reviews, deployments) is logged with timestamps, approvals, and traceability links through integrated toolchains.

- Regular Audit Simulations: Conduct quarterly mock audits across regions to validate readiness, identify gaps, and build audit confidence within distributed teams.

- Central Knowledge Repository: Maintain a shared library of policies, checklists, and compliance artifacts accessible to all team members regardless of location.

- Consistent Communication Rituals: Use sync meetings, dashboards, and status reviews to keep every team aligned on compliance expectations and audit milestones.

49. What Are Your Strategies for Testing Emerging Technologies (AI/ML)?

Testing AI/ML systems requires different approaches from traditional software testing. I focus on data quality validation, model performance testing, bias detection, and explainability testing. As AI/ML adoption grows across the industry, testing these systems effectively has become a major QC challenge, which is why this question appears frequently in quality control interviews.

- Data Validation: Automated pipelines check for drift, completeness, and consistency.

- Model Testing: A/B and shadow testing frameworks with continuous monitoring of accuracy metrics.

- Bias Detection: Diverse datasets and monitoring for discriminatory outcomes.

- Explainability Testing: Validate that model decisions are interpretable by stakeholders.

The key is treating AI/ML models as evolving systems rather than static code, requiring continuous validation approaches.
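A drift statistic is one way to make the data-validation step concrete. The text does not name a specific statistic. This sketch uses the Population Stability Index (PSI), a common choice. A widely used rule of thumb treats a PSI above about 0.25 as significant drift, though thresholds vary by team. The equal-width binning on the baseline range and the sample data are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the `expected` baseline.
    Bins are equal-width over the baseline range; both samples must be non-empty."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp outliers into edge bins
            counts[idx] += 1
        eps = 1e-6  # avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]      # feature values at training time (hypothetical)
same = list(baseline)
shifted = [0.5 + i / 200 for i in range(100)]  # production values drifted upward (hypothetical)

print(round(psi(baseline, same), 3))  # 0.0
print(psi(baseline, shifted) > 0.25)  # True: significant drift by the usual rule of thumb
```

Running a check like this on each feature in an automated pipeline, and alerting when it crosses the agreed threshold, is one way to treat models as evolving systems that need continuous validation.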

50. How Do You Handle Conflicting Priorities in QC Management?

Handling conflicting priorities in QC management requires clear risk evaluation, transparent communication, and alignment with business goals. The aim is to make objective decisions that balance quality, timelines, and resource constraints without compromising critical areas.

- Risk-Based Prioritization: Use a prioritization matrix that weighs business impact, defect likelihood, and technical complexity to determine what must be tested first.

- Data-Driven Decision Making: Present stakeholders with evidence-based scenarios (current testing coverage, the risks of reduced coverage, and hybrid options) to guide objective discussions.

- Collaborative Alignment: Facilitate conversations with product, engineering, and business teams to ensure decisions reflect shared priorities rather than individual preferences.

- Focus on High-Impact Areas: Direct resources toward modules with higher user impact, historical defects, or regulatory implications to avoid bottlenecks and missed risks.

- Transparent Tracking: Use dashboards and status reports to keep all stakeholders aligned on progress, trade-offs, and risk implications.

51. What Are the Challenges in Automating QC, and How Do You Overcome Them?

Automating QC comes with challenges like maintenance complexity, unstable tests, skill gaps, and ensuring meaningful ROI. The key is to build a stable framework, prioritize the right test cases, and continuously refine automation to deliver long-term value.

- High Maintenance Overhead: Reduce effort by using design patterns like Page Object Model, modular components, and centralized utilities to keep scripts scalable and easy to update.

- Flaky or Unstable Tests: Improve reliability with smart waits, retry logic, isolated test environments, and root-cause analysis to eliminate environmental or synchronization issues.

- Poor Test Case Selection: Prioritize automation based on business impact, repeatability, risk, and execution frequency instead of automating everything.

- Integration Challenges: Ensure smooth CI/CD integration using robust pipelines, environment provisioning, and automated triggers to enable continuous testing.

- Skill Gaps in the Team: Provide training on frameworks, coding best practices, and tool usage so the team can build and maintain automation confidently.

- ROI Concerns: Track metrics like execution time saved, defect detection rate, and reduced manual effort to justify investment and improve decision-making.
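"Smart waits" usually means polling for a condition instead of sleeping for a fixed time, which is a common source of flaky tests. This minimal, generic sketch shows the idea. The function name and the simulated background job are hypothetical. For browser tests, Selenium's `WebDriverWait` applies the same polling approach to page conditions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               interval: float = 0.25) -> T:
    """Poll `condition` until it returns a truthy value, or raise TimeoutError.

    Unlike a fixed sleep, this waits only as long as needed and fails with a
    clear message instead of a confusing downstream assertion."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Usage: a hypothetical background job that becomes ready on the third check.
checks = {"count": 0}

def job_is_ready() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3

print(wait_until(job_is_ready, timeout=2.0, interval=0.01))  # True
```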

52. How Do You Leverage Dashboards/Reporting Tools for QC Insights?

Leveraging dashboards and reporting tools effectively means turning raw QC data into clear, actionable insights. The goal is to help leaders make strategic decisions while giving teams the visibility they need to improve daily execution.

- Role-Specific Dashboards: Build customized views for each audience, such as executive dashboards showing quality trends, customer impact, and cost of quality, and team-level dashboards showing test progress, defect patterns, and automation stability.

- Real-Time Visualization: Use platforms like Tableau, Grafana, or Power BI to track metrics such as pass/fail rates, defect distribution, and environment health with live updates.

- Predictive Analytics: Apply trend analysis and forecasting models to anticipate defect spikes, identify high-risk modules, or predict release readiness.

- Automation Insights: Display automation coverage, flakiness rates, execution duration, and ROI indicators to guide framework improvements.

- Early Warning Alerts: Configure automated alerts for KPI deviations such as rising defect leakage, missed test SLAs, or sudden drops in coverage.

- Cross-Functional Visibility: Share dashboards with product, engineering, and leadership teams to ensure alignment and data-driven decision-making.
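Early warning alerts come down to comparing each KPI against a threshold in the right direction. In this minimal sketch, the KPI names, thresholds, and snapshot values are hypothetical. The same rules could equally be configured in a dashboard platform's own alerting features.

```python
from dataclasses import dataclass


@dataclass
class KpiRule:
    name: str
    threshold: float
    higher_is_worse: bool  # True for leakage-style KPIs, False for coverage-style KPIs


# Hypothetical KPI names and thresholds.
RULES = [
    KpiRule("defect_leakage_pct", 5.0, higher_is_worse=True),
    KpiRule("test_sla_miss_pct", 10.0, higher_is_worse=True),
    KpiRule("requirement_coverage_pct", 90.0, higher_is_worse=False),
]


def kpi_alerts(snapshot: dict[str, float], rules: list[KpiRule] = RULES) -> list[str]:
    """Return one alert message per KPI that breaches its threshold or has no data."""
    alerts = []
    for rule in rules:
        value = snapshot.get(rule.name)
        if value is None:
            alerts.append(f"{rule.name}: no data")  # a silent metric is itself a warning sign
            continue
        breached = value > rule.threshold if rule.higher_is_worse else value < rule.threshold
        if breached:
            alerts.append(f"{rule.name}={value} breaches threshold {rule.threshold}")
    return alerts


today = {"defect_leakage_pct": 7.5, "test_sla_miss_pct": 4.0, "requirement_coverage_pct": 86.0}
for alert in kpi_alerts(today):
    print("ALERT:", alert)
# ALERT: defect_leakage_pct=7.5 breaches threshold 5.0
# ALERT: requirement_coverage_pct=86.0 breaches threshold 90.0
```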

53. What Is Your Experience With Regulatory Compliance (ISO, CMMI) in QC?

In my experience, I’ve led ISO 27001 and CMMI Level 3 implementations at my organization, and I focused on integrating compliance requirements into our existing QC workflows rather than creating parallel processes.

For ISO 27001, I embedded security testing directly into our standard QC procedures and implemented automated compliance monitoring. For CMMI, I concentrated on process documentation, measurement criteria, and continuous improvement mechanisms. The challenge was maintaining compliance without adding bureaucratic overhead, which I addressed through automation and by making compliance activities part of daily operations.

Regular internal audits and process refinements ensure that we not only maintain certification but also continuously improve efficiency, reinforcing a culture of quality throughout our team.

54. How Do You Optimize QC Processes for High Scalability?

Optimizing QC processes for high scalability requires balancing technical efficiency with organizational maturity. The goal is to ensure quality practices scale smoothly as products, teams, and release cycles grow in complexity.

- Cloud-Based Scalable Infrastructure: Use cloud testing platforms that auto-scale based on workload, ensuring fast execution during peak demand without resource constraints.

- Containerized Test Environments: Standardize environments using Docker/Kubernetes to ensure consistency, faster provisioning, and high parallelism across test suites.

- Parallel Execution Frameworks: Implement parallel and distributed execution to reduce cycle time, enabling large-scale test runs across multiple configurations.

- Federated QC Model: Allow teams local autonomy while enforcing central quality standards, ensuring consistency without slowing delivery.

- Self-Service Testing Platforms: Provide teams with on-demand access to test data, environments, automation pipelines, and quality gates to reduce manual dependencies.

- Risk-Based Testing: Prioritize critical modules, high-impact features, and historically defect-prone areas to prevent unnecessary test expansion as systems grow.

- Automated Quality Gates: Integrate automated checks in CI/CD to enforce quality baselines without adding human bottlenecks.

- Continuous Optimization: Review test execution data, defect trends, and pipeline metrics to refine processes as scale increases.

55. What Advanced Statistical Techniques Do You Use in QC?

Advanced statistical techniques play a major role in strengthening QC decision-making, especially when addressing analytical topics often seen in quality control interview questions. These methods help you predict risks, validate assumptions, and monitor the health of both processes and products.

- Control Charts for Process Stability: Use SPC charts (X-bar, R-chart, C-chart) to track variation, identify trends, and ensure the testing process remains stable over time.

- Regression Analysis for Defect Prediction: Apply linear or logistic regression to forecast defect likelihood based on code complexity, module size, and historical defect behavior.

- Hypothesis Testing: Use techniques like t-tests and chi-square tests to validate A/B testing outcomes, compare defect rates, or confirm process improvements.

- Machine Learning Models: Build predictive models that analyze commit history, defect density, and developer activity to identify high-risk components before testing begins.

- Bayesian Analysis: Estimate confidence levels in test coverage, assess release readiness, and update risk probabilities as new data becomes available.

- Statistical Process Control (SPC): Monitor testing effectiveness, detect deviations early, and maintain consistent quality as the system scales.
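A c-chart, one of the control charts listed above, tracks defect counts per inspection unit. Its centre line is the mean count c̄ and its control limits are c̄ ± 3√c̄, with the lower limit floored at zero. This minimal sketch assumes each nightly build is a comparable inspection unit and that the limits come from a stable baseline period. The counts are hypothetical.

```python
import math


def c_chart_limits(counts: list[int]) -> tuple[float, float, float]:
    """(LCL, centre line, UCL) for a c-chart built from baseline defect counts."""
    c_bar = sum(counts) / len(counts)
    sigma = math.sqrt(c_bar)  # Poisson assumption: variance equals the mean
    return max(0.0, c_bar - 3 * sigma), c_bar, c_bar + 3 * sigma


def out_of_control(counts: list[int], limits: tuple[float, float, float]) -> list[int]:
    """Indexes of points falling outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, c in enumerate(counts) if c < lcl or c > ucl]


baseline = [4, 6, 5, 3, 7, 5, 4, 6, 5, 5]  # defects per nightly build (hypothetical)
limits = c_chart_limits(baseline)          # LCL 0.0, CL 5.0, UCL about 11.71
new_builds = [5, 6, 14, 4]
print(out_of_control(new_builds, limits))  # [2]: the build with 14 defects needs investigation
```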

56. Describe a Time You Influenced a Team to Adhere to QC Best Practices

Demonstrating influence in QC often comes down to guiding teams toward better practices without slowing them down. The goal is to show measurable value so teams naturally adopt quality behaviors instead of seeing them as obstacles.

Situation: A development team resisted adopting code review practices because they believed it would slow delivery.

- Action 1: Instead of enforcing rules, I presented data showing how defects caught during reviews were far cheaper to fix than those found later in production.

- Action 2: I introduced a quality champions program, empowering respected developers to advocate for best practices.

- Action 3: I streamlined the entire workflow by integrating review tools, templates, and checklists directly into their existing pipeline to remove friction.

Outcome: Within three months, defect leakage dropped by 60%, review participation increased organically, and the team began requesting additional quality tools. The shift happened because I led with value, not mandates.

57. How Do You Maintain Continuous Improvement Cycles in Large Projects?

Maintaining continuous improvement in large projects requires disciplined feedback loops, strong governance, and scalable processes. This is a common interview question because it reveals how you keep quality evolving as teams, systems, and workloads grow.

- Structured Feedback Loops: Establish recurring retrospectives at the sprint, release, and quarterly levels to capture insights from testing, development, and operations.

- Data-Driven Improvements: Use trends from defect density, escape rates, automation stability, and cycle time metrics to identify systemic issues and improvement opportunities.

- Standardized Yet Flexible Processes: Maintain core QC standards across all teams but allow localized adjustments so each group can refine workflows without breaking consistency.

- Continuous Automation Enhancement: Regularly review automation coverage, flakiness analysis, and execution time to improve frameworks and reduce manual effort.

- Cross-Team Knowledge Sharing: Run guilds, playbook updates, and best-practice sessions so improvements from one team benefit the entire project.

- Regular Process Audits: Conduct periodic internal audits to ensure teams follow QC practices while identifying gaps early.

- Incremental Pilot Testing: Test new tools or processes with a small team first, gather feedback, refine, then scale across the organization.

- Leadership Alignment: Present improvement outcomes to stakeholders to maintain sponsorship, resource allocation, and long-term commitment.

58. What Are the Most Critical Risks in Software QC Today?

You face several major risks in modern software QC, especially as systems grow more complex and delivery cycles accelerate.

One of the biggest challenges is ensuring AI/ML model reliability, along with managing security vulnerabilities across distributed and interconnected architectures.

Cloud-native systems introduce new testing difficulties around service interactions, failure modes, and observability. At the same time, evolving data privacy regulations add an extra layer of compliance complexity to your testing activities.

Another significant risk is the skills gap. Traditional QC methods don’t fully address modern environments like microservices, containerized stacks, or AI-driven systems. To keep pace, you need to continuously upskill your QC teams so they can handle these emerging challenges effectively.

59. How Do You Balance Rigorous QC With Fast Delivery Deadlines?

Balancing rigorous QC with tight deadlines comes down to testing smarter rather than testing less. Start with risk-based prioritization so that critical, high-impact, and historically defect-prone areas are always covered, even when time is short.

Shift quality left with unit tests, code reviews, and automated regression suites running in CI, and enforce automated quality gates so that fast releases never bypass non-negotiable checks. Staged rollouts with production monitoring add a safety net for lower-risk areas that receive lighter pre-release testing.

When scope must be cut, make the trade-off explicit. Present stakeholders with the current coverage, the risks of reduced coverage, and hybrid options, then document the agreed decision. This keeps speed and quality aligned rather than in conflict.

60. How Do You Ensure Documentation Version Control in Multi-team Projects?

Documentation version control is often highlighted as a critical practice in many quality control interview questions, especially when multiple teams contribute to shared processes. To manage this effectively, you implement centralized documentation repositories with automated version control, using tools like Confluence integrated with Git workflows. All changes go through peer review, similar to code, to ensure accuracy and consistency.

You define documentation ownership so each team manages specific sections, while all updates remain transparent to other teams. Automated notifications alert stakeholders to relevant changes across the project.

Regular documentation audits keep content current, and you also perform documentation testing to validate that procedures work exactly as described. This approach ensures strong traceability, accountability, and reliability across distributed teams.

61. Describe a Scenario Where QC Activities Discovered High-Impact Issues Late in the Cycle. How Did You Handle It?

During a major release at my organization, final load testing revealed a performance degradation that could have impacted 70% of our users. With only 48 hours before the planned release, I immediately activated our crisis response protocol.

I assembled a cross-functional team to identify the root cause while simultaneously preparing rollback procedures. We traced the issue to a third-party library upgrade and evaluated two options: revert the upgrade or implement a targeted workaround. We chose the workaround to avoid delaying the release and, as safeguards, enhanced production monitoring and kept rapid rollback capabilities ready.

The release proceeded successfully, and we used this experience to strengthen performance testing in earlier stages of the cycle and to improve our risk mitigation processes. This approach demonstrated proactive decision-making, cross-team coordination, and adherence to quality control best practices.
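One way to surface this kind of regression well before the final load test is an automated performance gate that runs with every build. The Python sketch below is an illustration, not the process used in this scenario: it assumes latency samples in milliseconds from a baseline run and a candidate run, computes the 95th percentile with the nearest-rank method, and fails the check if the candidate exceeds the baseline by more than an assumed 10% tolerance. The function names and thresholds are hypothetical.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100] over a non-empty list of samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    # Nearest rank: the smallest value with at least p% of samples at or below it.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_regression(
    baseline_ms: list[float],
    candidate_ms: list[float],
    p: float = 95.0,
    tolerance: float = 0.10,
) -> tuple[bool, float, float]:
    """Fail if the candidate's pXX latency exceeds the baseline's by more than `tolerance`."""
    base = percentile(baseline_ms, p)
    cand = percentile(candidate_ms, p)
    return cand <= base * (1 + tolerance), base, cand


if __name__ == "__main__":
    # Hypothetical latency samples (milliseconds) from two load-test runs.
    baseline = [120, 118, 125, 130, 122, 119, 121, 128, 124, 135]
    candidate = [150, 162, 158, 171, 149, 166, 155, 160, 170, 181]
    passed, base_p95, cand_p95 = check_regression(baseline, candidate)
    print(f"{'PASS' if passed else 'FAIL'}: p95 baseline={base_p95} ms, candidate={cand_p95} ms")
```

With only ten samples, the nearest-rank p95 is simply the slowest request, so a real gate should use much larger samples. In a CI pipeline, the script could exit with a non-zero status on FAIL so the build stops long before release day.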

What Are the Latest Trends in Software Quality Control?

Here are the key trends in modern software quality control:

- AI/ML in Testing: Smart test case generation using machine learning algorithms, predictive defect analysis from historical data, and self-healing test automation that adapts to UI changes automatically.

- Shift-Left & Shift-Right Testing: Early integration of QC in development phases through unit tests and code reviews, combined with continuous monitoring and testing in production for real-time feedback.

- DevSecOps Integration: Security testing embedded into CI/CD pipelines, automated vulnerability scanning, and "security as code" practices with version-controlled policies.

- Low-Code / No-Code Testing: Visual test creation tools and drag-and-drop automation platforms allow non-technical users to create and validate tests efficiently.

- Cloud-Native & Scalable Testing: Containerized test environments ensure consistency, test infrastructure scales dynamically with demand, and on-demand serverless execution supports the validation of microservice-based systems.

- Real-Time Dashboards & Quality Analytics: Live monitoring of test execution, coverage metrics, predictive quality insights, and executive-level dashboards linking quality to business impact.

- Accessibility & Inclusivity Testing: Automated WCAG compliance validation, screen reader and keyboard navigation testing, and multi-language/cultural adaptation verification.

- Ethical AI & Bias Testing: Fairness testing across demographic groups, explainability of algorithm decisions, and compliance validation for AI governance frameworks.

- Sustainable Testing Practices: Green testing initiatives, optimized execution for energy efficiency, and monitoring of resource usage in test environments.
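As an example of the automated WCAG compliance validation listed under Accessibility & Inclusivity Testing, the Python sketch below implements the color-contrast check from WCAG 2.x (success criterion 1.4.3, Contrast (Minimum)). It computes the relative luminance of two `#RRGGBB` colors, derives the contrast ratio, and compares it with the level AA thresholds of 4.5:1 for normal text and 3:1 for large text. It covers only this one criterion; dedicated accessibility tools check far more, and the helper names here are illustrative.

```python
def _channel(value: int) -> float:
    """Linearize one 8-bit sRGB channel as defined for WCAG relative luminance."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical colors) up to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG 2.x level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    for fg, bg in [("#767676", "#FFFFFF"), ("#777777", "#FFFFFF")]:
        ratio = contrast_ratio(fg, bg)
        print(f"{fg} on {bg}: {ratio:.2f}:1, AA normal text: {passes_aa(fg, bg)}")
```

With these helpers, the mid-gray `#767676` on white clears AA for normal text (about 4.54:1), while the slightly lighter `#777777` falls just short (about 4.48:1) and passes only the large-text threshold.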

These trends show that quality control today goes beyond defect detection: it emphasizes automation, scalability, ethical compliance, and measurable business impact, which is why they come up so often in quality control interviews.

Conclusion

To wrap up, mastering quality control interview questions is essential for anyone looking to excel in quality roles across industries. Whether you're just starting out, advancing your skills, or stepping into leadership, a deep understanding of QC concepts and practical problem-solving will set you apart.

Remember that continuous learning and practice are key to staying relevant in this evolving field. Use this guide as a foundation to prepare confidently and showcase your expertise in any quality control interview. Success in quality control not only helps organizations deliver reliable products but also drives business excellence.

