QA Metrics Tutorial: Guide with Examples and Best Practices

Explore how to leverage QA Metrics to improve your software testing process and ensure high-quality software development.

Last Modified on: September 26, 2025

OVERVIEW

Quality Assurance (QA) metrics are indicators that allow quantitative analysis of the quality and efficiency of the software development and testing life cycle. QA metrics are used to estimate the progress of software development and to evaluate the outcome of test results. With them, you can track and monitor the status of QA activities, evaluate and measure team efficiency, and optimize the entire Software Development Life Cycle.

Without QA metrics, you cannot reliably measure and analyze the quality of a software application. It is therefore crucial to define the critical QA metrics during the development process and use them to estimate how well the developed software application works.

As the software industry becomes more competitive, teams increasingly turn to test automation to release quality software applications faster.

However, many software applications still do not perform as end users expect, which damages the organization's reputation and leads users to uninstall the application. A common reason is that QA metrics were not used during development to track progress and measure quality.

As software applications grow more complex, the QA process in the Software Development Life Cycle has become longer. Exhaustive testing and bug fixing are needed before the software application is released to the market, so the QA process must be planned and monitored carefully. QA metrics are one of the most effective ways to track the efficiency of QA activities.

What are QA Metrics in Software Testing?

QA metrics are the quantitative values used to measure, evaluate and assess the software application's quality, function, and performance. To understand QA metrics, it is crucial to explore the QA process.

In the software application development process, it is important to perform testing to find defects and fix them as early as possible. The QA process systematically determines whether the software application meets the Software Requirements Specification (SRS) and ensures the highest quality.

Within this QA process, QA metrics are the indicators that should be tracked and improved to ensure the quality of the software application. You can use QA metrics in every phase of the Software Development Life Cycle, including requirements gathering, design, coding, testing, and maintenance. They make it easy to identify areas of improvement, track progress, and gain data-driven insight into the effectiveness of the QA process.

Why are QA Metrics important?

QA metrics indicate the quality and performance of the software application. QA analysts and developers use them to improve their productivity in the software development process. Here are some reasons why QA metrics are important:

- Helps decide which improvements are needed to develop high-quality, bug-free software applications, because testing metrics give data-driven insights into the performance of the test process.

- Helps plan the next phase of activities in developing the software application, such as estimating cost.

- Helps ensure that the quality of the software application under test meets the required standards. For example, QA metrics like defect density and code coverage give information on the critical functionalities of the software application under test.

- Supports informed decisions about software application development at all organizational levels. By evaluating metrics like test cycle time and automation coverage, the testing and development teams can shape the testing process and prioritize testing efforts to increase its effectiveness and efficiency.

- By tracking and sharing metrics such as test case execution or resource utilization rates, testing and development teams can align their efforts and work toward a common goal.

Features of Best QA Metrics

The large number of available quality assurance metrics can make it challenging for QA analysts to choose the most appropriate ones without missing crucial aspects of the software application.

Certain key features determine the value of a QA metric. Knowing them helps you choose the correct QA metrics for your software application project. Here are some of those features:

- Zero subjectivity: This is a crucial feature of testing metrics. It means that QA metrics are not only objectively measurable but also actionable.

- Updated metrics: Updated metrics are better than outdated ones. When you test a software application, outdated metrics do not give an accurate estimate of how it functions. Therefore, good QA metrics are regularly updated.

- Relevancy: QA metrics should be relevant to the organization’s goals and aligned with the Software Requirements Specification of the software application.

- Measurability: A QA metric should be easy to measure so that you can track the QA process and identify areas for improvement.

- Extensive: QA metrics should be comprehensive, covering all aspects of the software application that are crucial to measuring its quality.

How do QA Metrics improve the Test Process?

QA metrics in software testing allow you to track the status of QA activities, assess team effectiveness, and measure software quality. Test analysts also use QA metrics to determine the team's productivity and plan future improvements to the QA process. Here is how:

- Quality assurance metrics help estimate how long regression testing will take by tracking the number of tests added for each new update of the software application. This helps estimate testing time and find the software application components that need improvement, making the QA process more efficient while ensuring the quality of the software application.

- Quality assurance metrics give information on the behavior and function of the software application, so QA analysts can identify and fix defects quickly.

- Software testing methodologies and strategies can be better planned with QA metrics. As a result, the testing team is better equipped for the next development cycle.

Keep in mind that QA metrics are measurements and indicators of the quality of the software application. To accurately analyze the QA process and team performance, you should also consider the software project’s specifications, the estimated release date, the organization's workflow, and so on. This analysis is easier when testers strictly follow the stages of the metrics life cycle.

Stages of Metrics Life Cycle

The QA metrics life cycle is the process of analyzing, measuring, and reporting software testing metrics over time: gathering data on the tests, analyzing it, and reporting it to estimate the success of the software application. It begins by selecting the right QA metrics to show progress in software application development and what must be fixed.

Based on this, you collect data from test logs, performance tests, and bug-tracking systems. All the collected data are reviewed and reported to check the functioning of the software application. Based on the information, you can make changes to the software application.

Thus, tracking the QA metrics throughout the Software Development Life Cycle helps ensure that software applications are developed as per the requirement and end-user expectations. Let us see the phases of the QA metrics life cycle.

- Analysis: This involves recognizing the appropriate QA metrics for testing and defining the adopted QA standards. The testing team must identify the most relevant metrics for measuring the software's effectiveness and quality. This is followed by defining QA standards which include guidelines on how to measure and report on the identified metrics, as well as guidelines on the overall testing process.

- Communicate: The testing team and other stakeholders are informed about the QA metrics. The testing team is also trained on which data points to collect and how to measure them in order to process the testing metrics.

- Evaluation: This involves collecting and verifying the data to ensure it is complete, accurate, and error-free. Using such data, an evaluation of the value of QA metrics is done. This includes evaluating the data to find whether the QA metrics align with the testing process's objectives and give meaningful insights into the software's quality and performance.

- Report: In this phase, the testing team draws sound, well-supported conclusions from the data. Based on these conclusions, a report is created and distributed to stakeholders and others for feedback.

Types of QA Metrics

Considering the availability of many QA metrics in software testing, they are divided into three groups for better understanding. Those three groups are as follows:

Process Metrics

Process metrics describe the characteristics and performance of the software development and testing process. They are used to improve the efficiency of the Software Development Life Cycle. Examples of process metrics include test cycle time, defect density, and test case execution rate.

Product Metrics

Product metrics describe a software application's design, quality, size, performance, and complexity. Examples of product metrics include code complexity, code coverage, and defect severity.

Project Metrics

Project metrics measure the efficiency of the software testing and development teams and of the test tools they use to test the software application. In other words, project metrics measure the overall quality of the project. With them, you can estimate the team's productivity, testing costs, and possible flaws. Examples of project metrics include cost variance, defect density, code coverage, and requirements coverage.

Types of Manual QA Metrics

Manual QA metrics are used to assess the quality and effectiveness of manual software testing efforts. You can use them to evaluate different aspects of the software testing process, such as test case design, test execution, and defect management.

The two main types of manual QA metrics are as follows:

- Base or Absolute Metrics

- Calculated or Derivative Metrics

Base or Absolute Metrics

During the test case development and execution, the raw information/data (number of test cases executed, number of test cases) collected by the QA analyst helps to derive base metrics. Such information/data is tracked throughout the Software Testing Life Cycle in the form of the number of test cases that need to be executed, the number of test cases developed for software projects, the number of test cases passed/failed/blocked, etc.

The base metric is also called an absolute metric which is a quantitative measure based on the actual data obtained during the test case development and execution and tracked throughout the Software Testing Life Cycle. Base or absolute metrics give information that can be counted or measure specific aspects of the quality of software applications. In simple terms, they provide basic information about the testing process, such as the number of test cases written or the time to complete a testing cycle.

For example, the number of test cases executed and the number of defects found are absolute metrics, because they are direct counts. They directly measure the progress of the software testing process and how close it is to completion. Ratios such as defect density (the number of defects per unit of code size) or the percentage of defects found are instead calculated from these counts, which makes them derivative metrics.

There are different types of base QA metrics:

- Total Number of Test Cases

- Number of Passed Test Cases

- Number of Failed Test Cases

- Number of Blocked Test Cases

- Number of Identified Bugs

- Number of Accepted Bugs

- Number of Rejected Bugs

- Number of Deferred Bugs

- Number of Critical Bugs

- Number of Planned Test Hours

- Number of Actual Test Hours

- Number of Bugs Detected after Release
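Because the base metrics above are plain counts, they can be tallied directly from raw records. The following is a minimal Python sketch, assuming hypothetical `CaseResult` and `Defect` records whose status values ("passed", "failed", "blocked"; "accepted", "rejected", "deferred") are illustrative and not taken from any particular test management tool.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical record shapes; real test management tools export different fields.
@dataclass
class CaseResult:
    test_id: str
    status: str  # assumed values: "passed", "failed", "blocked", "not_run"


@dataclass
class Defect:
    defect_id: str
    status: str    # assumed values: "accepted", "rejected", "deferred"
    severity: str  # assumed values such as "critical", "major", "minor"


def base_metrics(results: list[CaseResult], defects: list[Defect]) -> dict[str, int]:
    """Tally absolute (base) QA metrics from raw test and defect records."""
    tests = Counter(r.status for r in results)
    bugs = Counter(d.status for d in defects)
    return {
        "total_test_cases": len(results),
        "passed": tests["passed"],
        "failed": tests["failed"],
        "blocked": tests["blocked"],
        # Executed = passed + failed; blocked test cases were never run.
        "executed": tests["passed"] + tests["failed"],
        "defects_found": len(defects),
        "defects_accepted": bugs["accepted"],
        "defects_rejected": bugs["rejected"],
        "defects_deferred": bugs["deferred"],
        "critical_defects": sum(1 for d in defects if d.severity == "critical"),
    }


results = [
    CaseResult("T1", "passed"),
    CaseResult("T2", "failed"),
    CaseResult("T3", "blocked"),
    CaseResult("T4", "passed"),
]
defects = [
    Defect("D1", "accepted", "critical"),
    Defect("D2", "rejected", "minor"),
    Defect("D3", "deferred", "major"),
]
metrics = base_metrics(results, defects)
print(metrics["passed"], metrics["executed"], metrics["critical_defects"])  # 2 3 1
```

The identity "defects found = accepted + rejected + deferred" only holds if every defect record carries exactly one of those three statuses.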

Total Number of Test Cases

It is the measure of the total number of test cases developed to test the various functions of the software application.

Formula:

Total number of test cases = (Number of functional requirements) x (Number of test cases per requirement)

Number of Passed Test Cases

It is the number of executed test cases that passed during the software testing process.

Formula:

Number of test cases passed = (Number of test cases executed) - (Number of test cases failed)

Number of Failed Test Cases

It measures the number of test cases that failed during the software testing process.

Formula:

Number of test cases failed = (Number of test cases executed) - (Number of test cases passed)

Number of Blocked Test Cases

It measures the number of test cases that could not be executed because of an issue or blocker. For example, the test environment may not be set up correctly or may lack the hardware or software resources needed to run the test case. Test case design issues can also block test cases.

Formula:

Number of test cases blocked = (Number of test cases designed) - (Number of test cases executed), assuming every designed test case that was not executed is blocked.

Number of Identified Bugs

It measures the number of bugs found, including critical and non-critical bugs. The development team needs to address and fix these bugs.

Formula:

Number of defects found = (Number of defects accepted) + (Number of defects rejected) + (Number of defects deferred)

Number of Accepted Bugs

It measures the number of bugs identified during testing that are marked as valid issues affecting the functionality of the software application.

Formula:

Number of defects accepted = (Number of defects found) - (Number of defects rejected) - (Number of defects deferred)

Number of Rejected Bugs

It measures the number of bugs identified during testing that are not considered valid issues affecting the functioning of the software application.

Formula:

Number of defects rejected = (Number of defects found) - (Number of defects accepted) - (Number of defects deferred)

Number of Deferred Bugs

It measures the number of bugs found but not addressed during the testing process and deferred to a later phase.

Formula:

Number of defects deferred = (Number of defects found) - (Number of defects accepted) - (Number of defects rejected)

Number of Critical Bugs

It measures the number of critical bugs found during software testing, which could cause severe issues or stop the software application from working.

Formula:

Number of critical defects = (Number of defects found with severity level = Critical)

Number of Planned Test Hours

It measures the total number of hours allocated to software testing in the test plan.

Formula:

Number of planned test hours = (Total number of test cases) x (Average time per test case)

Number of Actual Test Hours

It measures the actual number of hours spent on the software testing process.

Formula:

Number of actual test hours = (Time testing ended) - (Time testing started)

Number of Bugs Detected after Release

It measures the number of bugs detected in the software application after its release to the market.

Formula:

Number of bugs found after release = Total number of bugs reported after release

Calculated or Derivative Metrics

Calculated metrics are derived from the data gathered for base metrics through mathematical calculations or other analytical methods. Examples of calculated metrics include test case execution rate, defect density, and test case effectiveness.

For example, a derivative metric could be the percentage of defects found per test case, derived from the total number of defects found and the total number of test cases executed. Such metrics give more detailed insight into the testing process, such as trends, correlations, or areas for improvement.

However, choosing the right combination of metrics is important to ensure that the derivative metric accurately reflects the intended aspect of the software application or process being measured. For this, learning about different types of calculated QA metrics is important.
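As a rough illustration of how derivative metrics build on base counts, the sketch below computes the percentage of defects found per executed test case (the example given above) and defect density. Measuring code size in KLOC (thousands of lines of code) is an assumption made here for illustration; teams may use function points or another size unit, and the function names are hypothetical.

```python
def percentage(part: float, whole: float) -> float:
    """Return part / whole as a percentage; 0.0 when the denominator is zero."""
    return 100.0 * part / whole if whole else 0.0


def defects_per_test_case_pct(defects_found: int, test_cases_executed: int) -> float:
    """Derived metric: defects found per executed test case, as a percentage."""
    return percentage(defects_found, test_cases_executed)


def defect_density(defects_found: int, size_kloc: float) -> float:
    """Derived metric: defects per thousand lines of code (KLOC is an assumed unit)."""
    if size_kloc <= 0:
        raise ValueError("code size must be positive")
    return defects_found / size_kloc


print(defects_per_test_case_pct(12, 48))  # 25.0
print(defect_density(30, 15.0))           # 2.0
```

Returning 0.0 for an empty denominator keeps dashboards from crashing early in a cycle, but it can also hide missing data, so some teams prefer to raise an error instead.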

The calculated QA metrics can be grouped into the following categories.

Test Planning

These metrics support test planning, the process of defining the test strategy, the test objectives, the resources used in software testing, and the release of the software application. They give insight into the efficiency of the testing process and help you make informed decisions about releasing the software application.

Following are the metrics that help to facilitate test planning:

- Passed Test Case Percentage

- Failed Test Case Percentage

- Blocked Test Case Percentage

- Fixed Defects Percentage

- Accepted Defects Percentage

- Defects Rejected Percentage

- Defects Deferred Percentage

- Critical Defects Percentage

- Average Time to Repair Defects
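The percentage metrics in this list are ratios of base counts, and the average time to repair is a mean over fixed defects. The Python sketch below follows the formulas given for these metrics in this section. It assumes that executed test cases are those that passed or failed, that blocked test cases are measured against all planned test cases, and that each fixed defect is recorded as a hypothetical (reported, resolved) timestamp pair.

```python
from datetime import datetime, timedelta


def pct(part: int, whole: int) -> float:
    """Percentage helper that returns 0.0 for an empty denominator."""
    return 100.0 * part / whole if whole else 0.0


def planning_percentages(passed: int, failed: int, blocked: int, planned: int) -> dict[str, float]:
    """Passed/failed percentages over executed tests; blocked over planned tests."""
    executed = passed + failed  # blocked test cases are not executed
    return {
        "passed_pct": pct(passed, executed),
        "failed_pct": pct(failed, executed),
        "blocked_pct": pct(blocked, planned),
    }


def average_time_to_repair(fixed: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean of (resolved - reported) over defects that have been fixed."""
    if not fixed:
        return timedelta(0)
    total = sum((resolved - reported for reported, resolved in fixed), timedelta(0))
    return total / len(fixed)


print(planning_percentages(passed=45, failed=5, blocked=10, planned=60))
fixed = [
    (datetime(2025, 1, 1), datetime(2025, 1, 3)),  # 2 days to repair
    (datetime(2025, 1, 2), datetime(2025, 1, 6)),  # 4 days to repair
]
print(average_time_to_repair(fixed))  # 3 days, 0:00:00
```

The defect percentages (fixed, accepted, rejected, deferred, critical) follow the same `pct(part, total_reported)` pattern.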

Passed Test Case Percentage

It measures the percentage of executed test cases that passed. This shows how much of the tested functionality works as expected and gives an indication of the quality of the software application.

Formula:

Passed test case percentage = (Total number of passed test cases / Total number of test cases executed) x 100%

Failed Test Case Percentage

It measures the percentage of executed test cases that failed. This shows which components of the software application need improvement and further testing.

Formula:

Failed test case percentage = (Total number of failed test cases / Total number of test cases executed) x 100%

Blocked Test Case Percentage

It measures the percentage of test cases that are blocked. Because blocked test cases cannot be executed, the percentage is calculated against the total number of planned test cases. It indicates the issues and dependencies that must be resolved before testing can continue.

Formula:

Blocked test case percentage = (Total number of blocked test cases / Total number of test cases) x 100%

Fixed Defects Percentage

It measures the percentage of reported defects that have been fixed. This mainly shows how effectively the development team addresses reported issues.

Formula:

Fixed defects percentage = (Total number of defects fixed / Total number of defects reported) x 100%

Accepted Defects Percentage

It measures the percentage of reported defects that are marked as valid and need to be fixed. This shows the accuracy and consistency of the defect-reporting process.

Formula:

Accepted defects percentage = (Total number of defects accepted as valid / Total number of defects reported) x 100%

Defects Rejected Percentage

It measures the percentage of reported defects that are marked as invalid and need no fix. It gives an idea of the quality of the defect reports and can help build collaboration between the testing and development teams.

Formula:

Defects rejected percentage = (Total number of defects rejected as invalid / Total number of defects reported) x 100%

Defects Deferred Percentage

It measures the percentage of reported defects deferred to a future release. When a defect is deferred, the development team postpones the fix to a future release, typically because of time constraints. The testing team should therefore decide which defects to fix first and assign the appropriate resources to address them.

Formula:

Defects deferred percentage = (Total number of defects deferred to a future release / Total number of defects reported) x 100%

Critical Defects Percentage

It measures the percentage of reported defects marked as critical. It indicates how severely the reported issues affect the software application's functionality, usability, and safety.

Formula:

Critical defects percentage = (Total number of critical defects / Total number of defects reported) x 100%

Average Time to Repair Defects

It measures the average time taken to fix reported defects, from when a defect is first reported to when it is resolved. A lower average time to repair indicates that the development team resolves reported issues quickly.

Formula:

Average time to repair defects = Total time taken to fix the defects / Total number of defects fixed

Test Efforts

Test effort metrics measure the time, resources, and effort required to complete testing. They answer questions such as “how long?”, “how many?”, and “how much?” With them, you can establish a baseline for test planning and plan and allocate your software testing resources effectively.

Note, however, that these metrics are averages: individual values can fall well above or below them, so treat them as estimates rather than exact figures.

- Tests Run Per Period

- Test Design Efficiency

- Test Review Efficiency

- Defects Per Test Hour

- Bugs Per Test

- Time To Test A Bug
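The effort metrics in this list are simple ratios of counts to time. The following is a minimal Python sketch, assuming the counts and hours have already been collected; all function and parameter names are hypothetical. It computes actual test hours as end time minus start time in wall-clock hours, which is an assumption, since many teams log working hours instead. Test design efficiency and test review efficiency follow the same `ratio(count, time)` pattern as tests run per period.

```python
from datetime import datetime, timedelta


def actual_test_hours(started: datetime, ended: datetime) -> float:
    """Elapsed wall-clock hours from start to end of testing (end minus start)."""
    return (ended - started) / timedelta(hours=1)


def ratio(numerator: float, denominator: float) -> float:
    """Plain ratio that returns 0.0 instead of dividing by zero."""
    return numerator / denominator if denominator else 0.0


def effort_metrics(tests_run: int, defects_found: int, test_hours: float,
                   period_days: float, retest_hours: list[float]) -> dict[str, float]:
    """Test effort ratios; retest_hours holds fix-to-retest time per retested defect."""
    return {
        "tests_run_per_day": ratio(tests_run, period_days),
        "defects_per_test_hour": ratio(defects_found, test_hours),
        "bugs_per_test": ratio(defects_found, tests_run),
        # Time to test a bug: mean fix-to-retest time over retested defects.
        "avg_hours_to_retest": ratio(sum(retest_hours), len(retest_hours)),
    }


print(actual_test_hours(datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 17, 30)))  # 8.5
m = effort_metrics(tests_run=200, defects_found=30, test_hours=40.0,
                   period_days=5, retest_hours=[1.0, 2.0, 3.0])
print(m["defects_per_test_hour"], m["avg_hours_to_retest"])  # 0.75 2.0
```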

Tests Run Per Period

It measures the number of tests run in a particular period of time, such as a day or a week.

Formula:

Tests run per period = Total number of tests run / Total time taken

Test Design Efficiency

It estimates the efficiency of the test design process by measuring the number of test cases designed per unit of time.

Formula:

Test design efficiency = Total number of tests designed / Total time taken

Test Review Efficiency

It estimates the efficiency of the test review process by measuring the number of test cases reviewed per unit of time.

Formula:

Test review efficiency = Total number of tests reviewed / Total time taken

Defects Per Test Hour

It estimates the average number of defects found per hour of testing.

Formula:

Defects per test hour = Total number of defects / Total number of test hours

Bugs Per Test

It estimates the average number of bugs found per test case.

Formula:

Bugs per test = Total number of bugs found / Total number of tests

Time To Test A Bug

It estimates the average time required to retest a defect after it has been fixed.

Formula:

Time to test a bug = Total time between defect fix and retest, summed over all retested defects / Total number of defects retested

Test Effectiveness

Test effectiveness measures how well software testing finds bugs. It is expressed as the percentage of defects detected by testing out of all defects reported.

For example, suppose a testing team found 80 defects in a software application and the total number of defects was 100. The test effectiveness would then be 80%, meaning the testing team found 80% of the defects in the software application.

With such measurements, you can answer questions like how good the tests were and whether the test cases deliver value. Test effectiveness metrics also help evaluate the quality of individual test cases and test suites.

You can measure test effectiveness in the following two ways:

- Metrics-based: test effectiveness using Defect Containment Efficiency

- Context-based: test effectiveness using team assessment

Using Defect Containment Efficiency, test effectiveness is the ratio of the number of defects detected before the release of the software application to the total number of defects found before and after release.

You can calculate the test effectiveness percentage using the formula given below.

Formula:

Test effectiveness = (Number of bugs found in testing / (Number of bugs found in testing + Number of bugs found after release)) x 100%

The higher the test effectiveness percentage, the better the test set, and the lower test case maintenance effort tends to be in the long term. For example, if the test effectiveness is 60%, then 40% of the defects escaped testing and were found only after release. In practice, test effectiveness rarely reaches 100%, so aim for a high value and investigate the cause when it drops.

Following are the cases when defect containment efficiency metrics may not be helpful:

- When a software application is completely developed.

- When a software application is unstable with many bugs.

- When software application development has limited time and resources for executing tests.

In the above cases, the QA team should focus on testing the critical features of the software application that are reported to have the most issues or have been modified. A context-based approach is recommended for measuring test effectiveness in such cases. This involves tailoring the software testing approach to the specific needs and risks of the particular software application development project.
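The defect containment efficiency formula can be checked against the figures used in the examples of this section with a small Python sketch. The function names are hypothetical; in practice, the before-release and after-release counts would come from a defect tracker, and the team would need to decide how long after release to keep counting escaped defects.

```python
def defect_containment_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Test effectiveness (DCE) as a percentage of all known defects."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded, so DCE is undefined")
    return 100.0 * found_before_release / total


def escaped_defect_pct(found_before_release: int, found_after_release: int) -> float:
    """Share of defects that slipped past testing and surfaced after release."""
    return 100.0 - defect_containment_efficiency(found_before_release, found_after_release)


print(defect_containment_efficiency(80, 20))  # 80.0
print(escaped_defect_pct(60, 40))             # 40.0
```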

Test Coverage

Test coverage measures the extent to which a software application is tested by identifying the code or functionality exercised by a set of test cases. Examples of these metrics include test cases by requirement, defects per requirement, and number of requirements.

Using the test coverage metric, you can estimate whether testing activities are completed and thus use it as the criterion to conclude software testing. Such measures signify the quality of the software applications under the testing process.
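Requirement-based coverage reduces to set arithmetic over a traceability mapping from test cases to the requirements they exercise. The following is a minimal Python sketch of the requirements coverage and test execution coverage formulas listed in this section, assuming such a mapping exists; the requirement IDs, test IDs, and function names are hypothetical.

```python
def requirements_coverage(requirements: set[str], test_to_reqs: dict[str, set[str]]) -> float:
    """Percentage of requirements exercised by at least one test case."""
    if not requirements:
        return 0.0
    covered = set().union(*test_to_reqs.values()) & requirements
    return 100.0 * len(covered) / len(requirements)


def uncovered_requirements(requirements: set[str], test_to_reqs: dict[str, set[str]]) -> set[str]:
    """Requirements with no mapped test case: candidates for new tests."""
    return requirements - set().union(*test_to_reqs.values())


def execution_coverage(executed: int, planned: int) -> float:
    """Executed test cases as a percentage of those planned."""
    return 100.0 * executed / planned if planned else 0.0


reqs = {"R1", "R2", "R3", "R4"}
mapping = {"T1": {"R1"}, "T2": {"R1", "R2"}, "T3": {"R9"}}  # R9 is not a tracked requirement
print(requirements_coverage(reqs, mapping))           # 50.0
print(sorted(uncovered_requirements(reqs, mapping)))  # ['R3', 'R4']
```

Intersecting with the tracked requirements keeps test cases that point at unknown or retired requirement IDs from inflating the coverage figure.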

Below are the key test coverage metrics:

- Test Coverage Percentage = (Number of tests run / Number of tests to be run) x 100

- Requirements Coverage = (Number of requirements covered / Total number of requirements) x 100

- Test Coverage (defect-based) = Number of detected faults / Number of predicted defects

- Test Design Coverage

- Test Execution Coverage

The last two metrics in this list, test design coverage and test execution coverage, are explained below.

Test Design Coverage

It is similar to requirements coverage; however, it measures the percentage of requirements that are mapped to test cases. With this metric, you can analyze functional test design coverage and improve test coverage. You can calculate it during the test design stage using the formula below.

Formula:

Test Design Coverage = (Total number of requirements mapped to test cases / Total number of requirements) x 100

Test Execution Coverage

It compares the number of test cases executed with the number planned, which also shows how many are still pending. You can measure it during test execution using the formula below.

Formula:

Test Execution Coverage = (Total number of executed test cases or scripts / Total number of test cases or scripts planned to be executed) x 100

Test Economics

In software application testing, the cost involved depends on several factors like the number of people involved in the process, the requirement of different automation testing tools and resources for testing, and the infrastructure (servers, storage system, hardware devices) required to support the software testing activities.

Given these factors, organizations must compare the planned cost of testing a software application with the actual cost to ensure that the software testing process stays within budget. Test economics metrics help with this and with measuring the return on investment (ROI) of testing. The key test economics metrics are as follows:

- Total Allocated Costs for Testing

- Actual Cost of Testing

- Budget Variance

- Schedule Variance

- Cost per Bug Fix

- Cost of not Testing

Total Allocated Costs for Testing

It refers to the total projected cost of all testing activities for a software application project over a planning period, such as a year. It includes both the direct and indirect costs of software testing, such as human resource costs (testers' salaries), equipment costs (real devices and hardware), and software costs (automation tools).

Formula:

Total allocated costs for testing = Direct costs + Indirect costs

Actual Cost of Testing

It refers to the amount actually spent on testing, as opposed to the projected cost. You can calculate it based on the cost per requirement, per test case, or per hour of testing.

Formula:

Actual cost of testing = Total direct costs + Total indirect costs

Budget Variance

It is the difference between the budgeted cost and the actual cost of testing the software application. It shows whether the testing activities were completed within budget: a positive value means testing came in under budget, and a negative value means it went over.

Formula:

Budget variance = Total allocated costs for testing - Actual cost of testing

Schedule Variance

It is the difference between the planned and actual testing schedules. The value indicates whether the testing activities were completed on time.

Formula:

Schedule variance = Planned testing schedule - Actual testing schedule

Cost per Bug Fix

It is the cost of identifying and fixing a single bug during software application testing. This value gives an idea of the efficiency and effectiveness of the testing process.

Formula:

Cost per bug fix = Total testing costs / Number of bugs found and fixed

Cost of not Testing

It is the cost incurred when defects are found only after the software application is released to end users. It includes the cost of fixing the defects, customer support, and loss of or damage to the organization’s reputation.

There is no standard formula for the cost of not testing; it depends on the specific situation in which the defects surface.
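The test economics formulas above can be sketched in a few lines of Python. This is an illustration, not a costing model: the `CostBreakdown` class and its direct/indirect split are hypothetical, the currency amounts are made-up inputs, and schedule durations are assumed to be measured in days.

```python
from dataclasses import dataclass


@dataclass
class CostBreakdown:
    direct: float    # e.g. tester salaries, devices, tool licenses
    indirect: float  # e.g. shared infrastructure, overhead

    @property
    def total(self) -> float:
        return self.direct + self.indirect


def budget_variance(allocated: CostBreakdown, actual: CostBreakdown) -> float:
    """Allocated minus actual: positive means under budget, negative means over."""
    return allocated.total - actual.total


def schedule_variance(planned_days: float, actual_days: float) -> float:
    """Planned minus actual duration: negative means testing ran late."""
    return planned_days - actual_days


def cost_per_bug_fix(total_testing_cost: float, bugs_found_and_fixed: int) -> float:
    """Total testing cost spread over the bugs that were found and fixed."""
    if bugs_found_and_fixed <= 0:
        raise ValueError("need at least one bug found and fixed")
    return total_testing_cost / bugs_found_and_fixed


allocated = CostBreakdown(direct=80_000.0, indirect=20_000.0)
actual = CostBreakdown(direct=85_000.0, indirect=25_000.0)
print(budget_variance(allocated, actual))   # -10000.0 (over budget)
print(schedule_variance(20, 23))            # -3
print(cost_per_bug_fix(actual.total, 220))  # 500.0
```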

Test Team Metrics

Test team metrics show whether work is allocated uniformly across the members of the software testing team. They also help check whether any team member needs more knowledge of the software testing process or of the software application project to proceed with their activities. However, test team metrics should be used for learning, not for blame.

Following are the test team metrics.

- The Number of Defects Returned per Team Member

- The Number of Open Bugs to be Retested by Each Team Member

- The Number of Test Cases Allocated to Each Team Member

- The Number of Test Cases Executed by Each Team Member

The Number of Defects Returned per Team Member

It signifies the number of defects found by each team member during software testing activities. It shows which team members identify more defects than others and which may need more training.

The Number of Open Bugs to be Retested by Each Team Member

It indicates the number of defects found during software testing that are still open and awaiting retest by each team member. It helps identify team members who are skilled at retesting and those who need more training in verifying bug fixes.

The Number of Test Cases Allocated to Each Team Member

It shows the number of test cases assigned to each team member for execution. With this metric, you can see how many test cases each team member has been allocated and adjust workloads accordingly.

The Number of Test Cases Executed by Each Team Member

It shows the number of test cases executed by each team member involved in software testing. This helps you understand which team members execute test cases efficiently and which need additional support.
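The allocation and execution counts per team member can be derived from a simple assignment log. The following is a minimal Python sketch, assuming hypothetical (member, test_id, executed) rows exported from a test management tool; in keeping with the point above, the output is meant for balancing workload, not for ranking people.

```python
from collections import Counter


def team_workload(assignments: list[tuple[str, str, bool]]) -> dict[str, tuple[int, int]]:
    """Return {member: (allocated, executed)} from (member, test_id, executed) rows."""
    allocated = Counter(member for member, _test_id, _done in assignments)
    executed = Counter(member for member, _test_id, done in assignments if done)
    return {member: (allocated[member], executed[member]) for member in sorted(allocated)}


rows = [
    ("asha", "T1", True),
    ("asha", "T2", False),
    ("ben", "T3", True),
]
print(team_workload(rows))  # {'asha': (2, 1), 'ben': (1, 1)}
```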

Test Execution Status

This metric shows how many tests have been executed and how many are still pending. Using test execution status, you can evaluate how much of the planned testing has been completed.
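A test execution status summary is a count over per-test statuses. The following is a minimal Python sketch, assuming hypothetical status strings and treating only "passed" and "failed" as executed, so blocked and not-yet-run tests both count as pending.

```python
from collections import Counter

EXECUTED = {"passed", "failed"}  # assumption: only these statuses count as executed


def execution_status(statuses: dict[str, str]) -> dict[str, float]:
    """Summarize how many planned tests have run and how many are still pending."""
    counts = Counter(statuses.values())
    executed = sum(counts[s] for s in EXECUTED)
    pending = len(statuses) - executed  # includes blocked and not-yet-run tests
    pct_executed = 100.0 * executed / len(statuses) if statuses else 0.0
    return {"executed": executed, "pending": pending, "pct_executed": pct_executed}


status = {"T1": "passed", "T2": "failed", "T3": "not_run", "T4": "blocked"}
print(execution_status(status))  # {'executed': 2, 'pending': 2, 'pct_executed': 50.0}
```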

Test Execution/Defect Find Rate Tracking

This metric measures the percentage of executed tests that failed during software application testing. Comparing the cumulative number of defects found with the test execution rate can signal when the software testing approach needs to change.

Formula:

Test Execution/Defect Find Rate = (Number of Failed Tests / Total Number of Tests Executed) x 100%

Defect Distribution

QA metrics should also be used to track the defects found during software testing. Because it is rarely possible to fix every defect in a single sprint, bugs need to be categorized by priority, severity, and other parameters. Defect distribution metrics help teams decide which defects to address first and how to allocate resources efficiently.

The following are the types of defect distribution metrics:

- Defect Distribution by Cause

- Defect Distribution by Feature/Functional Area

- Defect Distribution by Severity

- Defect Distribution by Priority

- Defect Distribution by Type

- Defect Distribution by Tester

- Defect Distribution by Test Type

Defect Distribution by Cause classifies defects by the cause of the issue, such as coding errors, design flaws, or system configuration issues.

Defect Distribution by Feature/Functional Area classifies defects by the feature or functional component of the software application where they occur. This helps the team find the components most prone to errors and prioritize testing and development accordingly.

Defect Distribution by Severity classifies defects by how severe or critical they are (high, medium, or low), so the team can address critical errors promptly.

Defect Distribution by Priority classifies defects by priority level (high, medium, or low). Priority is based on factors such as the impact of the defect on end users and the probability of the defect occurring.

Defect Distribution by Type classifies defects by the kind of issue, such as functionality, usability, or performance issues. This lets the team track defects by type and identify areas of the application that need more testing.

Defect Distribution by Tester classifies defects by the tester or group of testers that identified the issue, such as development testers, QA testers, UAT testers, or end users.

Defect Distribution by Test Type classifies defects by the testing activity that identified the issue, such as code review, walkthrough, test execution, or exploratory testing. This gives the team insight into the effectiveness of each testing activity so it can adjust its software testing strategy.
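All of these distributions share the same shape: group defects by one attribute, then count them. The Python sketch below shows that idea under stated assumptions. The defect records and field names (`severity`, `priority`, `feature`, `cause`) are hypothetical stand-ins for whatever a bug tracker exports.

```python
from collections import Counter

# Hypothetical defect records; field names and values are illustrative only.
defects = [
    {"id": 101, "severity": "high", "priority": "high", "feature": "checkout", "cause": "coding"},
    {"id": 102, "severity": "low", "priority": "medium", "feature": "search", "cause": "design"},
    {"id": 103, "severity": "high", "priority": "high", "feature": "checkout", "cause": "configuration"},
    {"id": 104, "severity": "medium", "priority": "low", "feature": "profile", "cause": "coding"},
]

def distribution(records, field):
    """Return (value, count, percent) tuples for one attribute, most common first."""
    counts = Counter(r[field] for r in records)
    total = sum(counts.values())
    return [(value, n, 100 * n / total) for value, n in counts.most_common()]

if __name__ == "__main__":
    for field in ("severity", "feature", "cause"):
        print(field, distribution(defects, field))
```

Swapping the `field` argument is enough to produce any of the distributions listed above, as long as the tracker records that attribute.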

Effectiveness of Change Metrics

In software application development, changes to features or functionality are common and need to be incorporated into the software application. However, it is important to monitor how these changes affect the stability of the application, because new changes or updates can introduce new defects. Effectiveness of change metrics address this by measuring how successful software changes are in terms of their impact on the stability and quality of the software application.

The two effectiveness of change metrics are:

- Effect of Testing Changes

- Defect Injection Rate

Effect of Testing Changes measures the number of defects found during testing that are attributable to new changes. For example, if the development team received 50 defect reports and, after classifying them, found that 30 were related to changes made to the software application, the number of defects attributable to changes is 30.

Defect Injection Rate measures the average number of defects that each change introduces into the software application. For example, if 20 changes were made to the software application and 60 defects resulted from those changes, the defect injection rate is 60 / 20 = 3 defects per change.
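A minimal Python sketch of both change metrics, reproducing the figures from the examples (30 of 50 reports tied to changes; 60 defects across 20 changes). The `caused_by_change` flag is a hypothetical field; in practice this attribution comes from triaging each defect report.

```python
def defects_attributable_to_changes(defects):
    """Effect of Testing Changes: count defects flagged as caused by a change."""
    return sum(1 for d in defects if d["caused_by_change"])

def defect_injection_rate(change_defects, tested_changes):
    """Average number of defects introduced per tested change."""
    if tested_changes <= 0:
        raise ValueError("tested_changes must be positive")
    return change_defects / tested_changes

# 50 hypothetical reports, of which the first 30 are attributed to changes.
reports = [{"caused_by_change": i < 30} for i in range(50)]
print(defects_attributable_to_changes(reports))  # 30
print(defect_injection_rate(60, 20))  # 3.0
```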

Formula:

Defect Injection Rate = (Total number of problems attributable to the changes) / (Number of tested changes)

Defect Distribution Over Time Charts

This chart shows how the total number of defects rises and falls over time. It is a graphical representation of the number of defects reported and resolved over a given period, for example, across the Software Testing Life Cycle. From the graph, you can read the number of defects reported each day or week, along with the number of defects resolved or closed during the same period.
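The data behind such a chart is a per-period count of defects reported and resolved. This Python sketch buckets a hypothetical defect log by ISO week; the dates and the `(reported_on, resolved_on)` tuple layout are assumptions for illustration, and plotting is left to whatever charting tool the team uses.

```python
from collections import Counter
from datetime import date

# Hypothetical defect log: (reported_on, resolved_on or None if still open).
defect_log = [
    (date(2025, 3, 3), date(2025, 3, 5)),
    (date(2025, 3, 4), None),
    (date(2025, 3, 11), date(2025, 3, 12)),
    (date(2025, 3, 12), date(2025, 3, 20)),
]

def week_key(d):
    """Label a date by ISO year and week number, e.g. '2025-W10'."""
    iso = d.isocalendar()
    return f"{iso[0]}-W{iso[1]:02d}"

def weekly_trend(log):
    """Return (week, reported, resolved) rows, sorted by week."""
    reported = Counter(week_key(r) for r, _ in log)
    resolved = Counter(week_key(s) for _, s in log if s is not None)
    weeks = sorted(set(reported) | set(resolved))
    return [(w, reported[w], resolved[w]) for w in weeks]

for row in weekly_trend(defect_log):
    print(row)
```

Adding a category field (cause, module, severity, platform) and filtering the log before calling `weekly_trend` would give the per-category views described below.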

The defect distribution over time chart can also be broken down by the following categories:

- Defect Distribution Over Time By Cause

- Defect Distribution Over Time By Module

- Defect Distribution Over Time By Severity

- Defect Distribution Over Time By Platform

Defect Distribution Over Time By Cause shows the number of defects reported over time, classified by their main cause, such as design, development, testing, or documentation errors.

Defect Distribution Over Time By Module shows the number of defects reported over time for each module or component of the software application.

Defect Distribution Over Time By Severity shows the number of defects reported over time, classified by severity (critical, medium, or low). With this, you can prioritize defects and make sure critical issues are addressed promptly.

Defect Distribution Over Time By Platform shows the number of defects classified by the platform or environment where they were found. Platforms and environments include operating systems such as Windows, macOS, Linux, Android, and iOS, as well as web browsers, databases, and other software components that the application depends on. For example, a defect may appear on one browser or operating system but not on others.

Other Defect Metrics

Here are some other defect metrics:

- Defect Removal Efficiency/Defect Gap Analysis

- Defect Density

- Defect Age

Defect Removal Efficiency (or Defect Gap Analysis) indicates how well the development team handles and fixes the valid defects reported by the testing team. You calculate the defect gap by comparing the total number of valid defects submitted to the development team with the total number of defects fixed by the end of the Software Development Life Cycle.
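A minimal Python sketch of the defect gap percentage, using hypothetical figures of 90 defects fixed out of 120 valid defects reported.

```python
def defect_gap_percent(fixed, valid_reported):
    """Defects fixed as a percentage of valid defects reported."""
    if valid_reported <= 0:
        raise ValueError("valid_reported must be positive")
    if not 0 <= fixed <= valid_reported:
        raise ValueError("fixed must be between 0 and valid_reported")
    return 100 * fixed / valid_reported

print(defect_gap_percent(90, 120))  # 75.0
```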

Formula:

Defect Gap % = (Total number of defects fixed / Total number of valid defects reported) × 100

Defect Density indicates the number of defects in a software application per unit of size, such as components, lines of code, or function points. For example, if a software application has 100,000 lines of code and 1,000 defects were identified during testing, the defect density is 1,000 / 100,000 = 0.01 defects per line of code (equivalently, 10 defects per 1,000 lines of code).
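A minimal Python sketch of defect density that reproduces the example of 1,000 defects in 100,000 lines of code. The optional `per` scaling parameter is an assumption added for convenience, since density is often reported per 1,000 lines (KLOC).

```python
def defect_density(defect_count, size, per=1):
    """Defects per unit of size; with size in lines, per=1000 gives defects per KLOC."""
    if size <= 0:
        raise ValueError("size must be positive")
    return defect_count * per / size

print(defect_density(1000, 100_000))            # 0.01 defects per line
print(defect_density(1000, 100_000, per=1000))  # 10.0 defects per KLOC
```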

Formula:

Defect Density = Total number of defects / Size of the software or application area

Defect Age measures how long it takes the development team to resolve a defect, from when it is reported until it is resolved; averaged across defects, it shows how quickly issues are fixed. It is usually measured in days, but testing teams working with rapid deployment models that release weekly or daily should measure it in hours. Knowing the defect age, you can track how long each bug stays open before it is completely resolved.
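A minimal Python sketch of defect age, measured in hours so it suits fast release cycles. The two timestamp pairs are hypothetical; dividing by 24 converts the result to days.

```python
from datetime import datetime

def defect_age_hours(created, resolved):
    """Hours between when a defect was created (reported) and when it was resolved."""
    if resolved < created:
        raise ValueError("resolved must not be earlier than created")
    return (resolved - created).total_seconds() / 3600

def average_defect_age_hours(defects):
    """Average age over (created, resolved) pairs."""
    if not defects:
        raise ValueError("need at least one resolved defect")
    ages = [defect_age_hours(c, r) for c, r in defects]
    return sum(ages) / len(ages)

resolved_defects = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 5, 15, 0)),   # 54 hours
    (datetime(2025, 3, 4, 10, 0), datetime(2025, 3, 4, 16, 0)),  # 6 hours
]
avg = average_defect_age_hours(resolved_defects)
print(f"{avg:.1f} hours ({avg / 24:.2f} days)")  # 30.0 hours (1.25 days)
```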

Formula:

Defect Age = Time resolved - Time created

How to Calculate QA Metrics?

Given the large number of QA metrics in software testing, what is the common approach to calculating them? In this section, we discuss the common steps for calculating quality assurance metrics.

- Identify the process to measure

- Define baseline

- Calculate the actual value

- Identify areas of improvement

To identify the process to measure, first prepare a test plan by reviewing the Software Requirement Specification (SRS) of the software application. Then develop manual test case scenarios to test different aspects of the application, such as components, user behavior, and performance. Also prepare a list of the testing procedures you want to optimize.

For example, the project head asks the QA team to identify and fix application bugs and to track the software testing budget.

To define the baseline, share the QA metrics you have chosen with other team members, including management, for approval. For each QA metric, set baseline numbers that help evaluate the efficiency of the testing process in each iteration.

For example, the QA team sets goals for documenting various aspects of software testing, such as the number of test cases executed, the bugs found, the cost of fixing those bugs, and the overall cost of software testing. Here, the project head wants to limit the testing budget to 4 lakhs; this becomes the baseline for the testing metrics.

To calculate the actual value, run extensive tests after setting the baseline to find defects in the software application, and make the required changes. Document the details you want to track and evaluate. Note that using large data sets for the analysis makes QA metrics more reliable.

For example, the QA team documents all the steps required to run the software tests and estimates a total cost of 6 lakhs. This is the actual cost of software testing. The budget variance is 2 lakhs, the difference between the actual cost and the budgeted cost.
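The comparison of actual values against baselines can be sketched in a few lines of Python. The metric name `testing_cost_lakhs` is a hypothetical label; the figures mirror the 4-lakh budget and 6-lakh actual cost in the example. A positive variance means the actual value exceeded the baseline.

```python
def compare_to_baseline(actuals, baselines):
    """Return actual minus baseline for each metric that has both values."""
    return {name: actuals[name] - baselines[name] for name in baselines if name in actuals}

baselines = {"testing_cost_lakhs": 4}
actuals = {"testing_cost_lakhs": 6}
print(compare_to_baseline(actuals, baselines))  # {'testing_cost_lakhs': 2}
```

Running the same comparison at the end of each iteration gives a simple record of how each metric moves relative to its baseline.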

To identify areas of improvement, compare the actual values with the baseline numbers to find the parts of the software application or testing process to optimize. Repeat the same steps in each iteration and document the results. Based on them, prepare a detailed report on the metrics, including the formulas, experiments, and results.

Knowing the various QA metrics, choosing the right ones for your software development project, and using them accurately are key to planning and executing the QA process. QA metrics are especially important in Agile development: the QA team should monitor them closely to track its software testing approach and know the exact numbers it has to hit. When you fail to meet those QA metrics, reorient your software testing strategy.

When you plan the QA process, make sure your testing strategy includes testing in real-world environments. Testing on real devices helps uncover bugs in the software application that might otherwise go unnoticed.

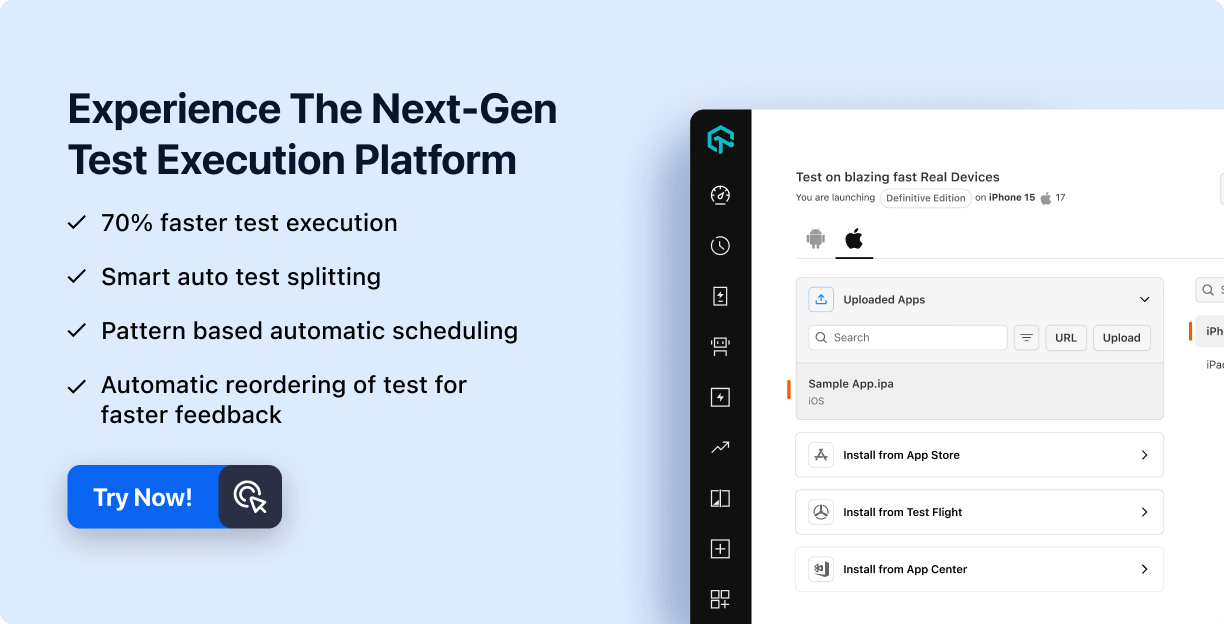

Digital experience testing platforms like LambdaTest offer a scalable cloud of 3000+ real browsers, devices, and OS combinations to automate web testing and mobile app testing. You can test on the LambdaTest real device cloud to find bugs in software applications and get accurate results.


Further, without having accurate information on the bugs, QA metrics cannot be used to set the baseline and measure the success of the software application testing.

Key Considerations when using QA Metrics

To use QA metrics accurately, keep the following points in mind:

- Use QA metrics as indicators, not absolutes.

- Do not depend on a single QA metric to analyze the quality of the software application.

- Every team member involved in developing and testing the software application should understand what each QA metric means and how it is calculated.

- QA metrics can be misinterpreted. For example, a high defect count might indicate poor software quality, but it could also mean that the testing team is doing a thorough job of finding and reporting issues.

Conclusion

QA metrics are the objective and subjective measurements that QA analysts collect throughout the software development and testing process. You can think of them as indicators of the quality of the software application.

In this tutorial, we discussed QA metrics and the different types used to track and monitor the effectiveness of software application tests. By knowing the different types of QA metrics and how to use them, you will be able to ensure the accuracy of the tests you execute and verify the software application's functionality and performance.

About author

Nazneen Ahmad is an experienced technical writer with over five years of experience in the software development and testing field. As a freelancer, she has worked on various projects to create technical documentation, user manuals, training materials, and other SEO-optimized content in various domains, including IT, healthcare, finance, and education. You can also follow her on Twitter.
