Performance Indicators: KPI Examples and Key Points

Improve software testing with Key Performance Indicators (KPIs). Learn their crucial role in ensuring quality and efficiency in testing processes.

Last Modified on: September 26, 2025


OVERVIEW

Key Performance Indicators (KPIs) play a crucial role in software testing. They are the metrics used to assess the effectiveness, efficiency, and quality of both the testing process and the applications being tested. As the complexity and diversity of applications continue to increase, it is critical to identify and rectify defects early in the Software Development Life Cycle (SDLC) and to assess the performance, functionality, and quality of the testing process. However, ensuring that test results are valid, actionable, and meaningful, while also communicating issues effectively, poses challenges.

To address these challenges and enhance software testing methodologies, there is a need for the systematic application of Key Performance Indicators (KPIs). Using key performance indicators, you can evaluate the applied testing approach, check how accurately the software application works, and analyze any scope for improvement. But the question remains: How can organizations effectively harness the power of Key Performance Indicators in software testing to ensure robust, reliable, and scalable applications?

Software testing plays a crucial role in identifying and rectifying defects within a software application at an early stage in the SDLC. Additionally, it provides different ways to evaluate the performance and functionality of software applications through various testing approaches, including load testing, stress testing, and more.

Furthermore, software testing verifies if the software application aligns with the needs of end users and stakeholders. It also examines how the software behaves under various conditions, such as high user traffic or low battery situations. Any bugs or errors affecting the software's reliability and scalability are identified and addressed during this process.

However, when testers conduct these tests, they must ensure they are valid, actionable, and provide meaningful results. Additionally, effectively communicating issues and sharing information about the testing process and application with the team poses challenges. In addressing these challenges, key performance indicators serve as a comprehensive solution.

Specific key performance indicators, also called testing metrics, play a crucial role in evaluating the effectiveness of testing procedures for organizations.

By utilizing these metrics, testing professionals and quality assurance teams can determine the success of the testing process and pinpoint critical areas in the software that require further attention and enhancement. Key performance indicators gauge performance testing and shed light on software testing approaches and types.

This guide covers the concept of key performance indicators, their importance in software testing, when they can be applied, and some of the key performance indicators commonly used in software testing.

Understanding the key performance indicators

In software testing, a key performance indicator is a parameter that assesses the performance of the software application and of the test approaches used. In other words, key performance indicators play a crucial role in defining objectives for software applications and contribute to the evaluation of fundamental aspects of an organization’s software development and testing processes. They give information on how the application works and how accurate the test approach is, which can be used to monitor and analyze the testing outcome. Based on this, the team decides how to improve the testing methodologies and enhance the functionality of the software application.

When working with key performance indicators, it is essential to note that no single indicator can evaluate the effectiveness and quality of software application testing. Instead, different indicators describe health in diverse domains like quality, efficiency, and performance.

Some common examples of key performance indicators are defect density, execution time, response time, etc. Organizations leverage them to reflect their strategic goals and objectives.

Let us understand more about key performance indicators in the following section.

Key points on the key performance indicators

Here are some key points to remember about key performance indicators in software testing:

- They give testers quantifiable metrics for evaluating different aspects of software testing, like resource utilization, test coverage, etc.

- They allow evaluation of the quality of the software application through measures like pass/fail rate, defect density, etc.

- They focus on the efficiency of the test process and identify high-risk areas in the software application. For example, key performance indicators give data on test execution time and allow testers to focus more on critical components of the software application for optimization.

- Some indicators, such as response time and error rates, directly impact user satisfaction, making them crucial for user-centric software.


Considering the critical points on key performance indicators, many may relate them to QA metrics. Even though they are related to each other, they hold crucial differences.

The difference between the key performance indicators and QA metrics

QA metrics and key performance indicators are interrelated, but the concepts differ. Metrics are the more precise and detailed measurements showing how accurately the software application functions and performs, such as response time and throughput. Key performance indicators, on the other hand, relate to the complete test process, including the quality of the software application it delivers. For instance, a KPI related to your performance testing could be the percentage of users completing a transaction within a set time frame. In contrast, a metric in the same context might be the average transaction duration.

| Aspect | Key Performance Indicators (KPIs) | QA Metrics |
|---|---|---|
| Purpose | Measure the overall performance, efficiency, and effectiveness of the testing process and software application. | Focus on quantifying various quality aspects and adherence to established standards and processes. |
| Nature | Typically, KPIs are high-level and strategic, often related to business goals and objectives. | QA metrics are more specific, granular, and technical, concentrating on quality attributes and process details. |
| Examples | Percentage of users completing a transaction within a set time frame. | Response time, throughput, average transaction duration. |
| Focus | Focus on the outcome and impact of testing efforts on the software project's success and user satisfaction. | Focus on assessing the quality of the software, adherence to QA processes, and identifying areas for improvement. |
| Timeframe | Often associated with project milestones or long-term goals. | Continuous monitoring throughout the Software Development Life Cycle. |
| Responsibility | Typically, KPIs interest project managers, stakeholders, and senior management. | QA metrics are primarily used by quality assurance and testing teams. |
| Measurement Units | Typically expressed as percentages, ratios, or other business-related units. | Measured using various technical and quality-related units, such as defects, test cases, lines of code, or time. |
| Actionability | KPIs often prompt strategic decisions and actions, such as resource allocation or project prioritization. | QA metrics drive tactical decisions and actions, such as defect resolution, test case refinement, or process improvement. |

Remember that KPIs and QA metrics serve different purposes but are both valuable in ensuring software quality and project success.
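To make the distinction concrete, here is a minimal sketch that computes both a metric and a KPI from the same performance test data. The duration values and the 2-second target are hypothetical, and the sketch counts transactions rather than users for simplicity.

```python
from statistics import mean

# Hypothetical transaction durations (seconds) from one performance test run.
durations_s = [1.2, 0.8, 2.5, 1.9, 3.4, 0.9, 1.1, 2.2]
TARGET_S = 2.0  # assumed "set time frame" for the KPI

# Metric: a detailed measurement of how the application performs.
average_duration_s = mean(durations_s)

# KPI: share of transactions completed within the target time frame.
within_target = sum(1 for d in durations_s if d <= TARGET_S)
kpi_percent = within_target * 100 / len(durations_s)

print(f"Metric: average transaction duration = {average_duration_s:.2f} s")
print(f"KPI: transactions completed within {TARGET_S} s = {kpi_percent:.1f}%")
```

The metric describes the raw behavior, while the KPI expresses the same data against a goal that stakeholders care about.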

For a thorough grasp of KPIs and QA metrics, their functionality, and their vital role in securing software quality and project success, explore our software testing metrics tutorial for deeper insights.

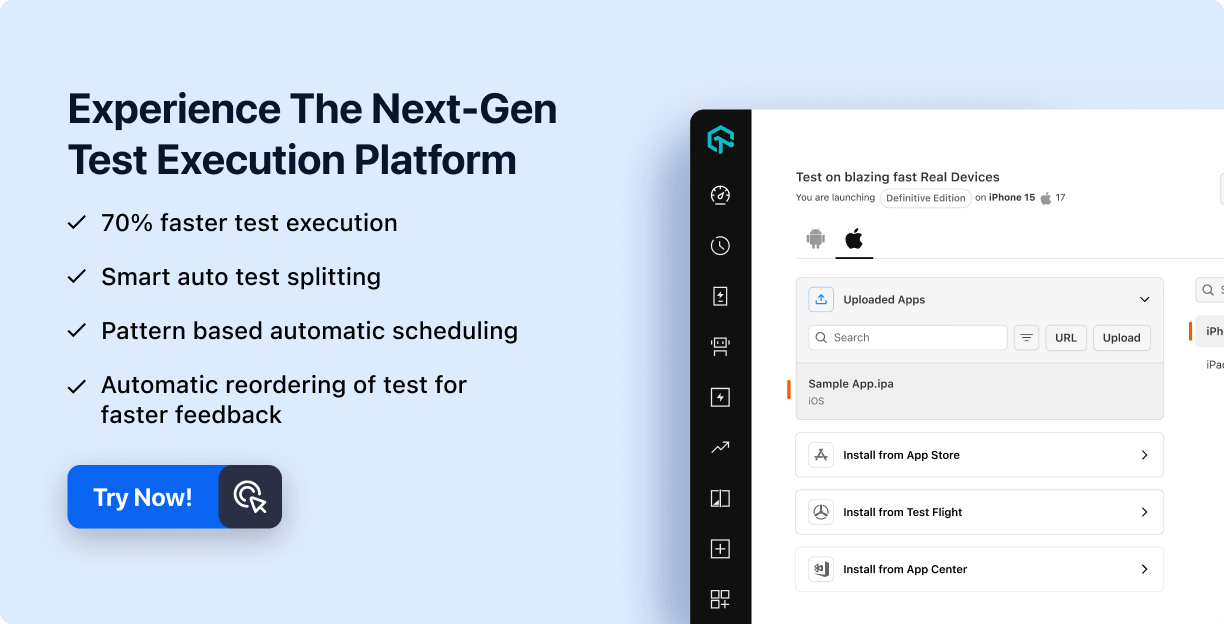

Achieving quantifiable metrics and ensuring the quality and efficiency of your testing process are most important. That is where you can make use of cloud platforms like LambdaTest. LambdaTest is an AI-Native test orchestration and execution platform that lets you run manual and automated tests at scale with 3000+ real devices, browsers, and OS combinations. With its advanced testing platform, you can evaluate and optimize key performance indicators (KPIs). LambdaTest provides real-time insights into critical KPIs like response time and error rates, ensuring a user-centric approach to software testing. It focuses on efficiency and identifies high-risk areas in your software application, making it easier to streamline your testing efforts.

If you want more detailed information on the number of tests passed or failed, try LambdaTest Test Analytics.

In the following section, let us discover the importance of key performance indicators in software testing.

Importance of key performance indicators

In software testing, simply adopting automation testing tools and technologies addresses the need for testing resources but may not optimize the efficiency of the testing process. Testers use key performance indicators to assess test process efficiency and to guide the improvements that optimize it.

Here are some key reasons why key performance indicators matter:

- They assist in identifying the improvements needed to enhance the quality and reliability of software applications. This is because key performance indicators provide data-driven insights into the performance of different test types like performance testing, system testing, etc.

- Key performance indicators provide quantitative data on the progress of the software development project, for example, the lines of code written and how many bugs have been identified and fixed. This, in turn, helps determine how far the test process has progressed in developing the software application.

- Help ensure the software application quality being tested meets the required standards. For instance, key performance indicators like defect density and code coverage offer insights into identified defects and assess test coverage, enabling teams to take corrective actions to meet required standards.

- Enables well-informed decision-making regarding software application development across all levels of the organization. By assessing key performance indicators like test cycle duration and automation coverage, the testing and development teams can decide on the testing approach and prioritize testing tasks to enhance efficiency and effectiveness. For instance, shorter test cycle durations and higher automation rates suggest efficient development processes, encouraging investments in automation and process improvements.

- By monitoring and sharing performance metrics such as test case execution or resource utilization rates, the testing and development teams can align their efforts and collaborate toward a shared objective.

- Key performance indicators of the software application give scope to the testers to prioritize the critical functionality for early improvement and unearth any significant risk associated with the test process. Eventually, it helps the team to focus more on testing efforts on what matters the most.

- By evaluating the key performance indicators, testers can gather and present information on the testing of applications in a much more precise and concise manner. This is because testers can present the key findings in a format that helps quickly compare charts, graphs, etc.

- By comparing actual KPIs with anticipated or desired ones, you can identify deviations, anomalies, or issues that may affect software application performance.
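The last point, comparing actual KPIs with desired ones, can be sketched in a few lines. The KPI names, targets, and measured values below are all hypothetical and only illustrate the comparison.

```python
# Hypothetical targets and measured values; names and numbers are illustrative.
targets = {"pass_rate_pct": 95.0, "automation_pct": 70.0, "defect_density_per_kloc": 1.0}
actuals = {"pass_rate_pct": 85.0, "automation_pct": 70.0, "defect_density_per_kloc": 11.4}

# For some KPIs a higher value is better, for others a lower one.
higher_is_better = {
    "pass_rate_pct": True,
    "automation_pct": True,
    "defect_density_per_kloc": False,
}


def off_target(name: str) -> bool:
    """Return True when the measured KPI misses its target."""
    if higher_is_better[name]:
        return actuals[name] < targets[name]
    return actuals[name] > targets[name]


deviations = [name for name in targets if off_target(name)]
for name in deviations:
    print(f"{name}: actual {actuals[name]} vs target {targets[name]}")
```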

In the software testing process, there are situations where key performance indicators are helpful and situations where they are not. The following sections cover both.

When to use key performance indicators in software testing?

It is essential to leverage key performance indicators throughout the Software Development Life Cycle to evaluate the reliability of the testing process.

- Testing Process Improvement: Once you have successfully implemented and executed the testing process several times, measuring the key performance indicators to identify areas where your testing procedure could benefit from improvement is appropriate.

- Team Efficiency: Testing teams are often large, and managing a sizable testing team implies a broad distribution of testing responsibilities. Consequently, it's valuable to measure specific testing KPIs to keep everyone aligned and to ensure efficient and effective task allocation.

- Methodology Update: When updating your testing methodology, measuring KPIs against the original process proves advantageous. It helps you determine the objectives you aim to accomplish with the new testing methodologies.

- Post-Release Monitoring: When software applications have been released, monitoring their performance and functionality in a live environment is essential. Here, you need to use key performance indicators like error rates, which give information on the issues that must be fixed immediately.

Before evaluating key performance indicators, organizations should clearly understand their current testing process. This comprehension is crucial for selecting key performance indicators that reveal improvement areas. Without this clarity, KPIs may be used incorrectly and lead to testing errors.

Where are key performance indicators not applicable?

While it's crucial to measure the efficiency of a test process to ensure it's on the right track, utilizing quality KPIs for assessing the testing process may not be practical in certain situations:

- In the Initial Stages of Product Testing

When you are preparing to launch a software application for the first time, and the testing phase has just commenced, there may not be enough data to evaluate. During this period, the emphasis should be on establishing a testing process rather than measuring its effectiveness.

- For Short Testing Cycles

If you are developing a software application that won't undergo substantial changes for an extended period after its initial launch, and testing is a one-time affair, assessing the process's effectiveness might not yield significant benefits since there won't be additional testing cycles to enhance.

- With Limited Budget Constraints

Like any activity, measuring testing KPIs consumes time and effort, resulting in additional costs. Thus, when operating with a constrained testing budget, the primary focus should be implementing a cost-effective testing process rather than tracking KPIs.

The key performance indicators

Key performance indicators in software testing are essential for measuring the quality and accuracy of software applications. These key performance indicators play a crucial role in the SDLC, as the testing team relies on them to ensure the reliability of their tests. By using KPIs, testing efforts can become more effective and accurate. Here are some essential key performance indicators that you should be aware of.

Defect Detection Effectiveness (DDE)

Defect Detection Effectiveness for a testing phase signifies the proportion of identified defects within that phase relative to the total defects, presented as a percentage. It serves as a measure of the efficacy of individual steps. If, for instance, the aggregate count of identified defects amounted to 120, with 30 being pinpointed during system testing, the DDE for system testing can be computed as follows: (30 ÷ 120) x 100 = 25%.

In addition, DDE also measures the total number or percentage of identified and fixed bugs concerning the total number of defects in the software application. This information makes it possible to check how effective the testing process is and address different software system issues.

DDE = (Defects detected in the specific phase ÷ Total defects) x 100

Example of DDE

Imagine your software goes through these testing phases before its deployment on production servers:

- Unit Testing

- Integration Testing

- System Testing

- Acceptance Testing

Let's suppose that following the deployment of the software in production, we have the following data:

| Phase | Defects found | DDE | Cumulative DDE |
|---|---|---|---|
| Unit testing | 25 | 25% | 25% |
| Integration testing | 30 | 30% | 55% |
| System testing | 20 | 20% | 75% |
| Acceptance testing | 10 | 10% | 85% |
| Production | 15 | 15% | 100% |
| Total | 100 | | |

Insights Drawn from DDE

- More defects were found during integration testing than during unit testing, suggesting that enhancements in the unit tests may be warranted.

- Automation testing (comprising unit and integration testing) caught only 55% of the defects.

- A substantial fraction, 25%, of the defects were brought to light by users (during acceptance testing) and end-users (in the production environment). This sizable share indicates that internal testing was not effective enough.
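The per-phase and cumulative DDE figures can be reproduced with a short script. This is a minimal sketch that uses the defect counts from the example; the variable names are illustrative.

```python
from itertools import accumulate

# Defects found per phase, taken from the example data.
defects_by_phase = {
    "Unit testing": 25,
    "Integration testing": 30,
    "System testing": 20,
    "Acceptance testing": 10,
    "Production": 15,
}

total = sum(defects_by_phase.values())

# DDE per phase = defects detected in the phase / total defects x 100.
dde = {phase: count * 100 / total for phase, count in defects_by_phase.items()}

# Running total of DDE across phases, in execution order.
cumulative = dict(zip(dde, accumulate(dde.values())))

for phase, count in defects_by_phase.items():
    print(f"{phase:<20} {count:>3} {dde[phase]:>5.0f}% {cumulative[phase]:>5.0f}%")
```

The share of defects that escaped internal testing is then simply the DDE of acceptance testing plus the DDE of production.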

How does it measure testing?

Here are some ways through which DDE serves as the crucial measure of the testing process:

- Testers and developers get detailed information on the overall quality of the software application. For example, a high DDE indicates more comprehensive testing, as more defects are identified and addressed.

- The project manager uses DDE to keep a check on the progress of the testing process and, based on this, can make data-driven decisions. If there is a low DDE, it will show that more testing efforts are required to improve the quality of the software applications.

- It also helps in risk assessment in software testing. The low DDE shows some undetected issues or bugs that can interfere with production, leading to crucial issues and adversely affecting the user experience.

Significance

- Identifies deficiencies across testing phases.

- Potential for a substantial reduction in retesting efforts.

- Potential to lower the overall project costs.

- Enhances the productivity of the Quality Assurance team.

- Enable teams to identify trends and continuously improve their testing process, tools, and methodologies.

Defect Density

Defect Density is a key performance indicator that measures the number of defects relative to the size of the software. It corresponds to the count of verified defects within a software module during a specific timeframe or development phase, divided by the module's size. It helps determine whether the software is ready for release.

Defect Density is typically measured per thousand lines of code, often called KLOC.

A basic formula for determining Defect Density involves dividing the number of defects by the module's size, such as the number of lines of code.

Defect Density (DD) = (Number of Defects) / (Size of Module)

Example of Defect Density

Suppose you have added three modules to your software applications. Each module has the following count of identified glitches:

Module 1 = 10 bugs

Module 2 = 20 bugs

Module 3 = 10 bugs

Total bugs= 10+20+10 = 40

The total lines of code (LOC) for each module are as follows:

Module 1 = 1000 LOC

Module 2 = 1500 LOC

Module 3 = 1000 LOC

Total Lines of Code = 1000+1500+1000 = 3500

The calculation of Defect Density is as follows:

Defect density = 40/3500 ≈ 0.01143 bugs/LOC ≈ 11.43 bugs/KLOC
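The same calculation can be sketched in code, which also makes it easy to compare modules. The module data comes from the example above; the dictionary layout is an assumption made for illustration.

```python
# Defects and lines of code per module, taken from the example.
modules = {
    "Module 1": {"defects": 10, "loc": 1000},
    "Module 2": {"defects": 20, "loc": 1500},
    "Module 3": {"defects": 10, "loc": 1000},
}


def density_per_kloc(defects: int, loc: int) -> float:
    """Defects per thousand lines of code."""
    return defects / loc * 1000


total_defects = sum(m["defects"] for m in modules.values())
total_loc = sum(m["loc"] for m in modules.values())
overall = density_per_kloc(total_defects, total_loc)

for name, m in modules.items():
    print(f"{name}: {density_per_kloc(m['defects'], m['loc']):.2f} bugs/KLOC")
print(f"Overall: {overall:.2f} bugs/KLOC")
```

Converting bugs/LOC to bugs/KLOC means multiplying by 1000, so a project with 40 defects in 3500 lines sits well above one defect per KLOC.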

How does it measure testing?

- Defect density highlights the quality of the software component or module. For example, if the defect density is high, the module has more defects per unit of size, and those issues are likely to affect the software application’s functionality.

- The testing team can compare different modules within a software project. For example, the team can allocate more resources for an effective test process if an application component has a high defect density.

- A module with a high Defect Density can prompt teams to investigate the underlying causes. This can lead to improvements in coding practices and development processes.

Significance

Defect Density assists organizations in evaluating the software's quality. For instance, it can be used to determine the appropriate time for the release of a software application or when it transitions out of the alpha or beta phase (i.e., when the defect density falls below a specific threshold). It can also serve as an indicator of the development team's performance.

Organizations typically use defect density during the system testing phase. At that point, the team can surface issues in the code and address more substantial problems that span the entire program. Applying it earlier, during development, may draw the team's attention to minor problems and risk pushing the project past its deadlines.

There is no universally fixed benchmark for defect density. That said, one defect per thousand lines of code is generally considered an indicator of sound project quality.

Active Defects

Active Defects is a straightforward yet crucial key performance indicator that tracks the status of defects. In this context, the term "active" can encompass states such as new, open, or fixed (awaiting re-testing). It enables the team to take the necessary measures to resolve defects.

The testing manager must establish a cut-off point beyond which prompt action is required to reduce active defects. The general principle is that a lower count of active defects signifies higher application quality at a given moment.

Whether it's 100 defects, 50 defects, or 25 defects, the chosen threshold will determine what is acceptable and what isn't. Any count exceeding the established threshold falls into the "Not OK" category and should be promptly addressed.

Example of Active Defect

For example, during the test process of the software application, it was found that users cannot reset their passwords through the “forgot password” feature. This issue is marked as an active defect as it does not allow the application to function correctly.

The formula to calculate Active Defects is simple:

Active Defects = Number of Defects Open and Not Resolved.
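As a rough sketch, the count and the threshold check could look like this. The defect records, the status names, and the threshold of 3 are hypothetical; a real defect tracker would supply its own fields.

```python
from collections import Counter

# Statuses treated as "active" in this sketch: new, open, or fixed but awaiting re-test.
ACTIVE_STATUSES = {"new", "open", "fixed"}
THRESHOLD = 3  # hypothetical cut-off chosen by the testing manager

# Hypothetical export from a defect tracker: (defect id, status).
defects = [
    ("BUG-101", "open"),
    ("BUG-102", "closed"),
    ("BUG-103", "new"),
    ("BUG-104", "fixed"),
    ("BUG-105", "open"),
    ("BUG-106", "closed"),
]

active = [bug_id for bug_id, status in defects if status in ACTIVE_STATUSES]
by_status = Counter(status for _, status in defects if status in ACTIVE_STATUSES)
verdict = "OK" if len(active) <= THRESHOLD else "Not OK"

print(f"Active defects: {len(active)} {dict(by_status)} -> {verdict}")
```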

How does it measure testing?

- Active Defects provide visibility into the progress of defect resolution during the testing phase. Over time, a decreasing number of active defects indicates that the development team is addressing and resolving issues.

- If there is any active defect, it highlights the area that needs more attention by the team.

Significance

- By addressing active defects, the team can improve the developed software application's quality and analyze the test process's effectiveness.

- Active defect monitoring is a crucial activity to maintain the timeline for the release of the software application. This helps fix the defect in time and, in turn, allows timely release of the software application.

Authored Tests

Authored tests is another critical key performance indicator. The testing manager evaluates it to assess the test design efforts undertaken by business analysts and testing engineers. The indicator quantifies the number of test cases written within a specified time frame. It also facilitates the analysis of test cases against established requirements, and the designed test cases can be further assessed for inclusion in either the regression or the ad hoc test suite.

This performance indicator holds significance for test managers since it allows them to oversee the test design activities conducted by their business analysts and testing engineers. As new requirements are documented, it becomes essential to develop corresponding system tests and to decide whether each test case belongs in the regression test suite. In essence, the critical question is whether the test being developed by the Test Engineer adequately covers an important functionality aspect of the Application Under Test (AUT).

If the answer is affirmative, it should be saved for inclusion in the regression testing suite and slated for automation. Conversely, if it does not meet this criterion, it should be placed in the pool of manual tests that can be executed on an ad hoc basis when needed. It is recommended to monitor "Authored Tests" in relation to the number of Requirements within a given IT project.

Authored Tests = Number of Test Cases (or Test Scripts) Prepared

Example of authored test

Suppose three QA engineers are testing a website in a software development project. They are working in a two-week sprint, and each is responsible for authoring test cases. For example,

Tester 1 authored 30 test cases during the sprint.

Tester 2 authored 20 test cases during the sprint.

Tester 3 authored 10 test cases during the sprint.

Total Test Cases Authored = 30 (Tester 1) + 20 (Tester 2) + 10 (Tester 3) = 60 test cases
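A minimal sketch of the same tally follows, extended with the ratio of authored tests to requirements mentioned earlier. The requirement count of 40 is a hypothetical value, not part of the example.

```python
# Test cases authored per tester during the sprint, from the example.
authored_in_sprint = {"Tester 1": 30, "Tester 2": 20, "Tester 3": 10}

# Hypothetical number of requirements in scope for the same sprint.
REQUIREMENTS_IN_SCOPE = 40

total_authored = sum(authored_in_sprint.values())
tests_per_requirement = total_authored / REQUIREMENTS_IN_SCOPE

print(f"Total test cases authored: {total_authored}")
print(f"Authored tests per requirement: {tests_per_requirement:.2f}")
```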

How does it measure testing?

- A high number of authored tests during the test process suggests wide test coverage. It also highlights the components of the software application that are prioritized for testing.

- Authored tests help in tracking the progress of the testing effort. It reflects the preparedness of the testing team and how many testing scenarios are ready for execution.

Significance

- When there are defined authored tests, it ensures that the software application is verified and validated in detail and the test process is correctly executed.

- Authored Tests serve as documentation of testing requirements and expected results. They help maintain testing consistency and can be reused for regression testing in future releases.

Number of Automated Tests

This key performance indicator assesses the number or proportion of automated test cases within the test suite. When expressed as a percentage, it is computed by dividing the number of automated tests by the total number of test cases, both automated and manual. When measuring and interpreting this indicator, the test manager must first identify which tests are automated. Although this task can be intricate, it enables the team to monitor the volume of automated tests, which helps detect critical and high-priority defects introduced into the software delivery pipeline.

Percentage of Automated Tests = (Number of Automated Test Cases / Total Number of Test Cases) x 100

Example of Automated Tests

Suppose that, in a software development project, the team is testing a website. In this scenario, the QA team has 1,000 test cases. Out of the 1,000 test cases, 700 have been automated using test automation tools and frameworks. The remaining 300 test cases are performed manually by QA testers. Therefore, the number of automated test cases is 700.

Percentage of Automated Tests = (700 / 1000) x 100 = 70%
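In practice, the counts usually come from a test inventory where each case is flagged as automated or manual. The sketch below assumes such a flag exists; the inventory is generated here only to match the numbers in the example.

```python
# Hypothetical test inventory: test case id -> True if automated, False if manual.
# Generated to match the example: 700 automated and 300 manual test cases.
test_inventory = {f"TC-{i:04d}": i <= 700 for i in range(1, 1001)}

automated = sum(1 for is_automated in test_inventory.values() if is_automated)
total_cases = len(test_inventory)
automated_pct = automated * 100 / total_cases

print(f"Automated: {automated} of {total_cases} ({automated_pct:.0f}%)")
```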

How does it measure testing?

- Typically, a higher percentage signifies an increased likelihood of identifying issues during automated test runs. The determination of the automation percentage threshold should be influenced by the product's nature and the associated cost.

- In general, having more automated tests in place enhances the chances of capturing critical defects introduced into the software delivery pipeline. The recommended approach with this KPI is to commence with a modest threshold, such as 20% of test cases being automated, and adjust it upwards as the QA team evolves and matures.

Significance

While manual testing retains relevance, especially for usability evaluations involving end-users, a substantial portion of the software testing process can now be automated. Given that automation is considerably swifter and more efficient than manual testing, augmenting the proportion of automated tests can enhance the overall productivity of a team, affording additional time for manual testing tasks.

Covered Requirement

This key performance indicator signifies the proportion of requirements addressed by at least one test. It is a measure for evaluating the alignment between test cases and requirements. The responsibility falls on the test manager to ensure that corresponding test cases accompany every requirement, and corrective measures should be taken when there are unlinked requirements or test cases. The objective is to maintain a 100% alignment between requirements and test cases.

Percentage of Covered Requirements = (Tested Requirements / Total Requirements) x 100

Example of Covered Requirements

Suppose a software development project has different requirements listed in the document, like user registration, secure payment processing, etc. Let's say there are 100 such requirements, and the team creates test cases to validate each requirement. So far, the QA team has tested 90 of them against the specified criteria. Therefore:

Percentage of Covered Requirements = (Tested Requirements / Total Requirements) x 100

Percentage of Covered Requirements = (90 / 100) x 100 = 90%
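One way to compute this KPI is from a traceability mapping between test cases and requirements. The sketch below assumes hypothetical identifiers (`REQ-…`, `TC-…`) and generates links that match the 90-out-of-100 example; it also lists the requirements that still lack a test.

```python
# Hypothetical requirement ids for the 100 requirements in the example.
requirements = [f"REQ-{i:03d}" for i in range(1, 101)]

# Hypothetical traceability links: test case id -> requirement ids it validates.
# Only the first 90 requirements have a linked test in this sketch.
links = {f"TC-{i:03d}": [f"REQ-{i:03d}"] for i in range(1, 91)}

covered = {req for reqs in links.values() for req in reqs}
uncovered = [req for req in requirements if req not in covered]
coverage_pct = len(covered & set(requirements)) * 100 / len(requirements)

print(f"Covered requirements: {coverage_pct:.0f}%")
print(f"Requirements without a test: {uncovered}")
```

Keeping the mapping explicit makes unlinked requirements visible, which is what the test manager needs in order to take corrective measures.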

How does it measure testing?

The percentage of covered requirements highlights the comprehensiveness of the testing performed for the application. A high percentage of covered requirements shows that the testing process has been thorough.

Analyzing covered requirements makes it possible to trace requirements to test cases. With this, testers can ensure that each requirement has test coverage, and they can uncover untested and under-tested areas.

Significance

- Testers can ensure that the developed software application meets user requirements by maintaining a high percentage of covered requirements in the software project.

- The risk of undetected defects is lowered.

- Tracking covered requirements reveals where testing is insufficient and where more testing effort needs to be allocated.

- It assures that all specified requirements have been validated.

Defects Fixed Daily

This key performance indicator is the number of defects the team fixes in a single day during testing of the software application. Evaluating it lets the team track the daily number of fixed defects and assess the effort spent on fixing them. Measuring defects fixed per day therefore gives a view of the development team's effectiveness; however, it is subjective, because some bugs are much harder to fix than others.

Defects Fixed Per Day = Number of Defects Resolved and Closed in a Day

Example of Defects Fixed Per Day

For example, the team detects 15 defects in the application in one day of testing. The team works on them for the entire day and, by the end, has fixed 10, leaving 5 unfixed. Therefore, the defects fixed per day is 10.
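When defects carry a closing date, the daily count can be derived by grouping on that date. This is a minimal sketch with hypothetical defect ids and a hypothetical date, set up to match the example of 15 detected and 10 fixed.

```python
from collections import Counter
from datetime import date

day = date(2025, 1, 6)  # hypothetical testing day

# Hypothetical records: defect id -> date closed, or None if still unresolved.
# 15 defects detected; the first 10 were closed the same day.
closed_dates = {f"BUG-{i}": (day if i <= 10 else None) for i in range(1, 16)}

# Count resolved-and-closed defects per calendar day.
fixed_per_day = Counter(d for d in closed_dates.values() if d is not None)
unresolved = sum(1 for d in closed_dates.values() if d is None)

print(f"Defects fixed on {day}: {fixed_per_day[day]}")
print(f"Defects still unresolved: {unresolved}")
```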

How does it measure testing?

- Analyzing the defects fixed daily makes it possible to measure the team's efficiency in identifying and fixing them. If the KPI is high, it shows that the team can address the issue accurately.

- Tracking defects fixed per day helps assess the overall progress of defect resolution during the testing phase.

Significance

- With a high rate of defects fixed per day measured in the test process, you can find that issues are resolved promptly.

- It helps evaluate the development team's efficiency and capacity in addressing defects.

- Defects Fixed Per Day provides transparency into defect resolution progress. With this, stakeholders can make informed decisions on the release date of the software applications.

Passed Tests

At times, it's necessary to explore beyond the requirements level and delve into implementing every test arrangement within a test. A test arrangement is essentially an iteration of a test instance that employs varying data values. The Passed Tests key performance indicator complements your Passed Requirements KPI and assists in comprehending how efficient your test arrangements are at capturing issues.

Passed tests are the percentage of test cases/scenarios/scripts executed without failure. The team assesses the proportion of successful tests by observing the execution of each final arrangement within a test. This aids the team in gaining insight into how effective the test arrangements are at uncovering and containing issues during the testing process. To gauge the efficiency of the test case design procedure, the quantity of defects reported via formulated test instances is measured, where passed test instances indicate practical design and vice versa.

Passed Tests (%) = (Number of Successful Test Cases / Total Number of Test Cases) x 100

Example of Passed Tests

Let us consider a software development project where the testers execute test cases to verify that the application meets the specified requirements. Suppose they executed a total of 100 test cases, and 15 of them failed.

To calculate the number of test cases that passed, you can subtract the number of failed test cases from the total:

Number of Passed Test Cases = Total Test Cases - Failed Test Cases

Number of Passed Test Cases = 100 - 15 = 85

Percentage of Passed Test Cases = (Number of Passed Test Cases / Total Test Cases) x 100

Percentage of Passed Test Cases = (85 / 100) x 100 = 85%

So, in this scenario, the "Passed Tests" KPI is 85%.
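
The same calculation can be sketched in code. The snippet below assumes test outcomes are available as a simple list of "pass" and "fail" strings, which is an illustrative format rather than the output of any specific test runner.

```python
def passed_tests_percentage(results):
    """Return the share of passing outcomes as a percentage of executed tests."""
    if not results:
        raise ValueError("no test results to evaluate")
    passed = sum(1 for outcome in results if outcome == "pass")
    return 100 * passed / len(results)


# 100 executed test cases, 15 of which failed (the example above).
results = ["pass"] * 85 + ["fail"] * 15
print(passed_tests_percentage(results))  # 85.0
```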

How does it measure testing?

- The passed tests KPI reflects the quality and functional robustness of the software application. For example, a high percentage of passed tests indicates that the application meets the requirements those tests cover.

- Passed test KPI also highlights the effectiveness of defect detection as many passed tests show fewer defects detected in the test process.

- Testers track passed tests to assess the testing progress and ensure the software's functionality is systematically validated.

- The number of passed tests also helps determine whether the software application is ready for release. A high percentage suggests that the software is stable and reliable.

Significance

- Passed tests are directly associated with a positive user experience. A software product with fewer defects is more likely to satisfy users and avoid disruptions in their work.

- With this key performance indicator, testers and other teams can evaluate whether the software application is stable and reliable.

- It helps testing teams allocate resources efficiently by focusing on areas where tests fail and require attention.

Rejected Defects

Another significant indicator within the scope of test monitoring and effectiveness is the ratio of defects rejected by the development team. Rejected defects are defects that were reported during testing but found to be invalid during review.

This key performance indicator quantifies the proportion of defects turned down compared to the overall number of documented defects. Should this percentage surpass the established threshold, it becomes imperative to pinpoint and address the underlying concern. It could entail providing additional training to software testers or enhancing the quality of requirement documentation.

Rejected Defects (%) = (Number of Rejected Defects / Total Number of Reported Defects) x 100

Example of rejected defects

Suppose that, in a software development project, a team of testers identified defects in the application, including its login functionality, and reported them to the development team for review and fixing. Out of 80 reported defects, the development team determined that 20 were invalid and rejected them.

Now, let's calculate the "Rejected Defects" KPI:

Rejected Defects KPI:

- Total Defects Reported: 80

- Rejected Defects: 20

Percentage of Rejected Defects = (Rejected Defects / Total Defects Reported) x 100

Percentage of Rejected Defects = (20 / 80) x 100 = 25%
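
A short sketch of this calculation, including the threshold check described earlier, might look as follows. The 20% threshold is a made-up value for illustration only; each team would set its own.

```python
def rejected_defects_percentage(rejected, reported):
    """Percentage of reported defects that the development team rejected."""
    if reported <= 0:
        raise ValueError("reported must be positive")
    if not 0 <= rejected <= reported:
        raise ValueError("rejected must be between 0 and reported")
    return 100 * rejected / reported


# Hypothetical threshold; the article does not prescribe a value.
REJECTION_THRESHOLD = 20.0

rate = rejected_defects_percentage(rejected=20, reported=80)
print(rate)                        # 25.0
print(rate > REJECTION_THRESHOLD)  # True
```

When the check comes out true, the next step is a review of tester training or requirement documentation, as noted above.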

How does it measure testing?

- Evaluating rejected defects gives the team information about the accuracy of the defect identification process. A high number of rejected defects points to a problem in how defects are identified or reported, such as gaps in tester training or unclear requirement documentation, that needs to be fixed.

- A lower percentage of rejected defects is generally preferable, as it indicates that the defect reporting process is accurate and that valid issues are being identified and addressed promptly.

Significance

- The rejection of invalid defects helps maintain the integrity of the defect tracking system.

- When the team rejects defects that are not valid, the organization can optimize its test process and allocate resources accordingly.

- Keeping the defect tracking system free of invalid issues ensures that real defects can be detected and addressed.

- Reporting the number or percentage of rejected defects provides transparency about the defect review process and highlights its effectiveness.

Reviewed Requirement

As you may have observed, several of the KPIs we've outlined concentrate on defect detection rather than strategies for their prevention in testing. The Reviewed Requirements KPI is more of a "preventive KPI" than a "detective KPI." It centers on identifying requirements (or user stories) that have been reviewed to eliminate ambiguity. It mainly involves the stakeholders responsible for assessing each requirement's completeness and accuracy.

Clear, unambiguous requirements lead to good design choices and good use of resources. This key performance indicator ensures that a subject matter expert has assessed each requirement the testing and development teams address and that it is ready for implementation. Reviewed requirements contribute to precise development and testing, proving cost-effective in the long term.

Reviewed Requirements (%) = (Number of Reviewed Requirements / Total Number of Requirements) x 100

Example of reviewed requirements

For example, consider a software project or website with a set of documented requirements. The testing team is responsible for ensuring that these requirements are accurately reviewed and validated so that errors are not carried into the website's development. There are 200 documented requirements, and the QA team has successfully reviewed and validated 180.

To calculate the percentage of reviewed requirements, you can use the formula:

Percentage of Reviewed Requirements = (Reviewed Requirements / Total Requirements) x 100

Percentage of Reviewed Requirements = (180 / 200) x 100 = 90%
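
To make the example concrete, here is a minimal sketch that computes the percentage and also lists which requirements still await review. The `REQ-n` identifiers and the dictionary layout are assumptions made for illustration.

```python
def reviewed_requirements_percentage(requirements):
    """requirements maps a requirement ID to True once it has been reviewed."""
    if not requirements:
        raise ValueError("no requirements to evaluate")
    reviewed = sum(1 for done in requirements.values() if done)
    return 100 * reviewed / len(requirements)


# Hypothetical data: 200 requirements, the first 180 already reviewed.
requirements = {f"REQ-{n}": n <= 180 for n in range(1, 201)}
pending = [req_id for req_id, done in requirements.items() if not done]

print(reviewed_requirements_percentage(requirements))  # 90.0
print(len(pending))                                    # 20
```

Keeping the list of pending IDs alongside the percentage shows reviewers exactly where the remaining work is.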

How does it measure testing?

- If the reviewed requirements are high in number, it reflects that the requirement document is of good quality and ensures that the test will be accurate.

- The organization always aims to have a high percentage of reviewed requirements because it helps to ensure that the software project’s goal is accurately understood and there is clarity on what is required to be tested.

- With the indicator of the reviewed requirement, the team finds any potential issue or error in the requirement before the release of the software application.

Significance

- The review process allows for the early identification and resolution of ambiguities, contradictions, or gaps in the requirements, reducing the likelihood of late-stage defects.

- Involving business stakeholders in the review ensures that the software aligns with the organization's strategic objectives.


Severe Defects

While monitoring severe defects is a valuable KPI, it is crucial that the testing team applies safeguards when assigning defect severity. This key performance indicator is designed to limit the number of severe defects open in an application at any given time and to flag when there are too many. Before implementing this metric, however, the testing team must be properly trained to identify severe defects accurately.

Once you've established the necessary checks, you can set a threshold for this KPI. Any defect with an Urgent or Very High severity should be included in this metric's count. If the total count surpasses 10, it should trigger a red flag.

Example of severe defects:

Let us consider the development of a mobile application. The testing team tests the application to detect bugs or errors and reports them to the development team for fixing. For example, the QA team identified and reported 50 defects during testing. Each defect is assigned one of four severity levels: Critical, High, Medium, or Low.

- Critical Defects: 10

- High Severity Defects: 15

- Medium Severity Defects: 15

- Low Severity Defects: 10

Now, let's calculate the "Severe Defects" KPI:

Severe Defects KPI:

- Critical Defects: 10

- High Severity Defects: 15

- Total Defects Reported: 50

Percentage of Severe Defects = ((Critical Defects + High Severity Defects) / Total Defects Reported) x 100

Percentage of Severe Defects = ((10 + 15) / 50) x 100 = (25 / 50) x 100 = 50%
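
The calculation and the red-flag rule can be combined in one small helper. This sketch assumes that "Critical" and "High" are the levels counted as severe, as in the example, and reuses the count threshold of 10 mentioned above; both are easy to change.

```python
from collections import Counter

SEVERE_LEVELS = {"Critical", "High"}  # assumption: levels treated as severe
RED_FLAG_COUNT = 10                   # threshold taken from the text above


def severe_defect_summary(severities):
    """Summarize severe defects from a list of per-defect severity labels."""
    counts = Counter(severities)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no defects reported")
    severe = sum(counts[level] for level in SEVERE_LEVELS)
    return {
        "severe_count": severe,
        "severe_percentage": 100 * severe / total,
        "red_flag": severe > RED_FLAG_COUNT,
    }


severities = ["Critical"] * 10 + ["High"] * 15 + ["Medium"] * 15 + ["Low"] * 10
summary = severe_defect_summary(severities)
print(summary["severe_count"], summary["severe_percentage"], summary["red_flag"])
# 25 50.0 True
```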

How does it measure testing?

- Tracking severe defect key performance indicators helps measure the overall quality of the software being tested. If the percentage of severe defects is high, it shows increased issues or errors in the application that need more attention and resolution.

Significance

- Severe defects have a high potential to negatively impact users' experience, security, or ability to use the software effectively. Identifying and resolving them is essential for user satisfaction.

- These key performance indicators allow the detection of severe defects in the early stage of development of software applications. Therefore, the team lowers the risk of critical issues in the production environment.

Test Instances Executed

This key performance indicator is associated with the velocity of the test execution plan and lets the team highlight the proportion of the total instances in a test set that have been executed. Note, however, that it provides no information about the build's quality: it counts the test cases that have been executed, while build quality depends on other factors such as the nature of the test cases and the test data used.

Percentage of Test Instances Executed = (Executed Test Instances / Total Test Instances) x 100

Example of test instance executed

Let us consider the development of a web application where the QA team executes test instances to verify the functionality of the application. For example, the test set contains 200 test instances, and over a day, the QA team has executed 100 of them.

Using the formula:

Percentage of Test Instances Executed = (100 / 200) x 100 = 50%.

The "Test Instances Executed" KPI in this scenario is 50%.

How does it measure testing?

- Test instance executed measures the extent to which the software testing process has covered diverse aspects of the functionality of software applications.

- Testing progress can be measured by ensuring test cases are executed per the test plan.

- It helps gauge the effectiveness of defect detection: the more thoroughly the test instances are executed, the better the chance of identifying and fixing defects.

Significance

- These key performance indicators are critical as they track the progress of the test and ensure that the testing efforts given will meet the project timelines.

- The number of test instances executed is often considered when determining whether the software is ready for release, reflecting the extent of testing coverage.

Number of Tests Executed

After determining the test instances, the team oversees various forms of test execution, including manual and automated methods. This key performance indicator quantifies the overall count of test cases executed on a build, encompassing manual and automatic processes at any given time. It falls under the category of Velocity KPIs.

Number of Tests Executed = Total Number of Test Cases Executed (Manual and Automated)

Example of the number of tests executed

Let us consider the development of an application that has 300 test cases created to ensure its functionality. The test cases are executed using a combination of both manual and automated testing approaches. However, at the end of the testing phase, 250 test cases were executed.

To calculate the total number of test cases executed, you simply use the formula:

Number of Tests Executed = Total Test Cases Executed

Number of Tests Executed = 250.

The "Number of Tests Executed" KPI in this scenario is 250. It means 250 test cases, including manual and automated tests, have been executed on the specific software build.


How does it measure testing?

- These key performance indicators provide a precise count of the testing progress in executing test cases and indicate how many test scenarios have been validated.

- It indicates testing efficiency, showcasing how many tests can be executed within a given time frame.

Significance

- The testing team and project manager use test-executed KPIs to track the progress and check whether the test meets the project timelines.

- The team monitors the number of tests executed, and if the number is high, it shows that test coverage is on track.

Bonus Key Performance Indicators

Different QA teams assess various key performance indicators within the software testing based on their objectives for monitoring, controlling, or enhancing their processes. It's important to note that you can establish these indicators on different timeframes, be it weekly, monthly, annually, or any other defined period.

Below, we present some more key performance indicators that provide a way to measure the diverse scopes and activities used within this context. Here's a complete list:

Test Case Effectiveness

Test case effectiveness evaluates the quality of the test cases in terms of their ability to find defects and verify the functionality of the software application. It relates the defects found to the test cases executed on the current software build, where that count spans manual and automated testing, including unit tests, regression tests, integration tests, and more.

Formula to Calculate Test Case Effectiveness:

Test Case Effectiveness (%) = (Number of Defects Found by Test Cases / Total Number of Test Cases Executed) x 100

- Higher effectiveness indicates that the test cases are well-designed for defect detection.

- Monitoring the overall count of executed tests is a crucial gauge of the testing team's efficiency.

- The quantity of tests executed exemplifies a velocity KPI, which assesses how quickly teams and businesses can accomplish their objectives.

Code Coverage

Code coverage is a software testing performance indicator that measures the percentage of code executed by an automated test suite. This indicator gives information on the thoroughness of testing efforts.

Formula to Calculate Code Coverage:

Code Coverage (%) = (Number of Lines of Code Executed by Tests / Total Number of Lines of Code) x 100

- Higher coverage percentages indicate that test cases have exercised more parts of the code.

- This KPI helps to identify areas of the code that are not tested or partially tested.

- It helps reduce the risk of undiscovered defects in the production environment.
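
The line-based formula above can be sketched with plain sets of line numbers. In practice a coverage tool collects this data; the inputs here are hand-written and purely illustrative.

```python
def line_coverage_percentage(executable_lines, executed_lines):
    """Percentage of executable lines that were run by at least one test."""
    executable = set(executable_lines)
    if not executable:
        raise ValueError("no executable lines")
    covered = executable & set(executed_lines)
    return 100 * len(covered) / len(executable)


def uncovered_lines(executable_lines, executed_lines):
    """Executable lines that no test touched, in ascending order."""
    return sorted(set(executable_lines) - set(executed_lines))


executable = range(1, 21)  # hypothetical file with 20 executable lines
executed = range(1, 16)    # tests ran lines 1 through 15

print(line_coverage_percentage(executable, executed))  # 75.0
print(uncovered_lines(executable, executed))           # [16, 17, 18, 19, 20]
```

The second function corresponds to the point about untested areas: the percentage alone does not say where the gaps are.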

Time Schedule and Constraint

This indicator quantifies the average duration of test execution. Its purpose is to provide testing time projections for release planning and for development and testing schedules, helping project managers manage projects effectively.

The formula for Average Test Execution Time:

Average Test Execution Time = (Total Time Spent on Test Execution) / (Number of Test Cases Executed)

- It aids in estimating the time required for test execution during release planning or project planning. With this performance indicator, project managers allocate resources and set realistic timelines.

- Testers can use it to estimate the duration of the testing phase, which helps the project manager plan and manage the project better.
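
As an illustration, the average can be computed from recorded per-test durations and then used for a simple projection. The durations and the planned test count below are invented, and a linear projection is a simplifying assumption.

```python
from datetime import timedelta


def average_execution_time(durations):
    """Total time spent on test execution divided by the number of tests."""
    if not durations:
        raise ValueError("no durations recorded")
    return sum(durations, timedelta()) / len(durations)


def estimate_total_time(average, planned_tests):
    """Naive projection: assumes future tests take the average time."""
    return average * planned_tests


durations = [timedelta(minutes=4), timedelta(minutes=6), timedelta(minutes=11)]
average = average_execution_time(durations)

print(average)                            # 0:07:00
print(estimate_total_time(average, 200))  # 23:20:00
```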

Defect Closure Rate

This performance indicator assesses testers' efficiency in verifying and closing fixed defects. It also contributes to improved estimation of release cycles.

The formula for Defect Closure Rate:

Defect Closure Rate (%) = (Number of Closed Defects / Total Number of Fixed Defects) x 100

- A high defect closure rate shows that the team is verifying and closing fixed defects successfully. This lowers the risk of unresolved issues reaching the production environment.

- It helps estimate the release cycle by showing how quickly defects can be resolved.
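
A minimal sketch of the closure-rate formula follows. It assumes a simple status model in which a defect is "open", "fixed" (awaiting verification), or "closed" (fixed and verified), so the total number of fixed defects is the sum of the last two; real trackers may use different states.

```python
def defect_closure_rate(statuses):
    """Closed defects as a percentage of all fixed defects."""
    closed = statuses.count("closed")
    fixed_total = closed + statuses.count("fixed")
    if fixed_total == 0:
        raise ValueError("no fixed defects to evaluate")
    return 100 * closed / fixed_total


# Hypothetical snapshot: 40 closed, 10 fixed but unverified, 5 still open.
statuses = ["closed"] * 40 + ["fixed"] * 10 + ["open"] * 5
print(defect_closure_rate(statuses))  # 80.0
```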

Percentage of Critical and Escaped Defects

The Percentage of Critical and escaped Defects stands as a significant performance indicator demanding the consideration of software testers. It measures the proportion of critical defects not caught during testing and reported by users or found post-release. It verifies that the team's testing endeavors prioritize resolving crucial issues and product defects. Consequently, this approach aids in upholding the quality standards throughout the testing process and the final product.

The formula for Percentage of Critical and Escaped Defects:

Percentage of Critical and Escaped Defects (%) = (Number of Critical and Escaped Defects / Total Number of Critical Defects) x 100

- A low percentage means that most critical defects are caught during testing, before they reach users.

- Tracking this percentage over a specified period helps the team unearth gaps in how critical functionality is tested.

Time To Test

Time to Test is a performance indicator utilized in quality assurance and software development. Its purpose is to measure the speed at which an organization progresses from the initiation of testing on a new software feature to its successful completion. Simply put, the Time to Test KPI quantifies the duration testers and developers take to assess the feature and rectify any identified bugs during the testing phase.

Formula to Calculate Time to Test:

Time to Test = End Time of Testing - Start Time of Testing

- The incorporation of Time to Test enables organizations to evaluate the caliber and effectiveness of their software testing teams.

- A shorter testing duration can indicate efficient testing practices and resource utilization.

- Furthermore, this KPI can point to complex software features: an extended Time to Test may signify a more elaborate feature design.

Defect Resolution Time

Defect resolution time is a multifaceted key performance indicator within quality assurance. To begin with, it measures how long it takes testing teams to identify existing issues in the software. Secondly, it measures the time required for teams to rectify these issues once they are identified. Typically, it measures the elapsed time required to identify, report, and fix any defects found during testing.

Formula to Calculate Defect Resolution Time:

Defect Resolution Time = Date and Time of Defect Closure - Date and Time of Defect Discovery

- Monitoring the Defect resolution time aids in evaluating the level of effort put forth by a team to rectify software defects.

- A lower value for this KPI indicates a quicker ability for organizations to detect and resolve critical defects in their applications.

- Defect resolution time can be evaluated on a company-wide scale and individually to assess the efficiency and effectiveness of various testers on the team.
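
The formula above applies to a single defect; averaging it across defects gives a team-level figure. The sketch below uses made-up timestamps, and reporting the average in hours is a choice made here for readability.

```python
from datetime import datetime


def resolution_time(discovered, closed):
    """Elapsed time between defect discovery and defect closure."""
    if closed < discovered:
        raise ValueError("closure cannot precede discovery")
    return closed - discovered


# Hypothetical (discovered, closed) pairs for three defects.
defects = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 15, 0)),   # 6 hours
    (datetime(2025, 3, 3, 10, 0), datetime(2025, 3, 4, 10, 0)),  # 24 hours
    (datetime(2025, 3, 4, 8, 0), datetime(2025, 3, 4, 20, 0)),   # 12 hours
]

times = [resolution_time(found, closed) for found, closed in defects]
average_hours = sum(t.total_seconds() for t in times) / len(times) / 3600
print(average_hours)  # 14.0
```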

Successful Sprint Count Ratio

This metric, initially designed for software testing purposes, also serves as a key performance indicator for software testers. It comes into play once all pertinent sprint statistics have been compiled. It aids in calculating the percentage of successful sprints.

Formula to Calculate Successful Sprint Count Ratio:

Successful Sprint Ratio = (Number of Successful Sprints / Total Sprints) x 100.

- By tracking the successful sprint count ratio, the team can have detailed information on the progress of the software application development project. For example, a high ratio of successful sprints means the application’s progress is in the right direction.

- Teams can use these key performance indicators to identify areas for improvement throughout the test process of software applications.

Unresolved Vulnerabilities

Unresolved vulnerabilities stand as a significant security indicator, calculating the count of unresolved defects, openings, or weaknesses within the software. Businesses may further categorize this data according to the severity of each vulnerability, be it mild, moderate, or critical.

Formula to Calculate Unresolved Vulnerabilities:

Unresolved Vulnerabilities = Total Number of Identified Vulnerabilities - Number of Vulnerabilities Remediated

- The presence of many unresolved vulnerabilities indicates a higher security risk. The unresolved vulnerabilities metric aids in assessing both the quantity and severity of security issues, facilitating their prioritization.

- This metric can also be combined with other key performance indicators, such as resolution time, to understand better the efficacy of a team's software security endeavors.
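
Because the metric is often broken down by severity, a per-severity version of the subtraction can be useful. This sketch assumes each vulnerability is recorded simply as a severity label and that every remediated item was previously identified; the sample counts are invented.

```python
from collections import Counter


def unresolved_by_severity(identified, remediated):
    """Identified minus remediated vulnerabilities, grouped by severity."""
    # Counter subtraction drops severities whose remaining count is zero.
    return Counter(identified) - Counter(remediated)


identified = ["critical"] * 3 + ["moderate"] * 5 + ["mild"] * 8
remediated = ["critical"] * 2 + ["moderate"] * 3 + ["mild"] * 8

remaining = unresolved_by_severity(identified, remediated)
print(dict(remaining))          # {'critical': 1, 'moderate': 2}
print(sum(remaining.values()))  # 3
```

The total matches the formula (16 identified minus 13 remediated), while the breakdown shows that one critical issue is still open.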

Quality ratio

The Quality ratio performance indicator assesses the degree or level of achievement in the latest software testing iteration, determined by the pass or fail outcomes of the tests performed. Calculating the Quality ratio is straightforward; it involves dividing the count of successfully executed test cases by the total number of test cases and multiplying the result by 100.

Formula to Calculate Quality Ratio:

Quality Ratio (%) = (Number of Successful or Defect-Free Components / Total Number of Tested Components) x 100

- The Quality ratio offers testing managers and critical decision-makers a quick overview of the testing status. For instance, if the Quality ratio decreases between two distinct testing iterations (without introducing any additional test cases), it indicates that the new features or fixes may be negatively impacting the system.

- A higher ratio indicates a higher level of quality in the tested components in software applications.
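
The comparison between two testing iterations described above can be sketched as follows. The pass counts are hypothetical, and the ratio is computed over test cases, following the prose description.

```python
def quality_ratio(passed, total):
    """Successful test cases as a percentage of all test cases in an iteration."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * passed / total


# Two iterations with the same 200 test cases (no new tests added).
previous = quality_ratio(passed=190, total=200)
current = quality_ratio(passed=180, total=200)

print(previous, current)   # 95.0 90.0
print(current < previous)  # True: worth checking recent features or fixes
```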

Test Case Quality

As both a software testing metric and a performance indicator, test case quality is crucial in inspecting and scoring written test cases based on predefined criteria. Its primary objective is to ensure that every test case undergoes a thorough review, achieved by creating high-quality test case scenarios or utilizing sampling techniques.

Additionally, the team must take specific considerations into account to uphold test case quality, including:

- Ensuring that test cases are created to identify faults and defects.

- Establishing comprehensive coverage of both tests and requirements.

- Documenting the areas impacted by defects.

- Providing precise and comprehensive test data that encompasses all potential scenarios.

- Encompassing both success and failure scenarios within test cases.

- Presenting expected results in an accurate and lucid format.

The formula for Test Case Quality:

Test Case Quality (%) = (Number of High-Quality Test Cases / Total Number of Test Cases) x 100

- High test case quality shows that the test process has better coverage and ensures that any critical feature of the software application is tested accurately.

- High-quality test cases can detect defects efficiently and ensure high-quality software applications.

Defect Resolution Success Ratio

This performance indicator measures how many of the defects the team addresses stay fixed. To calculate the defect resolution success ratio, the team counts the defects that were resolved and those that were reopened. In other words, this indicator measures the success rate of fixing and validating issues in the software application.

The formula for Defect Resolution Success Ratio:

Defect Resolution Success Ratio (%) = (Number of Successfully Resolved and Verified Defects / Total Number of Defects) x 100

- If none of the defects is reopened, it signifies a 100% success rate in defect resolution.

- A high Defect Resolution Success Ratio indicates that defects are being effectively addressed.
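
One way to sketch this ratio is to treat a resolved defect as successful unless it was later reopened. That reading is an assumption: it takes the total number of resolved defects as the denominator, which is consistent with the 100% case described above.

```python
def resolution_success_ratio(resolved, reopened):
    """Percentage of resolved defects that were not reopened afterwards."""
    if resolved <= 0:
        raise ValueError("resolved must be positive")
    if not 0 <= reopened <= resolved:
        raise ValueError("reopened must be between 0 and resolved")
    return 100 * (resolved - reopened) / resolved


print(resolution_success_ratio(resolved=50, reopened=0))  # 100.0
print(resolution_success_ratio(resolved=50, reopened=5))  # 90.0
```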

Process Adherence and Improvement

This is another performance indicator that measures how well the testing team and organization adhere to the testing process and implement continuous improvement. The organization can use it to reward team members for their initiatives and contributions when they propose ideas or solutions that streamline the testing process, rendering it more agile and precise.

The formula for Process Adherence & Improvement:

Process Adherence & Improvement (%) = (Number of Process Improvement Initiatives Implemented / Total Number of Process Improvement Initiatives Planned) x 100

- High adherence to established processes contributes to better efficiency and consistency in project execution.

Conclusion

In the digital landscape, the role of key performance indicators in software development and testing cannot be ignored. This guide has explored the crucial key performance indicators in software testing and explained their need and the various types used by the team to measure the function and working of the application and test process.

It is understood from the details in the guide that the software testing procedure should undergo continuous monitoring, evaluation, and refinement to guarantee the delivery of a top-notch product within the predetermined timeframe and budget. Testers can use the mentioned vital performance indicators to evaluate the process's quality, make necessary adjustments, and enhance productivity accordingly.
