Power Your Software Testing with AI and Cloud

Supercharge QA with AI for Faster & Smarter Software Testing

- Testing Basics

- Home

- /

- Learning Hub

- /

- Prompt Engineering Tutorial: Examples and Best Practices

Prompt Engineering Tutorial: Examples and Best Practices

In this step-by-step guide, learn what is prompt engineering, its techniques, process, along with examples and best practices.

Last Modified on: September 26, 2025

- Share:

OVERVIEW

Prompt engineering refers to the practice of developing effective prompts or instructions given to a language model or AI system. These prompts play a crucial role in guiding the model's responses and controlling its behavior. Well-designed prompts help researchers and developers to influence the output of AI systems to achieve desired outcomes and improve the model's performance.

Artificial Intelligence is arguably the most ubiquitous entity in the world's current technological state. AI is everywhere, Be it the day-to-day facets of life or breakthroughs on the industrial grounds.

But what makes AI so distinctive from our previous groundbreaking leaps? So, engineering has always had that unvarying aim to automatize various aspects of life. It focuses on replacing manual parts of a job to the greatest possible extent and efficiency.

This hunt for automatization led us to AI, which in particular, replaces the manual task of 'thinking'! Bearing the responsibility of such a humane forte,' the development of AI becomes a rather intricate and essential process.

Thus, how the system reacts to different situations, challenges, and interrogations needs to be regulated and predictable. The AI must be fed the correct directions during its developmental phase, providing appropriate responses in any given condition. That is precisely where the role of prompt engineering comes in.

What is Prompt Engineering?

Prompt engineering is an essential part of the modus operandi in the AI development process. It involves the provision of direct instructions to the software in the form of primary or specific questions, phrases, or directions known as 'prompts.'

Using the concept of Natural Learning Processing (NLP), the developer provides specifically constrained prompts to the language model of the AI. This acclimatizes the AI to respond to puzzling, malicious, or controversial inputs.

One aspect distinguishing prompt engineering from other development and testing processes is its lack of hindrance with the overall model. Irrespective of the prompts' outcome, the system's broad parameters remain unchanged.

It is commonly used recently, especially in Large Language Models (LLMs) like ChatGPT. Developers use prompt engineering to enhance the comfort range of the AI. This process enhances the predictability of the user-software interaction. Moreover, it ensures that the AI approaches and functions in the desired direction.

Importance of Prompt Engineering

"With great power comes great responsibility" is highly applicable to AI models and the use of artificial intelligence in general. As AI systems, like language models, become more powerful and capable of performing complex tasks, the potential for errors and risks also increases.

To cover up these loopholes, effective prompt engineering is essential. Following are some factors that indicate the importance of prompt engineering in the Natural Learning Processing realm:

- Targeting particular tasks

- Checking efficiency

- Assigning validity

- Understanding range

- Avoiding redundancy

Prompt engineering is highly efficient as a replacement for the fine-tuning technique in language model development. With the engineers providing more target-specific prompts to the model, it becomes adept with a broader variety of conditions in the desired forte. This brings spontaneity and relevance to the responses allowing the system to function ideally.

To get a better idea of this, take an example of a conversational AI or chatbot for a sports website. Giving the model the relevant sports-related prompts while introducing it to the appropriate and controversial opinions would allow it to respond quickly and in greater depth to the query asked by the user.

Pre-defining the directions in which the AI has to provide its responses increases efficiency to a great extent. This way, the element of surprise is removed from the system when the user raises a query. In addition, intensive prompt engineering uses the potential of the language model to the greatest possible extent to improve the response's quality, relevance, and sophistication.

Anything that can frame an opinion can hurt sentiments, and conversational AI models are no different. For this reason, it becomes indispensable for models to be unconditionally appropriate, unbiased, and sophisticated. Prompts created mainly to seek controversial and biased responses acclimatize the language model to such testing situations.

Subscribe to our LambdaTest YouTube Channel for the latest updates on tutorials around Selenium testing, Playwright testing, and more.

Thorough prompt response analysis allows the developer to appreciate the strengths of the language model while also getting a deeper understanding of its limitations and redundancies. Prompt engineering is an important source for developers to comprehensively know their language models.

Redundancy in information is never appreciated, but providing holistic is also crucial. To find this sweet spot of context, thoughtful and accurate prompts are given to the model. This acquaints the AI with eliminating unwanted data while not playing with helpful information with higher accuracy.

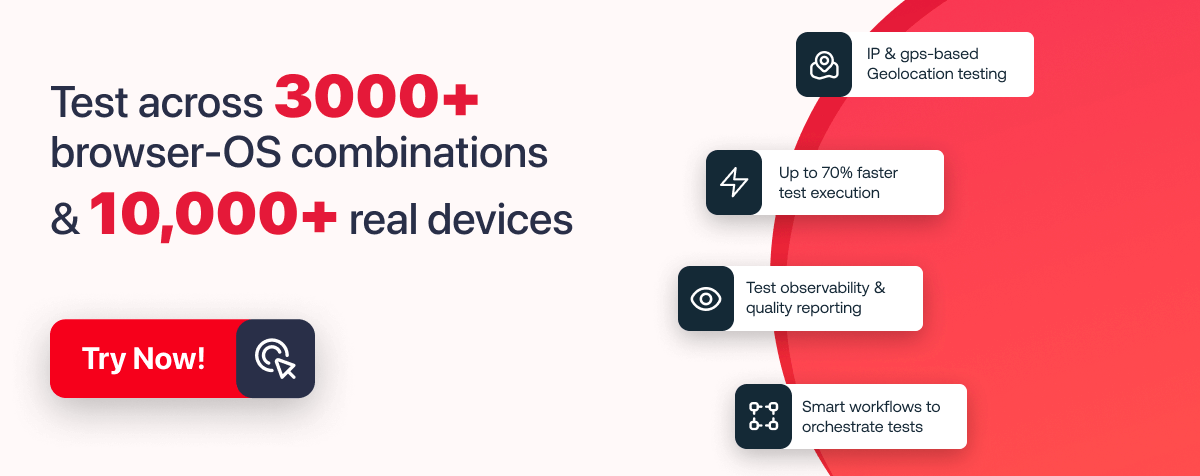

Note: Run test automation on AI-Native test orchestration and execution cloud. Try LambdaTest Now!

Benefits of Prompt Engineering

Prompt engineering imparts a plethora of perks to the AI language model. The grounds which lead to the necessity of prompt engineering in taking the model to higher standards were discussed above. Following are the benefits described in terms of enhancement of the existing traits that the AI savors after a precise, prompt engineering process:

- Enhanced maneuverability

- Enhanced creativity

- Enhanced precision

- Enhanced propriety

- Enhanced adaptability

There is nothing that delights the user more than the feeling of being in charge of the responses of the models. With better control over the broad theme of responses, including the tone, language, and even the content, the user feels at ease throughout the conversation. Prompt engineering contributes to this fluidity in the response of the AI model. With a wider range of instruction or question prompts, the system gets a knack for aligning its style and tone accordingly.

Suitable prompts increase the overall understanding of the AI model with respect to the particular topic. This enhanced understanding gives birth to the imaginative and creative skills of the system. It produces more insightful and innovative responses to a given query and introduces the user to new aspects regarding the topic.

As mentioned earlier, ideal prompts give the idea of context to the language model. This way, it acquires the idea of context and can eliminate redundancy. As a result, the content and responses are much more accurate and to the point. This is a highly desirable trait from the user's perspective, as the unnecessary inconvenience of filtering the data with respect to its relevance gets substantially reduced.

In the case of interactive or conversational AI models, opinion becomes a significant source of controversy and disagreements. While the model should be prompted to avoid framing opinions and biases irrespective of the context, it is sometimes a far-fetched idea to provide information without the flavor of stance.

In such unavoidable situations, the approach of response becomes extremely important. The language and tone must be so that even traces of bias remain shadowed. This comes with experience. And in the case of AI language models, experience comes through Natural Language Processing concepts, including prompt engineering.

Testing the system with apt prompts in such adverse situations allows it to build the skill of evading them without compromising the necessary information. Check out this article to learn how ChatGPT helps in test automation.

While topic targetted prompting imparts specificity to the expertise of AI models, wide-ranged prompt engineering allows the system to provide quality responses in various fields. This approach makes the language model adaptable to a spectrum of issues, industries, and facets.

Basic Concepts of Prompt Engineering

Prompt engineering is a complex and intricate endeavor that delves into every facet of the language model, far from the simple and straightforward task it may appear to be at first glance. Let us discuss the principles and thumb rules one must follow while designing the prompt.

Be fastidious

While giving prompts, it is essential to be picky and precise with your query demands. For example, ",What are the most common software bugs?" might not be as favored as "List the top 5 common software bugs." Even though the language model possesses information on numerous software bugs, when presented with a specific and concise request, the question remains whether it will respond adequately and as desired.

Be succinct

A common mistake in the approach to being fastidious could be to overexplain the question and unnecessarily add to the length of the prompt. However, it is better to be specific yet concise. Avoid prompts like "Provide me with a comprehensive analysis of all the possible scenarios and edge cases for testing this software application, regardless of their significance."

Be repetitive

Iterating the prompt while narrowing down the expected response is also a very advisable practice in the case of prompt engineering. Try asking the same query in as many ways as possible to allow the model to adapt to subtle changes in language with consistency in the context and expectations of the prompt.

Be clear

Haziness in prompt language is never appreciated. Since the team has to consider the conciseness and precision of the query, keeping clarity in the style and tone of the prompt becomes an ingenious task.

Be versatile

There is a wide variety of prompts that the developer can use for relevant prompt engineering. These adapt the AI model to all sorts of situations and queries in different tones and contexts.

Types of Prompts

In this section, let's discuss this variety of prompts and how the user can put them against the model during its runtime.

Role Prompting

Role prompting is the genre of prompting where the user asks the AI to play a part of a particular character and respond on its behalf or tone. By this, the user allows the information to come from a specific desired perspective and even channelizes the learning capacity of the AI model. The user may also ask the AI to assume them as a particular character and respond to them accordingly. This method can mold the language of intricate concepts into a rather understandable form.

Shot prompting

This style of prompting includes to-the-point single-line queries. Zero-shot prompting and few-shot prompting are the two types of this style. The user generally demands filtered outputs that lack redundancy from the language model. From the perspective of a prompt engineer, shot prompting acquaints the AI with the art of producing solely the demanded substrata of information from the abundant accessible data.

Option-based prompting

As the name suggests, option-based prompting includes putting forth a set of choices in front of the language model. The AI now has to pick the ideal choice based on all available facts. Often, such questions are intentionally framed with highly subjective choices.

Thus, asking the AI even to analyze the possibility of picking a choice solely based on facts and not frame any personal opinion in its responses. The response, moreover, should not leave the scope of validity in such cases. The AI should inform the user about the subjectivity of the concept or topic while giving all the necessary data to frame their opinion.

Leading prompting

Leading prompt leads the response of AI in a particular direction by providing the grounds of response in the prompt itself. Along with the query element, a statement including a fact, belief, or assumption is added to the prompt. This gives a broad idea of work to the model. A common possibility in such prompts can be misleading the AI into a factually incorrect statement or a highly subjective opinion. Thus, giving a knack for tackling such situations before runtime.

Open-ended prompting

In this category of prompts, the model can open the channels of creativity and imagination. Open-ended prompts are generally description-demanding prompts designed in such a way that a part of the response has to remain subjective. Unlike its counterparts, this prompting style is not entirely factual and, thus, is a great way to introduce the AI system to innovative thinking.

Components of a Prompt

A prompt can be broadly categorized into five major components. The proper regulation of these components based on suitability and need defines the quality of the prompt. Let us further understand each of these impacts on the overall prompt generation process.

Examples

Examples broadly act as a foundation for AI in the initial stages of its analyses of the prompt. These are the statements that the model looks up to to get a general idea and context of the upcoming prompt.

Although examples are not a mandatory part of the prompt, they provide clarity, which makes the whole prompt easier and quicker to understand for the language model. However, an overabundance of examples restricts the creativity of the AI and can even add to the complexity of relating them in a consistent form.

Directions

Directions are used to filter the response in the desired form by providing constraints or specific instructions. This significantly increases the accuracy of responses as it helps the AI understand the exact demand in the prompt. Directions also add to the target specificity of the response. In addition, they bring in the personalization aspect to the prompt.

Parameters

Parameters are the intrinsic settings of the language model that can be adjusted to get the desired variety of responses. For instance, let there be a param of 'randomness' for a particular AI model.

Now, toggling it to a higher degree would give the software a greater scope of creativity and provide responses of a wider variety. On the other hand, reducing this parameter would provide more precise and direction-specific outputs. This way, parameter changes allow a customized range of output variety for the same prompt direction and style.

Format

While the prompt format is relatively trivial for casual conversational bots, it becomes crucial for professional language models. The format is first provided as an input in the form of an example which comprises the directions, parameters, and output in a particular system. Thereafter, while feeding the main prompt query, only the directions and parameters are given as the input expecting the AI to generate the output accordingly.

Formats provide consistency in the input-output cycle and increase the efficiency of the overall process of prompt engineering. Prompts lacking formats would only give a fluid understanding of the context of inputs to the AI and, in turn, prevent an ideal generation of outputs.

Chaining

Chaining, going by its name, is the ultimate prompt component that chains together or connects different AI calls and binds them together to generate the output desired by the prompt. In the case of complex tasks, dividing the whole query into multiple calls instead of a single prompt is generally preferable. Chaining becomes an essential activity in such cases. Chaining tools like Longchain are used to perform chaining effectively.

Elements of a Prompt

The format is an essential part of maintaining the consistency of the prompt, especially in the case of a large language model, where the input-output process is much more complex than a single-line QnA. A predefined framework also increases the efficiency and versatility of the software system.

Thus, dividing elements of the prompt from the perspective of a prescribed format is vital. Broadly, a prompt comprises the input data, its context and relevance to the broader concept, the instruction for processing it, and the output space for the model to respond. Let us understand each of them in detail:

Instructions

These are the conditions and constraints put forth with respect to the type of desired task. Instructions tell the model what it is supposed to do through assertive statements. For obvious reasons, a prompt begins with instruction as it comprises the central motive of the activity.

Instructions are a mandatory element. Even the simplest of queries must contain instructions. For example, let there be a task to simplify a particular paragraph. The prompt's instructions would be “Explain the following paragraph in simpler terms.”

Input Data

This is the operable information. The model performs the tasks directed under instructions over input data. In the above prompt example, to simplify a paragraph, the input data would be the paragraph that needs to be simplified. Many a time, input data is incorporated with the instructional statement. This can be observed in the case of simple Q&A prompts. Input data is also essential since it provides the foundational information for the model to work with.

Context

This element includes the extra information that may come in handy for the AI while working in accordance with the instructions. They give a broader perspective to the model and aid in a better and quicker understanding of the output demanded.

Output indicator

The output indicator indicates the basic structure of output demanded by the prompt. This element of the prompt is like an instruction or preference with respect to the output format rather than an instruction regarding the task.

Prompt Engineering Techniques

Depending on the demanded task from the AI model and other factors involved in its source code, a suitable prompt engineering technique is applied. Understanding these techniques allows the engineer to match their suitability with the model and utilize them efficiently.

The following are the most important and commonly used prompting techniques:

N-Shot Prompting

This technique of prompt engineering provides restricted prerequisites, training, and data to the model before expecting the desired output generation. N-shot prompting is classified into two types:

- Zero-shot prompting: In the zero-shot prompting technique, the prompt includes absolutely no context for the language model to look up to. There is barely any usage of examples, and the AI has to work only according to the instructions over the input data.

- Few-shot prompting: Few-shot prompting includes labeled examples that provide some learning scope to the model and guide it toward the ideal output. This reduces the randomness in the output generation to some extent. These examples work as the only template for the language model to work with.

Generated Knowledge Prompting

External input data and context is spoonfed to the model in the prompt, giving it a further enlarged scope to understand the task better. This technique is generally used in the case of sentiment analysis, prediction demanding, opinion-based, or other subjective tasks.

The involvement of specific data to work on increases the efficiency and accuracy of the response generation process significantly. Generated knowledge prompting is performed in cases where the model lacks the data to frame a valid response. The input data is, therefore, used to fill the knowledge gaps.

Chain-Of-Thought Prompting (CoT)

CoT prompting is utilized, especially in cases where the model is required to perform a multi-step complex task. In this technique, multiple prompts are generated in such a way that they escort the response generation process of the model and narrow it down to the desired output.

These prompts are consciously designed to create a chain of thought or connective pattern in the correct sequence and direction. The model is not only provided with to-the-point output examples but also the process which leads to the particular output. This gives it a significantly greater scope to learn and understand the context and process of output generation in the specific case.

Self-Consistency

This technique can be considered a counterpart of the CoT-promoting technique. Here, instead of pre-defining the pathway that would bring about the desired result in the prompt itself, the stepwise prompts are designed so that the pathway and the model responses go hand in hand.

The AI is prompted with a similar yet simpler task, and the complexity is gradually and cautiously increased while maintaining consistency in the response logic until the model understands how to operate on the main task. It is a long and step-wise process. However, it develops the problem-solving ability of the model.

Automatic Prompt Engineer (APE)

Once the pattern of instruction and input data gets recognized, it can be automatized. In such repetitive prompt generation cases, utilizing the APE technique saves a lot of time while minimizing the sources of errors.

Prompt Engineering Process

Being a relatively complex process, many steps are involved in prompt engineering. Each of these steps has its respective significance and intricacies. Let us understand them precisely and sequentially:

- Knowing the cause

- Sentiment analysis: Subjective ideas must be directed using proper tone or specific mentioning of the intended sentiment. The general structure of the prompt engineering process relating to sentiment-inclined tasks would not revolve much around factual information and its accuracy but the correct interpretation of the subjective motive of the query.

- Question answers: Question-answering responses demand a perfect and suitable blend of facts and subjectivity while keeping in mind the context. Thus, prompts are designed to examine the precision and redundancy-eliminating potential as well as its subjectivity, bias, and opinion-handling capacity.

- Text generation: These tasks include generating literary works in their proper respective formats. Prompts demanding text generation of a wide variety of genres and specifications are, thus, taken into account.

- Casting initial prompt framework

- Examining the responses

- Rectifying the discrepancies

- Implementing the prompt

From the perspective of a prompt engineer, information regarding the tasks and situations that the AI is supposed to face is sufficient.

The type of prompts and techniques depend on the variety of these tasks. Some of which include:

Apart from the type of tasks the model is supposed to perform, many other factors revolving around its primary purpose can play an influential role in prompt engineering.

This may include a demand for a specific tone, language, slang, or overall language style. Specific instructions and filters that need to be subjugated to the model’s responses may also be included. Knowing and understanding the purpose of the model provides a holistic view of all of these factors.

Now that the purpose of the model is fully understood, the creation of initial prompts begins. While keeping in mind the goal, a clear and concise set of directives is constructed and consistently followed. The prompts include clear statements, commands, or questions that guide the responses of the AI toward the demand.

Initial prompts must lack excess information and instruction and allow the responses to originate from the natural imaginative ability of the model. Getting the desired output in the highly initial prompting stage with the help of a plethora of constraints may seem an apparently efficient outcome, but it hinders the basic purpose of prompts. The model may lack the ability to understand and adapt after such practices.

While the initial prompt inputs rarely achieve the desired output, it is important to perpetually narrow down the randomness of responses and increase the desirability of this output.

After casting the properly refined prompts in due accordance with the purpose of the overall model, the next step is to examine the responses achieved. As mentioned above, initial prompts may lead to rather vague or out-of-context responses from the model. But as the complexity further increases, properly evaluating these responses on an analytically produced desirability scale becomes important.

The role of knowing the cause of the language model emerges again while analyzing the relevance of responses. Identifying these portions and the degree of irrelevance becomes the next important task if the response appears partially irrelevant.

After examining the relevance of the output, the inspection of redundancy must be performed. Having an enormous database of information sources, the model may get carried away with its responses and provide unwanted excessive information. Portions of inaccuracy from this aspect must also be identified and noted.

Finally, after recognizing all sorts of discrepancies in the responses, the focus must be shifted to understanding the cause of such discrepancies. That is, why did the response deviate from ideality? After establishing proper reasonings with respect to this question, a natural follow-up question would be, How can it be rectified?

Once the flaws are identified and their possible causes are assumed, it is time to correct them using prompt refining. Prompt refining involves iterations of the same query in a subtly different style with the addition or elimination of input data or context in the prompt. This happens in accordance with the analytically derived cause of the flaw generated in the previous responses.

To clarify, suppose irrelevance or redundancy exists in a portion of the response. In such a case, the subsequent prompt could include an instruction or an indirect mention in the input data, which prevents the generation of this redundant data. Iteration and refining of prompt can be seen as perpetual filtration of results to reach the desired output eventually.

Suppose you have a pair of audio speakers in mind and decide to search for them on an eCommerce platform. Now, you put a very vague input, like ‘audio speakers, in the search box and see an enormous variety of audio speakers as the output. The most natural step that can be further taken is successively adding a filter to your search, for example, brand name, color, etc. until you see the desired speakers on the result page.

This is exactly how iteration and refining of prompt works. The query is repeated constantly with increasing constraints till the model responds desirably.

This process completes only when the demanded output is generated consistently by the model. Thus, a series of calls are still performed after the response meets the desirability to examine its stability with further changes in the overall prompt structure.

Now that the responses consistently meet the desired output, it is finally time to call that aspect of the set of prompts ready for implementation in other contexts and concepts with reference to the model. This process is majorly done in two ways:

- Variation generation of the prompt series:This is usually done when the set of prompts works consistently for a broader language model, and now they have to be implemented for a target-specific purpose. Consider the prompt engineering process for a tool used for translating Spanish to English.

Now, to perform prompts on a model with a specific use for English explanations of Spanish poems, the previous set of prompts can be varied for the desired purpose. This way, the engineer gets unburdened from reframing the entire prompt structure and basics.

- Automatic generation of the prompt series: In case the successive aspect of the model has little to no contextual change, it is better to recognize the pattern in the prompt series. Afterward, this pattern can be utilized to create a code that automatically generates prompts relevant to the previous pattern. This process is extremely efficient owing to the elimination of manual work and is also free from any human error, making it extremely accurate.

Implementing the prompt in different aspects of the language model marks the conclusion of the prompt engineering process.

Prompt Engineering Examples

In this section, let's see the examples of prompt engineering.

Text Classification

Text classification is a crucial task in software testing, involving the categorization of input text into specific classes, such as positive, negative, or neutral. Various natural language processing techniques can be applied to achieve this classification.

- Zero-Shot Prompt

Instruction: Classify the text into positive, negative, or neutral.

Text: "The new software update improved the system performance significantly."

Sentiment:

Output: Positive

- Few-Shot Prompt

Instruction: Classify the text into positive, negative, or neutral.

Text: "The latest bug fix introduced more issues."

Sentiment: Negative

Instruction: Classify the text into positive, negative, or neutral.

Text: "The user interface changes are impressive."

Sentiment:

Output: Positive

Question-Answer

These types of prompts include a simple dialogue of a question, which may include certain instructions and context, followed by the desired answer.

- Example-1

- Example-2

Prompt: "Which test case management tool is widely used in the industry?"

Output: One of the widely used test case management tools in the industry is "TestRail." TestRail provides a user-friendly interface and various features to manage test cases efficiently.

Prompt: Answer the question given below with proper reasoning and mention “Unsure about the answer” if uncertain.

Question: "What is mutation testing in software testing?"

Context: Software quality Software quality, code coverage, identifying bugs.

Output: Mutation testing is a technique used in software testing to evaluate the effectiveness of the test cases by introducing small changes or mutations in the source code. The idea is to check if these changes are detected by the test suite, which indicates the ability of the tests to identify bugs and improve code coverage. However, if the specific tool or method to implement mutation testing is not known, it is better to respond with "Unsure about the answer."

Text Summarization

Text summarization is a description or explanation demanding a prompt and usually achieves responses in long paragraphs and lists. However, the length and context of these responses can be guided through specific instructions.

Prompts:

- "Summarize the process of conducting regression testing."

- "Provide a concise explanation of black-box testing."

- "Explain the different levels of software testing in around 150 words."

Data Extraction

This type of prompt is engaged when factual information or data needs to be extracted. Due to the absence of subjectivity, they are generally to the point.

Prompt: "List the names of all failed test cases from the recent test run."Output: Failed test cases from the recent test run:

- Login functionality test

- Payment processing test

- User profile update test

- Security authentication test

Conversational Responses

Conversational prompts are generally suitable for AIs like chatbots, which imitate human behavior in the form of text or image generation.

Prompt:

- "Hi, can you explain the importance of smoke testing in software releases?"

- "What are the advantages of automated testing over manual testing?"

- "Can you recommend any performance testing tools for web applications?"

Logical Analysis

Logical prompts can lie anywhere from a request for a computer code in a particular programming language to complex mathematical problems. This depends on the tasks the model is meant to do.

Prompt:

- "Write a Python function to check if a given string is a palindrome."

- "Calculate the area of a circle with a radius of 5 units."

- "Find the sum of the first 50 natural numbers."

Prompt Engineering Applications

Prompt Engineering can open various channels of the potential of the AI model. Good quality prompt engineering can allow the language model to perform versatile and complex tasks efficiently. Some of the major applications of prompt engineering are:

Organized data generation

Generation of desired data in the desired format becomes a smooth task with prompt engineering. With suitable prompts, the model can produce the input or extracted data into the applicable format effectively and quite consistently.

Prompt engineering can also be applied for direct data generation. For instance, you can ask the language model to write a short blog on a specific topic providing it with relevant information. With suitable examples stated in the prompt, the model can also provide the desired number of similar data lightning fast.

Code generation

Large Language Models can also be utilized to generate the desired programming code in a variety of languages with the help of suitable prompts. You can see the conversion of prompts into computer codes as the conversion of code comments into actual programmed scripts.

In the initial stages of prompt engineering, the models will take little to no time to convert simple instructions like factorial calculations, pattern making, recursion, sorting list, and binary searches into codes in different programming languages. Gradually with a consistent understanding of the context, the model could generate complex codes and programming analysis pretty accurately.

Program-aided language models

Replacing the text guidance in the chain-of-thought prompting process with particular programmed reasoning and filters is known as the program-aided language model (PAL). Here the prompts have a broad pattern and are used as coded input in a specific programming language.

This increases the consistency in the responses of the language model to a great extent. Moreover, it reduces the scope of human errors since the input sequence of information needs to fit into the overall program, thus, improving the accuracy significantly. PAL Model is also highly time efficient as it replaces the mechanical labor of text inputs by mere data entry in the code pattern.

Responses inspired by programmed inputs are also contextually appropriate and lack irrelevance and redundancy. Programs like Python Interpreter convert textual inputs into codes that the language model interprets.

Generating visual art

Prompts can even be used for the generation of creative images and other visual forms of data. Such prompts usually include relevant examples followed by the context and instructions associated with the desired outputs.

Until now, we have explored various aspects of prompt engineering, including its components, elements, and processes. However, to implement effective, prompt engineering, prompt engineers play a cross-disciplinary role in developing, testing, and refining prompts. In the next section, let’s look at the role and responsibilities of a prompt engineer.

Responsibilities of a Prompt Engineer

The role of a prompt engineer comes with a set of responsibilities. Some of these include:

- Understanding the model and its purpose and designing proper prompts with reference to it.

- Give some scope of creativity to the model without compromising precision.

- Recognize and nullify the sources of bias in data and responses related to the model. The engineer should be aware of cultural sensitivities as well.

- Not only is prompt engineering a single-person job, but it also involves discussions with the model's creators, including managers and scientists. Thus, the engineer should be open to collaborating with different members and maintain a feeling of companionship throughout the job.

- Honing your software testing abilities by understanding the broader context while also keeping the gates of innovation open forever.

- Be aware of the recent developments in the product and its effects on the process of prompt engineering.

- Make adjustments for enhancement in the model's efficiency through consistent efforts.

Note: Test your websites across 3000+ browser environments. Try LambdaTest Now!

Does Prompt Engineering Need Coding?

Prompt engineers don't necessarily have to be apt in coding skills. However, a general understanding of the programming structure of the model brings efficiency to the grasping ability of the engineer.

While testing the complex problem-solving abilities of the AI, creating a prompt would require a general understanding of how the model works. In such cases, foundational programming knowledge would come in handy for the engineer. It must be noted that prompt engineering demands no programming degree from the engineer.

The only two prerequisites for being a prompt engineer are knowledge of LLM architecture and problem-solving abilities. LLM Architecture is the basic framework of how the large language model processes text inputs to generate the desired output.

Prompt Engineering Challenges

A lot of risks and challenges are associated with the process of prompt engineering. It is known how refined prompts can add to the efficiency and accuracy of the language model. But since everything comes with a price, prompt engineering also opens the doors for potential situations of misconduct and jeopardy. Some of these risks are:

- Adversarial prompting

- Faulty facts

- Bias

Malicious prompt engineering can be a subtle method of misconduct with the large language model. Malpractices like prompt injection, prompt leaking, and jailbreaking are used to intentionally generate undesired outputs. Adversarial prompting is generally intentional and occurs due to irresponsible prompt engineering. It can be prevented by including defending statements in the instruction element of the prompt. In addition, adversarial prompt detectors act as a defensive shield against any such malicious entries to the LLM.

This involves the hindrance with the data sources of the AI model. It is such a subtle challenge that without proper knowledge of the particular concept, it is difficult to identify the inaccuracy in the responses of the LLM. Factual inaccuracy can be prevented by providing suitable examples in the prompt or thoroughly examining the information sources.

Biased and opinionated content is forever a considerable challenge, especially in the case of conversational AI models. Improper prompt engineering may give rise to stereotypes, leading to biases. Thus, a strategically organized prompt engineering process plays a crucial role in identifying bias on behalf of the model and its remedy. To prevent the generation of bias, diversity in prompts is an essential action.

Prompt Engineering Best Practices

There are various practices usually preferred by expert prompt engineers. The inclusion of such practices defines the overall quality of the prompts. Let us know about some of them:

- Avoid negative prompting

- Avoid redundancy

- Avoid vagueness

- Avoid impatience

Instead of instructing the model about the practices it should avoid, it prefers rephrasing them as the practices that are more appropriate.

In the process of eliminating redundancy from the model’s responses, it is possible to get carried away and include redundancy in the prompt itself. Thus, being precise with the language is always better.

Focus on one aspect of the model at a time. Be target specific and stick to it till consistency in the desired output is achieved.

The process of iteration and refining can be a real test of patience at times. The rise of impatience in such situations may leave a lot of holes open in the framework of output generation.

Conclusion

Prompt engineering is a process that involves creativity and aptitude. With such broad spectra of concepts related to almost every aspect of prompt engineering, thoroughly understanding and correlating each of them becomes essential.

Moreover, the width of these concepts adds to the responsibilities held by the engineers. The process of recognizing the direction of tasks demanded from the language model and the most suitable approach of prompt engineering becomes the central idea of the whole process. Thus, requiring an in-depth grasp of each facet of prompts.

Prompt engineering has found significant adoption in areas like content generation, customer support automation, and QA workflows. For instance, here’s a detailed guide on ChatGPT Prompts for Software Testing. Also LambdaTest KaneAI ChatGPT for testers demonstrates how these techniques can be applied to streamline and enhance software testing processes.

Frequently asked questions

- General

Did you find this page helpful?

More Related Hubs

Start your journey with LambdaTest

Get 100 minutes of automation test minutes FREE!!