Test Analysis: A Comprehensive Guide With Use Case and Best Practices

Explore the what, why, and how of test analysis, with a use case, challenges, and best practices.

Last Modified on: September 26, 2025

OVERVIEW

In the software development life cycle, test analysis plays a pivotal role in ensuring the quality, reliability, and effectiveness of the software being developed. It is a crucial phase that takes place after requirements gathering and before software testing begins.

Test analysis is valuable to many stakeholders in the software development process. For developers, it provides clear and detailed test cases that help them understand the expected behavior of the software and assist in debugging when defects are identified. For testers, it guides accurate and efficient test execution, ensuring comprehensive coverage and reliable results. Business analysts, product owners, and project managers benefit from it as well. To improve the quality of the testing process, testers or developers must first settle on practical and reliable test case design techniques.

However, test analysis is not without difficulties. These include understanding complex requirements, ensuring complete coverage, and managing an evolving project scope. To address these issues and gather test data, teams follow various patterns and strategies. Let's take a closer look.

What is Test Analysis?

Test analysis is the process of gathering and examining test artifacts or test data in order to create test scenarios or test cases. It aims to collect requirements and define test objectives. The material it works from is known as the test basis (sometimes called the test foundation). Without test analysis, important problems in the software may go unnoticed; with it, teams can build strong tests that confirm the software works as intended.

Why Test Analysis?

Given its many uses, test analysis is a crucial step in the evaluation of any process or system designed to measure performance, accuracy, or effectiveness. It involves a comprehensive examination of test results, methodologies, and variables to extract meaningful insights and ensure the validity and reliability of the testing process.

- Enhancing Software Reliability: It assures that the software satisfies the highest requirements of performance and reliability by thoroughly analyzing test findings, giving end users confidence.

- Optimizing Test Efficiency: It enables the development of focused and efficient test scenarios, maximizing the coverage of vital features while reducing needless testing.

- Pinpointing Weaknesses: By identifying faults and vulnerabilities in software, test analysis makes sure that possible bugs are found and fixed before deployment.

- Informing Decision-Making: Test analysis equips teams to make wise decisions regarding software enhancements, resource allocation, and deployment preparedness through data-driven insights.

Factors Determining Levels of Details of Test Analysis

The depth of the test analysis depends on a number of context- and software-related variables. The following are some of the major variables that affect how in-depth a test analysis is:

- Project Complexity: Usually, extensive test analysis is necessary for very complex projects with intricate needs and functions. Testing requirements for simple projects could be less complicated.

- Criticality of the System: The amount of detail in test analysis should be higher if the program is crucial (e.g., in healthcare, aviation, or finance) to make sure that all potential scenarios and edge situations are taken into account.

- Size of the Project Team: Larger project teams might require more in-depth test analysis to make sure that everyone on the team is aware of the testing requirements and goals.

- Available Resources: The level of depth in test analysis might vary depending on the resources available, including time, money, and qualified testers. Limited resources may call for a more targeted and focused test analysis.

- Technology Stack and Tools: The level of detail in test analysis might vary depending on the complexity of the technology stack and the accessibility of software testing tools. More thorough testing may be necessary for advanced technologies.

- Change Management and Agile Practices: With agile methodologies, test analysis may take place in smaller, iterative cycles. The level of detail may change depending on the needs of the current cycle or of subsequent iterations.

- Compliance with Testing Standards: Adherence to industry-standard testing practices, such as ISTQB (International Software Testing Qualifications Board) guidelines, may dictate the level of detail in test analysis.

Finding the appropriate amount of detail in test analysis is a dynamic process that may change as the project moves along and new information becomes available. Successful test results depend on test planning and execution remaining flexible and adaptable.

How to Gather Test Data for Test Analysis?

You can gather data through the V Model by aligning test activities with corresponding development phases, ensuring comprehensive coverage and validation at each step of the software lifecycle. Following a pattern will be more effective for collecting data and for project management.

The sources for acquiring test information are as follows:

Detail Design Document (High and Low level)

A complete technical plan for developers is provided in the Detail Design Document (DDD), which is a thorough blueprint that interprets the system architecture and requirements. Both high-level and low-level design elements are included. The high-level design provides a summary of the principal modules, their interactions, and core functionalities that make up the system's structure. The system's architecture, database layout, and external interfaces are all described. The low-level design, on the other hand, goes into greater detail with each module, defining the interfaces, data structures, and algorithms needed for implementation. It contains class diagrams, sequence diagrams, and database schema specifics, giving developers a detailed insight to code effectively.

Performance testing draws primarily on the high-level design. It assesses the system's responsiveness, scalability, and stability under a range of workloads, and seeks to confirm that the software performs well in demanding or stressful circumstances. Response times, resource use, and general system behavior are measured by replicating real-world usage scenarios.

Unit testing, on the other hand, is better suited to the low-level design, where individual units or components of a software application are tested in isolation. It validates whether each unit functions as expected and meets its design specifications. Developers write unit tests to ensure that their code functions correctly and reliably. These tests are typically automated and run frequently during development to catch and fix bugs early.
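As a rough illustration of testing one unit in isolation, the sketch below uses Python's standard `unittest` module. The `cart_subtotal` function and its (unit price, quantity) input format are hypothetical and exist only for this example; prices are assumed to be whole cents.

```python
import unittest


def cart_subtotal(items):
    """Return the subtotal for a list of (unit_price_cents, quantity) pairs.

    Hypothetical unit under test; not taken from any real cart implementation.
    """
    total = 0
    for unit_price_cents, quantity in items:
        if quantity < 0:
            raise ValueError("quantity must not be negative")
        total += unit_price_cents * quantity
    return total


class CartSubtotalTest(unittest.TestCase):
    def test_empty_cart_is_zero(self):
        self.assertEqual(cart_subtotal([]), 0)

    def test_sums_price_times_quantity(self):
        self.assertEqual(cart_subtotal([(500, 2), (250, 1)]), 1250)

    def test_negative_quantity_is_rejected(self):
        with self.assertRaises(ValueError):
            cart_subtotal([(500, -1)])
```

Saved in a file, these tests can be run with `python -m unittest`, which makes them easy to repeat on every change.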

Functional Design Documents

A functional design document (FDD) describes a software system's specific functionalities in detail. It explains how the system will satisfy the specified business requirements. Detailed functional requirements, process flows, user interfaces, and data models are frequently included in this document.

The foundation for comprehending the functionalities and interactions of the system is provided by the functional design documents. This data is used in integration testing to confirm that various components function properly together. Together, they make sure the program runs properly both on an individual level and when integrated into a larger system.

Software Requirement Specification

A software requirement specification (SRS) is a comprehensive document that sets out the requirements and functions of a software system. It serves as a contract between the client and the development team and offers a detailed road map for the software's design, development, and testing.

The SRS contains details on system architecture, user interfaces, functional and non-functional requirements, and more. System testing ensures that the software actually performs as specified in the SRS. Together, they form a crucial link in the software development and testing process, ensuring that the final product aligns with the client's expectations and requirements.

Business Requirement Specification

The foundational document that specifies the precise requirements and expectations of a software project from a business viewpoint is known as Business Requirement Specification (BRS). It provides a clear development plan and distinguishes between functional and non-functional requirements.

User Acceptance Testing (UAT), on the other hand, is the last stage of the testing procedure, where end users confirm whether the system satisfies their needs and requirements. Prior to final implementation, this testing is essential for ensuring that the program performs as planned in the real-world operational environment. The BRS serves as a guide for UAT because UAT is based on the business requirements described in that document. When UAT is completed successfully, the system is ready for deployment.
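The pairing of source documents and test levels described in this section can be captured in a small lookup table. The following is only a sketch: the dictionary reflects the pairings given above, while the helper name `uncovered_test_levels` is an assumption introduced for illustration.

```python
# Source document -> test level it primarily feeds, as described in this section.
TEST_BASIS_TO_LEVEL = {
    "Business Requirement Specification": "User Acceptance Testing",
    "Software Requirement Specification": "System Testing",
    "Functional Design Document": "Integration Testing",
    "Detail Design Document (high level)": "Performance Testing",
    "Detail Design Document (low level)": "Unit Testing",
}


def uncovered_test_levels(available_documents):
    """Return, sorted by name, the test levels whose source document is missing."""
    return sorted(
        level
        for document, level in TEST_BASIS_TO_LEVEL.items()
        if document not in available_documents
    )


# Example: only the two requirement specifications have been written so far.
available = {
    "Business Requirement Specification",
    "Software Requirement Specification",
}
missing_levels = uncovered_test_levels(available)
```

A check like this can flag early which test levels still lack a test basis before analysis starts.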

Use Case Study

Scenario:

An e-commerce company, "ShopEZ," has recently built a new online shopping platform. The company wants to ensure that the shopping cart functionality is robust and user-friendly before the official launch.

User Actions:

Adding Items to the Cart:

User navigates to the product catalog, selects desired items, and adds them to the shopping cart.

Quantity Adjustment:

User reviews the cart, adjusts item quantities, and verifies that the cart reflects the changes accurately.

Removing Items:

User decides to remove certain items from the cart and checks if the cart updates accordingly.

Applying Coupons:

User has a discount coupon and applies it to the cart to ensure the discount is correctly calculated.

Viewing Subtotal and Total:

User checks the subtotal and total amount in the cart, confirming they are calculated accurately based on the selected items and quantities.

Proceeding to Checkout:

User initiates the checkout process, ensuring that the items in the cart are correctly transferred to the order summary.

Test Cases:

Boundary Testing for Quantity:

Verify that the system handles minimum and maximum quantity values correctly.

Coupon Validation:

Test the application with valid and invalid coupon codes to ensure correct application and rejection.

Concurrency Testing:

Simulate multiple users adding, adjusting, and removing items simultaneously to check for any conflicts or synchronization issues.

Cross-Browser Compatibility:

Ensure that the shopping cart functions consistently across different web browsers (Chrome, Firefox, Safari, etc.).

Error Handling:

Test scenarios where unexpected errors occur (e.g., server timeout, network disconnect) to ensure the system provides appropriate error messages and handles such situations gracefully.

Performance Testing:

Simulate high loads and stress on the shopping cart functionality to assess its responsiveness and stability under heavy user traffic.

Mobile Responsiveness:

Verify that the shopping cart is user-friendly and functions seamlessly on various mobile devices.

Expected Outcomes:

All test scenarios should pass, demonstrating that the shopping cart functionality is reliable, user-friendly, and capable of handling different user interactions effectively. Any identified issues should be documented and addressed before the official platform launch.
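To make the boundary test for quantity more concrete, here is a minimal sketch of boundary-value selection. The limits `MIN_QUANTITY` and `MAX_QUANTITY` and the validator `is_valid_quantity` are assumptions; the scenario does not state the real limits of the ShopEZ cart.

```python
MIN_QUANTITY = 1   # assumed lower limit for a cart line
MAX_QUANTITY = 10  # assumed upper limit; not specified in the scenario


def is_valid_quantity(quantity):
    """Hypothetical validator: accept whole numbers within the assumed limits."""
    return isinstance(quantity, int) and MIN_QUANTITY <= quantity <= MAX_QUANTITY


def boundary_values(minimum, maximum):
    """Return each limit together with its immediate neighbors."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


# Pair every boundary value with the validator's verdict.
results = {
    quantity: is_valid_quantity(quantity)
    for quantity in boundary_values(MIN_QUANTITY, MAX_QUANTITY)
}
```

With the assumed limits, the values just outside the range (0 and 11) should be rejected and the four values on or inside the limits accepted.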

Once the requirements were understood, we gathered the test data and created test cases. We then conducted test analysis to assess the effectiveness of the test cases and ensure they comprehensively cover the specified requirements. But how exactly did we do it?

- Examine the basics of testing: Make sure the testing team is aware of the project's goals, specifications, and the particular functionality being tested. Introduce them to testing techniques, tools, and any applicable industry standards.

- Determine test cases: In collaboration with stakeholders, identify essential user interactions with the online shopping cart, including activities like adding, modifying, and removing products. Create scenarios for boundary testing, coupon validation, concurrency, cross-browser support, error handling, performance, and responsiveness on mobile devices.

- Create test scenarios: Convert the selected situations into detailed test cases that describe how to reproduce user activities. Each scenario should include prerequisites, input values, anticipated results, and any relevant post-actions (such as reviewing the cart, using coupons, and checking out).

- Expected and unanticipated inputs: For each test scenario, identify a range of acceptable and unacceptable inputs. Invalid inputs, such as entering a negative number or using a coupon that has expired, should test the system's error handling and resilience, while valid inputs ought to correspond to usual user behavior.
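The steps above can be sketched as a small table of test cases, each with a precondition, inputs, and an expected result, covering both valid and invalid coupons. Everything here is hypothetical: the `COUPONS` table, the `apply_coupon` function, and the `CouponCase` structure are illustrative assumptions, not part of any real platform.

```python
from dataclasses import dataclass

# Hypothetical coupon table: code -> (percent_off, expired)
COUPONS = {"SAVE10": (10, False), "OLD20": (20, True)}


def apply_coupon(subtotal_cents, code):
    """Return the discounted total in cents, or raise ValueError for a bad coupon."""
    if code not in COUPONS:
        raise ValueError("unknown coupon")
    percent_off, expired = COUPONS[code]
    if expired:
        raise ValueError("expired coupon")
    return subtotal_cents - subtotal_cents * percent_off // 100


@dataclass
class CouponCase:
    name: str
    precondition: str
    subtotal_cents: int
    coupon: str
    expected: object  # an int total, or ValueError when rejection is expected


CASES = [
    CouponCase("valid coupon", "cart subtotal is 2000", 2000, "SAVE10", 1800),
    CouponCase("expired coupon", "cart subtotal is 2000", 2000, "OLD20", ValueError),
    CouponCase("unknown coupon", "cart subtotal is 2000", 2000, "NOPE", ValueError),
]


def run_case(case):
    """Return True when the observed behavior matches the expectation."""
    try:
        actual = apply_coupon(case.subtotal_cents, case.coupon)
    except ValueError:
        return case.expected is ValueError
    return actual == case.expected


outcomes = {case.name: run_case(case) for case in CASES}
```

Keeping the cases as data makes it straightforward to add further valid and invalid inputs without touching the runner.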

Key Challenges of Test Analysis

- Ambiguous or Insufficient Requirements: Missing or ambiguous requirements might make it challenging to develop thorough test cases and scenarios, perhaps leaving gaps in test coverage.

- Time Restrictions: Tight project constraints could make it difficult to find enough time for in-depth test analysis, which could lead to hurried or insufficient testing.

- Complicated Applications and Technology: Testing complicated systems or applications, particularly those that make use of cutting-edge technology, can be difficult to comprehend, plan, and carry out.

- Integration Testing Challenges: It can be challenging to plan and verify the interactions between different system modules or components, especially when working on complicated projects.

- Regression Testing: Regression testing must be conducted thoroughly, which can take a lot of time and resources. It is necessary to ensure that new additions or changes don't adversely affect existing functionality.

- Non-Functional Testing Considerations: Testing for performance, security, and usability poses additional difficulties that call for particular skills and tools.

- Test Data Management: It can be difficult to manage sensitive or confidential data and to ensure the availability of appropriate test data.

- Environmental Dependencies: Compatibility problems might result from testing in diverse contexts (such as browsers, devices, and operating systems) and coping with environmental discrepancies.

- Documentation and Traceability: Effective test analysis depends on maintaining accurate documentation and traceability between requirements, test cases, and findings.

- Communication and Collaboration Issues: Accurate test analysis depends on effective communication between stakeholders, including developers, business analysts, and testers.

- Test Data Privacy and Security: Data protection laws must be followed carefully in order to ensure that private or sensitive information is handled safely during testing.

To overcome these obstacles, careful planning, excellent communication, and frequently the use of suitable test tools and test estimation are required. Successful test analysis also requires a proactive strategy to recognize and address these issues.

Best Practices for Test Analysis

- Clear Requirement Understanding: Ensure that the test analysis is in line with the planned functionality and objectives by thoroughly understanding the project requirements.

- Traceability Matrix: Create a traceability matrix to connect every test case to a particular requirement. This guarantees thorough coverage and makes requirement validation easier.

- Prioritize Test Scenarios: To concentrate testing efforts on areas that have the biggest influence on system functionality and user experience, identify important and high-impact scenarios.

- Data-Driven Testing: Integrate several test data sets to assess the system's performance under various scenarios, ensuring robustness and resilience to a variety of inputs.

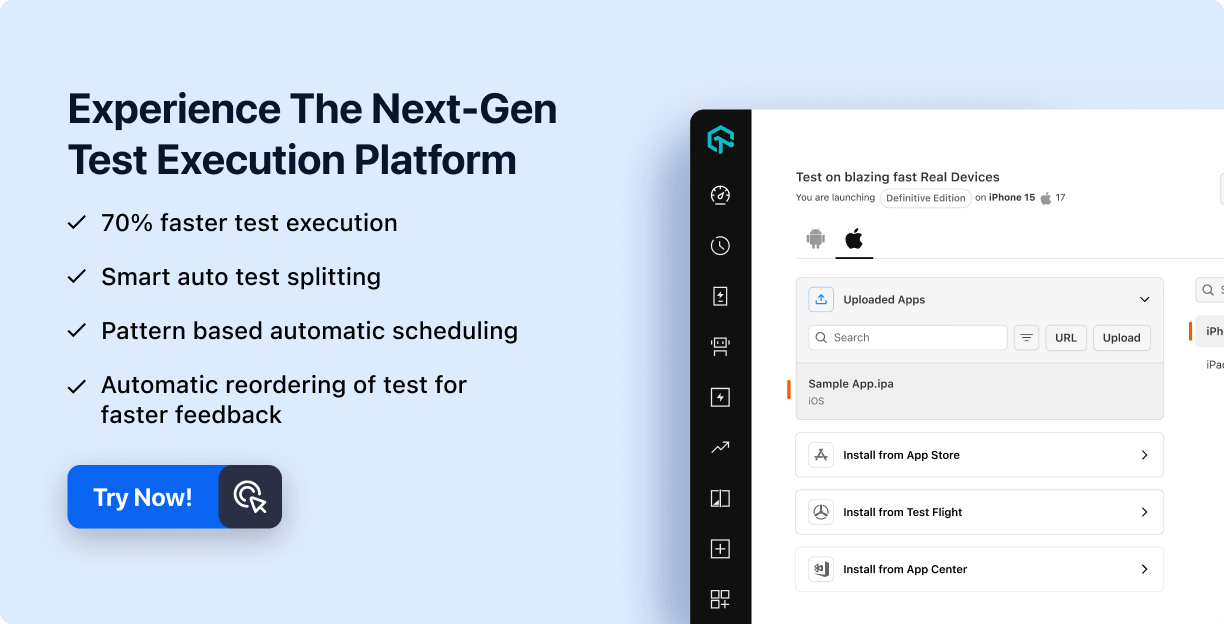

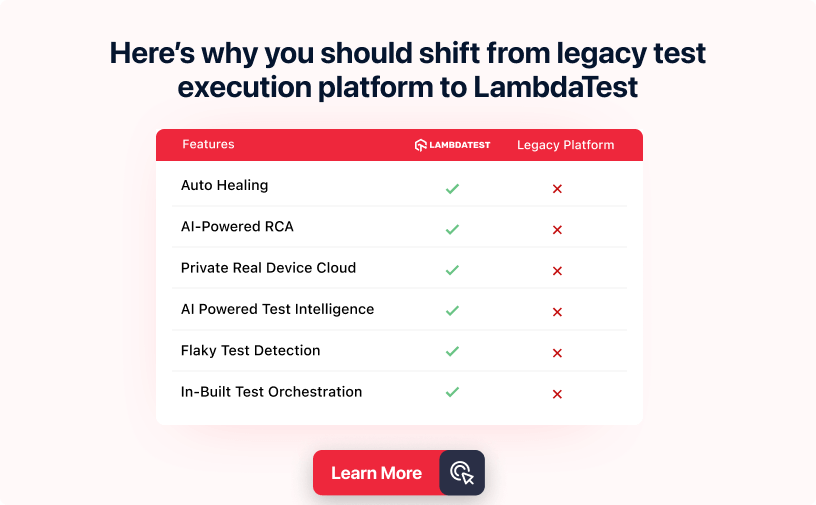

- Accelerated Regression Testing: Use regression testing to ensure that new additions or changes have no negative effects on current functionality. This protects against unwanted side effects. Because regression testing is often time-consuming, it pays to speed it up. Platforms like LambdaTest can accelerate regression testing through parallel testing on various browsers and operating systems, coupled with automation integration, enabling rapid execution and comprehensive coverage for swift release cycles. Its visual testing and smart algorithms further enhance efficiency by prioritizing critical functionalities.

- Performance Metrics: Establish specific software testing metrics and benchmarks to objectively evaluate system responsiveness, scalability, and resource utilization.

- Documentation and Reporting: Create a thorough record of the test analysis procedure, including test results, issues found, and suggestions. Making decisions and communicating effectively go hand in hand.

- Automation Opportunities: Determine the areas where automation testing can be used to boost testing effectiveness, particularly for repetitive or regression testing scenarios.

- Adaptability and Flexibility: Be ready to modify testing plans and scenarios in response to changing project specifications, user input, and additional details that turn up during the testing process.

- Leverage Efficient Testing Tools: Consider using testing tools like LambdaTest to enhance your test analysis process. The platform not only accelerates software testing but also provides features such as UI testing, issue tracking, test logs, and reporting options. You can manage your testing projects and generate comprehensive test reports, saving time and supporting the quality of your software.

By putting these best practices into practice, test analysis becomes more organized, efficient, and dependable, which eventually results in higher-quality software outputs.
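As one illustration of the traceability matrix practice listed above, the sketch below links test cases to requirements and reports any requirement with no test. The requirement and test case IDs are made up for the example.

```python
from collections import defaultdict

# Hypothetical requirement IDs for the shopping cart.
REQUIREMENT_IDS = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]

# Hypothetical test cases and the requirements each one verifies.
TEST_CASES = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-1", "REQ-2"],
    "TC-03": ["REQ-4"],
}


def build_traceability_matrix(test_cases):
    """Invert the mapping: requirement ID -> test cases that cover it."""
    matrix = defaultdict(list)
    for test_case_id, requirement_ids in test_cases.items():
        for requirement_id in requirement_ids:
            matrix[requirement_id].append(test_case_id)
    return dict(matrix)


def uncovered_requirements(requirement_ids, matrix):
    """Return the requirements that no test case traces back to."""
    return [rid for rid in requirement_ids if rid not in matrix]


matrix = build_traceability_matrix(TEST_CASES)
gaps = uncovered_requirements(REQUIREMENT_IDS, matrix)
```

In this made-up data set, `REQ-3` has no test case, which is exactly the kind of coverage gap a traceability matrix is meant to expose.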

Conclusion

In order to ensure the quality, dependability, and effectiveness of the program, test analysis is a crucial stage in the software development lifecycle. Teams can improve the effectiveness and accuracy of their testing efforts by adhering to best practices including having a clear grasp of the requirements, prioritizing test cases, and using data-driven testing. It's critical to overcome obstacles like unclear requirements and time limits, and applying strategies like regression testing and automation can help the testing process even more. In the end, thorough test analysis results in the creation of durable and dependable software systems.
