Types Of Automation Testing: Definition, Benefits And Best Practices

Urwashi Priya

Posted On: June 28, 2023

Automation testing has become an essential part of the software development process. It saves time and effort and improves the accuracy of testing. In this article, we will discuss what automation testing is, the types of automation testing, their purpose, their benefits, best practices for each, and how they can be used to improve the quality of software applications.

What is Automation Testing?

Automation testing is a software testing approach that utilizes specialized tools and scripts to automate the execution of test cases. It involves creating scripts that simulate user interactions with the software and automatically running these scripts to validate functionality, reliability, and performance.

By automating repetitive and manual testing tasks, automation testing offers benefits such as faster test execution, increased test coverage, and early bug detection. It reduces human error and allows testers to focus on more complex testing aspects. It also facilitates Regression Testing by retesting previously validated functionalities to ensure they remain unaffected by changes. Overall, automation testing is crucial in improving software quality, efficiency, and the overall development process.

Refer to the hub to learn more about automation testing and its implementation. If you are preparing for an interview you can learn more through Automation Testing Interview Questions.

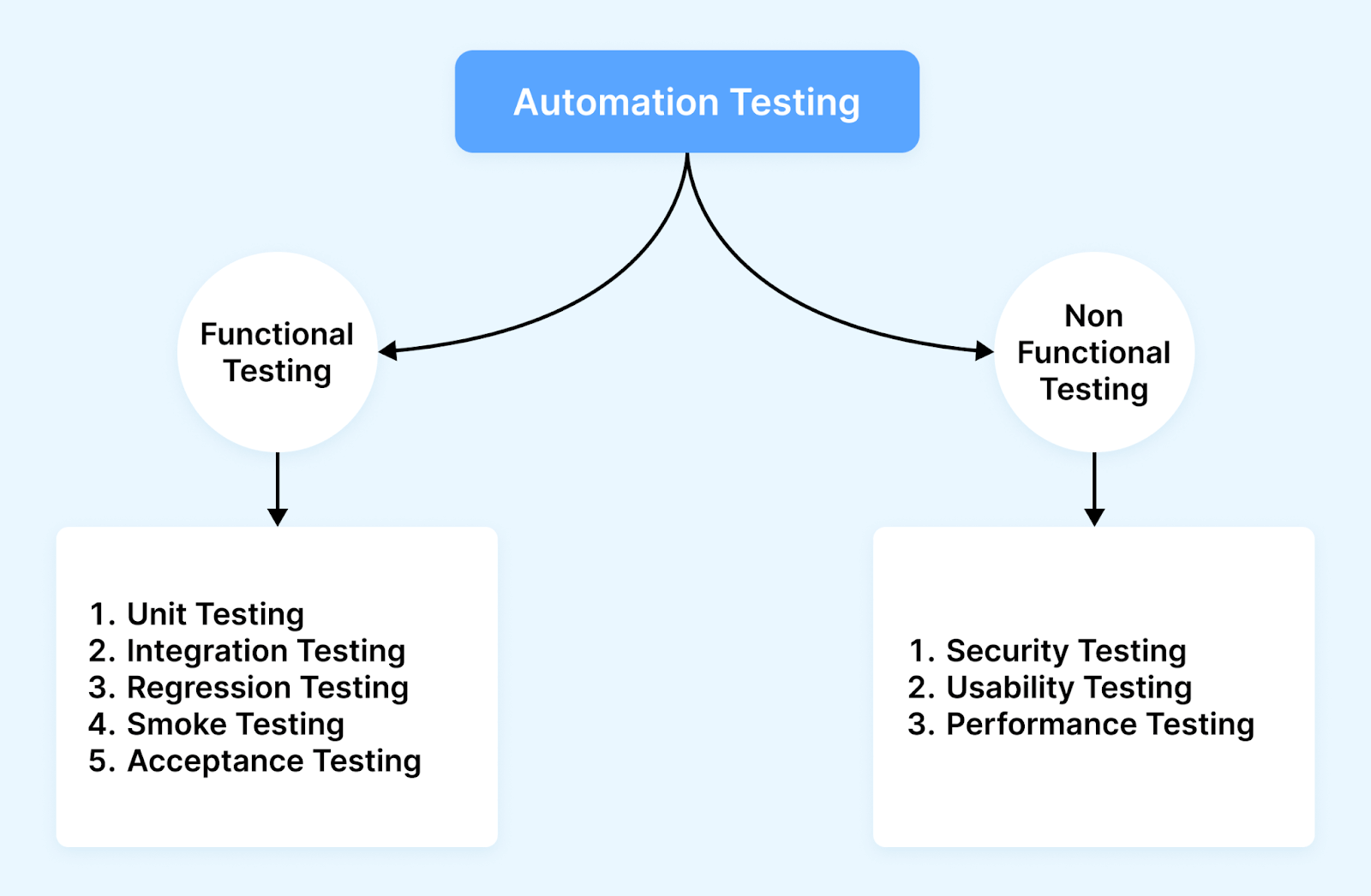

Types of Automation Testing

Functional Testing and non-Functional Testing are the two broad categories of automation testing performed during the software development process to assess different aspects of a software application.

Functional Testing focuses on validating the functional requirements and features of the software. It involves testing the software’s behavior against the required functionalities, ensuring that the software performs as intended. This type of testing verifies that the software meets user requirements and operates correctly.

On the other hand, non-Functional Testing assesses the software’s attributes beyond its functionality. This testing evaluates performance, usability, security, reliability, and compatibility.

Functional Testing

Functional Testing involves testing the system’s functionality by giving inputs and checking if the outputs meet the expected or desired results.

The techniques include black box testing, where the tester mainly focuses on the inputs and outputs without examining the internal implementation of the process, as well as white box testing, which involves examining the actual internal structure and code. Various techniques, such as equivalence partitioning, boundary value analysis, decision tables, and user scenario-based testing, are employed to design and execute functional test cases.
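As a rough illustration of two of the techniques named above, the sketch below derives boundary values and one representative per equivalence class for a made-up rule: a field that accepts ages from 18 to 65 inclusive. The rule, the function names, and the offsets used to pick representatives are all assumptions chosen for the example, not part of any particular tool.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 through 65 inclusive are accepted."""
    return 18 <= age <= 65


def boundary_values(low: int, high: int) -> list[int]:
    """Boundary value analysis: values just below, on, and just above each limit."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def partition_representatives(low: int, high: int) -> dict[str, int]:
    """Equivalence partitioning: one representative input per class."""
    return {
        "below_range": low - 10,
        "in_range": (low + high) // 2,
        "above_range": high + 10,
    }


# Black box check: only inputs and outputs are examined, not the implementation.
for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
```

Six boundary inputs and three partition representatives cover the rule far more cheaply than trying every possible age, which is the point of both techniques.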

The purpose of Functional Testing is to ensure that the software functions properly and performs the intended tasks as already specified in the requirements.

Let’s discuss some of the key objectives of Functional Testing.

- Validating Requirements: Functional Testing helps validate the system’s specified requirements. It ensures that all the functional aspects, such as user interactions, data processing, and system behavior, align with the intended functionality.

- Detecting Defects: By systematically testing the software’s functionality, Functional Testing aims to identify defects, bugs, or inconsistencies that may exist in the application. This helps in maintaining the proper functioning of the software.

- Ensuring Correct Behavior: Functional Testing verifies that the software behaves as expected under different scenarios, inputs, and user interactions. It ensures that the software operates within acceptable limits, produces correct outputs, and responds appropriately to user actions.

- Enhancing User Experience: Functional Testing plays a vital role in evaluating the user experience and usability of the software. Validating the functional aspects of the software related to user interactions, interface design, and system responsiveness helps ensure a smooth and satisfactory user experience.

- Mitigating Risks: Functional Testing helps mitigate the risks associated with software failures or malfunctions. Identifying functional issues early in the development lifecycle enables developers to address them promptly, reducing the chances of critical failures or negative impacts on end-users.

- Compliance and Standards: Functional Testing ensures that the software complies with relevant industry standards, regulations, and specifications. It verifies that the software adheres to the required functionality, performance, security, or accessibility standards mandated by the industry or regulatory bodies.

We will now look at the main types of automation testing that fall under Functional Testing.

Unit Testing

Unit Testing is the first on our list of types of automation testing under the broader category of Functional Testing.

Unit Testing is a software testing methodology that focuses on verifying the functionality of individual units or components of a software system. Here, a unit refers to the smallest testable part of a program: usually a function, a method, or sometimes a class.

The purpose of Unit Testing is to isolate and test each unit of code separately to ensure that it performs as intended. By testing individual units in isolation, developers can identify and fix bugs early in the software development life cycle (SDLC), before the units are integrated into a larger system. Unit Testing helps improve the quality of the code by ensuring that each unit behaves correctly according to its specifications or requirements.

Unit tests are typically written by the developers, who create test cases to exercise various scenarios. They verify the expected behavior of the units. Unit Testing frameworks and tools are commonly used to automate the execution and reporting of unit tests, making the process more efficient and manageable.

Benefits of Unit Testing

Unit Testing provides several benefits in the software development process:

- Early bug detection: Unit Testing catches bugs or defects early in the development process, allowing developers to fix them before they cause more significant issues or affect other parts of the system.

- Faster debugging: When a unit test fails, it provides a specific indication of which unit or component is responsible for the failure, making it easier to fix the problem.

- Facilitates collaboration: Unit tests provide a common ground for collaboration between developers, testers, and other stakeholders, as they define the expected behavior of the code.

- Documentation: Unit tests serve as a form of documentation, illustrating how units of code are intended to be used and the expected behavior in different scenarios.

Best practices for Unit Testing

Unit Testing is an important aspect of software development, and following best practices can greatly enhance the effectiveness and efficiency of unit tests. Here are some best practices for Unit Testing:

- Test One Concept at a Time: Each unit test should focus on testing a single concept or behavior. This helps keep tests concise, specific, and easier to understand.

- Use Descriptive and Clear Test Names: A well-named test provides valuable information about what is being tested and the expected behavior. Clear test names make it easier to understand and maintain the tests.

- Keep Tests Independent and Isolated: Unit tests should be independent of each other and not rely on the state or results of other tests. Each test should set up its necessary data and clean up after itself to maintain isolation.

- Test Boundary Conditions: Test inputs at boundary conditions or edge cases to ensure that the unit behaves correctly in exceptional scenarios. This includes testing minimum and maximum values, empty or null inputs, and other critical boundary conditions.

- Test Positive and Negative Scenarios: Test both valid inputs (positive scenarios) and invalid inputs (negative scenarios) to verify that the unit handles both cases appropriately. Negative tests should check for error handling, exceptions, or failure conditions.

- Write Small and Focused Tests: Keep unit tests small and focused on a specific behavior or aspect of the unit. Smaller tests are easier to understand, debug, and maintain.

- Aim for High Code Coverage: Strive for high code coverage so that the tests exercise most, if not all, parts of the unit. Where complete coverage is not practical, prioritize testing the critical and complex parts of the codebase.

- Use Assertions for Verification: Use assertions or assertion libraries to validate that the actual results match the expected results. Assertions help to detect failures and identify discrepancies automatically.

- Refactor and Maintain Tests: Treat unit tests as first-class citizens and refactor them along with the production code. Update tests as the code evolves to ensure they remain accurate and effective.

- Automate and Integrate Tests: Automate the execution of unit tests and integrate them into a continuous integration (CI) system. This allows for regular and automated testing, catching issues early in the development process.

- Run Tests Frequently: Run unit tests frequently, preferably after each code change, to quickly catch regressions or issues introduced during development.

- Keep Tests Fast: Unit tests should execute quickly to provide rapid feedback. Slow-running tests can discourage developers from running them frequently, defeating their purpose.
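Several of the practices above (one concept per test, descriptive names, boundary conditions, positive and negative scenarios, assertions) can be seen together in a small sketch. It uses Python's standard `unittest` module; the `apply_discount` function and its rules are hypothetical and exist only to give the tests something to exercise.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if price < 0:
        raise ValueError("price must not be negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    # Positive scenario: a typical, valid input.
    def test_typical_discount_reduces_price(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    # Boundary conditions: the lowest and highest allowed percentages.
    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_hundred_percent_makes_price_zero(self):
        self.assertEqual(apply_discount(80.0, 100), 0.0)

    # Negative scenarios: invalid inputs must be rejected.
    def test_percent_above_hundred_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 101)

    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)
```

Saved in a file such as `test_discount.py` (the file name is an assumption), the tests can be run with `python -m unittest`. Each test builds its own inputs, so the tests stay independent and can run in any order.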

Integration Testing

Integration Testing is second on our list of types of automation testing under the broader category of Functional Testing.

Integration Testing is a software testing technique that examines the integration and interaction between different modules, components, or subsystems of a software system. It verifies that the individual components work together as expected and that the integrated system functions properly.

Integration Testing aims to identify defects that may arise when combining and interacting with different components. It ensures that the interactions between different parts of the system, such as modules, classes, databases, APIs, or external systems, are seamless and produce the desired or expected outcomes.

Benefits of Integration Testing

Integration Testing provides several benefits in the software development process:

- Component Integration Verification: Integration Testing validates that the components, which have been independently tested, integrate correctly with each other. It ensures that the interfaces, dependencies, and communication channels between components are functioning properly.

- Defect Detection and Isolation: Integration Testing helps identify defects and issues that may occur due to the integration of components. Testing the integration points, data flow, and interactions between modules allows for the detection, isolation, and resolution of integration-related defects at an early stage.

- Data Flow and Control Validation: Integration Testing ensures that the data and control flow between different components is accurate and consistent. It verifies that inputs from one component are correctly processed and utilized by other components and that the outputs are generated as expected.

- Interface and API Testing: Integration Testing validates the compatibility and correctness of interfaces and APIs used for communication between components. It verifies that the inputs and outputs exchanged through interfaces adhere to the defined specifications and protocols.

- Error Handling and Exception Testing: Integration Testing assesses the integrated system’s error handling and exception management capabilities. It verifies that errors, exceptions, and boundary cases are handled correctly and do not result in system failures or inconsistencies.

- Integration Points and Dependencies Testing: Integration Testing focuses on testing the interaction points and dependencies between different modules or subsystems. It ensures that these integration points are well-defined, understood, and implemented correctly without any compatibility issues or conflicts.

- System Behavior Validation: Integration Testing verifies that the integrated system behaves as expected and meets the functional and non-functional requirements. It helps ensure that the system as a whole exhibits the desired behavior and produces the correct outputs when components are integrated.

Best practices for Integration Testing

Integration Testing plays a critical role in ensuring that the different pieces of software come together smoothly. To make the most of one’s Integration Testing efforts, here are some best practices to consider:

- Start with a solid plan: Before diving into Integration Testing, it’s essential to have a well-thought-out plan in place. This plan should outline the objectives, scope, and strategies for testing. Identify the key integration points and prioritize them based on their importance. By having a clear plan, one can easily approach Integration Testing with a structured and organized mindset.

- Begin testing early and frequently: Integration Testing should commence as soon as individual components are stable enough to be tested together. It’s crucial to start Integration Testing early in the development process to catch any issues or conflicts as soon as possible.

- Create realistic test environments: To conduct effective Integration Testing, it’s essential to set up dedicated test environments that closely resemble the production environment. This means configuring the hardware, software, and network components so that they match the real-world conditions in which the software will operate. Having realistic test environments allows for more accurate and reliable results.

- Utilize a combination of top-down and bottom-up approaches: Integration Testing can be approached in different ways. One approach is the top-down method, where higher-level components are tested first, using simulated lower-level components. Another approach is the bottom-up method, where lower-level components are tested first, using drivers or simulated higher-level components. A combination of both approaches provides better coverage and helps identify issues at various levels of integration.

- Validate data and message flows: Integration Testing should focus on verifying that data and messages flow correctly between the integrated components. Ensure that the input and output formats are consistent and that data is transferred accurately and securely.

- Simulate external dependencies: Often, integrated systems rely on dependencies that may not be readily available for testing. In such cases, it’s beneficial to simulate or create mock versions of these dependencies. This allows for controlled testing environments where the integration can be tested without relying on external factors. By simulating dependencies, one can isolate and focus solely on the integration between components.

- Leverage test automation: Test automation can significantly enhance the efficiency and effectiveness of Integration Testing. Utilize automated testing frameworks and tools to streamline the execution of integration tests. Automation enables more frequent test runs, aids in Regression Testing, and saves time and effort. It also facilitates integration with continuous integration and delivery pipelines, making the testing process more seamless.

- Monitor and analyze test results: During Integration Testing, it’s important to monitor the test execution process and log relevant information such as test inputs, outputs, and any encountered errors or failures. This data helps analyze the test results, diagnose issues, and provide valuable feedback to developers. By closely monitoring the testing process, one can ensure that any problems are identified and addressed promptly.

- Foster collaboration and communication: Integration Testing involves close collaboration between different teams and stakeholders. It’s crucial to maintain open lines of communication with developers, architects, and other teams involved in the integration process. Collaborate closely to understand integration points, clarify requirements, and ensure smooth coordination throughout the testing phase. Sharing test results and providing timely feedback helps improve the overall quality of the application.

- Maintain test coverage and adapt as needed: As the software evolves, it’s important to update and maintain the integration test suite continually. Review and update the test coverage based on changes in the system architecture or functionality. Retest integration points after significant changes or bug fixes to ensure that the fixes haven’t introduced new issues. Adapting the test suite to reflect changes in the software guarantees that Integration Testing remains effective and relevant.

Integration Testing can be performed using different approaches, for example, top-down, bottom-up, or a combination of both. It may involve manual testing, automated testing, or a combination of both, depending on the complexity and nature of the system being tested.
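To make the idea of simulating external dependencies more concrete, here is a minimal sketch using `unittest.mock.Mock` from the Python standard library. The `OrderService`, `InMemoryInventory`, and payment gateway are hypothetical components invented for this example. The inventory is a real object, so the test exercises an actual interaction between two components, while the payment gateway, which stands for an external system, is replaced by a mock.

```python
import unittest
from unittest.mock import Mock


class OrderService:
    """Hypothetical component that depends on an inventory and a payment gateway."""

    def __init__(self, inventory, payment_gateway):
        self.inventory = inventory
        self.payment_gateway = payment_gateway

    def place_order(self, item: str, quantity: int, unit_price: float) -> str:
        if not self.inventory.reserve(item, quantity):
            return "out_of_stock"
        if not self.payment_gateway.charge(quantity * unit_price):
            self.inventory.release(item, quantity)  # undo the reservation
            return "payment_failed"
        return "confirmed"


class InMemoryInventory:
    """Real (non-mocked) collaborator, so the tests cover a genuine integration."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, item, quantity):
        if self.stock.get(item, 0) < quantity:
            return False
        self.stock[item] -= quantity
        return True

    def release(self, item, quantity):
        self.stock[item] = self.stock.get(item, 0) + quantity


class OrderIntegrationTests(unittest.TestCase):
    def setUp(self):
        self.inventory = InMemoryInventory({"book": 3})
        self.gateway = Mock()  # stands in for the external payment system
        self.service = OrderService(self.inventory, self.gateway)

    def test_successful_order_reserves_stock_and_charges_total(self):
        self.gateway.charge.return_value = True
        self.assertEqual(self.service.place_order("book", 2, 10.0), "confirmed")
        self.assertEqual(self.inventory.stock["book"], 1)
        self.gateway.charge.assert_called_once_with(20.0)

    def test_failed_payment_releases_reserved_stock(self):
        self.gateway.charge.return_value = False
        self.assertEqual(self.service.place_order("book", 2, 10.0), "payment_failed")
        self.assertEqual(self.inventory.stock["book"], 3)

    def test_insufficient_stock_skips_payment(self):
        self.assertEqual(self.service.place_order("book", 5, 10.0), "out_of_stock")
        self.gateway.charge.assert_not_called()
```

The tests check the data that flows between the components (the charged amount, the remaining stock) and the error path where a failed payment must undo the reservation, which are the kinds of defects that unit tests of each component in isolation would not reveal.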

Regression Testing

Regression Testing is third on our list of types of automation testing under the broader category of Functional Testing.

Regression Testing is a type of software testing that aims to verify that changes or enhancements made to an application have not introduced new bugs or caused unintended side effects. It involves retesting previously tested functionality to ensure that it still works as expected after modifications have been made to the software.

Regression Testing aims to catch any defects or issues that may have been introduced into the system due to changes in the software. It helps ensure that the existing functionality remains intact and unaffected by the modifications or additions made during the development process.

Benefits of Regression Testing

Regression Testing provides several benefits in the software development process:

- Verification of Changes: Regression Testing is conducted to verify that the modifications, bug fixes, or new features implemented in the software have not caused any regression or deterioration in the existing functionality. It ensures that the changes made to the codebase have not disrupted the smooth operation of previously working components.

- Identification of Defects: Regression Testing helps identify defects or issues that may have been introduced during the development or modification process. By retesting the previously tested areas of the software, it aims to uncover any unexpected bugs that may have emerged due to changes made elsewhere in the code.

- Risk Mitigation: Changes made to the software, even seemingly minor ones, can have unintended consequences. Regression Testing helps mitigate the risk associated with software modifications by ensuring that the software behaves as intended after the implemented changes. It reduces the possibility of new defects going unnoticed and affecting the overall performance and reliability of the application.

- Maintaining Quality: By running regression tests, software development teams can maintain the quality and stability of the software over time. It ensures that any modifications or enhancements made to the codebase do not introduce regressions that could negatively impact the user experience or business operations.

- Time and Cost Efficiency: Regression Testing helps catch defects early in the development cycle, reducing the cost and effort required for fixing them later. By identifying and fixing issues promptly, Regression Testing saves time and resources that would otherwise be spent on troubleshooting and reworking during later stages of development or deployment.

Best practices for Regression Testing

Here are some best practices to consider when performing Regression Testing:

- Test Automation: Utilize automation testing tools and frameworks to automate repetitive regression tests. This helps save time and effort, allowing for faster and more thorough Regression Testing. Automated tests can be executed regularly and consistently, ensuring the integrity of the software.

- Prioritize Test Cases: Prioritize test cases based on their impact and criticality to the application. Focus on high-risk areas and frequently used functionalities during Regression Testing. This ensures that the most important aspects of the software are thoroughly tested, and any potential regressions are identified early on.

- Test Coverage: Aim for comprehensive test coverage by including a diverse set of test cases that exercise various functionalities, edge cases, and user scenarios. This helps identify potential issues in different parts of the application and ensures that all critical paths are adequately tested.

- Version Control: Use version control systems to manage and track changes made to the software. This allows for easier identification of modified code and helps select the appropriate test cases for Regression Testing. Version control also facilitates easy rollback in case any issues are encountered during Regression Testing.

- Test Data Management: Maintain a well-organized and up-to-date test data repository. Ensure that the test data used for Regression Testing accurately represents real-world usage and covers a wide range of scenarios. This helps identify any issues related to data compatibility, data corruption, or data handling during Regression Testing.

- Regression Test Suites: Create and maintain dedicated regression test suites that consist of reusable test cases specifically designed for Regression Testing. These test suites should cover critical areas of the application and be updated with each new release or change. By having well-defined regression test suites, one can streamline the testing process and ensure consistent and efficient Regression Testing.

- Regression Test Environment: Set up a separate test environment that closely mimics the production environment. This helps ensure that Regression Testing is conducted in an environment that closely resembles the actual usage conditions. It also helps isolate Regression Testing from other ongoing development activities, reducing the risk of interference and conflicts.

- Defect Management: Establish a robust defect tracking and management system to log, prioritize, and address any issues identified during Regression Testing. Communicate the identified defects to the development team, providing detailed steps to reproduce and fix them. This helps in resolving issues efficiently and tracking the progress of defect resolution.

- Continuous Integration and Continuous Testing: Implement continuous integration (CI) and continuous testing (CT) practices to ensure that regression tests are executed regularly and automatically as part of the software development pipeline. This helps catch regressions early in the software development cycle, enabling faster feedback and quicker resolution of issues.

- Regression Test Documentation: Document the Regression Testing process, including the test cases, test data, and any known issues or limitations. This documentation serves as a reference for future Regression Testing cycles and helps maintain consistency in testing practices.
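One common way to automate the practices above is to record the outputs of a known-good release as a baseline and replay them after every change. The sketch below assumes a hypothetical `format_invoice` function and an inline baseline; in a real project the baseline would more likely live in a version-controlled file alongside the tests.

```python
import json


def format_invoice(customer: str, items: list[tuple[str, int, float]]) -> dict:
    """Hypothetical function whose behavior must stay stable across releases."""
    total = sum(qty * price for _, qty, price in items)
    return {
        "customer": customer.strip().title(),
        "lines": len(items),
        "total": round(total, 2),
    }


# Baseline captured from a known-good release (inline here only for brevity).
BASELINE = json.loads("""
[
  {"input": {"customer": " ada lovelace ", "items": [["pen", 2, 1.5]]},
   "expected": {"customer": "Ada Lovelace", "lines": 1, "total": 3.0}},
  {"input": {"customer": "bob", "items": []},
   "expected": {"customer": "Bob", "lines": 0, "total": 0}}
]
""")


def run_regression_suite(baseline):
    """Replay every recorded case and collect the ones whose output changed."""
    regressions = []
    for case in baseline:
        args = case["input"]
        actual = format_invoice(args["customer"], [tuple(i) for i in args["items"]])
        if actual != case["expected"]:
            regressions.append(
                {"input": args, "expected": case["expected"], "actual": actual}
            )
    return regressions


if __name__ == "__main__":
    failures = run_regression_suite(BASELINE)
    print("regressions found:", len(failures))
```

An empty result means previously validated behavior is unchanged. When a change in output is intentional, the baseline is updated deliberately and reviewed like any other code change, which ties in with the version control practice described earlier.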

Smoke Testing

Smoke Testing is fourth on our list of types of automation testing under the broader category of Functional Testing.

Smoke Testing is a preliminary type of software testing that is performed to quickly assess the stability and basic functionality of an application or system after a new build or major changes have been made. As its name suggests, Smoke Testing aims to identify any major issues or “smoke” that might arise from the software, indicating potential critical flaws that could hinder further testing or even deployment.

The purpose of Smoke Testing is to conduct a superficial examination of the software to ensure that the critical functionalities are working as intended without delving into exhaustive testing. It serves as an initial checkpoint to verify if the software is stable enough to undergo more rigorous testing phases. By executing a set of predetermined tests that cover essential features, Smoke Testing helps to catch severe defects early on, allowing developers to address them promptly and minimize the risk of wasting time and resources on further testing.

Imagine Smoke Testing as taking a quick look at a newly cooked dish before serving it to guests. If you see billowing smoke or notice a burnt smell, you know something is seriously wrong and the dish is not ready to be served. Similarly, during Smoke Testing, if significant flaws are detected, it indicates that the software build might have critical issues that need immediate attention before proceeding with comprehensive testing and deployment.

Benefits of Smoke Testing

Smoke Testing provides several benefits in the software testing process:

- Early detection of major issues: Smoke Testing helps in quickly identifying major defects in the software. By running a subset of essential tests, it aims to ensure that the basic functionalities of the application are working as expected. If any critical issues are detected during Smoke Testing, further testing can be halted, preventing the waste of time and resources on extensive testing.

- Rapid feedback on build stability: Smoke Testing provides immediate feedback on the stability and viability of a software build. It allows testers and stakeholders to assess the overall health of the system after new changes or updates have been implemented. If the smoke tests pass, it indicates that the build is potentially stable for further testing. If the smoke tests fail, it suggests the presence of critical issues that need to be addressed before proceeding with additional testing.

- Time and resource optimization: Smoke Testing helps in optimizing time and resources by focusing on essential functionalities and critical paths. It allows testers to quickly determine if the basic functions of the software are working correctly before investing more effort into comprehensive testing. By filtering out major issues early on, Smoke Testing helps in avoiding unnecessary testing efforts on unstable builds.

- Confidence in software stability: Successful Smoke Testing instills confidence in the stability and reliability of the software. It assures stakeholders that the basic features of the application are functioning as expected, providing a solid foundation for further testing and development activities. This confidence allows for more efficient planning and decision-making in the software development lifecycle.

- Risk mitigation: Smoke Testing helps in mitigating risks associated with software releases or updates. By quickly identifying major issues, it reduces the chances of deploying faulty or unstable software to production. It serves as a safety net to catch critical defects early and facilitates timely corrective actions, ensuring a smoother and more controlled software release process.

Overall, Smoke Testing plays a vital role in ensuring the stability, reliability, and readiness of a software build. It offers an efficient way to assess the basic functionality of the application, providing quick feedback on major issues and enabling effective decision-making in the software development and testing process.

Best practices for Smoke Testing

When it comes to Smoke Testing, there are a few best practices to follow to ensure its effectiveness.

- Keep it focused: The primary purpose of Smoke Testing is to identify major issues quickly, so it’s essential to focus on critical functionalities rather than testing every single feature. Concentrate on the core features that form the backbone of the software and are crucial for its basic operation.

- Define clear criteria: Before starting Smoke Testing, establish specific criteria for passing or failing the test. Determine the expected behavior and outcomes for each critical functionality. This clarity helps in quickly identifying deviations and making informed decisions.

- Automate where possible: Automation can significantly enhance the efficiency and speed of Smoke Testing. Automate repetitive test cases or use tools that can help execute predefined tests quickly. This allows for faster feedback and reduces the chances of human error.

- Keep it lightweight: Smoke Testing is meant to be a lightweight and quick assessment. Avoid extensive testing or deep dives into individual features. Stick to high-level tests that can be executed rapidly. Remember, the goal is to detect major issues, not to achieve complete test coverage.

- Make it part of the development process: Integrate Smoke Testing into your development workflow to ensure its regular execution. Ideally, perform smoke tests after every significant build or major change to catch critical issues early on. This helps maintain the stability and functionality of the software throughout the development cycle.

- Document and track results: Maintain a record of Smoke Testing results to track the progress and identify trends over time. Document any failures or issues encountered during the test and communicate them to the development team. This documentation helps in analyzing patterns and improving the software quality.

- Collaborate with stakeholders: Involve relevant stakeholders, such as developers, testers, and project managers, in Smoke Testing. Encourage open communication and collaboration to ensure that everyone understands the purpose and scope of Smoke Testing. This alignment promotes a shared understanding of the software’s stability and aids in making informed decisions.
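A smoke suite that follows these practices can be very small. The sketch below is one possible shape, assuming a hypothetical `FakeApp` object in place of a real deployed build: a few critical checks, explicit pass/fail criteria, and a time budget that keeps the suite lightweight. The check names and the five-second budget are illustrative choices.

```python
import time


class FakeApp:
    """Hypothetical stand-in for a freshly deployed build."""

    def health(self):
        return {"status": "ok"}

    def login(self, user, password):
        return user == "demo" and password == "demo"

    def list_products(self):
        return ["book", "pen"]


def run_smoke_tests(app, time_budget_seconds=5.0):
    """Run a handful of critical checks and return (passed, failures)."""
    checks = {
        "health endpoint responds": lambda: app.health()["status"] == "ok",
        "demo user can log in": lambda: app.login("demo", "demo"),
        "product catalog is not empty": lambda: len(app.list_products()) > 0,
    }
    failures = []
    started = time.monotonic()
    for name, check in checks.items():
        try:
            if not check():
                failures.append(name)
        except Exception as exc:  # a crash counts as a failed check
            failures.append(f"{name} ({exc!r})")
    if time.monotonic() - started > time_budget_seconds:
        failures.append("smoke suite exceeded its time budget")
    return not failures, failures


if __name__ == "__main__":
    passed, failures = run_smoke_tests(FakeApp())
    print("build accepted for further testing" if passed else f"build rejected: {failures}")
```

In a build pipeline, the boolean result would typically act as a gate: a failing smoke suite stops the build from moving on to the longer regression and acceptance stages.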

Acceptance Testing

Acceptance Testing is fifth on our list of types of automation testing under the broader category of Functional Testing.

Acceptance Testing is a type of Functional Testing. It is a crucial phase of software testing that focuses on evaluating the software’s readiness for deployment and its alignment with the requirements and expectations of the end users or stakeholders. The purpose of Acceptance Testing is to determine whether the software meets the agreed-upon acceptance criteria and whether it is suitable for acceptance or approval by the intended users.

Acceptance Testing serves as a final check before the software is released to ensure that it satisfies the desired functionality, usability, and overall quality standards. It validates that the software fulfills the business objectives and user needs, ultimately confirming that it is ready for deployment in the production environment.

Imagine Acceptance Testing as the final examination before a student graduates. Just like the student has to demonstrate their knowledge and skills to meet the requirements for graduation, Acceptance Testing evaluates whether the software meets the predefined criteria and performs as expected.

During Acceptance Testing, real-world scenarios are simulated to replicate how end users will use the software. It involves executing test cases that validate specific user interactions, workflows, and business processes. The focus is not only on functional aspects but also non-functional aspects such as performance, security, and compatibility.

Acceptance Testing can take different forms, such as User Acceptance Testing (UAT), where end users or representatives from the client organization validate the software, or Operational Acceptance Testing (OAT), which verifies the software’s operational readiness and compatibility with the production environment.
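Where acceptance criteria are agreed in advance, they can often be written as automated checks in a Given/When/Then shape. The sketch below assumes an invented business rule (free shipping from a subtotal of 50, otherwise a fee of 4.99) and a hypothetical `Cart` class; real acceptance tests would run against the actual application and the criteria agreed with stakeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Cart:
    """Hypothetical shopping cart used to illustrate an acceptance criterion."""

    items: dict = field(default_factory=dict)

    def add(self, name: str, price: float, quantity: int = 1) -> None:
        self.items[name] = (price, quantity)

    def subtotal(self) -> float:
        return sum(price * qty for price, qty in self.items.values())

    def shipping_fee(self) -> float:
        # Assumed business rule: free shipping from a subtotal of 50.
        return 0.0 if self.subtotal() >= 50 else 4.99


def test_free_shipping_for_orders_of_50_or_more():
    # Given a customer whose cart holds items worth exactly 50
    cart = Cart()
    cart.add("book", 25.0, quantity=2)
    # When the shipping fee is calculated at checkout
    fee = cart.shipping_fee()
    # Then shipping is free
    assert fee == 0.0


def test_standard_shipping_below_threshold():
    # Given a customer whose cart is worth less than 50
    cart = Cart()
    cart.add("pen", 1.5, quantity=3)
    # When the shipping fee is calculated at checkout
    fee = cart.shipping_fee()
    # Then the standard fee applies
    assert fee == 4.99
```

The test functions use plain `assert` statements, so they can be collected by a runner such as pytest or called directly. Writing each criterion in the language of the business rule keeps the tests readable for the stakeholders who have to accept the software.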

Benefits of Acceptance Testing

Acceptance Testing provides several benefits in the software development process:

- Validation of user requirements: Acceptance Testing ensures that the software meets the specified user requirements and aligns with the expectations of the stakeholders. By involving end-users or representatives from the target audience, it allows for the validation of the software’s functionality, usability, and overall suitability for its intended purpose.

- Improved customer satisfaction: Acceptance Testing helps in enhancing customer satisfaction by involving end-users in the testing process. It provides an opportunity for users to evaluate the software and provide feedback based on their real-world scenarios and needs.

- Identification of gaps and defects: Acceptance Testing helps in identifying any gaps or discrepancies between the software and the desired outcomes. It uncovers defects, inconsistencies, or missing functionalities that might have been overlooked in earlier testing phases. By capturing and addressing these issues before the software is deployed, Acceptance Testing reduces the risk of dissatisfaction and rework in the later stages of development.

- Verification of business processes: Acceptance Testing validates the software’s ability to support and automate essential business processes. It ensures that the software accurately handles critical workflows, data inputs, and outputs, aligning with the established business rules and logic. This verification helps in reducing manual effort, streamlining operations, and increasing overall efficiency.

- Risk mitigation and compliance: Acceptance Testing helps in mitigating risks associated with software deployment. By thoroughly testing the software with representative data and scenarios, it reduces the likelihood of critical failures or business disruptions. Additionally, Acceptance Testing can also address compliance requirements by ensuring that the software meets industry standards, regulatory guidelines, or specific contractual obligations.

- Increased confidence in software quality: Successful Acceptance Testing instills confidence in the quality and readiness of the software for production use. It provides stakeholders, including both end-users and project sponsors, with evidence that the software has been thoroughly evaluated and meets the defined acceptance criteria. This confidence supports informed decision-making regarding software release and deployment.

Overall, Acceptance Testing plays a crucial role in ensuring that the software meets user expectations, enhances customer satisfaction, and reduces the risk of costly post-deployment issues. It validates the software against user requirements, identifies defects and gaps, and provides stakeholders with the necessary confidence in the software’s quality and suitability for its intended use.

Best practices for Acceptance Testing

Here are some best practices to consider when conducting Acceptance Testing:

- Involve stakeholders from the beginning: Collaborate closely with stakeholders, including end users, clients, and business representatives, right from the test planning phase. Engage them in defining acceptance criteria, requirements, and test scenarios. Their active participation ensures that the testing process aligns with their expectations and increases the chances of identifying any gaps early on.

- Define clear acceptance criteria: Clearly define the acceptance criteria that the software needs to meet. These criteria act as measurable benchmarks for determining whether the software has achieved the desired level of quality and functionality. Well-defined acceptance criteria provide clarity to both the testing team and stakeholders, making the evaluation process more effective.

- Design realistic and comprehensive test scenarios: Develop test scenarios that closely resemble real-world usage patterns and workflows. Ensure that the scenarios cover a wide range of functionalities and critical user interactions. Realistic test scenarios help validate the software’s performance, usability, and ability to handle various situations it will encounter in the actual environment.

- Prioritize test cases: Prioritize test cases based on their impact and importance to the overall software functionality. Start with high-priority test cases to quickly identify any critical issues and ensure that the core features are functioning as expected. This approach allows for the early detection of major issues and helps allocate testing resources effectively.

- Conduct both positive and negative testing: In addition to verifying expected behaviors (positive testing), also include negative test scenarios that evaluate the software’s resilience and ability to handle unexpected or erroneous inputs. Negative testing helps uncover potential vulnerabilities, error handling flaws, and boundary conditions that may impact the user experience.

- Perform end-to-end testing: Emulate end-to-end scenarios that cover the entire user journey and business processes. This type of testing ensures that all system components, integrations, and interfaces work seamlessly together. It helps identify any gaps or inconsistencies in the flow and confirms smooth interaction between different modules or systems.

- Document and track issues: Maintain a systematic record of any issues, defects, or discrepancies found during Acceptance Testing. Proper documentation allows for effective communication between the testing and development teams, ensuring that all identified issues are addressed and resolved. Tracking the issues also helps in measuring progress and validating that necessary fixes have been implemented.

- Include real end users whenever possible: Whenever feasible, involve real end users in the Acceptance Testing process. Their direct feedback and insights provide valuable perspectives on usability, user experience, and overall satisfaction. Real user involvement helps fine-tune the software to better meet their needs and expectations.
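As a rough illustration of the practices above, acceptance criteria can be written as small executable checks, one positive and one negative. The sketch below is only an assumption-laden example: the `place_order` function, the `CheckoutError` exception, and the two criteria are hypothetical stand-ins for whatever the stakeholders actually agree on.

```python
from dataclasses import dataclass


class CheckoutError(Exception):
    """Raised when an order cannot be accepted (hypothetical)."""


@dataclass
class Order:
    item: str
    quantity: int
    total: float


def place_order(item, quantity, unit_price, stock):
    """Hypothetical system under test: reserve stock and price the order."""
    if quantity <= 0:
        raise CheckoutError("quantity must be positive")
    if stock.get(item, 0) < quantity:
        raise CheckoutError("insufficient stock")
    stock[item] -= quantity
    return Order(item=item, quantity=quantity, total=round(quantity * unit_price, 2))


def criterion_valid_order_is_confirmed():
    # Positive scenario: given enough stock, the order is priced and stock is reduced.
    stock = {"notebook": 5}
    order = place_order("notebook", 2, 3.50, stock)
    return order.total == 7.00 and stock["notebook"] == 3


def criterion_oversized_order_is_rejected():
    # Negative scenario: an order larger than the stock is refused and stock is unchanged.
    stock = {"notebook": 1}
    try:
        place_order("notebook", 2, 3.50, stock)
    except CheckoutError:
        return stock["notebook"] == 1
    return False


def run_acceptance_suite():
    """Run every criterion and report pass/fail by name."""
    criteria = [criterion_valid_order_is_confirmed, criterion_oversized_order_is_rejected]
    return {check.__name__: check() for check in criteria}


if __name__ == "__main__":
    for name, passed in run_acceptance_suite().items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Naming each check after the criterion it covers keeps the report readable for non-technical stakeholders, which is the point of involving them in the first place.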

Non-Functional Testing

Non-Functional Testing focuses on evaluating the attributes and characteristics of a software system beyond its functional requirements. It aims to ensure that the software not only functions correctly but also meets the desired quality standards and user expectations.

It addresses critical factors that impact the overall user experience, system performance, and the software’s ability to meet non-functional requirements. These non-functional requirements define qualities that are important for the software’s success, such as speed, responsiveness, user-friendliness, security, and availability.

It involves using various techniques, tools, and methodologies specific to each type of testing. It helps in identifying potential weaknesses, issues, and risks related to non-functional aspects, allowing for early detection and mitigation of problems. By conducting Non-Functional Testing, organizations can deliver software that not only functions correctly but also meets the desired performance, security, usability, and reliability standards, thereby enhancing user satisfaction and overall software quality.

We will now look at a couple of different types of automation testing that can be categorised under Non-Functional Testing.

Security Testing

Security Testing is first in our list of types of automation testing under the broader category of Non-Functional Testing.

Security Testing is a vital aspect of software testing that focuses on identifying vulnerabilities, weaknesses, and potential threats in a system to ensure its resistance against malicious attacks. The purpose of Security Testing is to evaluate the software’s ability to protect sensitive data, maintain data integrity, and prevent unauthorized access or breaches.

Think of Security Testing as a security audit for a building. Just as a security audit assesses the building’s vulnerabilities and weaknesses to protect against theft or intrusion, Security Testing examines the software’s defenses to safeguard against unauthorized access, data breaches, or other security risks.

The goal of Security Testing is to identify and rectify security vulnerabilities before malicious actors can exploit them. By conducting thorough Security Testing, organizations can enhance the software’s resilience, protect sensitive data, and ensure compliance with relevant security standards and regulations. Security Testing covers different aspects of software security. This includes authentication, authorization, data confidentiality, data integrity, and secure communication.

Benefits of Security Testing

Security Testing offers several benefits in the software development process:

- Identifying Vulnerabilities: Security Testing helps to uncover vulnerabilities and weaknesses in software applications, networks, or systems. By systematically examining the software’s code, configurations, and infrastructure, Security Testing can identify potential entry points for malicious attacks or unauthorized access. This early detection enables organizations to address these vulnerabilities before they are exploited, reducing the risk of security breaches.

- Protecting Sensitive Data: Security Testing plays a vital role in safeguarding sensitive data, such as personal information, financial records, or intellectual property. By conducting comprehensive security assessments, organizations can identify potential data leaks, encryption flaws, or insecure data storage practices. Addressing these issues helps prevent unauthorized access and ensures the confidentiality, integrity, and availability of critical information.

- Mitigating Risks and Liabilities: Proactively conducting Security Testing minimizes the potential risks and liabilities associated with data breaches or security incidents. By identifying and addressing vulnerabilities early on, organizations can reduce the likelihood of cyberattacks, data theft, or service disruptions. This not only protects the organization’s reputation but also helps comply with regulatory requirements and avoid legal consequences.

- Building Customer Trust: Security Testing demonstrates a commitment to data privacy and protection, which builds trust among customers and stakeholders. In today’s digital landscape, where data breaches are increasingly common, customers are more inclined to engage with organizations that prioritize security. By conducting Security Testing, organizations can reassure their users that their information is safe, fostering customer loyalty and enhancing their brand reputation.

- Enhancing System Reliability: Security vulnerabilities can also impact the reliability and stability of software systems. By identifying and addressing these vulnerabilities through Security Testing, organizations can ensure the smooth functioning of their applications and networks. This, in turn, improves user experience, reduces downtime, and increases overall system reliability.

- Cost Savings: Detecting and remediating security issues early in the development lifecycle is more cost-effective than dealing with the consequences of a security breach after deployment. Security Testing helps organizations avoid the financial burden associated with data breaches, legal penalties, customer compensation, and reputational damage. By investing in Security Testing upfront, organizations can save significant costs in the long run.

- Compliance with Standards and Regulations: Security Testing helps organizations meet industry standards and regulatory requirements. Many sectors, such as finance, healthcare, and government, have specific security and privacy regulations that must be adhered to. Security Testing ensures that systems and applications align with these standards, allowing organizations to meet compliance obligations and avoid non-compliance penalties.

Best practices for Security Testing

Here are some best practices to consider when conducting Security Testing:

- Adopt a comprehensive approach: Take a holistic approach to Security Testing by considering all aspects of the software, including the application itself, underlying infrastructure, data storage, and network communication. Cover different layers of security, such as network security, access controls, encryption, and input validation, to ensure comprehensive coverage.

- Understand the application and potential threats: Gain a deep understanding of the application and its potential security risks. Analyze the architecture, design, and functionality to identify areas that may be susceptible to attacks. Consider the sensitive data being processed and stored, potential attack vectors, and the impact of security breaches.

- Follow industry standards and best practices: Adhere to industry security standards and best practices, such as OWASP (Open Web Application Security Project) guidelines, to guide your Security Testing efforts. These resources provide valuable insights into common vulnerabilities and recommended security controls. By following established standards, you can ensure that your Security Testing is thorough and aligns with industry best practices.

- Employ a combination of manual and automated techniques: Utilize a combination of manual and automated Security Testing techniques. Manual testing allows for in-depth analysis and identification of complex vulnerabilities, while automated tools can efficiently scan for known security issues and perform repetitive tasks. The synergy between manual and automated testing helps achieve comprehensive coverage and maximizes the efficiency of the testing process.

- Use a variety of testing techniques: Apply a variety of Security Testing techniques, including penetration testing, vulnerability scanning, code reviews, and security-focused configuration testing. Each technique offers unique insights into different aspects of security and helps uncover a wide range of vulnerabilities. Combining different techniques provides a more comprehensive evaluation of the software’s security posture.

- Test for both known and unknown vulnerabilities: While it’s essential to test for known vulnerabilities using vulnerability databases and security scanners, also focus on identifying unknown vulnerabilities through techniques like fuzz testing or security code reviews. By testing for known and unknown vulnerabilities, you can uncover potential risks that may have been overlooked.

- Involve security experts and ethical hackers: Engage the expertise of security professionals, such as security analysts or ethical hackers, to conduct thorough Security Testing. Their knowledge and experience in identifying security weaknesses can help uncover critical vulnerabilities that may be challenging to detect through traditional testing approaches. Their insights and recommendations contribute to enhancing the software’s overall security posture.

- Regularly update and retest: Security Testing is an ongoing process. Regularly update your Security Testing approach to stay ahead of emerging threats and vulnerabilities. Perform Security Testing at different stages of the software development lifecycle and after significant updates or changes. Continuously monitor security trends and incorporate new testing techniques and tools to adapt to evolving security challenges.
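To make the idea of testing for both known and unknown vulnerabilities a little more concrete, here is a minimal sketch of a fuzz-style check against an input validator. Everything in it is an assumption for illustration: the `is_valid_username` function, its policy (3 to 20 letters, digits, or underscores), and the short list of known-bad payloads. It is not a substitute for a real scanner or a penetration test.

```python
import random
import re
import string

# Hypothetical policy: 3 to 20 characters, letters, digits, or underscore only.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")
ALLOWED_CHARS = set(string.ascii_letters + string.digits + "_")

# A few well-known malicious or awkward inputs (the "known" side of the test).
KNOWN_BAD_INPUTS = [
    "' OR '1'='1",
    "<script>alert(1)</script>",
    "../../etc/passwd",
    "",
    "a" * 1000,
]


def is_valid_username(value):
    """Hypothetical validator under test."""
    return isinstance(value, str) and USERNAME_PATTERN.fullmatch(value) is not None


def random_input(rng, max_length=40):
    """Build a random printable string (the "unknown" side of the test)."""
    length = rng.randint(0, max_length)
    return "".join(rng.choice(string.printable) for _ in range(length))


def fuzz_validator(iterations=1000, seed=0):
    """Return a list of (input, problem) pairs; an empty list means no finding."""
    rng = random.Random(seed)  # fixed seed so a failure can be reproduced
    candidates = KNOWN_BAD_INPUTS + [random_input(rng) for _ in range(iterations)]
    failures = []
    for candidate in candidates:
        try:
            accepted = is_valid_username(candidate)
        except Exception as exc:  # a crash on odd input is itself a finding
            failures.append((candidate, repr(exc)))
            continue
        within_policy = set(candidate) <= ALLOWED_CHARS and 3 <= len(candidate) <= 20
        if accepted and not within_policy:
            failures.append((candidate, "accepted input that violates the policy"))
    return failures


if __name__ == "__main__":
    print(f"findings: {len(fuzz_validator())}")
```

Seeding the random generator is a deliberate choice here: a finding that cannot be reproduced is hard to fix and hard to retest.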

Usability Testing

Usability Testing is second in our list of types of automation testing under the broader category of Non-Functional Testing.

Usability Testing is a crucial part of the software testing process that focuses on evaluating how user-friendly and intuitive a software application or system is. The purpose of Usability Testing is to gauge how well users can navigate, interact with, and accomplish tasks within the software, with the ultimate goal of enhancing the overall user experience.

Think of Usability Testing as putting a product to the test by observing how people interact with it. It aims to understand users’ behaviors, preferences, and challenges when using the software, uncovering any usability issues or areas for improvement.

During Usability Testing, real users perform specific tasks or scenarios within the software while their interactions and feedback are carefully observed and recorded. This testing typically involves a combination of qualitative and quantitative methods to gather insights on user satisfaction, efficiency, learnability, and error rates.

The main objective of Usability Testing is to identify any usability issues that may hinder user adoption, lead to frustration, or impede efficient usage of the software. It helps uncover interface design flaws, confusing workflows, inconsistent navigation, or unclear instructions that may impact the user experience negatively.

Benefits of Usability Testing

Usability Testing offers several benefits in the software development process:

- Identifying User Needs: Usability Testing helps to uncover the specific needs and expectations of users. By observing and collecting feedback from real users, we can gain insights into their preferences, pain points, and desired features. This information is invaluable for refining and tailoring the product to meet user expectations effectively.

- Enhancing User Satisfaction: By conducting usability tests, we can proactively address potential usability issues, allowing us to create a more intuitive and user-friendly experience. Identifying and resolving usability problems early on can lead to increased user satisfaction, as users are more likely to enjoy a smooth and seamless interaction with the product.

- Improving Conversion Rates: Usability Testing can uncover obstacles that hinder users from completing desired actions, such as making a purchase or signing up for a service. By identifying and rectifying these barriers, businesses can optimize their conversion rates, leading to increased customer engagement and revenue generation.

- Boosting User Retention: A product that is easy to use and navigate tends to retain users for longer periods. Usability Testing helps in uncovering pain points that may discourage users from returning or continuing to use the product. By addressing these issues, businesses can improve user retention and foster long-term customer loyalty.

- Saving Time and Costs: Identifying and rectifying usability issues early in the design and development process is more cost-effective than trying to fix them later. Usability Testing allows businesses to make informed decisions and iterate on designs, ultimately reducing the need for expensive redesigns or redevelopment.

- Gaining Competitive Advantage: By investing in Usability Testing, businesses can differentiate themselves from competitors by offering a superior user experience. Products that are easy to use, intuitive, and address user needs effectively tend to stand out in the market and attract more users.

- Facilitating Accessibility: Usability Testing can also help identify barriers faced by users with disabilities or special needs. By ensuring accessibility and inclusivity in design, businesses can expand their user base and cater to a wider range of individuals.

Best practices for Usability Testing

Here are some best practices to consider when conducting Usability Testing:

- Define clear objectives: Clearly define the goals and objectives of your Usability Testing. Identify what aspects of the software you want to evaluate, such as navigation, task completion, or overall user satisfaction. Having clear objectives helps guide the testing process and ensures that you focus on the most important aspects of usability.

- Identify the target user group: Identify the target user group or audience for your software. Understand their characteristics, preferences, and proficiency levels. This information helps recruit representative users for the testing process and ensures that the feedback received is relevant and actionable.

- Create realistic test scenarios: Design realistic test scenarios that mimic real-world situations and tasks that users are likely to encounter. Ensure that the tasks are clear, specific, and representative of the typical user interactions with the software. Realistic test scenarios provide valuable insights into how users navigate the software and accomplish their goals.

- Recruit diverse user participants: Aim for a diverse pool of user participants during Usability Testing. Include users with varying levels of experience, backgrounds, and demographics. This diversity ensures that you capture a broad range of perspectives and uncover usability issues that may affect different user segments.

- Use a think-aloud protocol: Encourage participants to verbalize their thoughts and observations as they navigate through the software. This think-aloud protocol provides valuable insights into users’ decision-making processes, their struggles, and the reasoning behind their actions. It helps in understanding their cognitive processes and identifying areas where the software may cause confusion or frustration.

- Collect both qualitative and quantitative data: Gather both qualitative and quantitative data during Usability Testing. Qualitative data, such as observations, user feedback, and suggestions, provides rich insights into users’ experiences. Quantitative data, such as completion rates, task times, and satisfaction ratings, allows for objective measurement and comparison of usability metrics.

- Capture and analyze usability issues: Document and analyze usability issues encountered by participants during testing. Categorize the issues based on severity and prioritize them for improvement. Identify recurring issues and common pain points to address systemic usability problems. Use the insights gained to refine and optimize the software’s design and user interface.

- Iterate and test iteratively: Usability Testing is an iterative process. Incorporate user feedback and make iterative improvements to the software based on the findings from each round of testing. Conduct multiple rounds of testing throughout the development lifecycle to ensure that usability issues are identified and addressed early on.

- Involve a multidisciplinary team: Collaborate with a multidisciplinary team that includes usability experts, designers, developers, and stakeholders. This diverse team can provide different perspectives and contribute to the interpretation and analysis of the Usability Testing results. Their collective insights can help in making informed decisions for usability improvements.
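The quantitative side of the practices above can be summarized with a few lines of code. The sketch below assumes a hypothetical session log with one record per participant; the field names and the numbers are made up for illustration and do not come from a real study.

```python
from statistics import mean, median

# Hypothetical results from one round of testing a single task.
SESSIONS = [
    {"participant": "P1", "completed": True, "seconds": 42.0, "errors": 0, "satisfaction": 5},
    {"participant": "P2", "completed": True, "seconds": 58.0, "errors": 1, "satisfaction": 4},
    {"participant": "P3", "completed": False, "seconds": 120.0, "errors": 4, "satisfaction": 2},
    {"participant": "P4", "completed": True, "seconds": 50.0, "errors": 1, "satisfaction": 4},
]


def summarize(sessions):
    """Compute common usability metrics from per-participant session records."""
    completed = [s for s in sessions if s["completed"]]
    return {
        # Share of participants who finished the task.
        "completion_rate": len(completed) / len(sessions),
        # Time on task is usually reported for successful attempts only.
        "median_time_on_task": median(s["seconds"] for s in completed),
        "mean_errors": mean(s["errors"] for s in sessions),
        "mean_satisfaction": mean(s["satisfaction"] for s in sessions),
    }


if __name__ == "__main__":
    for metric, value in summarize(SESSIONS).items():
        print(f"{metric}: {value}")
```

Tracking the same metrics across rounds makes it easier to tell whether a design change actually helped, while the qualitative notes explain why.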

Performance Testing

Performance Testing is third in our list of types of automation testing under the broader category of Non-Functional Testing.

Performance Testing is a critical type of testing that assesses the speed, scalability, stability, and responsiveness of an application or system under various workload conditions. It aims to determine how well the software performs under expected and peak load scenarios, identify any bottlenecks or performance issues, and ensure that it meets the desired performance requirements.

Think of Performance Testing as evaluating the performance of a sports car. You want to understand how fast it can go, how well it handles corners, and how it performs under different driving conditions. Similarly, Performance Testing measures the software’s ability to handle user interactions, data processing, and concurrent users, giving insights into its speed, resource consumption, and overall efficiency.

During Performance Testing, realistic scenarios are simulated to replicate different usage patterns, varying numbers of concurrent users, and specific workload conditions. The software is subjected to a series of tests to measure its response time, throughput, resource utilization, and scalability. These tests can include Load Testing, Stress Testing, Endurance Testing, and Spike Testing, depending on the specific performance goals and requirements.

Performance Testing also assists in capacity planning and scalability assessment. By understanding the software’s performance characteristics and limitations, organizations can make informed decisions regarding hardware upgrades, infrastructure scaling, and system optimization to support future growth and user demands.

Benefits of Performance Testing

Performance Testing offers several benefits in the software development process:

- Ensuring optimal software performance: Performance Testing helps in evaluating the responsiveness, speed, scalability, and stability of the software application under various load conditions. By simulating real-world usage scenarios, it assesses how the software performs in terms of its processing speed, resource utilization, and response times.

- Identifying performance bottlenecks and weaknesses: Performance Testing helps in identifying bottlenecks, weaknesses, and areas of inefficiency within the software. It helps in uncovering issues such as slow response times, high resource consumption, memory leaks, or network latency problems. By pinpointing these performance-related problems, developers can optimize the software and make necessary improvements to enhance its overall performance and user experience.

- Scalability assessment: Performance Testing allows for evaluating the software’s scalability, which is its ability to handle increasing loads and user concurrency. By gradually increasing the load and measuring the software’s performance metrics, Performance Testing helps determine how the software performs when subjected to higher user volumes or increased transaction rates. This information is crucial for capacity planning and ensuring that the software can scale effectively as the user base grows.

- User experience optimization: Performance Testing plays a vital role in optimizing the user experience. It ensures that the software responds quickly, performs tasks efficiently, and provides a seamless user interaction. By identifying performance issues early on, such as slow page load times or unresponsive interfaces, Performance Testing enables developers to make necessary optimizations that enhance the overall user experience and satisfaction.

- Cost and resource optimization: Performance Testing helps in optimizing costs and resources associated with software infrastructure. By identifying performance issues and bottlenecks, organizations can make informed decisions about hardware requirements, system configurations, and infrastructure scalability. This allows for efficient resource allocation, reducing unnecessary expenditure on hardware or infrastructure upgrades and ensuring optimal utilization of existing resources.

Best practices for Performance Testing

Here are some best practices to consider when conducting Performance Testing:

- Define clear performance objectives: Clearly define the performance goals and objectives for your software. Identify the key performance indicators (KPIs) that are important for the application, such as response time, throughput, or resource utilization. These objectives act as benchmarks for evaluating the performance of the software and help guide the testing process.

- Plan realistic test scenarios: Design test scenarios that closely resemble real-world usage patterns and workload conditions. Consider the expected number of concurrent users, data volumes, and transaction rates the software will likely encounter. Realistic test scenarios help uncover performance bottlenecks and assess the software’s ability to handle the anticipated load.

- Use representative test data: Utilize test data that accurately represents the expected production data. Realistic data helps evaluate the performance impact of different data volumes, sizes, and complexities. It provides insights into how the software handles various data scenarios and ensures that Performance Testing reflects real usage patterns.

- Monitor system resources: Monitor the utilization of system resources, such as CPU, memory, network, and disk I/O, during Performance Testing. This allows for identifying resource bottlenecks that may impact the software’s performance. Tracking resource utilization helps in pinpointing potential scalability issues and optimizing system configurations.

- Leverage realistic test environments: Use test environments that closely resemble the production environment in terms of hardware, software, network configurations, and database setups. A representative test environment ensures that Performance Testing results accurately reflect the software’s behavior in the real-world production environment.

- Emphasize data consistency and repeatability: Ensure that performance tests are consistent and repeatable by properly managing test data and configurations. This allows for comparing test results and identifying performance trends over time. Consistency and repeatability provide a reliable basis for analyzing performance improvements or regressions.

- Monitor and analyze results: Continuously monitor and analyze Performance Testing results. Look for performance bottlenecks, trends, and anomalies that may require attention. Comprehensive analysis helps in understanding the root causes of performance issues and guides the optimization efforts.

- Collaborate with development and operations teams: Foster collaboration between development, testing, and operations teams during Performance Testing. Encourage open communication and knowledge sharing to ensure a shared understanding of performance requirements, test results, and potential optimizations. Collaboration promotes a collective effort to address performance issues and improve the overall performance of the software.
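The KPIs mentioned above (response time and throughput under concurrent users) can be measured with a very small load-test harness. This is only a sketch under stated assumptions: `handle_request` is a hypothetical stand-in that sleeps for a few milliseconds instead of calling a real system, and the user counts are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean, quantiles


def handle_request(payload):
    """Hypothetical operation under test; the sleep simulates processing time."""
    time.sleep(0.005)
    return payload * 2


def timed_call(payload):
    """Return the latency of a single request in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start


def run_load_test(concurrent_users=10, requests_per_user=5):
    """Send requests from a pool of worker threads and summarize the KPIs."""
    total_requests = concurrent_users * requests_per_user
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started
    return {
        "requests": total_requests,
        "mean_latency_s": mean(latencies),
        # quantiles(n=100) yields 99 cut points; index 94 is the 95th percentile.
        "p95_latency_s": quantiles(latencies, n=100)[94],
        "throughput_rps": total_requests / elapsed,
    }


if __name__ == "__main__":
    for metric, value in run_load_test().items():
        print(f"{metric}: {value}")
```

Reporting a high percentile alongside the mean is a common choice because a handful of slow requests can hide behind a healthy-looking average. For real systems a dedicated load-testing tool is usually a better fit than a hand-rolled script.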

Types Of Automation Testing Frameworks

In this section, we will be discussing different frameworks and automation testing tools that you can consider while performing the types of automation testing discussed above.

JUnit

JUnit provides annotations, assertions, and test runners to write and execute unit tests effectively. Developers can define test cases by creating methods annotated with @Test, which signifies that these methods contain individual tests. Within each test method, developers can use various assertions provided by JUnit, such as assertEquals() or assertTrue() to verify expected outcomes and conditions.

JUnit is primarily used for Unit Testing. It is a widely used testing framework for Java applications.

NUnit

NUnit offers a rich set of assertions, attributes, and test runners to write and execute tests. One of the key advantages of NUnit is its ability to support a wide range of test cases and assertions. It also provides a rich set of attributes, for example [Test], [SetUp], and [TearDown], which helps developers to define test methods and also to set up preconditions or clean-up tasks. This flexibility helps in comprehensive testing of various edge cases, ensuring that the code behaves as expected in different situations.

Like JUnit, NUnit is also primarily used for Unit Testing. It is a framework for .NET and Mono.

PHPUnit

PHPUnit provides assertions, test runners, and mock object support for testing PHP code effectively. It includes features like data providers, which allow developers to run a single test case with multiple data sets, thus reducing code duplication and increasing test coverage.

PHPUnit is a Unit Testing framework for PHP.

pytest

pytest provides easy-to-use syntax, fixtures, and plugins for writing comprehensive tests. It emphasizes simplicity, readability, and scalability, helping developers create tests quickly and effectively.

pytest is a testing framework for Python that supports Functional Testing like Unit Testing and Integration Testing.
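A short sketch of what the fixtures and plain-assert syntax look like in practice is shown here. The `apply_discount` function and the prices are hypothetical and exist only to give the tests something to exercise; `pytest.fixture`, `pytest.mark.parametrize`, and `pytest.raises` are part of pytest itself.

```python
import pytest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


@pytest.fixture
def base_price():
    # Fixtures supply shared setup; pytest injects them by argument name.
    return 200.0


@pytest.mark.parametrize(
    "percent, expected",
    [(0, 200.0), (25, 150.0), (100, 0.0)],
)
def test_discount_is_applied(base_price, percent, expected):
    # One test body runs once per (percent, expected) pair.
    assert apply_discount(base_price, percent) == expected


def test_invalid_percent_is_rejected(base_price):
    # pytest.raises passes only if the expected exception is raised.
    with pytest.raises(ValueError):
        apply_discount(base_price, 150)
```

Saved as a file whose name starts with `test_`, this can be run with the `pytest` command, which discovers the `test_` functions automatically.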

Mocha

Mocha is a feature-rich JavaScript testing framework for Node.js and browsers. It offers a flexible and expressive syntax, support for asynchronous testing, and various test runners. It provides a simple and intuitive interface for structuring and running tests, making it a favored choice among developers for test-driven development (TDD) and behavior-driven development (BDD).

Mocha is primarily used for Unit Testing and Integration Testing.

Jasmine

Jasmine is a behavior-driven development (BDD) framework for testing JavaScript code. It provides readable syntax, assertions, and spies for intuitively writing tests. It provides a rich set of built-in matchers that allow you to compare values, check for exceptions, or validate the state of objects and variables. These matchers make it convenient to write clear and concise assertions within your test cases. Jasmine also supports asynchronous testing, which is essential for handling scenarios where code execution involves asynchronous operations like AJAX requests or timeouts.

Jasmine is primarily used for Unit Testing.
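Asynchronous testing, which both Mocha and Jasmine support, means the test waits for an asynchronous operation to finish before asserting on its result. The sketch below shows the same idea in Python with the built-in unittest.IsolatedAsyncioTestCase; it is not Mocha or Jasmine code, and fetch_greeting is a hypothetical stand-in for a network call.

```python
import asyncio
import unittest


async def fetch_greeting(name, delay=0.01):
    """Hypothetical async operation standing in for a network call."""
    await asyncio.sleep(delay)
    return f"Hello, {name}!"


class FetchGreetingTest(unittest.IsolatedAsyncioTestCase):
    async def test_returns_greeting(self):
        # The test awaits the operation, then asserts on its result.
        self.assertEqual(await fetch_greeting("Ada"), "Hello, Ada!")

    async def test_slow_call_times_out(self):
        # A call slower than the allowed timeout should raise a timeout error.
        with self.assertRaises(asyncio.TimeoutError):
            await asyncio.wait_for(fetch_greeting("Ada", delay=1), timeout=0.01)


def run_async_suite():
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(FetchGreetingTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

The second test covers the timeout scenario: the test fails unless the slow call is cut off within the allowed time.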

XCTest

XCTest offers a wide range of testing capabilities, including asynchronous testing, performance metrics, and UI testing for iOS and macOS applications.

XCTest is the Unit Testing framework for Swift and Objective-C in the Apple ecosystem.

Ruby

Ruby is a dynamic programming language that follows the principles of object-oriented programming (OOP). Another notable feature of Ruby is its support for metaprogramming, which helps developers write code that can modify itself or dynamically generate new code at runtime.

Ruby has several Unit Testing frameworks, including RSpec, Test::Unit, and MiniTest. RSpec follows a behavior-driven development approach, while Test::Unit and MiniTest follow a traditional Unit Testing approach.

Understanding how Ruby’s OOP principles and dynamic features support metaprogramming is useful when preparing for OOPs interview questions. Familiarity with Ruby’s Unit Testing frameworks, such as RSpec, Test::Unit, and MiniTest, will also help you address testing scenarios in interviews.

Postman

Postman is a popular API testing tool that lets you send requests to APIs and verify the responses. It provides a user-friendly interface for creating and managing test cases, making it suitable for testing API integrations.

Postman is used for Integration Testing, Performance Testing, Security Testing and Regression Testing.
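The request-and-verify cycle that API testing tools automate can be sketched with the Python standard library alone. This is not Postman's own scripting interface; the StubApiHandler server and its /health endpoint are hypothetical stand-ins so that the example runs without any external service.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubApiHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in API: GET /health returns a small JSON document."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def check_health_endpoint():
    server = HTTPServer(("127.0.0.1", 0), StubApiHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # An empty ProxyHandler keeps the request on the local machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        url = f"http://127.0.0.1:{server.server_port}/health"
        with opener.open(url, timeout=5) as response:
            status = response.status
            content_type = response.headers.get("Content-Type")
            payload = json.loads(response.read().decode("utf-8"))
    finally:
        server.shutdown()
        server.server_close()
    # Verify the status code, a header, and the response body.
    assert status == 200
    assert content_type == "application/json"
    assert payload == {"status": "ok"}
    return payload
```

In a real project the URL would point at the service under test, and the three assertions would be replaced by checks that match its documented contract.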

SoapUI

SoapUI is another widely used tool for testing web services and APIs. It supports both RESTful and SOAP-based services and provides features for creating test cases, executing them, and validating responses. SoapUI allows for complex test scenarios and can handle different types of integrations.

SoapUI is primarily used for Security Testing, Compliance Testing and Regression Testing.

Apache JMeter

Apache JMeter is a versatile open-source tool. It can simulate heavy loads, concurrent users, and various protocols, making it suitable for testing integrations under different scenarios and stress conditions.

Apache JMeter is primarily used for load testing, but it can also be utilized for Integration Testing.

Selenium

Selenium is a well-known web application testing framework. It can easily automate browser interactions and perform end-to-end testing of web applications. Selenium can also be combined with other tools and frameworks to test integrations with external systems or services. One of the key features of Selenium is its ability to simulate user actions in web browsers. It can interact with web elements, fill out forms, click buttons, navigate through pages, and extract data for validation. This helps in automating repetitive tasks and saves significant time during the testing process.

It can be utilized for Integration Testing, Regression Testing and Smoke Testing.

Citrus Framework

Citrus Framework provides an easy-to-use Domain-Specific Language for creating integration test cases and offers features for simulating messaging endpoints and validating message flows.

It is specifically designed for Integration Testing of messaging systems, including JMS, HTTP, SOAP, and more.

Apache Kafka

Apache Kafka is a distributed streaming platform that can be used for Integration Testing of event-driven architectures. It provides features such as topic partitioning, event ordering, and fault tolerance, making it suitable for testing real-time data integrations and message-driven systems.

It is primarily used for Integration Testing.

Ranorex

Ranorex is a commercial test automation tool that supports Regression Testing for desktop, web, and mobile applications. It offers a user-friendly interface, record-and-playback functionality, and a wide range of built-in automation features. Ranorex allows testers to create and execute automated regression tests across multiple platforms and technologies.

It is primarily used for Regression Testing.

TestComplete

TestComplete, a comprehensive automated testing tool, offers a codeless automation testing approach. It helps testers create automated tests using a visual interface. TestComplete provides features such as object recognition, data-driven testing, and robust reporting capabilities.

It supports Regression Testing for desktop, web, and mobile applications.

HP UFT (Unified Functional Testing)

HP UFT, earlier known as HP QuickTest Professional (QTP), offers a rich set of features, including keyword-driven testing, object identification, and integration with other testing tools.

It is a widely used commercial Regression Testing tool. It supports various types of testing, which include functional, regression, and API testing.

TestNG

TestNG is a testing framework for Java that offers robust support for automated testing. It provides annotations, assertions, and reporting capabilities that can be leveraged to create and execute smoke tests efficiently.

It is primarily used for Unit Testing and Integration Testing.

Jenkins

Jenkins is an open-source automation server that can be utilized for continuous integration and delivery (CI/CD) pipelines. It provides a web-based interface that helps developers create, schedule, and manage automated jobs and workflows. One of the key benefits of Jenkins is its flexibility and extensibility. It can execute smoke tests as part of the build process, triggering the tests automatically whenever a new build is created or significant changes are made.

It is used to run Unit Testing, Integration Testing, Regression Testing, Performance Testing and Security Testing as part of a pipeline.

Robot Framework

Robot Framework is a flexible, keyword-driven test automation framework. It provides an easy-to-understand syntax and lets testers create concise and readable tests for quickly evaluating essential functionalities.

It supports various test types, including Smoke Testing, Acceptance Testing and Regression Testing.

Cucumber

Cucumber is a popular tool that facilitates behavior-driven development (BDD). It uses a simple and readable syntax that allows stakeholders, testers, and developers to collaborate effectively. Cucumber enables the creation of feature files that describe the desired software behavior and runs those specifications as automated acceptance tests.

It is primarily used for Acceptance Testing.

FitNesse

FitNesse is a wiki-based Acceptance Testing tool that promotes collaboration between stakeholders, testers, and developers. It enables the creation of executable acceptance tests using a wiki-style syntax. FitNesse allows for the easy creation and maintenance of test cases and provides real-time feedback on test results.

It is primarily used for Acceptance Testing.

OWASP ZAP (Zed Attack Proxy)

ZAP is a popular open-source Security Testing tool developed by the Open Web Application Security Project (OWASP). It helps identify security vulnerabilities in web applications by actively scanning for common issues like cross-site scripting (XSS), SQL injection, and insecure direct object references. ZAP also provides an easy-to-use user interface and features, including automated scanning and customizable reporting.

It is primarily used for Security Testing.

Burp Suite

Burp Suite is a comprehensive Security Testing tool widely used for web application Security Testing. It offers features including web vulnerability scanning, manual Security Testing, and web application proxy capabilities. It also provides advanced options for analyzing and manipulating application traffic.

It is primarily used for Security Testing.

Nessus

Nessus is a widely used vulnerability scanner that helps identify security vulnerabilities across networks, systems, and web applications. It performs automated scans and provides detailed reports on identified vulnerabilities, misconfigurations, and potential security risks.

It is primarily used for Security Testing.

Metasploit

Metasploit is a powerful framework for penetration testing and vulnerability assessment. Ethical hackers and security professionals widely use Metasploit to simulate real-world attacks, test security defenses, and validate the effectiveness of security controls.

It is primarily used for Security Testing.

Nikto

Nikto is an open-source web server scanner that specializes in identifying common web server vulnerabilities and misconfigurations. It scans web servers and applications for known security issues, such as outdated software versions, weak server configurations, and insecure file permissions.

It is primarily used for Security Testing.

Wireshark

Wireshark is a widely used network protocol analyzer that helps in network Security Testing. It captures and analyzes network traffic, allowing for the detection of suspicious or malicious activity.

It is primarily used for Security Testing.

LoadRunner

LoadRunner helps in simulating and measuring the performance of web and mobile applications under various load conditions. LoadRunner supports multiple protocols, offering advanced scripting capabilities and comprehensive reporting and analysis features.

It is primarily used for Performance Testing.

Gatling

Gatling is designed for high-performance and scalable testing. It allows the creation of realistic performance scenarios using a user-friendly Domain-Specific Language. Gatling offers real-time reporting, supports HTTP and WebSocket protocols, and provides an intuitive and developer-friendly interface.

It is primarily used for Performance Testing.

Apache Bench (ab)

Apache Bench is a command-line tool that comes bundled with the Apache HTTP Server. It is a simple and lightweight tool. Apache Bench allows us to perform load testing and measure web server response times, throughput, and concurrency.

It is primarily used for basic Performance Testing of web applications.
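To make the measurements above concrete, here is a rough sketch of what a basic load test records: a fixed number of requests sent at a chosen concurrency level, with latency and throughput computed from the timings. It is written with the Python standard library and is not Apache Bench itself; the local OkHandler server and the run_load_test function are hypothetical names used so that the example runs without an external target.

```python
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class OkHandler(BaseHTTPRequestHandler):
    """Hypothetical local target that answers every GET with 'ok'."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def timed_get(opener, url):
    # Send one request and return its status code and duration in seconds.
    start = time.perf_counter()
    with opener.open(url, timeout=5) as response:
        response.read()
        status = response.status
    return status, time.perf_counter() - start


def run_load_test(total_requests=20, concurrency=4):
    server = ThreadingHTTPServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    url = f"http://127.0.0.1:{server.server_port}/"
    try:
        started = time.perf_counter()
        # The pool size is the number of requests in flight at once.
        with ThreadPoolExecutor(max_workers=concurrency) as pool:
            results = list(
                pool.map(lambda _: timed_get(opener, url), range(total_requests))
            )
        elapsed = time.perf_counter() - started
    finally:
        server.shutdown()
        server.server_close()
    latencies = [seconds for _, seconds in results]
    return {
        "requests": len(results),
        "failures": sum(1 for status, _ in results if status != 200),
        "mean_latency_s": sum(latencies) / len(latencies),
        "max_latency_s": max(latencies),
        "requests_per_second": len(results) / elapsed,
    }
```

Calling run_load_test(total_requests=100, concurrency=10) returns a small report dictionary. The numbers depend entirely on the machine and are only meaningful when compared across runs in the same environment.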

NeoLoad

NeoLoad provides a comprehensive set of features for load testing, stress testing, and endurance testing. It supports various protocols and technologies, including web, mobile, and cloud-based applications. NeoLoad offers user-friendly test design, advanced scripting capabilities, and detailed reporting and analysis.

It is primarily used for Performance Testing.

BlazeMeter

BlazeMeter is a cloud-based testing platform that allows running scalable performance tests from different geographic locations. It supports a wide range of protocols and integrates with popular testing tools like JMeter and Gatling. BlazeMeter offers real-time monitoring, advanced analytics, and collaboration features.

It is primarily used for Performance Testing.

UserTesting

UserTesting allows one to conduct remote usability tests with real users. It provides access to a large pool of participants who can perform tasks and provide feedback on a software product or website. UserTesting offers features like video recordings, screen sharing, and the ability to create custom test scenarios.

It is a popular Usability Testing platform.

Morae

Morae by TechSmith offers a range of features for capturing and analyzing user interactions. It allows teams to record and observe user sessions, collect user feedback, and create detailed usability reports. Morae includes features like screen recording, eye tracking integration, and task analysis.

It is a comprehensive Usability Testing tool.

Optimal Workshop

Optimal Workshop is a suite of tools designed for different aspects of Usability Testing. It includes tools like Treejack for testing website information architecture, Chalkmark for testing the effectiveness of UI elements, and OptimalSort for conducting card-sorting exercises. Optimal Workshop offers user-friendly interfaces, data visualization capabilities, and collaboration features.

It is primarily used for Usability Testing.

UsabilityHub

UsabilityHub is a user research platform that provides several Usability Testing tools. It includes tools like the Five Second Test for quick impression testing, the Click Test for evaluating clickability and visual hierarchy, and the Navigation Test for assessing the effectiveness of website navigation. UsabilityHub offers a simple and intuitive interface and makes it possible to gather user feedback efficiently.

It is primarily used for Usability Testing.

Lookback

Lookback supports remote, moderated Usability Testing sessions with participants. It provides video and audio recording capabilities, screen sharing, and live chat features. Lookback offers an easy-to-use interface and supports both mobile and desktop Usability Testing.

It is primarily used for Usability Testing.

Validately

Validately offers remote moderated and unmoderated testing options. It allows us to recruit participants, create tasks, and collect user feedback. Validately offers video and audio recording capabilities, screen sharing, and survey features to gather qualitative and quantitative data.

It is primarily used for Usability Testing.

The choice of tool depends on various factors, including the technology stack, integration requirements, and specific needs of one’s project. It’s always recommended to evaluate multiple tools and select the one that best fits one’s testing objectives and the context of the application.

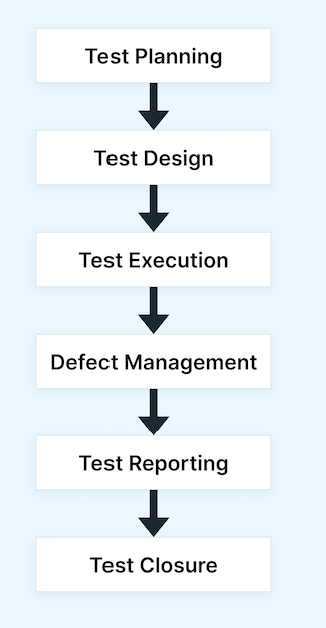

Different Phases of Testing